Cybersecurity, AI, and Machine Learning: The Connection to GDPR

With businesses locked in a never-ending race against cybercriminals and continually evolving threats, the EU General Data Protection Regulation (GDPR), which takes effect on May 25, 2018, further reinforces the need to strengthen cybersecurity. The GDPR helps guide companies toward secure approaches to managing customer data, strengthening the business case for prioritizing data protection throughout an organization. Under the GDPR, organizations are accountable for protecting “personal data against accidental or unlawful destruction or accidental loss and to prevent any unlawful forms of processing, in particular any unauthorised disclosure, dissemination or access, or alteration of personal data.”

However, while the GDPR will require businesses to comply with rules that will ultimately protect customer data, concerns have been raised regarding how it will tackle automation in data analytics as the market for artificial intelligence (AI) continues to grow. Some aspects of the GDPR (which provides definitions of "personal data," "consent," "processing," and "profiling") have left experts wondering if it will get in the way of AI innovation. The regulation specifies conditions for automated individual decision-making, including profiling. Aspects of concern to businesses include the following:

- Establishing explicit and informed consent from the data subject or user

- Ability to document and present to auditors details on the use of profiling

- Ability of a user to withdraw consent from profiling algorithms

- Identifying potential algorithmic biases

- Requiring human judgment in profiling decisions

The Article 29 Working Party, an independent EU advisory body made up of representatives from the data protection authority of each member state, provided guidelines on automated individual decision-making and profiling. The guidelines, in line with the GDPR, prohibit fully automated individual decision-making, including profiling, that has a legal or similarly significant effect, although there are exceptions to the rule. Where an exception applies, measures should be implemented to protect the data subject’s rights, freedoms and legitimate interests. One basis for an exception is user consent, which has been much discussed in relation to AI. According to the guidelines, companies that depend on consent as the basis for profiling will need to show that users understand exactly what they are consenting to. Users should have sufficient relevant information about the use and consequences of the data processing to ensure that the consent they provide represents an informed choice.

If these rules are strictly applied in the context of AI, the tech industry might need to retool its products and services to adapt to the GDPR era. For instance, it has been posited that cloud-based voice assistants may not be able to collect or process voice data unless explicit consent is given by the device owner and, beyond that, by any other user (for example, the people who live in the same household as the device owner) who gives voice commands to or is near the digital assistant. This aspect of the GDPR poses challenges to how cloud-based voice assistants perform their tasks.

Cybersecurity and AI: Will GDPR slow down innovation?

A similar scenario may apply for cybersecurity companies when it comes to collecting and securing customer data. The cybersecurity industry is increasingly relying on AI and its subset, machine learning, to defend against threats. Under GDPR, cybersecurity companies are mandated to obtain explicit consent and explain to customers how their data will be processed by security engines that use AI technology.

As a result, cybersecurity companies need to implement additional measures around collecting and processing personal data to meet compliance requirements. But how extensive and complex will these changes be? Will compliance be a tedious chore that slows down AI innovation, as others surmise?

Fortunately, some leading security solutions have been developed that can seamlessly help businesses prioritize user privacy and protection essential to GDPR compliance while still employing AI technology. Our own efforts to prepare for GDPR implementation also included ensuring that Trend Micro solutions can be used as a part of the compliance journey. Trend Micro Cloud App Security™ and Trend Micro ScanMail™ Suite for Microsoft® Exchange™ email security solutions leverage AI and machine learning (in addition to other techniques) to detect and block business email compromise (BEC) threats. BEC, which has become a destructive threat to businesses over the years, is becoming harder and harder to detect using traditional approaches that don’t use advanced technology like AI and machine learning.

Trend Micro’s BEC detection techniques use two types of AI: an expert rule system and machine learning. The expert rule system mimics the knowledge of a security researcher by extracting characteristics which could indicate a forgery attempt. It looks at both attack characteristics like sender information, routing, and others, as well as characteristics of the email intention, like a request for action, financial implication, or sense of urgency. The “high-profile user” function applies additional scrutiny and correlation with spoofed senders (often executives) and their real email addresses at the target organization. Next, a machine learning model decides the weighting of each characteristic and compares it to millions of other good and bad emails to precisely identify BEC scams. The machine learning model is constantly learning to further improve its results over time.
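The two-stage idea described above (expert rules that extract characteristics, followed by a model that weights them) can be illustrated with a minimal Python sketch. This is not Trend Micro's implementation: the word lists, the feature names, the weights, and the bias are all hypothetical values chosen for illustration, whereas a real model would learn its weights from a large set of labeled emails.

```python
import math
import re

# Hypothetical word lists and domains, for illustration only.
FREE_MAIL_DOMAINS = {"gmail.com", "yahoo.com", "outlook.com"}
URGENCY_WORDS = {"urgent", "immediately", "asap", "today"}
FINANCE_WORDS = {"wire", "transfer", "payment", "invoice", "bank"}
ACTION_WORDS = {"send", "pay", "process", "confirm"}

# Hypothetical weights; a trained model would learn these from data.
WEIGHTS = {
    "reply_to_mismatch": 1.5,
    "free_mail_sender": 1.0,
    "urgency": 1.0,
    "financial": 1.2,
    "action_request": 0.8,
}
BIAS = -3.0


def extract_characteristics(email: dict) -> dict:
    """Expert-rule stage: turn an email into 0/1 characteristics."""
    words = set(re.findall(r"[a-z]+", email["body"].lower()))
    sender = email["from"].lower()
    reply_to = email.get("reply_to", email["from"]).lower()
    sender_domain = sender.rsplit("@", 1)[-1]
    return {
        # Attack characteristics (sender information)
        "reply_to_mismatch": float(reply_to != sender),
        "free_mail_sender": float(sender_domain in FREE_MAIL_DOMAINS),
        # Intention characteristics (urgency, money, request for action)
        "urgency": float(bool(words & URGENCY_WORDS)),
        "financial": float(bool(words & FINANCE_WORDS)),
        "action_request": float(bool(words & ACTION_WORDS)),
    }


def bec_score(email: dict) -> float:
    """Weighting stage: combine characteristics into a 0..1 score."""
    features = extract_characteristics(email)
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))  # logistic function


suspicious = {
    "from": "ceo@gmail.com",
    "reply_to": "other@gmail.com",
    "body": "Please wire the payment immediately and confirm.",
}
benign = {"from": "alice@example.com", "body": "Lunch at noon tomorrow?"}
print(round(bec_score(suspicious), 3), round(bec_score(benign), 3))
```

A logistic combination is used here only because it is the simplest way to show characteristics being weighted; the article does not state which model type the products use.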

The Trend Micro Writing Style DNA is a new artificial intelligence feature that formulates the “DNA” of a user’s writing style based on past written emails and compares it to suspected forgeries. It is designed for “high-profile users” such as C-level executives who are prone to being spoofed. It supplements existing BEC protection methods which use expert rules and machine learning to analyze email behavior (for example, using a free email service provider), email authorship (for example, checking an email sender’s writing style), and email intention (for example, payment or urgency).
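The general idea of building a style profile from past emails and comparing it with a suspect message can be sketched as follows. The article does not say which features Writing Style DNA uses, so the function-word frequencies and cosine similarity below are stand-ins, and every name in the block is hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical feature set: frequencies of a few common function words.
FUNCTION_WORDS = (
    "the", "a", "of", "and", "to", "in",
    "i", "you", "please", "thanks", "regards", "kindly",
)


def style_profile(texts):
    """Build a frequency vector over FUNCTION_WORDS from a list of emails."""
    counts = Counter()
    total = 0
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        total += len(words)
        counts.update(w for w in words if w in FUNCTION_WORDS)
    return [counts[w] / total if total else 0.0 for w in FUNCTION_WORDS]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


past_emails = [
    "Thanks for the update. Please send the report to the team.",
    "Please review the plan and send comments. Thanks.",
]
known = style_profile(past_emails)

consistent = style_profile(["Please send the file to the team. Thanks."])
unusual = style_profile(["Kindly wire funds in a hurry. Regards"])

# A low similarity would flag the message for closer inspection.
print(cosine_similarity(known, consistent) > cosine_similarity(known, unusual))
```

In practice a similarity score like this would be one signal among several, alongside the email behavior and email intention checks mentioned above.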

AI-powered solutions: Steps toward GDPR compliance

To comply with GDPR, relevant Trend Micro products will obtain consent from the user through an end-user license agreement (EULA). We will provide disclosure that is easy for data subjects to understand, covering what kind of data our products and services collect, how it is processed and for what purpose, and how the customer can opt out of the data collection. Customers will also be given the option to inquire about and obtain a copy of their data, and to disable data collection features.

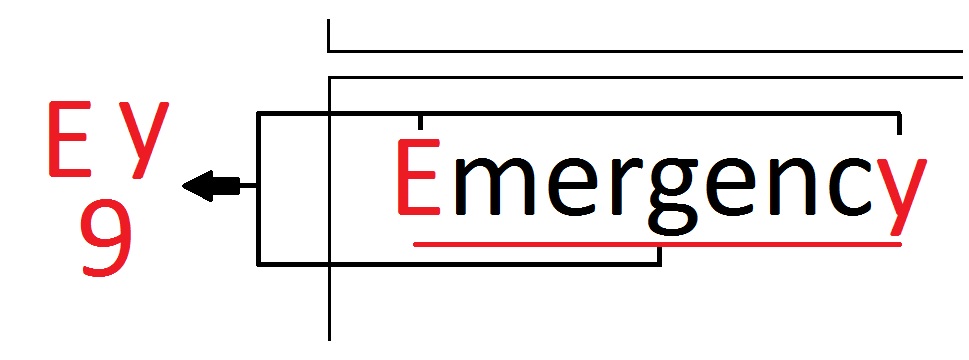

To avoid issues with customer data privacy, the product/service transforms the original raw email content into human-incomprehensible properties before transmitting it to the backend. The original email content is processed inside the customer’s email system, with only the generated properties transmitted externally. The training model’s built-in expert rules will carefully assess the characters within an email header or body and keep only certain elements, for instance, the first and last characters of a word, and its length. These properties will be the basis for assessing whether the email is a forgery or not.

Figure 1. Our AI-powered engine converts email content into human-incomprehensible properties before transmitting it externally for assessment. For example, certain elements of the word “Emergency” such as the first (E) and last (y) characters, and its length (9), among other factors, will be part of the properties that will be processed outside the customer’s email system.
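The transformation described for the word “Emergency” can be sketched in a few lines of Python. This covers only the three elements named in the text (first character, last character, and length); the "other factors" are not specified in the article and are left out. The function names and the simple letters-only tokenization are assumptions made for illustration.

```python
import re
from typing import List, Tuple


def word_properties(word: str) -> Tuple[str, str, int]:
    """Reduce a word to (first character, last character, length)."""
    return (word[0], word[-1], len(word))


def email_to_properties(text: str) -> List[Tuple[str, str, int]]:
    """Convert email text into per-word properties.

    Assumption: words are runs of ASCII letters; a real engine would
    need a tokenizer that handles other scripts, digits, and markup.
    """
    return [word_properties(w) for w in re.findall(r"[A-Za-z]+", text)]


print(word_properties("Emergency"))  # ('E', 'y', 9)
print(email_to_properties("Emergency wire now"))
```

Because many different words share the same first character, last character, and length, the original text cannot be read back from these properties alone, which is the point of performing the conversion before anything leaves the customer's email system.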


The engine also has expert rules (for analyzing email service provider, forged sender, routing behavior, etc.) that are designed to discern which email needs to be processed. It is important to note that the connection and communication between product and service are encrypted to ensure that the data being transferred remains private and secured.

The engine used in our products and services works under human supervision, which is needed in order to tune the training model performance, as well as to handle false alarm cases. This ensures that human judgment is part of the equation whenever AI and machine learning-powered solutions are employed.

A last word on AI and GDPR compliance

The regulations that will be enforced by the GDPR should not be seen as roadblocks to the effective development and deployment of new security solutions and technologies for customers. The emergence of solutions like Cloud App Security and ScanMail Suite for Microsoft Exchange shows that cybersecurity companies, and likewise industries in general, can be flexible enough to adhere to the GDPR without deferring the use of advanced technologies like AI and machine learning. To succeed in this, a well-thought-out design is needed, one that reflects an understanding of what the GDPR is for: championing the protection of customer data and, in turn, the privacy and safety of individuals. Cybersecurity companies should see compliance in a positive light, since the goal of cybersecurity is to look out for the security and safety of customers in the digital age, a goal that the GDPR will further strengthen.
