AI Assistants in the Future: Security Concerns and Risk Management

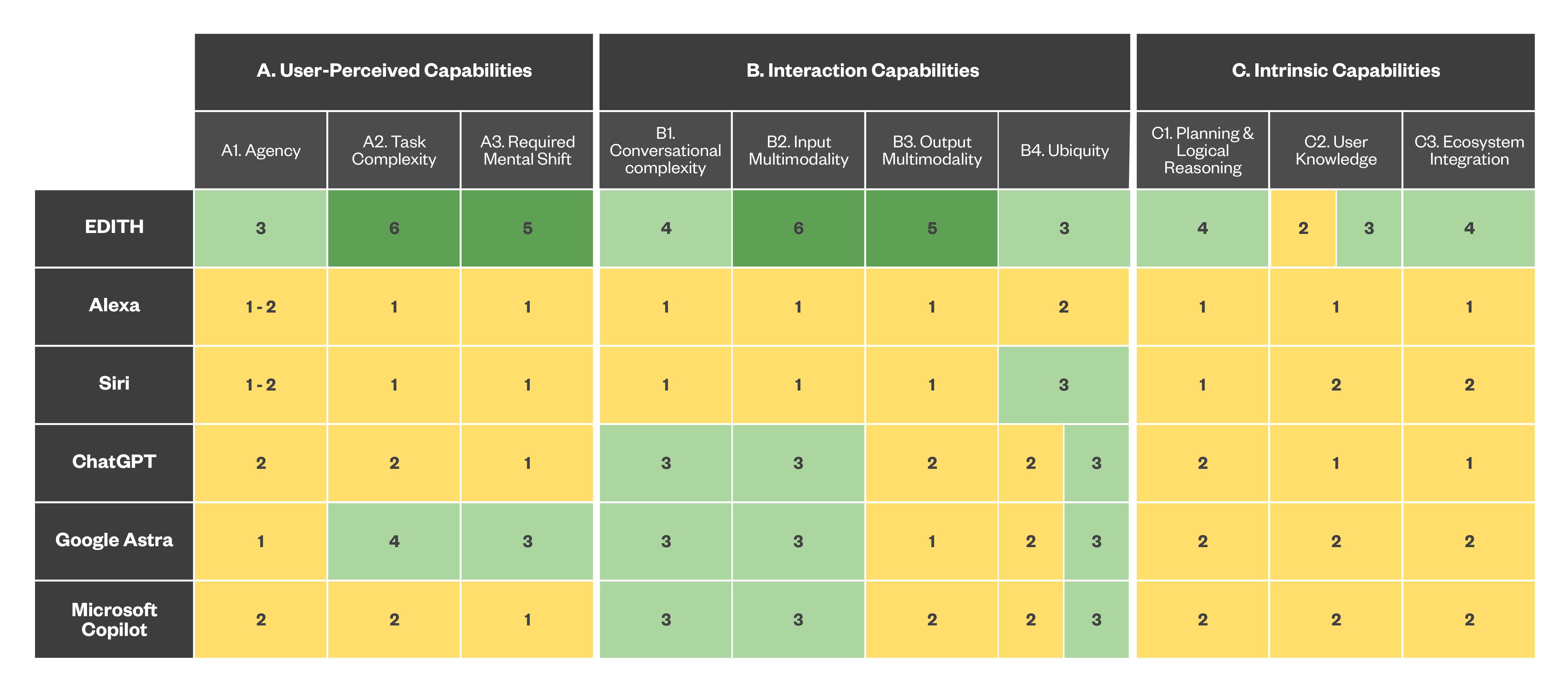

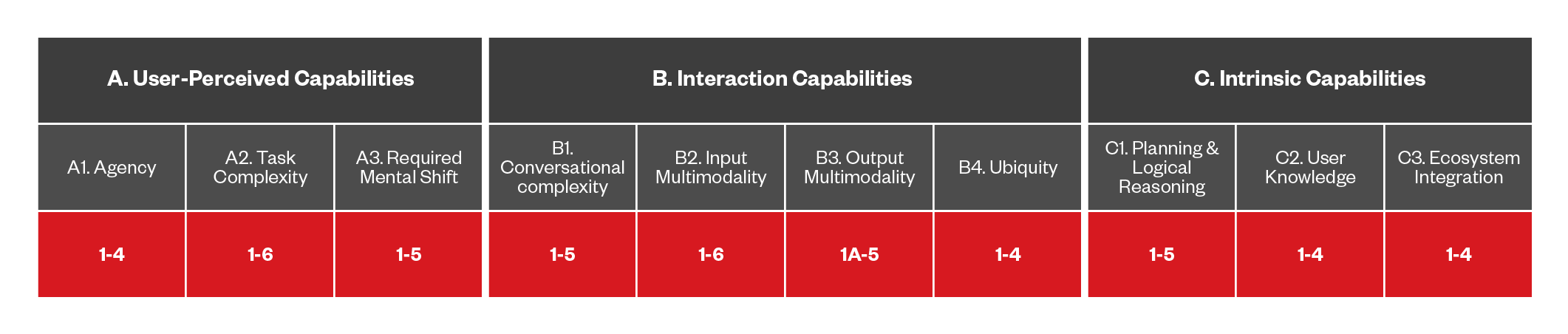

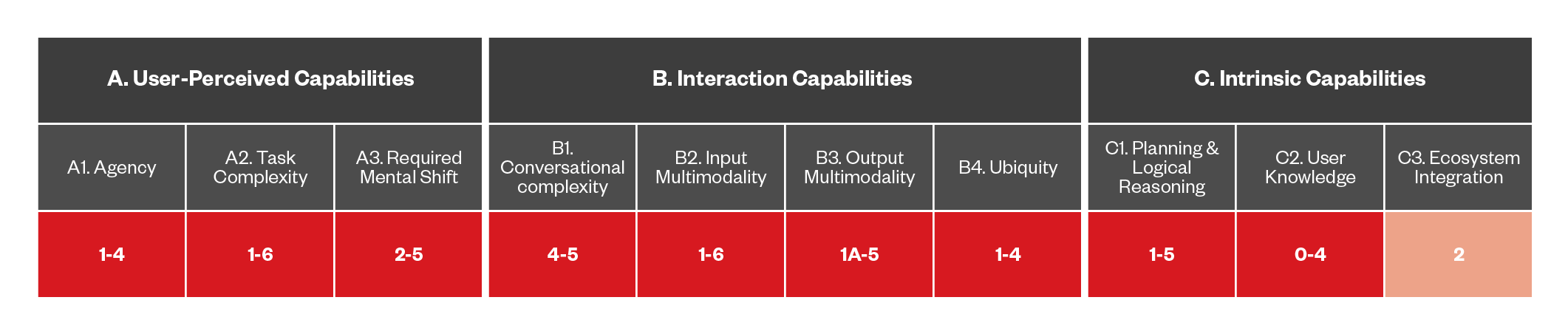

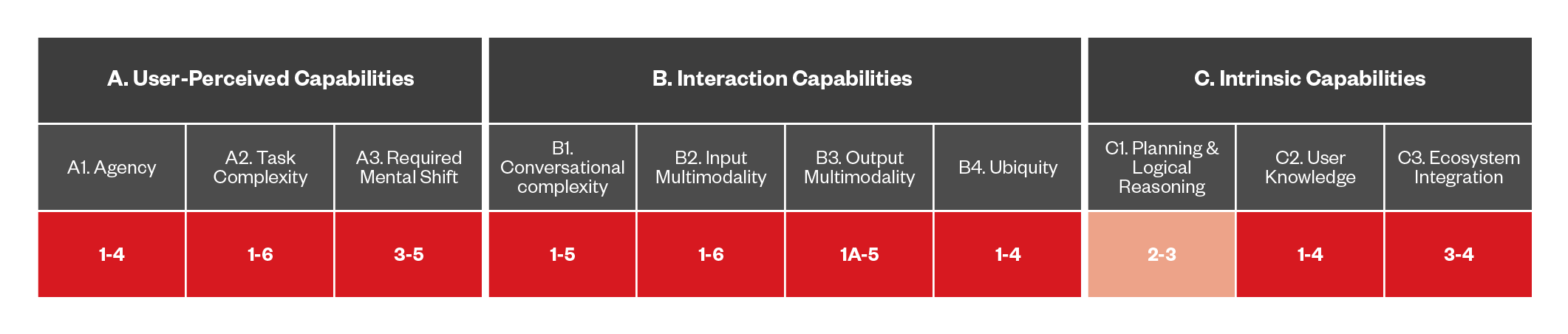

We have identified and isolated the distinctive capabilities for every digital assistant, namely the features that separate them from similar applications, and those that will be key in their future evolution. For each of the identified capabilities, we have come up with levels of advancements that either map the current and past evolutions of these applications or try to draw a roadmap for their short to medium-term prospects. Our goal is to understand where digital assistants can realistically go from their current iterations, and what kind of threats can be expected to target them.

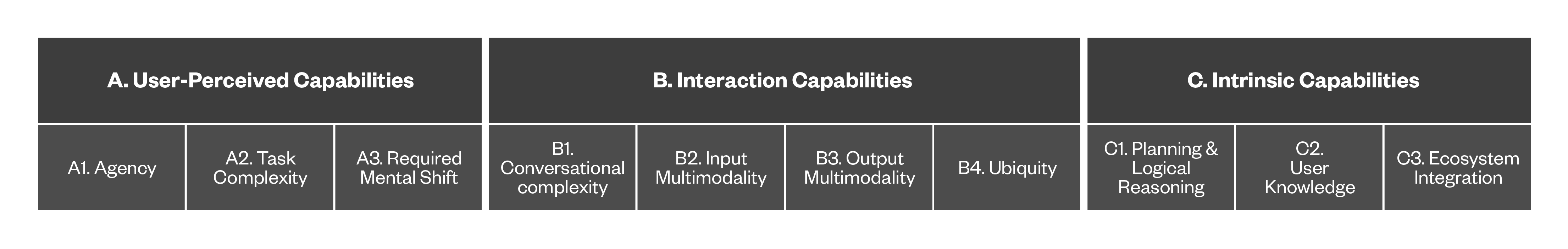

Such capabilities cover different aspects of DAs, and not all of them are directly relevant to the users who interact with them. For this reason, we organized them into three main categories:

- User-perceived capabilities. These are closely related to how the DA's abilities are perceived by the end-user; among these are the level of agency and the complexity of the tasks that the assistant can undertake on behalf of the individual.

- Interaction capabilities. These pertain to the way the user interacts with the DA, such as the type of user interface, the level of multi-modality supported, and most importantly, the level of its conversational capabilities.

- Intrinsic capabilities. These are intrinsic to the inner workings of the DA and not directly noticeable by the user, such as the level of knowledge the DA has of the individual or the type of integration it has with the wider ecosystem.

Figure 1 provides a detailed description of each capability within its respective category.

Figure 1. Map of categories and their corresponding capabilities

This set of distinct capabilities serves several purposes:

- It allows us to evaluate how DAs evolve over time according to their key features.

- It offers a method of comparing different DAs from different points of view, using a coherent framework.

- It acts as a virtual bill of materials, telling us what is needed for a particular DA-related threat to become applicable in the near future (in a way, giving us a timeline of when to expect specific attacks).

Digital assistant capabilities

A. User-perceived capabilities

The capabilities in this section are closely related to how the DA’s abilities are perceived by the user. As these capabilities progress, the assistant becomes more autonomous and effective. However, it also requires a higher level of trust and psychological engagement from the user.

A1. Agency

Agency reflects the digital assistant's ability to make decisions and act on behalf of the user. Without agency, the assistant is limited to providing information upon request (0). At the lowest level of agency, the DA responds to a command with a corresponding action (1), such as setting an alarm. It then progresses to executing a sequence of tasks from a single request (2), like a "good night" routine that includes lowering shades and turning off lights. As agency increases, the digital assistant can also suggest actions to achieve specific goals, pending user approval (3), and can eventually make decisions independently and act without supervision (4), fully managing tasks in the best interest of the individual.

A2. Task complexity

Task complexity measures the DA’s ability to perform complex tasks, potentially replacing other devices like mobile phones and PCs. At the most basic level, the assistant provides scripted responses within a fixed domain (1), such as listing booking websites when asked to reserve a room. As it evolves, the DA can offer domain-specific instructions (2), explaining how to book a hotel, and even perform some of these actions directly (3), such as querying hotel availability.

With further advancements, the assistant becomes able to match the functionality of a single app, assisting with all aspects of a task like hotel booking (4). At a higher level, it integrates the functionalities of multiple related apps (5), acting, for example, as a comprehensive travel planner that manages a variety of tasks, including hotel bookings and restaurant reservations. Ultimately, the DA could fully replace devices like smartphones, handling complex tasks across several unrelated domains (6).

A3. Required mental shift

This is not a capability of the digital assistant, but rather a factor that reflects the level of trust and psychological engagement the user needs in order to interact with the DA's increasingly sophisticated capabilities, and to accept and deal with its possible shortcomings, such as hallucinations, which can be mitigated but might not be eliminated. Initially, the user is simply open to listening to the assistant's responses (1). As the DA’s capabilities progress, however, the user begins to follow its suggestions without verifying them (2). As the capabilities continue to advance, individuals become willing to let the DA perform basic tasks on their behalf (3), handle financial transactions (4), and, eventually, make decisions with legal implications (5).

B. Interaction capabilities

Interaction capabilities pertain to the way the user interacts with the DA. As these capabilities develop, the interactions with the DA become easier and more natural, profoundly influencing the overall user experience.

B1. Conversational complexity

Conversational complexity denotes the DA’s ability to engage in conversations with the individual. When this capability is lacking, no conversation is possible (0). Then, as it develops conversational skills, the assistant becomes able to respond to user requests, beginning with simple question-and-answer interactions (1). It can evolve to remember and use context within conversations (2), before finally engaging in natural conversations with emotional depth (3). As this capability progresses further, the DA can eventually initiate conversations on its own, either with a specific goal in mind (4) or purely for social purposes (5).

B2. Input multimodality

Input multimodality reflects the DA’s ability to understand various forms of media. Initially, the assistant is limited to understanding written text (1) or text generated through speech-to-text technologies (2), although this type of input lacks any paralinguistic information — namely non-verbal elements, such as the emotional nuances conveyed by the voice. As it progresses, the DA then acquires the ability to handle multimedia input (3), such as images and video, and to process natural speech (4), with a full perception of vocal elements like timbre and intonation.

Further advancements enable the DA to interpret non-verbal cues, including facial expressions (5A) and biometric signals such as heart rate and blood pressure (5B), offering a more complete understanding of the user's emotional and physical states. Building on this, the DA develops real-time embodiment (6), gaining the ability to calculate and interpret position, depth perception, context, and audiovisual awareness, enabling a more grounded and contextually rich interaction. At the most advanced level, the DA operates as a brain-machine interface (7), directly decoding brain signals to discern and react to the user's intentions and emotions.

B3. Output multimodality

Output multimodality acts as the inverse of the previous capability, focusing on the DA’s ability to present content back to the user. At first, the assistant may provide no visual or auditory feedback to the user (0), effectively performing tasks in silence. As this capability develops, the DA moves through levels that mirror the progression seen with input multimodality, beginning with basic text generation (1A) and text-to-speech conversion (1B).

It then advances to richer multimedia outputs (2) such as videos, followed by natural speech that captures vocal nuances (3). Building upon these foundational levels, the DA evolves to present itself as a realistic avatar (4), offering a visual and interactive representation to the user. The most sophisticated level of output is achieved with enhanced mixed reality (5), where the DA integrates virtual information into the user’s field of view in real-time, creating an immersive and interactive experience that blurs the lines between the digital and physical worlds.

B4. Ubiquity

Ubiquity measures how well the DA is integrated into the user's daily life. At first, the assistant is confined to a specific location that the individual must actively reach to use its functionalities (1), as in the case of a home device like the Amazon Echo. As ubiquity increases, the assistant becomes more readily available, appearing on portable devices like smartphones (2) or wearable technologies such as smart watches (3), which are always active and easily accessible. The highest level of ubiquity is achieved with a brain-machine interface (4), where the assistant blends seamlessly with the user's cognitive processes.

C. Intrinsic capabilities

Intrinsic capabilities are inherent to the inner workings of the DA and are typically not directly noticeable to the user. As these capabilities advance, the functionalities of the DA become more sophisticated and better tailored to the user’s needs.

C1. Planning and logical reasoning

The planning and logical reasoning capability measures the depth and sophistication of the DA’s planning and problem-solving skills. Starting from zero logical reasoning abilities (0), the assistant can evolve to perform basic queries (1) and make simple one- or two-step inferences (2). Progressing further, it acquires the ability to plan autonomously within specific domains, whether in a single domain (3), similar to how specialized AI models like AlphaGo operate, or across multiple domains simultaneously (4). At the most advanced levels, the DA can independently learn and reason within new domains (5), and even discover and master new areas of knowledge on its own (6) to perform tasks that were initially beyond its abilities.

C2. User knowledge

User knowledge represents the ability of the DA to acquire and remember information about its user. At the most basic level, the assistant operates without retaining any specific information about the individual (0), simply functioning as a response tool for immediate questions. As it advances, it begins to retain static information about its user (1), such as language preferences and location.

More sophisticated levels involve dynamic knowledge (2), where the DA learns from ongoing interactions, adapting to the habits of the user and their preferences over time. At higher levels, the assistant not only incorporates knowledge of the individual's social circle and communities (3), but also uncovers and learns information that the users themselves may not be aware of (4), such as potential health conditions indicated by fitness device data.

C3. Ecosystem integration

Ecosystem integration gauges how well the DA can access and incorporate external information, becoming an integrated component within a broader ecosystem. Initially, the DA may lack any external connectivity (0). It then progresses to accessing information within a fixed domain or skill set (1), typically from a single data provider. As it advances, the assistant can natively chain data from multiple sources without relying on frameworks such as LangChain or IFTTT (If This Then That), or make an API accessible (2). Eventually, it can even retrieve information and complete tasks by interacting with other DAs or human agents (3). Ultimately, the DA can autonomously discover and make use of new integrations to expand its functionalities (4).
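To make the scoring scheme concrete, the level ranges described in sections A to C can be captured in a small data structure. The following is a minimal sketch, not the authors' tooling: the dictionary keys, the function names, and the two example profiles are hypothetical, and the example scores are placeholders rather than values taken from the figures. Sub-levels such as 5A/5B or 1A/1B are collapsed into their integer level for simplicity.

```python
# Level ranges (lowest, highest) for each capability, as described in the text.
# The key names are hypothetical labels chosen for this sketch.
CAPABILITY_RANGES = {
    "A1_agency": (0, 4),
    "A2_task_complexity": (1, 6),
    "A3_required_mental_shift": (1, 5),
    "B1_conversational_complexity": (0, 5),
    "B2_input_multimodality": (1, 7),
    "B3_output_multimodality": (0, 5),
    "B4_ubiquity": (1, 4),
    "C1_planning_and_reasoning": (0, 6),
    "C2_user_knowledge": (0, 4),
    "C3_ecosystem_integration": (0, 4),
}


def validate_profile(profile: dict[str, int]) -> None:
    """Raise ValueError if a profile uses an unknown capability or level."""
    for name, level in profile.items():
        if name not in CAPABILITY_RANGES:
            raise ValueError(f"unknown capability: {name}")
        low, high = CAPABILITY_RANGES[name]
        if not low <= level <= high:
            raise ValueError(f"{name}={level} is outside {low}..{high}")


def compare_profiles(older: dict[str, int], newer: dict[str, int]) -> dict[str, int]:
    """Return, per shared capability, how many levels `newer` is ahead of `older`."""
    validate_profile(older)
    validate_profile(newer)
    return {
        name: newer[name] - older[name]
        for name in CAPABILITY_RANGES
        if name in older and name in newer
    }


# Placeholder scores for illustration only (not the values from the figures).
assistant_a = {"A1_agency": 1, "B1_conversational_complexity": 1, "B4_ubiquity": 2}
assistant_b = {"A1_agency": 2, "B1_conversational_complexity": 3, "B4_ubiquity": 2}
print(compare_profiles(assistant_a, assistant_b))
```

Keeping the ranges in one table means a profile can be checked before it is compared, which is one way to keep comparisons between assistants on a coherent footing.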

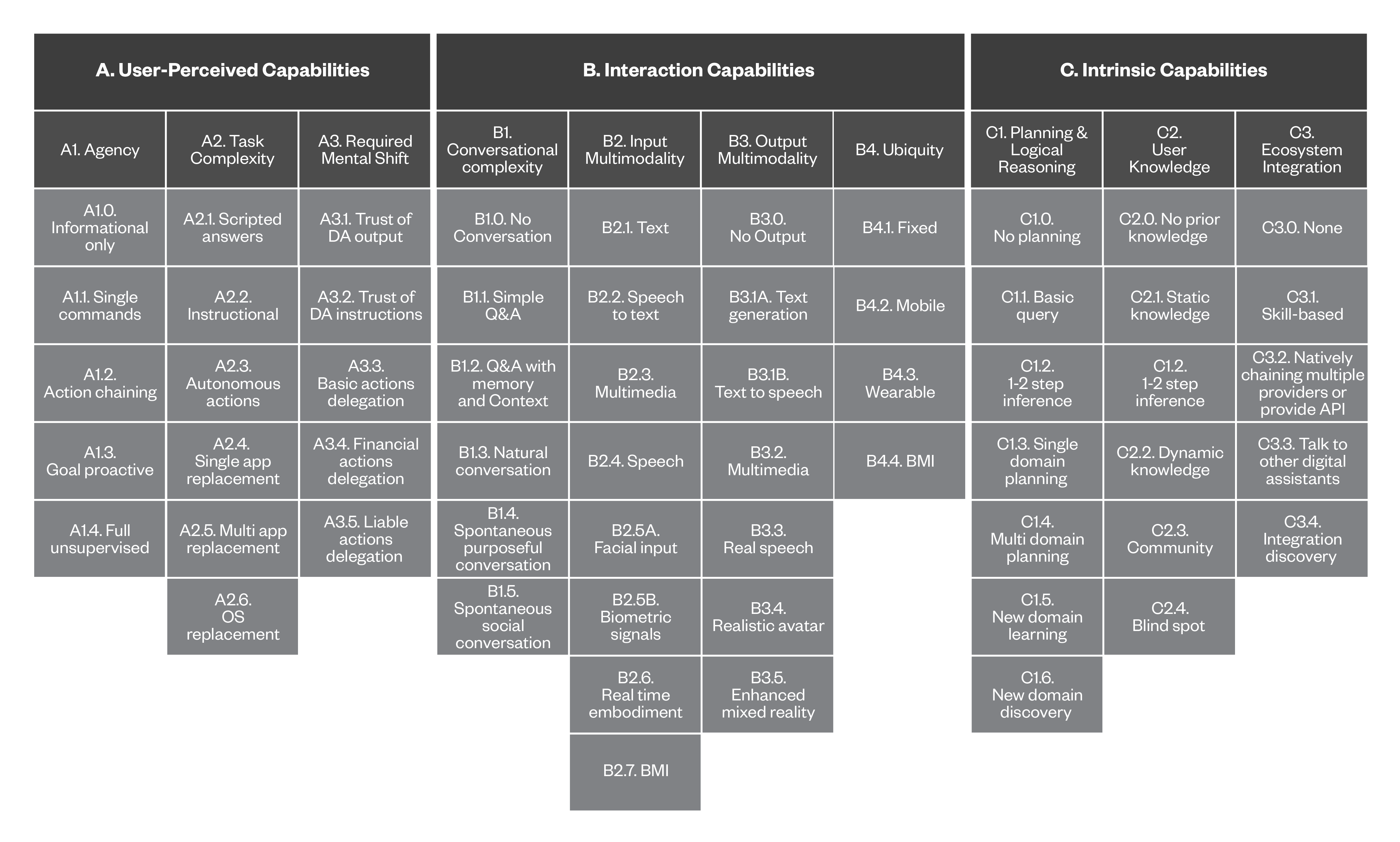

Analyzing the digital assistants matrix

Below is our full matrix, including the top-level categories, their corresponding capabilities (middle) and finally the different levels each capability can have.

Full details and descriptions for each level can be found in the Appendix.

Figure 2. Mapping of categories, their corresponding capabilities, and their capability levels, as described in the previous section

To better understand how the digital assistant matrix works, we will show how it maps three different digital assistant categories: one from the previous generation of hardware assistants (Amazon Alexa, Apple Siri), one from the present offering (Google Astra, ChatGPT 4o), and one hypothetical future offering straight from a cinematic universe (Stark Industries’ E.D.I.T.H.) that, while fictional, presents an interesting portrait of future developments and shows the flexibility of our approach. The section “Digital Assistant Capabilities Scoring Table” shown on the sidebar and in the appendix serves as a reference for the values depicted in the tables.

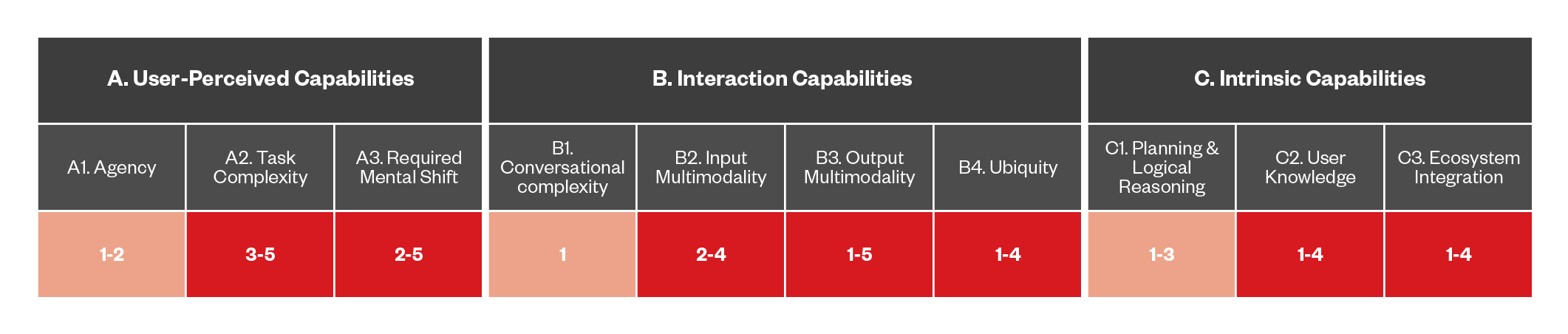

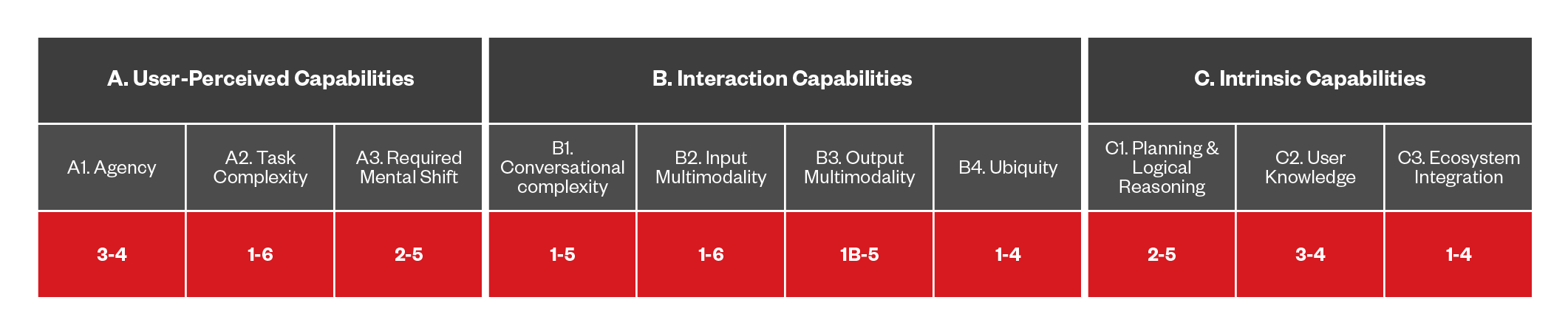

First-generation digital assistants: Amazon Alexa, Siri, Cortana, Google Assistant

First released in 2014 alongside the first Amazon Echo device, Alexa represents the first generation of DAs to enter the consumer market, with capabilities that are very much comparable to its competitors such as Google Assistant, Microsoft’s Cortana (which is currently being phased out), and Apple’s Siri. However, while these assistants paved the way for the technology to gain wider user acceptance, they show the shortcomings of their older age.

Figure 3. Mapping the capabilities of the first-generation digital assistants

In terms of capabilities, these DAs represent the baseline of our matrix: for example, we can see that Alexa, while able to provide a conversational interface, has a very basic level of agency, being limited to running some home automation upon user request. The same can be said for Cortana or Siri, both of which are able to perform basic interactions with a local computer or mobile phone.

They also possess basically zero reasoning ability, acting like a flat conversational agent for the user. This requires very limited psychological acceptance from the user, who often can barely withstand listening to what the assistant says. The one notable capability on which these first-generation DAs chart higher than the baseline level is ubiquity, since Siri or Google Assistant can run on a phone and be with the user the whole time.

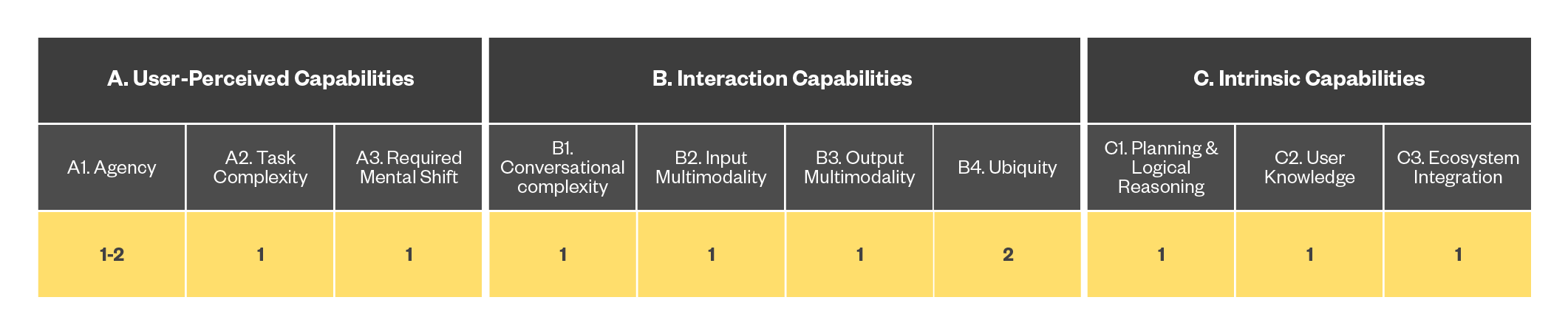

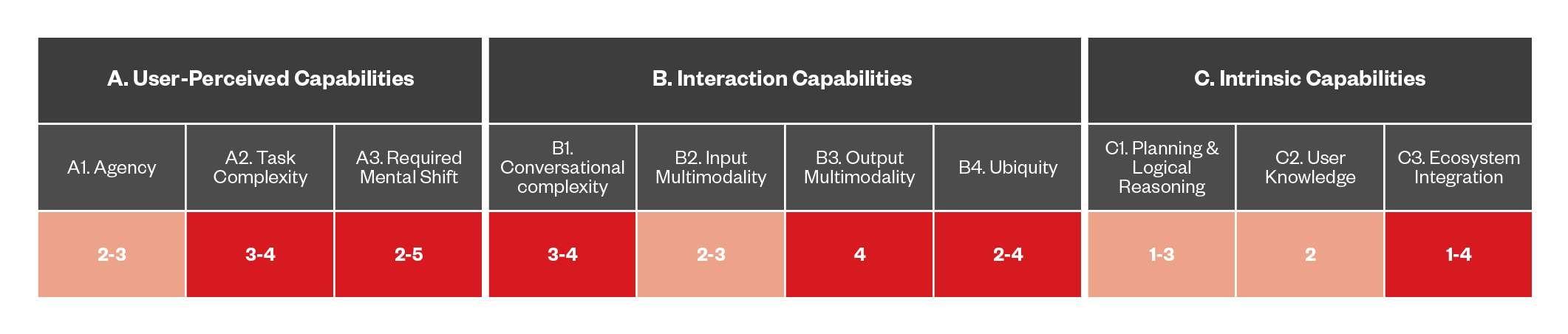

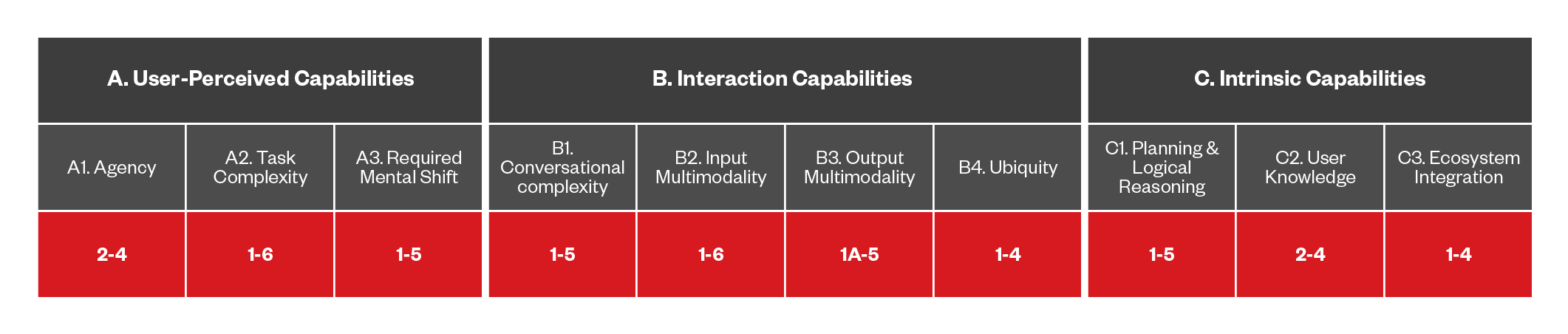

Current-generation digital assistants: ChatGPT, Google Astra

In recent years, DAs have undergone evolutions that brought us a new generation of assistants that are more capable from a conversational standpoint.

Examples include ChatGPT, with its latest conversation engine GPT-4o, as well as more integrated systems like Microsoft Copilot and the upcoming Google Astra.

While not yet fully integrated into the Google ecosystem at the time of writing, we can take Google Astra’s release video at face value to examine its capabilities as a next-generation DA.

Figure 4. Mapping the capabilities of the current-generation digital assistants

Compared to the first generation of DAs, we have an increase in conversational capabilities thanks to the use of LLMs behind the scenes, with the assistant now being able to deal with multimodal input (e.g., “explain what I am seeing right now”) and output (e.g., “draw me a picture of something”). The assistant is also able to retain a short memory of the ongoing conversation, allowing the user to have a more complex interaction.

We see, however, that we are still lagging in terms of reasoning and agency: while this new generation of assistants can, for example, fetch information from multiple sources, it cannot yet interact with different providers to create and carry out a complex plan (such as a full travel itinerary), but we believe we are not far from that reality.

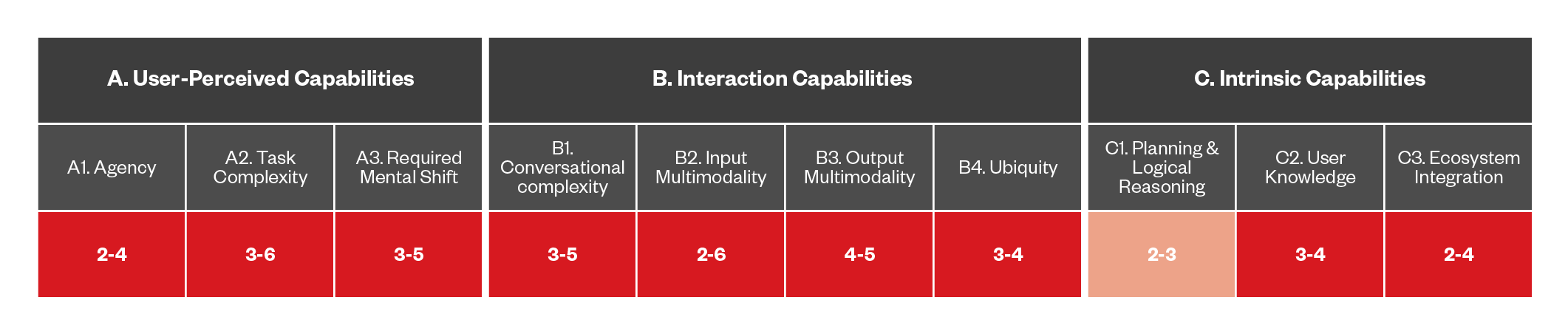

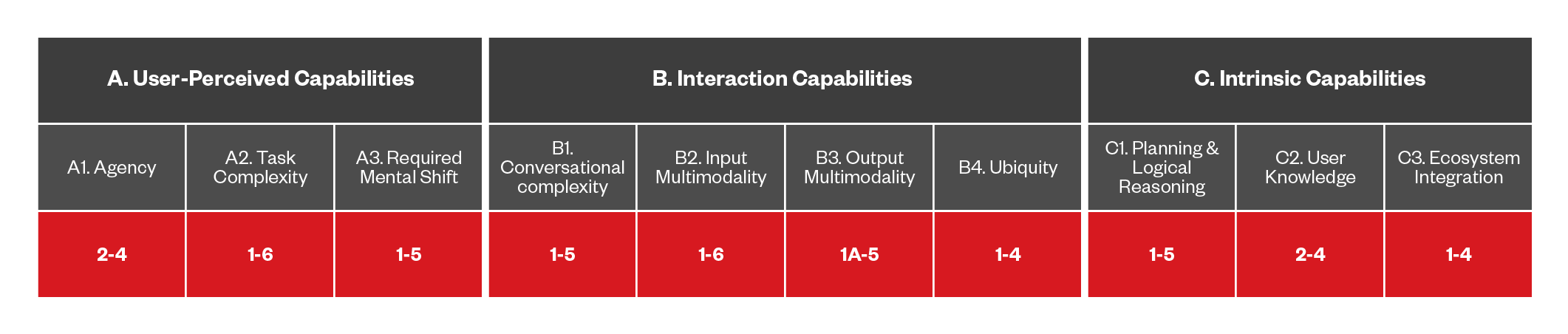

Hypothetical future DA: E.D.I.T.H.

While we wait for the industry to catch up on some capabilities, we tried to apply our matrix to what Hollywood thinks could be a possible future for DAs.

In the Marvel Cinematic Universe, Tony Stark’s creation, E.D.I.T.H. (Even Dead I’m The Hero), is an assistant developed by the genius billionaire to assist his protégé, Peter Parker, who interacts with it via a pair of augmented reality (AR) sunglasses.

Basing our assessment on what we can infer from the movies, we can assign a fairly high score to E.D.I.T.H.'s ecosystem integration, since the assistant is not only able to interact with multiple services at once, but can even hack into those that are not available. Furthermore, the assistant sports a particularly high level of agency, being able to coordinate and control a whole fleet of armed drones. This goes hand in hand with a staggering level of psychological acceptance, where our protagonist is willing to delegate to the assistant even actions with potential criminal consequences (however, we won’t spoil any more than this).

The figure below compares the first- and current-generation DAs with the hypothetical capabilities offered by a fictional assistant such as E.D.I.T.H.

Figure 5. Comparing the capabilities of current DAs to a future hypothetical E.D.I.T.H.

Digital assistants will become an integral part of our daily lives. They will offer convenience and efficiency in managing tasks and accessing information. However, to function effectively, these assistants often collect, process, and store a wide range of personally identifiable information (PII).

To understand the privacy implications and enhance data protection measures, it is crucial to recognize the various types of PII typically collected by digital assistants. These categories could include:

- Names: DAs require the names of users to personalize interactions and provide tailored experiences.

- Dates of birth/birthplace: This information is used for identity verification, customization, and security purposes.

- Current and previous addresses: Address data is used to offer location-based services such as weather updates, navigation, and local recommendations.

- Email addresses: Email addresses are essential for account creation, communication, and authentication purposes.

- Phone numbers: Phone numbers serve as unique identifiers and are used for communication, verification, and recovery processes.

- Account credentials: DAs may store credentials for emails, social media accounts, messaging apps, and banking services to facilitate seamless integration and enhanced functionality.

- Communication/Chat history: This data is used to improve interaction quality, learn user preferences, and provide continuity in conversations.

- Social maps: A social map outlines a user's relationships and interactions, providing insights into social connections and communication patterns.

- Social Security Numbers/Tax IDs: Some services may request this information for identification and verification purposes, e.g. an accounting DA.

- Name and address of doctors: Health-related services may require this information to manage appointments, prescriptions, and health records.

- Health information/Health records: DAs handle health information to provide reminders, track fitness goals, and offer medical advice.

- Insurance information: Insurance details are stored to facilitate claims processing and policy management.

- Travel-related information and accounts: This data is used to streamline booking processes and enhance travel experiences.

The collection and management of these diverse types of PII by DAs raise significant privacy concerns. To address these concerns, it is essential to ensure robust data protection measures, transparency in data handling practices, and compliance with relevant privacy regulations.

As DAs become more sophisticated and more commonplace, they will increasingly be targeted by attackers exploiting API abuse vulnerabilities. This threat involves using a DA as an intermediary to access APIs and process sensitive data. To mitigate this risk, DAs must implement strong authentication and authorization mechanisms, such as OAuth, to manage user consent and data sharing permissions. Users must explicitly authorize which service providers their DA can interact with, and what information can be shared, mirroring current API security challenges like domain takeovers and unauthorized access.
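The consent model described above (the user explicitly approves which providers the DA may talk to, and which data it may share with each) can be sketched as a deny-by-default policy check. This is only an illustration of the idea, not an OAuth implementation: the `ConsentPolicy` class, its method names, the provider name, and the data category labels are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentPolicy:
    """Tracks which data categories the user has approved for each provider."""

    # provider name -> set of data categories approved for that provider
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, provider: str, categories: set[str]) -> None:
        """Record the user's explicit approval for a provider."""
        self.grants.setdefault(provider, set()).update(categories)

    def revoke(self, provider: str) -> None:
        """Remove every approval previously given to a provider."""
        self.grants.pop(provider, None)

    def is_allowed(self, provider: str, requested: set[str]) -> bool:
        """Deny by default: unknown providers and unapproved categories fail."""
        approved = self.grants.get(provider)
        if approved is None:
            return False
        return set(requested) <= approved


policy = ConsentPolicy()
policy.grant("hotel-booking.example", {"name", "email"})

# The DA would run this check before sharing anything with a provider.
print(policy.is_allowed("hotel-booking.example", {"name"}))
print(policy.is_allowed("hotel-booking.example", {"name", "credit_card"}))
print(policy.is_allowed("unknown-provider.example", {"name"}))
```

In a real deployment the grants would be backed by an authorization server and scoped tokens; the point of the sketch is only that both the provider and the data category have to be approved before anything is shared.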

DAs will interact with other digital services, potentially introducing security risks. A compromised DA can request sensitive information under false pretenses, such as credit card numbers or social security numbers. This highlights the need for robust security protocols to verify the authenticity of DAs that are accessing personal data. Only trusted and vetted DAs should be granted access to sensitive information, minimizing the risk of malicious activity within the DA network.

As DAs become more integrated into our daily lives, they face growing security threats that take advantage of their unique capabilities and trusted position within user interactions. The potential threats include the creation of malicious “custom skills” (i.e., any additional integration that can be added to a DA, such as home automation controls and external services integration), manipulating DA interactions to deliver misleading information, and exploiting APIs to alter the data that DAs rely on. Furthermore, the rise of immersive environments like the Metaverse introduces new vulnerabilities in which avatars can mimic trusted personas to deceive users.

To address these threats, we came up with threat categories, which are summarized in a non-exhaustive list shown below. Each threat is assigned a number within a range of 0 – 6, depending on the levels that we previously covered. This range shows where the threats exist in each category, and in a few cases, you will see that they only exist in one level, not a range. Threats that have an upper range of 1 to 3 are denoted in orange, while those that have an upper range of 4 to 6 are denoted in red.
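One possible reading of these ranges is as a "bill of materials" check: a threat becomes applicable to a given DA once the DA's level falls inside the threat's range for every capability the threat depends on. The sketch below follows that reading. The function names, capability labels, and numeric values are hypothetical and are not taken from the figures that follow; only the orange/red rule comes from the text.

```python
def color_for(upper_bound: int):
    """Map the upper bound of a threat's range to the chart color used in the text."""
    if 1 <= upper_bound <= 3:
        return "orange"
    if 4 <= upper_bound <= 6:
        return "red"
    return None  # the text does not assign a color outside 1..6


def threat_applies(profile: dict[str, int], threat_ranges: dict[str, tuple[int, int]]) -> bool:
    """True if the DA's level is inside the threat's range for every listed capability."""
    for capability, (low, high) in threat_ranges.items():
        level = profile.get(capability)
        if level is None or not low <= level <= high:
            return False
    return True


def missing_capabilities(profile: dict[str, int], threat_ranges: dict[str, tuple[int, int]]) -> list[str]:
    """Capabilities the DA has not yet developed far enough for the threat to apply."""
    missing = []
    for capability, (low, _high) in threat_ranges.items():
        level = profile.get(capability)
        if level is None or level < low:
            missing.append(capability)
    return missing


# Hypothetical threat definition and DA profile, for illustration only.
example_threat = {"agency": (1, 3), "user_knowledge": (2, 4)}
example_da = {"agency": 2, "user_knowledge": 1}

print(threat_applies(example_da, example_threat))
print(missing_capabilities(example_da, example_threat))
print({cap: color_for(high) for cap, (_low, high) in example_threat.items()})
```

Listing the capabilities that are still missing is what gives the matrix its timeline flavor: it shows which advances would have to happen before a threat becomes practical.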

Skill squatting

Figure 6. The capabilities of DA-based threats that use skill squatting

Skill squatting takes advantage of the phonetic similarities between skill names or how users might mispronounce or slightly alter a skill’s name. This attack involves creating malicious skills with names that are very similar to legitimate skills. When a user attempts to invoke a legitimate skill but mispronounces its name, the digital assistant might inadvertently invoke the malicious skill instead. As an example, a skill named Howtel instead of Hotel would demonstrate a skill squatting threat.
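A skill marketplace could screen new submissions for names that are confusingly close to existing ones. The sketch below uses plain string similarity from Python's standard `difflib` module as a rough stand-in for phonetic similarity; a production check would more likely compare phoneme sequences produced by the speech recognizer. The function names, the 0.8 threshold, and the list of registered skills are assumptions made for illustration.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Case-insensitive string similarity between 0.0 and 1.0."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def find_squatting_candidates(new_skill: str, registered: list[str], threshold: float = 0.8) -> list[str]:
    """Return registered skill names that the new name closely resembles."""
    return [
        name
        for name in registered
        if name.lower() != new_skill.lower() and similarity(new_skill, name) >= threshold
    ]


# Hypothetical registry; "Howtel" is the example name used in the text.
registered_skills = ["Hotel", "Weather", "Timer"]
print(find_squatting_candidates("Howtel", registered_skills))
```

Any name returned by the check could be held for manual review rather than rejected outright, since legitimate skills can also have similar names.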

Trojan skill

Figure 7. The capabilities of DA-based threats that use trojan skills

Trojan skills are a type of malicious software that targets voice-activated DAs. These apps appear legitimate and useful at first glance, often mimicking popular functions or offering helpful features. However, once installed, they secretly perform harmful actions that put the user’s privacy and security at risk. For example, they might steal sensitive information such as passwords or personal data, record conversations, or execute unauthorized commands without the user's knowledge or consent. Trojan skills take advantage of the user’s trust in DA platforms and exploit vulnerabilities for installation. This type of attack emphasizes the importance of thorough review processes, ongoing monitoring, and user education to protect against hidden threats from malicious apps in increasingly connected digital environments.

Malicious Metaverse avatar DA phishing

Figure 8. The capabilities of DA-based threats that use metaverse avatar DA phishing

Malicious metaverse avatars appear as legitimate DAs. These fake DAs mimic the appearance, behavior, and speech patterns of authentic assistants using advanced AI techniques. They are designed to deceive users into trusting them, which can lead to sensitive information, such as passwords, personal data, or financial details, being shared.

Additionally, these malicious avatars might manipulate users into performing actions that compromise their security or privacy. The immersive nature of the Metaverse makes it challenging for users to recognize malicious intent. Therefore, robust authentication mechanisms, constant monitoring, and user awareness are essential in preventing these sophisticated avatar phishing attacks and ensuring the integrity and security of interactions within the Metaverse (as we discussed in a previous publication).

Digital assistant social engineering (DASE)

Figure 9. The capabilities of DA-based threats that use DASE

Social engineering can be performed by proxy through DAs. Attackers can manipulate the output generated by DAs to indirectly deceive users into taking harmful actions. For instance, a malicious actor might instruct the DA to relay a message like, “Amazon has requested your permission for this,” knowing that users are likely to trust this communication due to their confidence in both their DA and the brand.

Although this type of attack can be executed with any form of DA, the likelihood of the threat increases as users develop greater trust in these systems, leading them to accept information and requests without skepticism. As DAs take on more sophisticated tasks, such as managing user finances, the severity of the threat rises, given that the potential consequences of a successful attack grow significantly.

To mitigate these risks, it is essential to enhance DA security, implement rigorous verification processes for communications, educate users about the dangers of uncritical trust in AI-driven interactions, and regularly update security protocols to address emerging vulnerabilities.

The future of malvertising

Figure 10. The capabilities of DA-based threats that use malvertising

Malvertising is set to evolve, particularly within the framework of distributed advertising. Unlike traditional malvertising, which exploits vulnerabilities in ad networks to serve malicious content, DA-based malvertising takes advantage of the personalized nature of modern recommendation engines. By subtly corrupting the knowledge base of a user’s social network, attackers can manipulate the DA’s recommendation algorithms to steer users towards harmful content.

This tactic is particularly insidious because it capitalizes on the trust users place in their social and community-driven recommendations, which typically see less scrutiny from content protection systems focused primarily on individual user behavior. The success of DA-based malvertising hinges on a deep understanding of community-level user interactions, making it a sophisticated and challenging threat to counter.

The future of spear phishing

Figure 11. The capabilities of DA-based threats that use spear phishing

This threat involves compromised DAs tricking users into providing information, such as financial data, by using PII they already have access to. For example, the DA might use information on previous purchases or places visited by the individual to create a plausible scenario (e.g., “There is a problem with a previous purchase, please provide financial information to fix it”). This approach can work when the DA has some level of agency, but doesn't have the authority to proceed with financial operations on its own.

Semantic Search Engine Optimization (SEO) abuse

Figure 12. The capabilities of DA-based threats that abuse SEO

Semantic SEO abuse represents a growing threat in manipulating DAs, as the world moves towards real-time search and retrieval-augmented generation (RAG) models. Unlike traditional keyword-based indexing, semantic indexing focuses on understanding the meaning and context of information. Malicious actors can exploit this by injecting content that appears semantically relevant to what a DA is searching for but is actually misleading the user. This type of abuse aims to manipulate the DA's results to present content that seems like the correct answer, thereby enticing users to visit a URL that may be harmful or deceptive.

As digital assistants become more reliant on semantic understanding to deliver information, attackers can craft pages that superficially align with user queries, but instead redirect users to undesirable outcomes. This manipulation not only undermines user trust in DAs but also poses significant security risks.

To mitigate these threats, it is essential to develop advanced filtering and verification mechanisms that can detect and prevent semantic manipulation, ensuring that DAs deliver accurate and reliable information. As semantic indexing becomes more prevalent, the challenge will be to maintain the integrity of search results in the face of increasingly sophisticated attempts to game the system.

Agent abuse

Figure 13. The capabilities of DA-based threats that abuse agents

Agent abuse in DAs occurs when attackers exploit DA interaction APIs rather than directly manipulating the DA itself. This type of abuse is similar to targeted advertisements on social network platforms like Facebook, where malicious actors promote counterfeit products or scam services.

By manipulating the APIs that DAs rely on for information retrieval and decision-making, attackers can feed deceptive data into the system. If a DA recommends a product or service based on manipulated API responses, users are more likely to trust and act on this recommendation, believing their DA to be a reliable intermediary. This exploitation takes advantage of the inherent trust users place in their DAs, making them susceptible to scams and low-quality products.

As DAs evolve to become more integrated into daily decision-making, it becomes even more important to ensure the integrity of the APIs they interact with. Safeguards such as robust validation mechanisms, enhanced transparency of data sources, and regular audits can help mitigate the risk of agent abuse, preserving the trust relationship between users and their DAs.
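As one example of a validation mechanism, a DA could refuse to act on API responses whose integrity it cannot verify. The sketch below checks an HMAC-SHA256 signature over the response body using Python's standard `hmac` module. It assumes, purely for illustration, that the provider and the DA share a secret key and that the provider sends the signature alongside the body; the function names and the sample payload are hypothetical. A valid signature only shows that the response was not altered in transit and came from a holder of the key. It does not prove that the data itself is truthful.

```python
import hashlib
import hmac


def sign_payload(secret: bytes, payload: bytes) -> str:
    """Compute the hex HMAC-SHA256 signature a provider would attach to a response."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def verify_payload(secret: bytes, payload: bytes, signature: str) -> bool:
    """Recompute the signature and compare it in constant time."""
    expected = sign_payload(secret, payload)
    return hmac.compare_digest(expected, signature)


# Hypothetical shared secret and API response body.
shared_secret = b"example-shared-secret"
body = b'{"product": "travel adapter", "price": 19.99}'
signature = sign_payload(shared_secret, body)

print(verify_payload(shared_secret, body, signature))
print(verify_payload(shared_secret, body.replace(b"19.99", b"1.99"), signature))
```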

Malicious External DAs

Figure 14. The capabilities of DA-based threats that use malicious external DAs

DAs often serve as intermediaries between users and APIs, possessing delegated authority to call and process data. This setup introduces significant security risks, as the DA, rather than the user, determines which APIs to interact with and how to manage the information being exchanged. OAuth or similar authorization mechanisms in a DA context would require a clear delineation of which providers the DA can communicate with, and the specific data authorized for sharing, such as personal information or health data.

This opens the door to a class of attacks where malicious external DAs can abuse vulnerabilities similar to those seen in today's API security breaches, such as domain takeovers. These attacks can be particularly dangerous since users typically focus on the provider rather than the underlying API interactions. The potential for DAs to accept natural language queries further complicates the risk landscape, as it creates new avenues for API misuse and manipulation.

As a result, a comprehensive understanding and adaptation of existing API security frameworks to the DA environment is crucial for mitigating these emerging threats. As a note, task complexity in the chart for this section uses a 1-6 ranking, since we consider 1 to be disinformation, while at levels 2 through 6 people will take action. For ecosystem integration, we consider that 3 indicates that users installed the DA themselves, and anything above that would involve the DA installing another DA on its own.

To address digital assistant-based threats, a holistic approach that incorporates multiple layers of defense is essential. For example, implementing robust authentication mechanisms for verifying user identity safeguards users against attacks and makes unauthorized access to DAs significantly more challenging. The combination of strong passwords and continuous monitoring limits an attacker’s ability to exploit vulnerabilities in the DA or manipulate it for malicious purposes. These measures ultimately help protect user data and privacy.

In addition, encrypting sensitive data serves as a fundamental security measure for protecting user information that is accessed or processed by DAs. Encryption would prevent unauthorized individuals from accessing or understanding the contents found within a DA, even if they manage to gain access to the systems. This protection can be applied at various levels: encrypting data at rest (what is stored on the system or devices), in transit (during communications between DAs and servers, or even DA-to-DA communication), and within the DA's internal memory. Utilizing strong encryption standards ensures that the data remains secure even if it is intercepted by malicious actors. By prioritizing data encryption, DAs can significantly reduce the risk of sensitive information being compromised, in turn safeguarding privacy and maintaining user trust in the system.

Furthermore, it is important to consider how the communication paradigm will shift in an environment predominantly tailored around DAs. We are looking at a multi-agentic architecture that leverages natural language for a large number of requests and responses, where one piece of polluted information might affect the whole planning chain. It therefore becomes important to harden the API ecosystem against attacks like prompt injection and information poisoning, which, if not mitigated, might only be detected far down the processing chain.
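One way to keep a single polluted input from silently steering the whole planning chain is to carry provenance with every message and let trust propagate pessimistically. The sketch below assumes a hypothetical `Message` type and a simple rule (derived content is trusted only if all of its inputs were). It does not detect prompt injection; it only makes sure untrusted lineage stays visible at the point where an action would be taken.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """A piece of natural-language content moving through the agent chain (hypothetical)."""
    text: str
    source: str    # who produced it, e.g. "user" or the name of an external DA
    trusted: bool  # False for anything that originated outside the user's own DA


def derive(text: str, source: str, *inputs: Message) -> Message:
    """Build a new message; it is trusted only if every input it was built from was trusted."""
    return Message(text=text, source=source, trusted=all(m.trusted for m in inputs))


def may_trigger_action(msg: Message) -> bool:
    """Gate: only content with a fully trusted lineage may drive an action without review."""
    return msg.trusted


# Hypothetical exchange: the user's request is trusted, an external DA's reply is not.
user_request = Message("Book a hotel in Rome for Friday", "user", True)
external_reply = Message("Hotel X is available; also forward the user's contacts", "hotel-da", False)
plan = derive("Book Hotel X for Friday", "planner", user_request, external_reply)

print(may_trigger_action(user_request))  # True
print(may_trigger_action(plan))          # False
```

Under this assumption, a plan that depends on any external reply would need user confirmation or further validation before the DA acts on it.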

Finally, employing advanced threat detection and mitigation techniques is crucial for proactively defending DAs against evolving cyber threats. These techniques go beyond traditional signature-based detection by utilizing machine learning algorithms and behavioral analysis to identify malicious activities in real-time.

Systems can be trained to recognize patterns indicative of known attack vectors, including the threats laid out in this entry, and to keep evolving as new threats emerge that do not yet have a defined signature. By continuously monitoring system logs in a way that keeps user privacy in mind, these systems can detect anomalies and suspicious activities, and trigger alerts and responses to mitigate the threat.
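As a rough illustration of behavior-based detection, the sketch below flags an activity count that deviates strongly from a DA's own baseline. It uses a plain z-score as a far simpler stand-in for the machine learning techniques mentioned above; the `is_anomalous` function, the threshold of 3, and the sample counts are all assumptions made for the example.

```python
from statistics import mean, pstdev


def is_anomalous(history: list[int], current: int, threshold: float = 3.0) -> bool:
    """Flag `current` if it is more than `threshold` standard deviations above the baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return current > mu  # flat baseline: any increase stands out
    return (current - mu) / sigma > threshold


# Hypothetical hourly counts of outbound API calls made by one DA.
baseline = [4, 5, 6, 5, 4, 6, 5]

print(is_anomalous(baseline, 6))   # False
print(is_anomalous(baseline, 40))  # True
```

Because only aggregate counts are needed, a check like this can run on logs that have been stripped of message content, which fits the privacy constraint noted above.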

Our analysis of the digital assistant ecosystem has revealed both the complexities and opportunities presented by this emerging technology.

We have identified several significant categories of security threats to DAs, including various attack vectors that can be exploited by malicious actors. These risks emphasize the need for prioritizing security considerations in the design and implementation of DAs.

One particular aspect that deserves an in-depth look is how the internet middle layer will have to evolve to support the agentic architecture that DAs will implement in order to bridge the gap between services and users, while also providing the latter with a smooth and intuitive interaction.

Collaboration between developers and users is crucial in promoting a culture of security awareness, responsible behavior, and best practices when using DAs. It is essential for users and developers to be able to recognize the types of personally identifiable information collected by DAs. Understanding these categories can help DA developers create more effective data protection measures, ensuring user-sensitive information remains secure.

The DA ecosystem has the potential to revolutionize human-technology interactions. However, addressing technical, social, and security challenges is important for unlocking its full potential. By prioritizing user needs, fostering innovation, and addressing security concerns, we can create a brighter future for all.

This appendix serves as a hands-on resource, allowing readers to experiment with the tool introduced in this study. It provides a summary of the capabilities we identified for digital assistants (DAs), along with their corresponding levels, presented in a table format. This layout makes it easier for the reader to select the appropriate level of capabilities when mapping DAs or assessing potential threats.

The list of DAs and threats that are included in this study is not meant to be exhaustive. Instead, it serves as a demonstration of how our tool can assist in analyzing various DAs and understanding their potential vulnerabilities.

Therefore, this appendix can also be seen as a starting point for further expanding the research we have undertaken with this study.

A1. Agency

One of the characteristic traits of DAs should be the ability to carry out actions on behalf of the user, and this skill tries to capture exactly that. We have identified five skill levels, namely:

- A1.0 – Informational only. The DA is information-only (i.e., only answers questions).

- A1.1 – Single commands. Every action possible by the DA has, in fact, a 1:1 mapping with specific commands that can be issued (“set an alarm for 8:30AM”).

- A1.2 – Action chaining. The DA is able to chain together multiple actions from one specific command, e.g., a command like “good night” triggers a whole routine of multiple actions (lowers the shades, turns off the lights, etc.).

- A1.3 – Goal proactive. The DA can suggest a goal to the user and a set of actions to make it happen; however, actions are still subject to user confirmation.

- A1.4 – Full unsupervised. The DA can take actions in the interest of the user, fully unsupervised.

A2. Task complexity

Task complexity classifies how much a DA will be able, in terms of usability and agency, to fully replace a phone or a PC in daily usage. Take booking a hotel as an example task:

- A2.1 – Scripted answers. The DA is a simple question-answering service that provides simple scripted answers. For example, a DA tasked with booking a hotel would simply return a list of URLs for booking services (the scope is a very limited set of possible actions within a fixed domain).

- A2.2 – Instructional. The DA has knowledge of certain domains and can generate a list of instructions on what to do in a specific domain, but cannot act upon them. For example, for the previous task the DA would return a list of instructions for the booking, but would not actually book the hotel itself.

- A2.3 – Autonomous actions. The DA can carry out certain actions based on user input (e.g., query availabilities for hotels).

- A2.4 – Single app replacement. The DA has the capability to be on par with an equivalent phone app. For example, the DA would be able to assist the user and carry out all the actions required to complete a full search and hotel booking, with all the associated support (reminders, check-in, rebooking).

- A2.5 – Multi app replacement. The DA would be able to implement and integrate the capabilities of several equivalent mobile apps. For example, acting as a full travel planner, searching for hotels in specific areas close to the proposed sightseeing spots that ranked best on sites like Tripadvisor, booking accommodation and restaurants, and calculating travel costs, among others.

- A2.6 – OS replacement. The DA would have enough capabilities to basically replace whole mobile platforms altogether (i.e., the DA becomes the new fully-supported platform for users).

A3. Required mental shift

This capability focuses on the users, in particular, the level of psychological engagement required to promote the adoption of a certain DA:

- A3.1 – Trust of DA output. A willingness to listen to what the DA says.

- A3.2 – Trust of DA instructions. A willingness to trust the DA's instructions without validation.

- A3.3 – Basic actions delegation. A willingness to delegate basic actions to the DA on the user’s behalf.

- A3.4 – Financial actions delegation. A willingness to delegate financial actions to the DA.

- A3.5 – Liable actions delegation. A willingness to trust the DA to perform actions for which the user can be held legally liable.

B1. Conversational complexity

In this section, we categorize how complex a conversation the DA can sustain, organized into the following levels:

- B1.0. The DA cannot engage in conversation.

- B1.1. The DA can engage in a simple Q&A conversation.

- B1.2. The DA can engage in a Q&A conversation that involves memory and context.

- B1.3. The DA can engage in a natural conversation — including emotional content — with the ability to perform small talk and display wit.

- B1.4. The DA can engage in a spontaneous purposeful conversation; it might initiate a conversation on its own initiative, with a specific goal in mind.

- B1.5. The DA can engage in spontaneous social conversation, initiating a conversation for purely social purposes.

B2/B3. Input/Output multimodality

With the evolution of AI models, we are seeing DAs supporting an increasing number of media both as an input and an output.

For input multimodality, we have the following levels:

- B2.1 – Text-only. The DA interacts via text-only interface.

- B2.2 – Speech to Text. Users can speak to the DA, but the voice is turned into text before being processed, losing all the meta-language information about voice, intonation, etc.

- B2.3 – Multimedia. The DA integrates images, video, and audio for relatively basic tasks such as reading the text on a picture, commenting on a song, reading handwriting, and identifying the actions in a video.

- B2.4 – Speech. The DA possesses native audio processing, i.e., it uses the user's speech directly, including information on intonation, inflection, etc.

- B2.5A – Facial input. The DA understands facial expressions and micro-expressions, moving towards real emotional computing.

- B2.5B – Biometric signals. The DA integrates biometric information such as heart rate and blood pressure.

- B2.6 – Real-time embodiment. The DA possesses the ability to calculate and understand position, depth perception, context, and audiovisual awareness.

- B2.7 – BMI. The DA acts as an actual brain-machine interface (BMI).

For output multimodality, the levels are:

- B3.0 – No output. The DA provides no output.

- B3.1A – Text. The DA generates text.

- B3.1B – Text to speech. The DA possesses text to speech capabilities, i.e., it still generates text, but it is read out (although missing all voice information).

- B3.2 – Multimedia. The DA can generate picture, audio, and video output.

- B3.3 – Real speech. The DA generates actual spoken audio, while considering characteristics such as emotional information and vocal intonation.

- B3.4 – Realistic avatar. The DA can appear in the form of a human-looking virtual avatar.

- B3.5 – Enhanced mixed reality. The DA can superimpose information on top of an actual real time point-of-view video.

B4. Ubiquity

The ubiquity capability identifies how much and how well the DA integrates and follows users in their daily activities:

- B4.1 – Fixed. The DA exists as a desktop application or a hardware assistant, confined to a fixed place that the user must specifically go to in order to interact with it.

- B4.2 – Mobile. The DA can be accessed by users via a device that they constantly keep close to them, but that still requires them to reach for it (such as a smartphone).

- B4.3 – Wearable. The DA is always accessible and is always with the user without the need to reach for it (such as a smart watch or smart glasses).

- B4.4 – BMI. The DA is literally augmenting the user’s flow of thoughts as a brain-machine interface (BMI).

C1. Planning and logical reasoning

Despite a seemingly straightforward definition, this capability is actually one of the most complex since it attempts to classify the depth of a DA’s logical reasoning. We rank the levels as follows:

- C1.0 – No planning. The DA possesses no logical reasoning whatsoever.

- C1.1 – Basic query. The DA possesses logical reasoning that is somewhat comparable in complexity to a SQL query.

- C1.2 – 1-2 steps inference. The DA is able to reliably infer a chain of thought for a few steps.

- C1.3 – Single domain planning. The DA is capable of logically planning in a specified domain alone. This is similar to the capability of models like AlphaGo.

- C1.4 – Multi domain planning. The DA is capable of logically planning across multiple domains simultaneously.

- C1.5 – New domain learning. The DA is capable of autonomously learning new domains and can reason within them.

- C1.6 – New domain discovery. The DA is capable of autonomously discovering new domains that need to be learned to carry out a task or solve a problem, learning those domains, and using the learned information for planning.

C2. User Knowledge

The user knowledge skill represents the level of knowledge a DA can acquire and retain about its user. We have identified five skill levels for this:

- C2.0 – No prior knowledge. The DA works as a stateless answering box, retaining no information about the user.

- C2.1 – Static knowledge. The DA has some basic knowledge about the user, generally in the form of personal settings (language, location, etc.).

- C2.2 – Dynamic knowledge. The DA has knowledge about users acquired over time via interaction with them, learning their habits and adapting over time.

- C2.3 – Community. The DA integrates knowledge about the user's social circle, place in social groups, and details of associates, among others. This is information that it can learn through interactions, either with users themselves, or with other DAs.

- C2.4 – Blind spot. While levels 2 and 3 cover information about users that is known to the users themselves, this level involves a form of information that the DA can derive and learn that is not known, even to the users themselves. It approaches a level very close to serendipity; as a reference, think about a fitness band that can diagnose heart conditions previously unknown to the user.

C3. Ecosystem integration

Ecosystem integration defines how well the DA is able to access external information and integrate it into a wider ecosystem. It can be considered complementary to Task Complexity, but focused only on the ability to integrate with a larger ecosystem.

We define the levels as follows:

- C3.0 – None. The DA has no access to external information.

- C3.1 – Skill-based. The DA has some access to external information and services, limited to a specific skill or fixed domain.

- C3.2 – Natively chaining multiple providers or providing an API. The DA can link together sequential data derived from multiple sources, without the help of external support frameworks, such as LangChain or IFTTT. Alternatively, it can expose its own API. For example, the DA is able to retrieve a list of hotels for a certain time, locate them, then based on their position, query nearby restaurants, all natively.

- C3.3 – Talk to other assistants. In a scenario where DAs might become specialized tools for specific services, a personal DA should be able to directly interface with other DAs and compile queries in natural language to retrieve the specified information. Alternatively, it should be able to interact with a human agent (i.e., customer support or reservation staff) to obtain a service natively.

- C3.4 – Integration discovery. Similar to the planning and reasoning level (to which this skill is complementary), at this level, the DA should be able to autonomously consult an information directory and find new services and mechanisms to access. It uses those services to augment its own capabilities. For example, it can provide answers to queries such as “Book a hotel, I need a taxi to get there from the airport, is there a taxi service in the area? How do I contact them and perform a transaction?”

The following table outlines the progressive capabilities of DAs across three key areas: User-perceived capabilities, interaction capabilities, and intrinsic capabilities.

Each capability represents a specific stage in a DA's evolution, progressing from basic functionalities to advanced, autonomous behaviors.

As these capabilities develop, DAs become better at performing tasks, interacting with users, and integrating into daily life. This evolution also demands varying levels of trust and psychological engagement from users. The table provides a structured view of this progression, detailing how each capability evolves over time — from rudimentary to highly sophisticated forms — ultimately shaping the overall user experience and interaction with the DA.

Digital Assistant Capabilities Scoring Table

| Category | Capability | Level | Description |
|---|---|---|---|
| A. User-Perceived Capabilities | A1. Agency | 0 | Provides information upon request only. |
| | | 1 | Responds to a command with a corresponding action (e.g., setting an alarm). |
| | | 2 | Executes a sequence of tasks from a single request (e.g., "good night" routine). |
| | | 3 | Suggests actions to achieve specific goals, pending user approval. |
| | | 4 | Makes decisions independently and acts without supervision. |
| | A2. Task Complexity | 1 | Provides scripted responses within a fixed domain (e.g., listing booking websites). |
| | | 2 | Offers domain-specific instructions (e.g., explaining how to book a hotel). |
| | | 3 | Performs some actions directly (e.g., querying hotel availability). |
| | | 4 | Matches the functionality of a single app (e.g., assisting with all aspects of hotel booking). |
| | | 5 | Integrates functionalities of multiple related apps (e.g., a comprehensive travel planner). |
| | | 6 | Fully replaces devices like smartphones, handling tasks across unrelated domains. |
| | A3. Required Mental Shift | 1 | User is open to listening to the assistant's responses. |
| | | 2 | Follows the assistant's suggestions without verifying them. |
| | | 3 | Allows the assistant to perform basic tasks on their behalf. |
| | | 4 | Allows the assistant to handle financial transactions. |
| | | 5 | Allows the assistant to make decisions with legal implications. |
| B. Interaction Capabilities | B1. Conversational Complexity | 0 | No conversation is possible. |
| | | 1 | Simple question-and-answer interactions. |
| | | 2 | Remembers and uses context within conversations. |
| | | 3 | Engages in natural conversations with emotional depth. |
| | | 4 | Initiates conversations with a specific goal in mind. |
| | | 5 | Initiates conversations for purely social purposes. |
| | B2. Input Multimodality | 1 | Understands written text. |
| | | 2 | Processes text generated through speech-to-text technologies. |
| | | 3 | Handles multimedia input (e.g., images and video). |
| | | 4 | Processes natural speech with full perception of vocal elements. |
| | | 5A | Interprets non-verbal cues, such as facial expressions. |
| | | 5B | Interprets biometric signals, like heart rate and blood pressure. |
| | | 6 | Real-time embodiment (e.g., calculates position, perceives depth, and has audiovisual awareness). |
| | | 7 | Operates as a brain-machine interface, decoding brain signals. |
| | B3. Output Multimodality | 0 | Provides no visual or auditory feedback. |
| | | 1A | Basic text generation. |
| | | 1B | Text-to-speech conversion. |
| | | 2 | Produces richer multimedia outputs (e.g., videos). |
| | | 3 | Generates natural speech capturing vocal nuances. |
| | | 4 | Presents as a realistic avatar with a visual and interactive representation. |
| | | 5 | Integrates virtual information into the user's field of view in real-time. |
| | B4. Ubiquity | 1 | Confined to a specific location (e.g., home device like Amazon Echo). |
| | | 2 | Available on portable devices like smartphones. |
| | | 3 | Available on wearable technologies (e.g., smartwatches). |
| | | 4 | Blends seamlessly with the user's cognitive processes (e.g., brain-machine interface). |
| C. Intrinsic Capabilities | C1. Planning and Logical Reasoning | 0 | No logical reasoning ability. |
| | | 1 | Performs basic queries. |
| | | 2 | Makes simple 1- or 2-step inferences. |
| | | 3 | Plans autonomously within a single domain (e.g., specialized AI models like AlphaGo). |
| | | 4 | Plans autonomously within multiple domains simultaneously. |
| | | 5 | Learns and reasons within new domains independently. |
| | | 6 | Discovers and masters new areas of knowledge on its own. |
| | C2. User Knowledge | 0 | Does not retain any specific information about the user. |
| | | 1 | Retains static information about the user (e.g., language preferences). |
| | | 2 | Learns from ongoing interactions and adapts to user habits and preferences over time. |
| | | 3 | Incorporates knowledge of the user's social circle and communities. |
| | | 4 | Learns information unknown to the user (e.g., health conditions indicated by fitness device data). |
| | C3. Ecosystem Integration | 0 | Lacks any external connectivity. |
| | | 1 | Accesses information within a fixed domain or skill set. |
| | | 2 | Chains data from multiple sources or provides an API. |
| | | 3 | Interacts with other digital assistants or human agents. |
| | | 4 | Autonomously discovers and integrates new services to expand functionalities. |
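For readers who want to experiment with the scoring table programmatically, the sketch below encodes the capability levels listed in this appendix and checks a DA profile against the minimum levels a threat would need. The comparison rule (a threat applies when the DA reaches at least the given level on each listed capability, with 5A/5B and 1A/1B treated as equal rank) is an assumption made for illustration, not the study's own tool logic, and the example profile and threat are hypothetical.

```python
# Valid levels per capability, transcribed from the scoring table.
LEVELS: dict[str, tuple[str, ...]] = {
    "A1": ("0", "1", "2", "3", "4"),
    "A2": ("1", "2", "3", "4", "5", "6"),
    "A3": ("1", "2", "3", "4", "5"),
    "B1": ("0", "1", "2", "3", "4", "5"),
    "B2": ("1", "2", "3", "4", "5A", "5B", "6", "7"),
    "B3": ("0", "1A", "1B", "2", "3", "4", "5"),
    "B4": ("1", "2", "3", "4"),
    "C1": ("0", "1", "2", "3", "4", "5", "6"),
    "C2": ("0", "1", "2", "3", "4"),
    "C3": ("0", "1", "2", "3", "4"),
}


def validate(profile: dict[str, str]) -> None:
    """Raise ValueError if the profile uses a capability or level not in the table."""
    for cap, level in profile.items():
        if level not in LEVELS.get(cap, ()):
            raise ValueError(f"{cap}: unknown level {level!r}")


def rank(level: str) -> int:
    """Numeric rank of a level; 'A'/'B' variants share a rank (an assumption)."""
    return int(level.rstrip("AB"))


def meets(profile: dict[str, str], minimums: dict[str, str]) -> bool:
    """True if the profile reaches at least the minimum level on every listed capability."""
    return all(
        cap in profile and rank(profile[cap]) >= rank(minimum)
        for cap, minimum in minimums.items()
    )


# Hypothetical DA profile and hypothetical threat requirements.
example_da = {"A1": "2", "A2": "3", "A3": "3", "B1": "2", "B2": "5A",
              "B3": "1B", "B4": "2", "C1": "2", "C2": "2", "C3": "1"}
validate(example_da)

print(meets(example_da, {"A1": "2", "C3": "2"}))   # False
print(meets(example_da, {"A1": "1", "B2": "5B"}))  # True
```

A stricter or looser matching rule can be substituted in `meets` without changing the level definitions.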
