By Ryan Flores, Philippe Lin, and Roel Reyes

An AI personal computer (PC) is a PC with a central processing unit (CPU), a graphics processing unit (GPU), and a neural processing unit (NPU). The addition of an NPU lets a PC run AI-powered applications efficiently on the local machine, which reduces the need for constant internet connectivity and mitigates the privacy concerns inherent in service- and cloud-based AI solutions.

Local AI models make it possible to customize a model for a particular application and to integrate it into existing workflows. They also allow a web-based or hybrid application to perform part of its processing on the local machine, which saves the application or service provider storage and computing costs compared to a completely online or web-based service.

AI models can have different formats, with the most popular ones being Open Neural Network Exchange (ONNX), PyTorch, Keras, and TensorFlow. In this article, we focus on ONNX, one of the most common AI model formats because of its open standard nature and the support it receives from various organizations such as the Linux Foundation, Microsoft, and several major AI organizations. This support allows ONNX AI models to be used across different software and hardware platforms. Microsoft mentioned ONNX in several sessions at the recently concluded Microsoft Build conference. As it turns out, Phi-3-mini, the small language model (SLM) that will ship on Copilot+ AI PCs, will be in ONNX format.

An ONNX model comprises a graph, which contains nodes and initializers (specifically, the model's weights and biases), as well as flow control elements. Each node in the graph includes inputs, outputs, an ONNX operator (which is defined by an operator set or “opset”), and attributes (which are an operator's fixed parameters). Additionally, ONNX models possess metadata that provides reference information about the model, although this metadata is not enforced within the model's operation.

For example, the popular Ultralytics YOLOv8 model, which is typically used for object detection and image segmentation, includes metadata that lists its detectable classes as shown in Figure 1. However, the model's actual output is a 1x84x8400-dimension matrix that programmers must interpret correctly, aligning the matrix with the relevant labels from the metadata.

Figure 1. The 80 classes detectable by YOLOv8

Note: The metadata is for reference and is not enforced.
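
To illustrate what interpreting the output matrix involves, here is a minimal Python sketch. It assumes the commonly documented YOLOv8 detection layout, in which each of the 8,400 candidate columns holds four box values (center x, center y, width, height) followed by 80 class scores. The function name `decode_candidates`, the threshold, and the toy data are hypothetical, and post-processing such as non-maximum suppression is left out.

```python
from typing import List, Sequence, Tuple

Detection = Tuple[str, float, Tuple[float, ...]]

def decode_candidates(
    output: Sequence[Sequence[float]],
    labels: Sequence[str],
    threshold: float = 0.5,
) -> List[Detection]:
    """Map a (4 + num_classes) x N matrix to labeled detections.

    `output` is the model output with the batch dimension removed, so a
    real YOLOv8 result would be 84 rows by 8400 columns (assumed layout).
    """
    num_classes = len(output) - 4
    if num_classes != len(labels):
        raise ValueError(
            f"model emits {num_classes} classes but {len(labels)} labels were supplied"
        )
    detections: List[Detection] = []
    for col in range(len(output[0])):
        # Rows 4 and onward hold one score per class for this candidate.
        scores = [output[4 + k][col] for k in range(num_classes)]
        best = max(range(num_classes), key=scores.__getitem__)
        if scores[best] >= threshold:
            box = tuple(output[r][col] for r in range(4))
            detections.append((labels[best], scores[best], box))
    return detections

# Toy example: 3 classes and 2 candidates instead of 80 and 8400.
toy_labels = ["person", "bicycle", "car"]
toy_output = [
    [100.0, 50.0],  # center x
    [120.0, 60.0],  # center y
    [40.0, 10.0],   # width
    [80.0, 10.0],   # height
    [0.9, 0.1],     # score for "person"
    [0.05, 0.2],    # score for "bicycle"
    [0.02, 0.3],    # score for "car"
]
print(decode_candidates(toy_output, toy_labels))
```

The only link between a score row and a class name is the position in `labels`. The length check catches a label list of the wrong size, but a reordered or substituted list of the right size would pass unnoticed, which is why the label source needs the same protection as the model.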

Deploying models, particularly ONNX models, on AI PCs or other devices involves two crucial aspects: the model file itself and the logic for processing the model's input and output. Both components must be validated for integrity. Consider the SqueezeNet Object Detection example from the Windows Machine Learning samples.

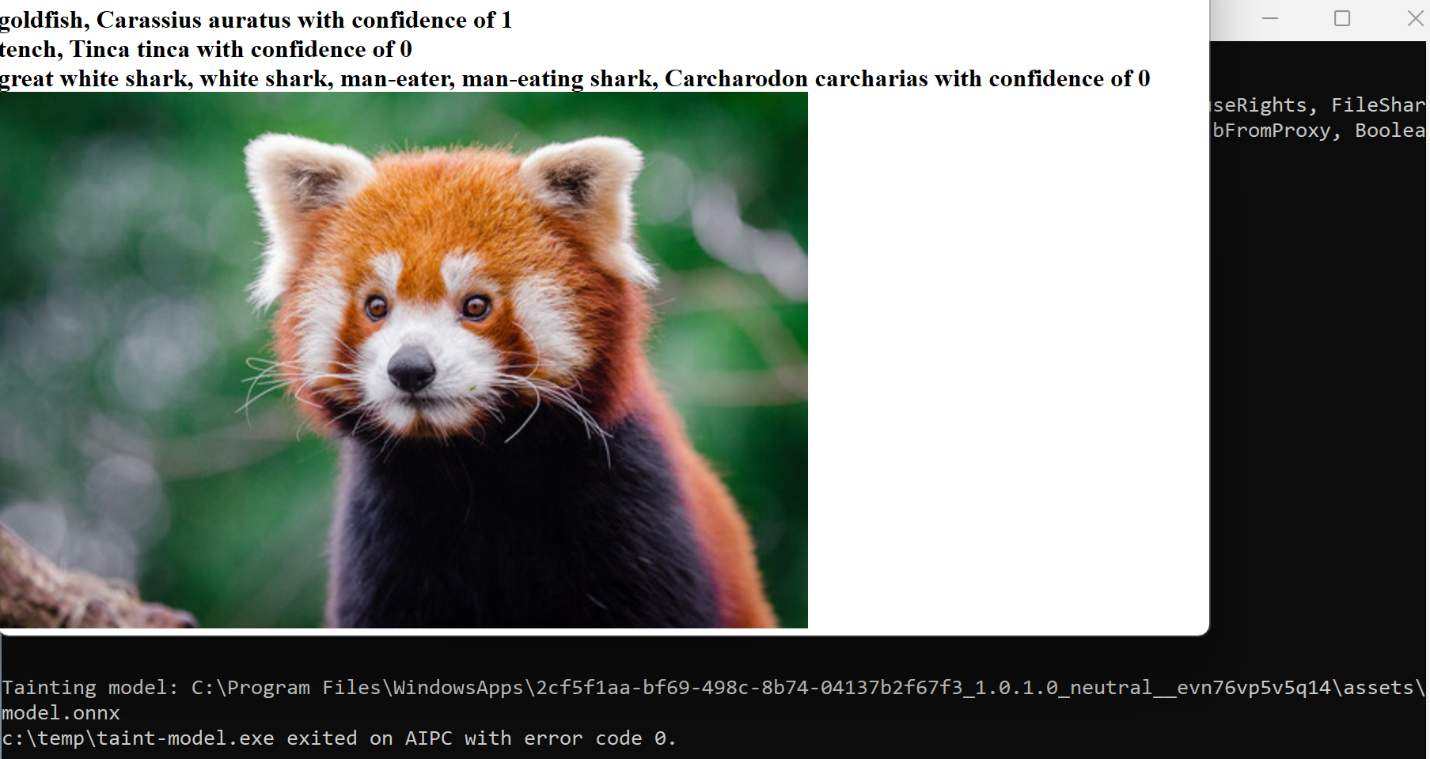

First, the integrity of the model file must be checked to ensure that it has not been altered. Any modification can lead to inaccurate outputs, as depicted in Figures 2 and 3, where altering a single bias drastically changes the model's predictions. In this example, the tainted AI model answers “goldfish” every time, regardless of which animal is in the photo.

Figure 2. Changing one bias makes a huge difference in the model’s response.

Figure 3. With a tampered model, a red panda is detected as a goldfish.

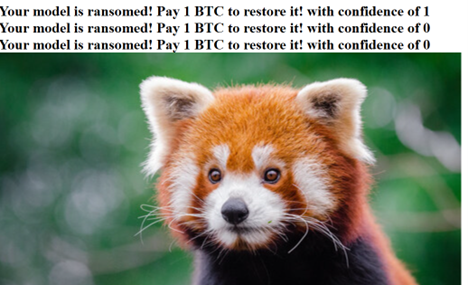
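
The effect of a single altered bias can be reproduced on a toy scale. The sketch below is not SqueezeNet. It assumes a hypothetical final layer that adds a per-class bias to precomputed class scores and returns the label with the largest result; the labels and numbers are made up for illustration.

```python
from typing import Sequence

LABELS = ["goldfish", "red panda", "tabby cat"]  # illustrative labels only

def classify(features: Sequence[float], bias: Sequence[float]) -> str:
    """Add a per-class bias to the class scores and return the top label."""
    logits = [f + b for f, b in zip(features, bias)]
    return LABELS[max(range(len(logits)), key=logits.__getitem__)]

clean_bias = [0.0, 0.0, 0.0]
tainted_bias = [1000.0, 0.0, 0.0]  # a single value changed

red_panda_scores = [0.3, 7.2, 1.1]
tabby_scores = [0.5, 0.9, 6.4]

print(classify(red_panda_scores, clean_bias))    # red panda
print(classify(red_panda_scores, tainted_bias))  # goldfish
print(classify(tabby_scores, tainted_bias))      # goldfish
```

Because the inflated bias dominates every other term, the input no longer matters, and the file on disk differs from the original by only a few bytes.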

Second, the logic handling the model's input and output must be verified. The SqueezeNet example uses a text file with animal labels. If this file is tampered with, the output could be any arbitrary text, as shown in Figure 4.

Figure 4. Output from an altered label file
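
One way to reduce this exposure is to check the label file's shape before trusting it. The following is a minimal sketch, assuming a plain-text file with one label per line; the function name `parse_labels`, the allowed character set, and the 64-character limit are hypothetical choices, not taken from the Windows Machine Learning sample.

```python
import re
from typing import List

# Assumed policy for this sketch: short labels made of letters, digits,
# spaces, and a little punctuation. Adjust to the real label set.
_LABEL_PATTERN = re.compile(r"[A-Za-z0-9 ,.'()\-]{1,64}")

def parse_labels(text: str, expected_count: int) -> List[str]:
    """Return the labels in `text`, or raise ValueError if they look wrong."""
    labels = [line.strip() for line in text.splitlines() if line.strip()]
    if len(labels) != expected_count:
        raise ValueError(f"expected {expected_count} labels, found {len(labels)}")
    for label in labels:
        if not _LABEL_PATTERN.fullmatch(label):
            raise ValueError(f"unexpected characters or length in label: {label!r}")
    return labels

good = "goldfish\nred panda\ntabby cat\n"
print(parse_labels(good, expected_count=3))
```

A format check like this rejects truncated files and injected markup, but it cannot tell a legitimate label from a plausible-looking replacement. Detecting that kind of substitution still requires an integrity check on the file itself.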

So, what’s the big deal? What’s the worst that could happen if the AI application misidentifies animals and labels can be changed arbitrarily? The answer would depend on the use case. AI is being integrated in many devices, technologies, and processes, and the impact of model tainting depends on the application using the AI model.

For example, a manufacturing plant might use an object detection AI model for quality control. If the AI model file responsible for quality control is tainted, the production line won’t be able to spot defects and will ship products that are substandard.

Another example is object detection in security cameras. To save storage space, modern security cameras have the option to start recording only when an activity or object is detected. If the AI model responsible for detection is tainted so that, for example, it misidentifies a burglar as a “goldfish,” the recording function will not be triggered, which creates a security issue.

These scenarios highlight the need to properly identify and protect AI model files and their associated assets, such as labels, from malicious or even unintended tampering. There are common practices to safeguard the models, as well as the input/output logic. To protect model integrity, we can sign and verify the models with sigstore or check the model’s hash digest. For the input/output logic, programmers are advised to validate external files before using them.
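
As an illustration of the hash digest check, the sketch below uses Python's standard `hashlib` module to compare a file's SHA-256 digest with a trusted value before the model is loaded. The function names and the stand-in file contents are hypothetical, and the sketch assumes the trusted digest is delivered through a channel an attacker cannot modify.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path

def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large models need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_model(path: Path, expected_hex: str) -> None:
    """Raise RuntimeError if the file's digest differs from the trusted one."""
    actual = sha256_of_file(path)
    if not hmac.compare_digest(actual, expected_hex.lower()):
        raise RuntimeError(f"{path.name} failed its integrity check")

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        model = Path(tmp) / "model.onnx"  # stand-in bytes, not a real model
        model.write_bytes(b"placeholder weights")
        trusted = sha256_of_file(model)   # in practice, shipped with the app
        verify_model(model, trusted)      # passes silently

        model.write_bytes(b"placeholder weightz")  # one byte altered
        try:
            verify_model(model, trusted)
        except RuntimeError as err:
            print("rejected:", err)
```

A digest stored next to the model in a writable location offers little protection, since whoever can alter the model can alter the digest as well. Signing addresses this by tying the digest to a key or identity that the attacker does not control.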

While Universal Windows Platform (UWP) applications and Windows Store apps are installed in a protected directory (C:\Program Files\WindowsApps) and require special permissions to modify, additional security measures can be beneficial. Trend Micro AI app protection offers an extra layer of security, preventing malicious alterations to AI models, thereby preserving the integrity of AI assets in the AI PC era.

According to Andrew Ng at the Eighth Bipartisan Senate Forum on Artificial Intelligence, AI is now a general-purpose technology. We should therefore treat AI assets, whether they reside on the local machine or are consumed as a service, as critical components to be protected, because their failure will lead to disruption and unintended consequences. And with Microsoft opening the gates for independent software vendors (ISVs) to develop applications that use local AI models on its new Copilot+ AI PCs, having a product that identifies and hardens local AI assets is a major step in AI protection.
