Download the Full Research Paper (PDF)

by Stephen Hilt and Aliakbar Zahravi

Key takeaways

- Red teaming tools, which are essential for security testing, can also be repurposed by malicious actors for cybercriminal activities.

- Although red team tools offer many benefits, their dual-use nature also poses substantial risks, underscoring the need for strong ethical guidelines and effective detection capabilities.

- Cybercriminals can misuse open-source software for malicious purposes, underscoring the importance of stringent security measures and continuous monitoring of supply chains.

- AI can identify and triage potential threats from open-source repositories, drastically reducing analysis time and prioritizing critical projects.

- Evolving red teaming methodologies with proactive detection and constant monitoring of emerging tools will enhance organizational defenses against cyber threats.

Red teaming tools, which organizations use in cyberattack simulations and security assessments, have become an important cybersecurity component for those looking to strengthen organizational defenses. However, because these tools are dual-use by nature, cybercriminals have also been repurposing them for malicious activities.

These tools, while designed for legitimate security testing and improvement, also attract malicious actors looking to enhance their toolkits. They typically offer advanced capabilities such as automated vulnerability discovery, sophisticated penetration testing, and simulated social engineering attacks. In the hands of cybercriminals, these capabilities can raise attack success rates while reducing the time and resources needed to achieve malicious goals. For instance, by abusing tools that automate the exploitation of known vulnerabilities, attackers can compromise systems before patches are applied.

Nation-state actors, who often already possess advanced tools and techniques, can enhance their attacks even further by using red team tools for strategic purposes such as espionage and sabotage. For instance, the threat actor APT41 employs a red teaming tool known as Google Command and Control (GC2), which allows it to abuse services such as Google Sheets and Google Drive for communication between infected devices and the attacker's server. These groups can also customize such tools to target specific organizations, making their attacks even more effective.

Abusing red teaming tools and repositories for malicious purposes

GitHub serves as an indispensable tool for collaborative software development and an important resource for cybersecurity professionals. For red teamers, GitHub offers access to constantly updated repositories of collective knowledge and resources, including a wide range of tools used to conduct security assessments.

Malicious actors typically integrate red team tools (often found on, but not limited to, GitHub) in a variety of ways. The most straightforward is using the complete project as is, without changing the actual code. However, if the repository is well known and therefore widely detected, malicious actors might change the delivery method instead, for example by using a custom loader that carries the primary payload in encrypted form and loads it into memory.

Furthermore, repositories can be used as a starting point or template, with the malicious actor adding custom code as needed. This often includes applying obfuscation techniques, such as string obfuscation or Windows API call hashing, to evade detection. They may also streamline the tool by removing unnecessary functions.

GitHub repositories also serve as valuable resources for knowledge sharing and collaboration. Some projects document advanced red team techniques, such as process doppelgänging, which injects code by abusing the NTFS transaction mechanism in Windows.

One of the most alarming cybercrime trends in recent years is the rise of supply chain attacks, especially those that target codebases. According to a report by the European Union Agency for Cybersecurity (ENISA), 39% to 62% of organizations were affected by third-party cyber incidents. Furthermore, several recent high-profile supply chain cyberattacks, such as Apache Log4j and SolarWinds Orion, highlight the significant costs and risks associated with these attacks.

Developers often use third-party components to streamline development and make it more efficient. However, this introduces potential weak points in the pipeline: attackers can exploit the less secure elements of an organization's supply chain by targeting trusted components, such as third-party vendors or software repositories. Payloads can be derived from open-source projects and are often delivered through complex, multi-layered obfuscation. In these scenarios, malicious actors use various methods to distribute malicious code, including typosquatting, dependency confusion, and submitting malicious merge requests to legitimate Git repositories.
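Typosquatting relies on package or repository names that sit one or two keystrokes away from a trusted name. As a minimal defensive sketch, assuming you maintain your own list of trusted dependency names (the `TRUSTED` list below is hypothetical), a plain edit-distance check can flag lookalike names for review:

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum insertions, deletions, and substitutions to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]


def possible_typosquats(candidate: str, trusted: list[str], max_distance: int = 2) -> list[str]:
    """Return trusted names that `candidate` closely resembles without matching exactly."""
    name = candidate.lower()
    return [
        t for t in trusted
        if t.lower() != name and levenshtein(name, t.lower()) <= max_distance
    ]


# Hypothetical allowlist of dependencies a team actually uses.
TRUSTED = ["requests", "numpy", "pandas", "urllib3"]

print(possible_typosquats("reqeusts", TRUSTED))  # ['requests']
print(possible_typosquats("requests", TRUSTED))  # []
```

The threshold of 2 is an arbitrary choice; very short names will produce more false positives, so a real check would likely scale the threshold with name length.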

Repository trends and AI-based identification techniques

Given the rapid release and updating of repositories in GitHub, security researchers need to monitor the site to stay informed of the latest developments in malware and red teaming tools.

By determining which repositories are trending, security researchers and SOC teams can pinpoint which tools and techniques are potentially being used by malicious actors. These repositories can be identified by characteristics such as star and fork counts, which indicate heightened community interest, as well as by increased discussion in underground forums.
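As a rough sketch of ranking repositories by community interest, assume you have recorded star and fork counts at two points in time (for example, from periodic GitHub API snapshots) plus your own count of underground-forum mentions. A weighted growth score can then order candidates. The field names and weights below are hypothetical and would need tuning:

```python
from dataclasses import dataclass


@dataclass
class RepoSnapshot:
    name: str
    stars_then: int   # stars at the start of the observation window
    stars_now: int    # stars at the end of the window
    forks_then: int
    forks_now: int
    forum_mentions: int = 0  # hypothetical: mentions found in monitored forums


def trend_score(s: RepoSnapshot, fork_weight: float = 2.0, mention_weight: float = 5.0) -> float:
    """Weighted growth in stars and forks, plus forum mentions, over the window."""
    star_growth = max(s.stars_now - s.stars_then, 0)
    fork_growth = max(s.forks_now - s.forks_then, 0)
    return star_growth + fork_weight * fork_growth + mention_weight * s.forum_mentions


def top_trending(snapshots: list[RepoSnapshot], n: int = 10) -> list[RepoSnapshot]:
    return sorted(snapshots, key=trend_score, reverse=True)[:n]


repos = [
    RepoSnapshot("org/a", 100, 150, 10, 12),                # 50 + 4 = 54
    RepoSnapshot("org/b", 1000, 1010, 50, 50),              # 10
    RepoSnapshot("org/c", 5, 40, 0, 10, forum_mentions=3),  # 35 + 20 + 15 = 70
]
print([r.name for r in top_trending(repos, n=2)])  # ['org/c', 'org/a']
```

Forks are weighted above stars here on the assumption that forking signals intent to reuse code. This is a judgment call, not a finding from the research.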

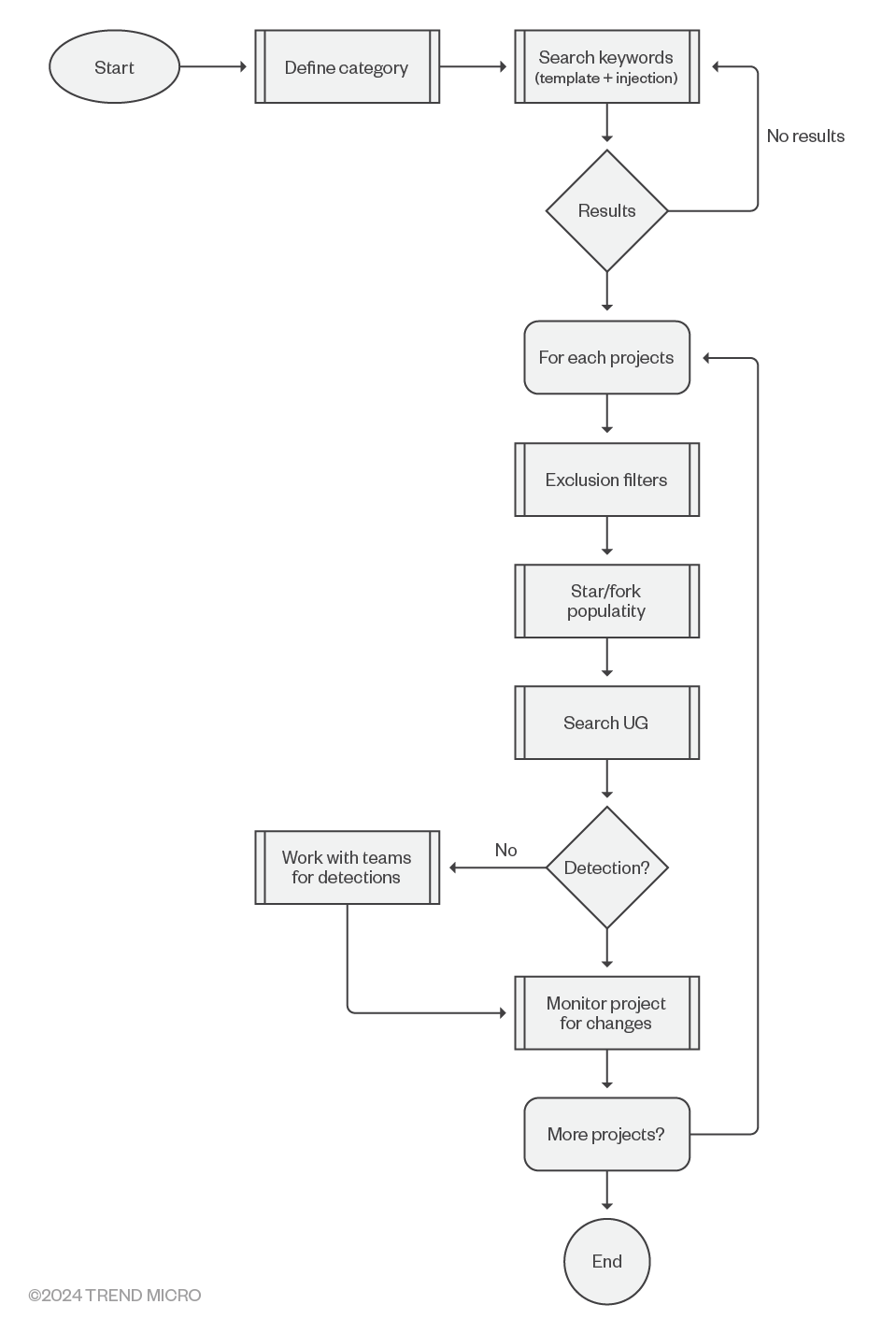

During our research into trending repositories, we found that simple topic-based search queries were inadequate: many GitHub repositories lack topics, tags, or even descriptions, so relying solely on topic modifiers caused us to miss them.

Instead, we analyzed repositories within each specific category, identifying common patterns that linked them together. Through this improved methodology, we were able to refine our queries and incorporate them into our automation system.

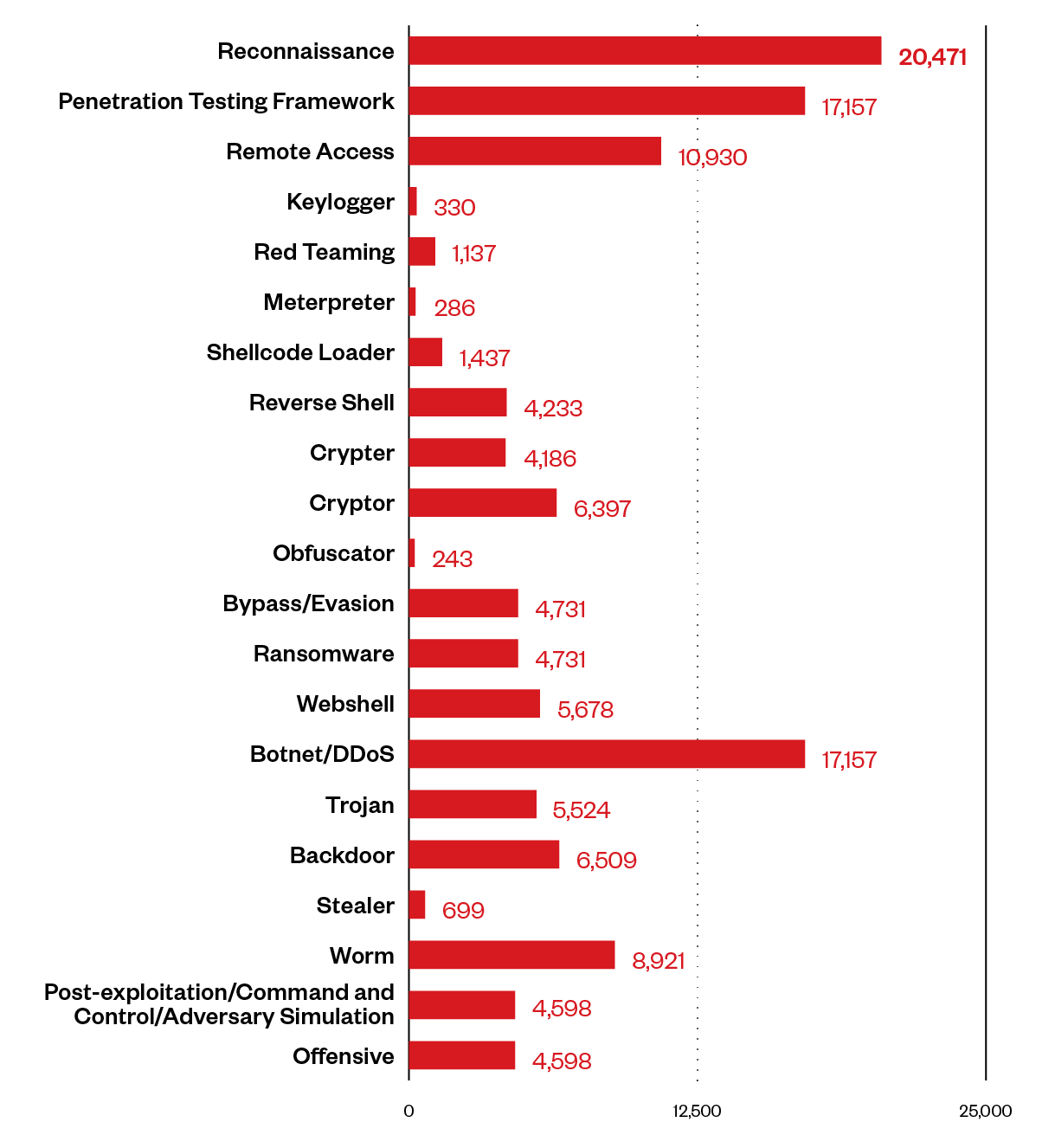

Figure 1. Offensive security tool repositories used in malicious projects categorized by topics
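The shift from topic-only queries to pattern-based queries can be sketched as follows. GitHub's repository search supports the `in:name,description` and `in:readme` qualifiers along with `stars:` and `pushed:` filters, so phrases learned from each category can match repositories that have no topics at all. The category names, phrases, and default date below are hypothetical placeholders, not the patterns used in the research:

```python
from urllib.parse import urlencode

SEARCH_ENDPOINT = "https://api.github.com/search/repositories"

# Hypothetical category -> phrase patterns. In practice these would be derived
# from repositories already known to belong to each category.
CATEGORY_PATTERNS = {
    "c2": ["command and control", "c2 framework"],
    "evasion": ["amsi bypass", "edr evasion"],
}


def build_queries(patterns: dict[str, list[str]], min_stars: int = 10,
                  pushed_since: str = "2024-01-01"):
    """Yield (category, query) pairs that search names, descriptions, and READMEs
    rather than relying on the often-missing topic: qualifier."""
    for category, phrases in patterns.items():
        for phrase in phrases:
            for scope in ("in:name,description", "in:readme"):
                yield category, f'"{phrase}" {scope} stars:>{min_stars} pushed:>{pushed_since}'


def to_search_url(query: str, per_page: int = 100) -> str:
    """Encode a query for the REST search endpoint, most-starred results first."""
    params = {"q": query, "sort": "stars", "order": "desc", "per_page": per_page}
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


for category, query in build_queries(CATEGORY_PATTERNS):
    print(category, to_search_url(query))
```

Actually fetching these URLs (authentication, pagination, and rate limits) is left out of this sketch.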

Using AI to identify trending repositories

We used artificial intelligence (AI) as part of our repository research. This approach reduced the workload required to evaluate thousands of GitHub projects.

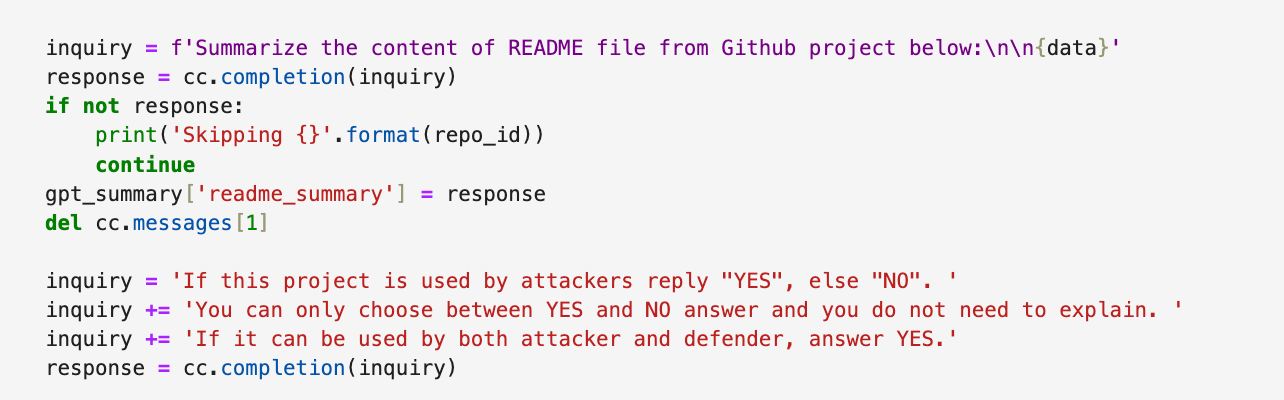

Using AI allowed us to automate the assessment of GitHub projects for potential malicious use. We created prompts instructing the AI to extract README files from their respective repositories and then analyze them to identify potentially harmful projects. Using large language models (LLMs), we sifted through the README files for keywords and phrases linked to possible offensive security tools or malicious intent. We then manually reviewed the flagged projects, filtering out defensive tools and focusing on those that needed closer examination.

Figure 2. Prompts used to summarize README files
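A minimal sketch of the README triage step, assuming a generic `llm` callable that takes a prompt string and returns the model's text (a placeholder for whichever LLM client you use; no specific vendor API is implied). The prompt wording is hypothetical and is not the prompt shown in Figure 2. Answers that cannot be parsed are routed to a human rather than discarded:

```python
import json
from typing import Callable

# Hypothetical prompt; it does not reproduce the prompts used in the research.
PROMPT_TEMPLATE = (
    "You are a threat analyst. Read the README below and reply with JSON only, using the keys "
    '"summary" (one sentence), "offensive" (true if the project could serve as an offensive '
    'security tool or be used for malicious purposes), and "defensive" (true if it is '
    "primarily a defensive or detection tool).\n\nREADME:\n{readme}"
)


def triage_readme(repo: str, readme: str, llm: Callable[[str], str], max_chars: int = 8000) -> dict:
    """Classify one README with an LLM and return a verdict dict tagged with the repo name."""
    raw = llm(PROMPT_TEMPLATE.format(readme=readme[:max_chars]))  # truncate long READMEs
    try:
        verdict = json.loads(raw)
    except json.JSONDecodeError:
        verdict = None
    if not isinstance(verdict, dict):
        # Unparseable answers go to a human instead of being silently dropped.
        verdict = {"summary": raw[:200], "offensive": True, "defensive": False, "parse_error": True}
    verdict["repo"] = repo
    return verdict


def needs_manual_review(verdicts: list[dict]) -> list[dict]:
    """Keep projects flagged as offensive, filtering out primarily defensive tools."""
    return [v for v in verdicts if v.get("offensive") and not v.get("defensive")]


def stub_llm(prompt: str) -> str:
    # Stand-in for a real model call, keyed on README content.
    if "keylogger" in prompt:
        return '{"summary": "Captures keystrokes.", "offensive": true, "defensive": false}'
    if "YARA" in prompt:
        return '{"summary": "Detection rules.", "offensive": false, "defensive": true}'
    return "Sorry, I cannot tell."


verdicts = [
    triage_readme("org/keys", "A keylogger for Windows", stub_llm),
    triage_readme("org/rules", "YARA rules for C2 beacons", stub_llm),
    triage_readme("org/misc", "Misc scripts", stub_llm),
]
print([v["repo"] for v in needs_manual_review(verdicts)])  # ['org/keys', 'org/misc']
```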

AI also helped generate comprehensive threat analyst reports for each project. These reports provided in-depth insights into the project's codebase, functionality, and security implications, and included the project summary, code analysis, usage context, and community activity.
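One way to structure such a report is one LLM call per section, collected into a fixed schema. This sketch again assumes a generic, hypothetical `llm` callable and hypothetical section instructions; only the four section names come from the description above:

```python
from dataclasses import dataclass, fields
from typing import Callable

# Hypothetical per-section instructions; one LLM call per report section.
SECTION_PROMPTS = {
    "project_summary": "Summarize what this project does.",
    "code_analysis": "Describe notable functionality in the code and its security implications.",
    "usage_context": "Explain how the project could be used, legitimately or maliciously.",
    "community_activity": "Summarize stars, forks, contributors, and recent activity.",
}


@dataclass
class ThreatReport:
    repo: str
    project_summary: str
    code_analysis: str
    usage_context: str
    community_activity: str

    def to_markdown(self) -> str:
        parts = [f"# Threat analyst report: {self.repo}"]
        for f in fields(self)[1:]:  # every field after `repo` is a report section
            parts.append(f"## {f.name.replace('_', ' ').title()}\n{getattr(self, f.name)}")
        return "\n\n".join(parts)


def build_report(repo: str, context: str, llm: Callable[[str], str]) -> ThreatReport:
    """Fill each section with a separate LLM call over the same project context."""
    sections = {
        key: llm(f"{instruction}\n\nProject: {repo}\n\n{context}")
        for key, instruction in SECTION_PROMPTS.items()
    }
    return ThreatReport(repo=repo, **sections)


def echo_llm(prompt: str) -> str:
    return prompt.splitlines()[0]  # stand-in model: echoes the instruction line


report = build_report("org/tool", "README and file listing go here", echo_llm)
print(report.to_markdown())
```

Separate calls per section keep each prompt focused, at the cost of more model requests per project.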

By employing these AI-driven methods, we were able to effectively filter and analyze a large number of GitHub projects, significantly lightening the workload for human threat analysts.

Figure 3. Flow chart of the triage process used to determine the most important projects for evaluation
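A triage flow like this can be expressed as a chain of filtering stages that records how many projects survive each step, which shows where the analyst workload drops. The stages below (README present, flagged as offensive, not primarily defensive, top N by stars) are an illustrative assumption and do not reproduce Figure 3:

```python
from typing import Callable

Project = dict
Stage = tuple[str, Callable[[list[Project]], list[Project]]]


def default_stages(top_n: int = 20) -> list[Stage]:
    """Hypothetical triage stages, applied in order."""
    return [
        ("has README", lambda ps: [p for p in ps if p.get("readme")]),
        ("flagged offensive", lambda ps: [p for p in ps if p.get("offensive")]),
        ("not defensive", lambda ps: [p for p in ps if not p.get("defensive")]),
        ("top by stars", lambda ps: sorted(ps, key=lambda p: p.get("stars", 0), reverse=True)[:top_n]),
    ]


def run_triage(projects: list[Project], stages: list[Stage]) -> tuple[list[Project], list[tuple[str, int]]]:
    """Apply each stage in turn; return the survivors and a (stage, count) funnel."""
    funnel = [("collected", len(projects))]
    for name, stage in stages:
        projects = stage(projects)
        funnel.append((name, len(projects)))
    return projects, funnel


projects = [
    {"name": "a", "readme": "x", "offensive": True, "defensive": False, "stars": 50},
    {"name": "b", "readme": "", "offensive": True, "defensive": False, "stars": 500},
    {"name": "c", "readme": "x", "offensive": True, "defensive": True, "stars": 300},
    {"name": "d", "readme": "x", "offensive": True, "defensive": False, "stars": 200},
    {"name": "e", "readme": "x", "offensive": False, "defensive": False, "stars": 900},
]
shortlist, funnel = run_triage(projects, default_stages(top_n=1))
print([p["name"] for p in shortlist])  # ['d']
print(funnel)
```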

Managing and categorizing red teaming resources

Paid services such as Malsearch, a search engine designed for red teaming code, help organize and categorize red teaming resources, giving cybersecurity professionals a way to find what they need among a vast amount of information.

Conclusion

Integrating red teaming techniques into the cybersecurity playbook has been an important step in helping organizations strengthen their defenses, allowing them to identify potential security gaps through simulated adversarial attacks. However, the dual-use nature of these tools also poses risks, as malicious actors can repurpose them for nefarious ends.

AI and machine learning offer promising ways to enhance detection and response by efficiently triaging potential threats from open-source repositories. AI-driven processes drastically reduce analysis time and prioritize important projects, allowing for a rapid and effective response to potential attacks.

It is essential for red teaming methodologies to evolve continuously alongside proactive detection and ethical considerations. Shifting from a reactive approach, in which tools are addressed once they become popular among cybercriminals, to a proactive stance that involves constant monitoring for emerging and high-risk tools will help organizations better protect themselves from cyber threats.

For a deeper dive into red team tools and the rest of our research, read our paper titled Red Team Tools in the Hands of Cybercriminals and Nation States: Developing a New Methodology for Managing Open-Source Threats.
