Today’s Cloud and Container Misconfigurations Are Tomorrow’s Critical Vulnerabilities

By Alfredo Oliveira

It’s projected that by 2027, more than 90% of global organizations will be running containerized applications, while 63% of enterprises had already adopted a cloud-native strategy in 2023. The initial expectation was that this shift to the cloud would merely be an infrastructure change, where traditional security principles would still apply.

Back then, malware and exploits were the primary threats that IT and system administrators, developers, and operations teams had to contend with. For cybersecurity professionals, dissecting malware in targeted attacks and scrutinizing exploits during penetration tests were standard practices and required no substantial changes to their approaches.

These assumptions are far from the truth. An enterprise’s move to the cloud and its use of container technologies introduce a plethora of variables, complicating the balance between operational needs and security priorities. For example, managing technical aspects such as network configurations and strategic elements like compliance and time to market becomes increasingly difficult. These challenges expose organizations to a stealthy yet costly threat that is rapidly becoming the new critical vulnerability in the cloud: misconfigurations.

The evolving paradigms of cloud security

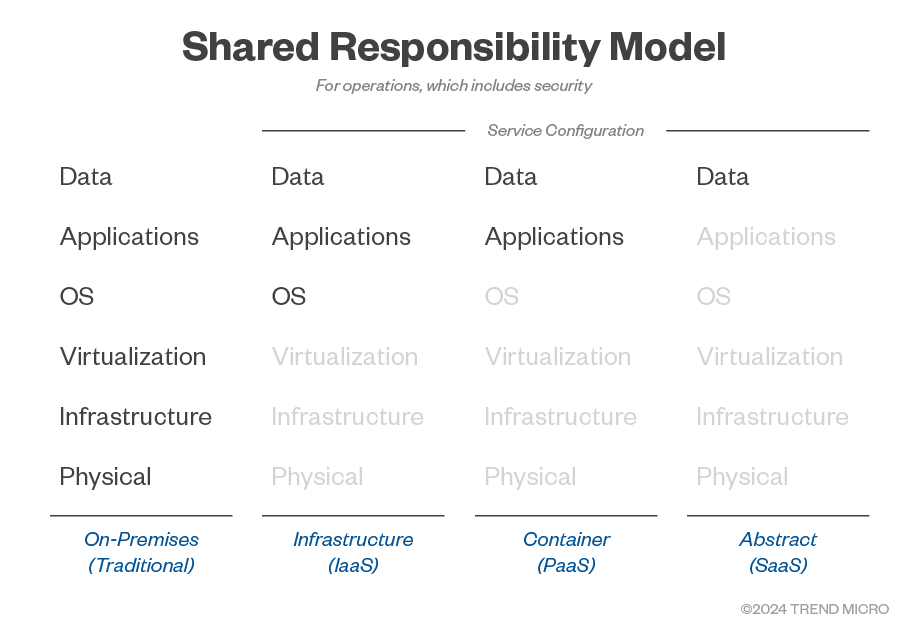

Cloud service providers (CSPs) have adopted a shared responsibility model that delineates the security responsibilities between the provider and the customers. Essentially, the CSP is responsible for the security “of the cloud,” which includes the infrastructure, hardware, and software of the cloud services. On the other hand, customers are responsible for security “in the cloud,” which encompasses data, applications, and user access.

CSPs ensure that the physical infrastructure, including servers, storage devices, and network components, is secure. This includes measures like firewalls, physical access controls, and regular security audits. Depending on the service and shared responsibility model, the CSP is also responsible for patching operating systems.

The customers, among other things, are responsible for their own data within the cloud. This involves implementing encryption, data loss prevention strategies, and access controls. For example, while the cloud platform provides encryption at rest and in transit, it is up to the customer to ensure that their data is encrypted before being uploaded to the cloud, and that the encryption keys are managed effectively.

Figure 1. How a cloud shared responsibility model defines the CSP and customer’s accountabilities

Variations in cloud shared responsibility models

The shared responsibility model varies depending on the type of cloud service being used:

- Infrastructure as a service (IaaS): The CSP provides the basic infrastructure, including virtual machines, storage, and networks. The customer is responsible for the operating systems, applications, and data.

- Platform as a service (PaaS): The CSP manages the underlying infrastructure and the platform that runs applications, while the customer handles the data and application logic. For example, the CSP manages the servers up to the operating system level, networks, and storage for container services within the cloud, while customers are responsible for application code and data security.

- Software as a service (SaaS): The CSP manages almost everything, from the infrastructure to the application itself. Customers are primarily responsible for their data and user access when using, for example, serverless services.

The attack surface is also changing as more cloud services are adopted. Physical attacks have become largely irrelevant to customers. From the moment a customer starts using cloud services, the CSP takes full responsibility for preventing and mitigating any physical access that would allow an attacker to exploit a local physical vulnerability. Likewise, under the SaaS model the CSP manages and secures the application itself, which means that OS vulnerabilities are managed and patched by the CSP.

When all security measures, including patches, are implemented effectively, the services become much harder for attackers to reach. As CSPs take on more responsibility for security, the overall attack surface decreases. This shift allows CSPs to enforce more secure platforms, which reduces the room for customer misconfigurations.

Service exposure is another key point worth highlighting. Most CSPs apply the principle of least privilege to their services by default, and customers must explicitly change those settings to loosen them. Leaving a vulnerable application exposed to the internet is harder when you must go the extra mile to make it public and accessible.

These measures don’t eliminate exposure to vulnerabilities. This is especially true under the IaaS model, where customers have considerable freedom to loosen security. It is their responsibility, after all, and they can expose their applications and even their whole OS, which can still be affected by old or newly discovered vulnerabilities. However, when an application is designed and developed as a cloud-native service (PaaS or SaaS), the CSP can enforce security best practices, which further reduces both the number of vulnerabilities and their exploitability. There are fewer files and libraries to exploit or infect, and the environments are more short-lived. Although traditional vulnerabilities become less likely, that doesn’t mean there are no security issues. What’s left then?

Most of the cloud-related security incidents we see have something in common: misconfiguration.

When enforced security policies clash with usability and configuration complexity, customers tend to work around established best practices. Migrating to the cloud without cloud security expertise tends to loosen security policies.

Take chmod 777 as an example. It is a command that grants overly permissive access to resolve permission issues. Many system administrators, even the most experienced ones, use chmod 777 as a quick fix to make a website work. It allows everyone to read, write, and execute the file or directory, with no regard for the security implications.
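To make the risk concrete, here is a small Python sketch that uses only the standard library. It decodes the nine permission bits behind a mode such as 777 and flags write access for "others", which is the bit that lets any local user modify the file. `describe_mode` and `is_world_writable` are illustrative helpers, not part of any existing tool.

```python
import stat

# Owner, group, and "others" permission bits, in the order `ls -l` prints them.
_PERMISSION_BITS = [
    (stat.S_IRUSR, "r"), (stat.S_IWUSR, "w"), (stat.S_IXUSR, "x"),
    (stat.S_IRGRP, "r"), (stat.S_IWGRP, "w"), (stat.S_IXGRP, "x"),
    (stat.S_IROTH, "r"), (stat.S_IWOTH, "w"), (stat.S_IXOTH, "x"),
]


def describe_mode(mode: int) -> str:
    """Render the permission bits of `mode` as an ls-style string, e.g. 'rwxr-xr-x'."""
    return "".join(char if mode & bit else "-" for bit, char in _PERMISSION_BITS)


def is_world_writable(mode: int) -> bool:
    """True if every user on the system may modify the file (the risky part of 777)."""
    return bool(mode & stat.S_IWOTH)
```

For example, `describe_mode(0o777)` returns `'rwxrwxrwx'`, while `describe_mode(0o755)` returns `'rwxr-xr-x'`. The second is a common, less permissive alternative that keeps write access with the owner only.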

A similar pattern can be seen with cloud services today. Users frequently grant full access to resolve issues. This could be a pragmatic choice, driven by the need to support Agile development, test and troubleshoot issues, automate pipelines, or manage dispersed teams that require broad access to perform their tasks effectively. Moreover, when data from multiple internal and external sources needs to be consolidated into a cloud-based platform, limited permissions become an obstacle.

However, enterprises often forget the importance of balancing operational agility with security, whether due to a lack of proper governance, a lack of security awareness, or the rapid pace of technological change. Whether it’s a checkbox that enables unrestricted access or an overly permissive identity and access management (IAM) policy, the mindset remains: If it works, it works. This approach, however, compromises security and exposes the system to potential vulnerabilities.

Examples of cloud/container misconfigurations

- Amazon Web Services (AWS) S3 buckets: A common misconfiguration is setting an S3 bucket policy to allow public read/write access to quickly share data. This opens the data to anyone on the internet, leading to potential data breaches.

- IAM roles: Granting an “owner” or “admin” role to all users in a cloud project to avoid permission issues can lead to security risks. This practice allows every user to perform any action within the project, increasing the risk of accidental or malicious changes.
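Both patterns can be caught with simple checks on the policy document itself. The sketch below is a heuristic, not a policy evaluator. It assumes policies follow the standard AWS JSON policy shape (`Statement`, `Effect`, `Principal`, `Action`, `Condition`). It flags Allow statements that are open to any principal (`"*"`) without conditions, and Allow statements that grant wildcard actions such as `"*"` or `"s3:*"`. It ignores `NotAction`, `NotPrincipal`, and account-level controls such as S3 Block Public Access. The function names and example policies are illustrative.

```python
from typing import Any


def _as_list(value: Any) -> list:
    """Policy fields may hold a single value or a list of values."""
    return value if isinstance(value, list) else [value]


def _is_public(principal: Any) -> bool:
    """True for Principal "*" or {"AWS": "*"}, i.e., anyone on the internet."""
    if principal == "*":
        return True
    return isinstance(principal, dict) and "*" in _as_list(principal.get("AWS", []))


def risky_statements(policy: dict[str, Any]) -> list[str]:
    """Flag Allow statements that are public or grant every action (heuristic only)."""
    findings = []
    for index, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements only ever narrow access
        if _is_public(stmt.get("Principal")) and "Condition" not in stmt:
            findings.append(f"statement {index}: public principal without conditions")
        actions = _as_list(stmt.get("Action", []))
        if any(action == "*" or action.endswith(":*") for action in actions):
            findings.append(f"statement {index}: wildcard action {actions}")
    return findings


# Example: a bucket opened for public read/write, and an "admin for everyone" policy.
public_bucket_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": "*",
        "Action": ["s3:GetObject", "s3:PutObject"],
        "Resource": "arn:aws:s3:::example-bucket/*",
    }],
}
admin_policy = {
    "Version": "2012-10-17",
    "Statement": {"Effect": "Allow", "Action": "*", "Resource": "*"},
}
```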

It is common to find open projects on GitHub whose deployment and installation files are too permissive, or Stack Overflow answers that solve a problem by sharing a configuration snippet with excessive permissions.

The issue is exacerbated when vendors include such overly permissive configuration files in their documentation and code snippets. In network configuration files, for example, vendors commonly offer examples in which a process, API, or daemon binds to 0.0.0.0 (all network interfaces) by default. Depending on the environment and firewall rules, the service would then be exposed to anyone on the internet.

Figure 2. An example of how a configuration file provides excessive permissions
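A safer pattern for the 0.0.0.0 default described above is to bind to the loopback address and require an explicit decision before listening on every interface. The following Python sketch uses only the standard library and refuses wildcard addresses such as 0.0.0.0 and `::`. `open_listener` and `binds_all_interfaces` are hypothetical helpers, not part of any vendor's tooling.

```python
import ipaddress
import socket


def binds_all_interfaces(host: str) -> bool:
    """True for the wildcard addresses 0.0.0.0 and ::, which listen on every interface."""
    return ipaddress.ip_address(host).is_unspecified


def open_listener(host: str = "127.0.0.1", port: int = 0) -> socket.socket:
    """Open a TCP listener, defaulting to loopback so other hosts cannot reach it."""
    if binds_all_interfaces(host):
        raise ValueError(f"refusing to bind {host}: it would listen on every interface")
    family = socket.AF_INET6 if ipaddress.ip_address(host).version == 6 else socket.AF_INET
    sock = socket.socket(family, socket.SOCK_STREAM)
    try:
        sock.bind((host, port))  # port 0 lets the OS pick a free port
        sock.listen()
    except OSError:
        sock.close()
        raise
    return sock
```

With this design, exposing a service becomes a deliberate, reviewable choice of a specific address. Firewall or security group rules would still need to restrict who can connect.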

Misconfigurations: The new vulnerability in cloud and container environments

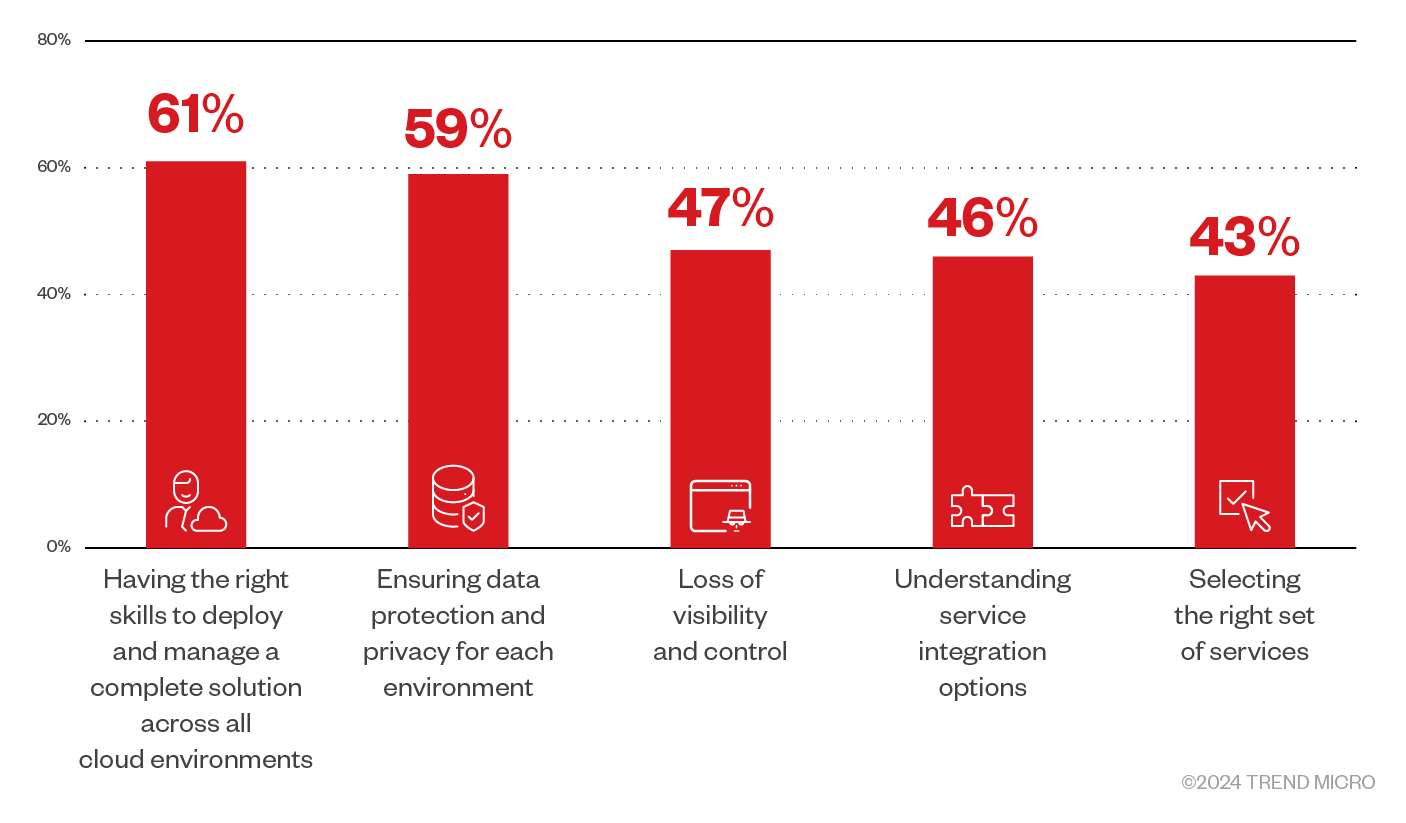

The increased complexity of modern cloud and container environments contributes significantly to the prevalence of misconfigurations. For instance, many companies are adopting multicloud strategies. Enterprise Strategy Group’s (ESG) 2023 Cloud Detection and Response Survey found that 69% of organizations used at least three different CSPs, while 83% had migrated their production applications to the cloud. This migration continues to accelerate, and every CSP has its own set of configurations and security controls, so security teams find it challenging to maintain consistent security postures across all platforms. And as companies increasingly rely on multiple CSPs, the need for robust cloud detection and response (CDR) solutions that support scalability and process automation becomes more critical.

Figure 3. Organizations increasingly adopt a multicloud strategy with several concerns

(Source: 2023 Trend Micro Cloud Security Report)

Rapid deployment and DevOps practices without security in mind

The push for rapid deployment cycles in DevOps practices exacerbates the risk of misconfigurations. The same ESG report noted that most organizations are adopting DevOps methodologies extensively, which contributes to frequent software releases without adequate security checks. This often results in configurations that focus on functionality while neglecting security, leaving gaps that can be exploited by attackers.

The ESG survey also reported that in the context of DevOps, 85% of organizations deploy new builds to production at least once per week. This rushed deployment schedule increases the likelihood of misconfigurations. The rapid cadence of software pushes, driven by business demands, often outpaces the security teams’ ability to thoroughly vet and secure configurations.

Here are some examples of common cloud and container misconfigurations:

- Unauthenticated API: Interfaces that don’t have authentication measures could allow anyone to access and manipulate data.

- Open storage bucket: Cloud storage buckets configured to allow public access could expose sensitive data.

- Default password in production deployment: Using default credentials in a live environment, which are easily guessable and widely known, makes it easy for attackers to gain unauthorized access.

- Unencrypted data in transit: Data sent over the network without encryption is exposed to interception.

- Excessive permissions: Granting users or services more permissions than necessary increases the risk of misuse.

- Unrestricted inbound ports: Leaving unnecessary ports open could enable attackers to misuse them to gain access to the system.

- Misconfigured security groups: Security groups with overly permissive rules could allow unwanted traffic.

- Exposed management consoles: Management interfaces exposed to the internet without proper access controls could become targets for unauthorized access.

- Outdated software and dependencies: Running old versions of software that have known vulnerabilities leaves systems open to exploits and attacks that could compromise data and functionality.

- Lack of network segmentation: Failing to segment the network allows an attacker to move laterally more easily, increasing the risk of widespread compromise.
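Several of these checks are easy to automate. The sketch below covers unrestricted inbound ports and overly permissive security groups. It flags rules that admit the entire internet (0.0.0.0/0 or ::/0) on any port outside an allow-list of intentionally public ports. The `IngressRule` shape and the default allow-list of ports 80 and 443 are illustrative assumptions, not any provider's API. A fuller check would also consider ranges that are narrower but still broad.

```python
import ipaddress
from dataclasses import dataclass

# Ports that are meant to be reachable from anywhere (an assumption; adjust per service).
PUBLIC_PORTS = frozenset({80, 443})


@dataclass(frozen=True)
class IngressRule:
    """Hypothetical, provider-neutral shape of one inbound firewall or security group rule."""
    cidr: str
    from_port: int
    to_port: int


def exposes_internet(rule: IngressRule, public_ports: frozenset = PUBLIC_PORTS) -> bool:
    """True if the rule admits every source address on at least one non-public port."""
    if ipaddress.ip_network(rule.cidr).prefixlen != 0:
        return False  # only 0.0.0.0/0 and ::/0 cover the whole internet
    return any(port not in public_ports for port in range(rule.from_port, rule.to_port + 1))
```

For instance, SSH (port 22) open to 0.0.0.0/0 is flagged, while HTTPS on port 443 open to the same range is not.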

These are the potential outcomes when misconfigurations are successfully abused or exploited:

- Data breaches: Unauthorized access to sensitive data could allow attackers to expose and misuse confidential information.

- Account hijacking: Attackers could gain control of accounts to perform malicious activities.

- Cryptojacking: Attackers could gain unauthorized use of computing resources to mine for cryptocurrency, draining resources and hampering performance.

- Denial of service (DoS): Overwhelming services could render them unavailable, disrupting access to legitimate users.

- Data loss: Deletion or corruption of critical data could result in the irreversible loss of information.

- Compliance violations: Failing to protect data could lead to breaches of regulatory requirements, incurring fines and legal repercussions.

- Escalated privileges: Attackers gaining higher levels of access could exploit more critical systems and data.

- Service disruption: Compromised services could interrupt business operations and productivity.

- Intellectual property theft: Stealing proprietary information could enable attackers to exploit trade secrets and undermine a company’s competitive standing.

- Financial loss: Costs from remediation efforts and potential brand damage could lead to direct and indirect financial losses.

Attackers exploiting misconfigurations

Attackers are acutely aware of the prevalence of misconfigurations and are actively exploiting them in the wild. Trend Micro’s research illustrates the extent of these exploitations:

- Kong API gateway misconfigurations: Our study highlights how attackers exploited misconfigured Kong API gateways to gain unauthorized access to APIs and sensitive data. These misconfigurations, often involving lack of authentication and insufficient access controls, allowed attackers to manipulate API traffic and extract valuable information.

- Apache APISIX exploitation: Another study on Apache APISIX showed how attackers abuse default and misconfigured settings to infiltrate systems. These weaknesses often stemmed from administrators not changing the default settings or failing to properly secure the API gateways, leading to significant security breaches.

Misconfigurations vs. traditional vulnerabilities

Misconfigurations are often more prevalent than traditional vulnerabilities because they occur so easily. A single misconfiguration can expose an entire application or dataset, whereas exploiting traditional vulnerabilities typically requires more sophisticated techniques. According to the ESG report, nearly all organizations experienced a cloud security incident in the past year, with many incidents tied to misconfigurations. Telus’ 2023 “State of Cloud Security” report corroborated this, noting that 74% of organizations experienced at least one significant security incident due to a misconfiguration.

Though not always the case, exploiting misconfigurations requires less technical expertise compared with traditional vulnerabilities. For example, an improperly configured Amazon S3 bucket can be accessed by anyone with the URL. In contrast, exploiting a buffer overflow requires detailed knowledge of the software and environment.

The Telus report also noted that nearly 50% of cloud security incidents stem from preventable misconfigurations, such as default settings left unchanged or excessive permissions granted to users and services. These issues often arise from oversight or from a lack of understanding of the security implications of certain configurations. The same study found that 95% of organizations encounter these misconfigurations regularly.

The report also showed that zero-day exploits rank lowest among the causes of cloud incidents, yet they often get the most attention. Misconfigurations, by contrast, are much more common and pose a greater threat to cloud and container environments.

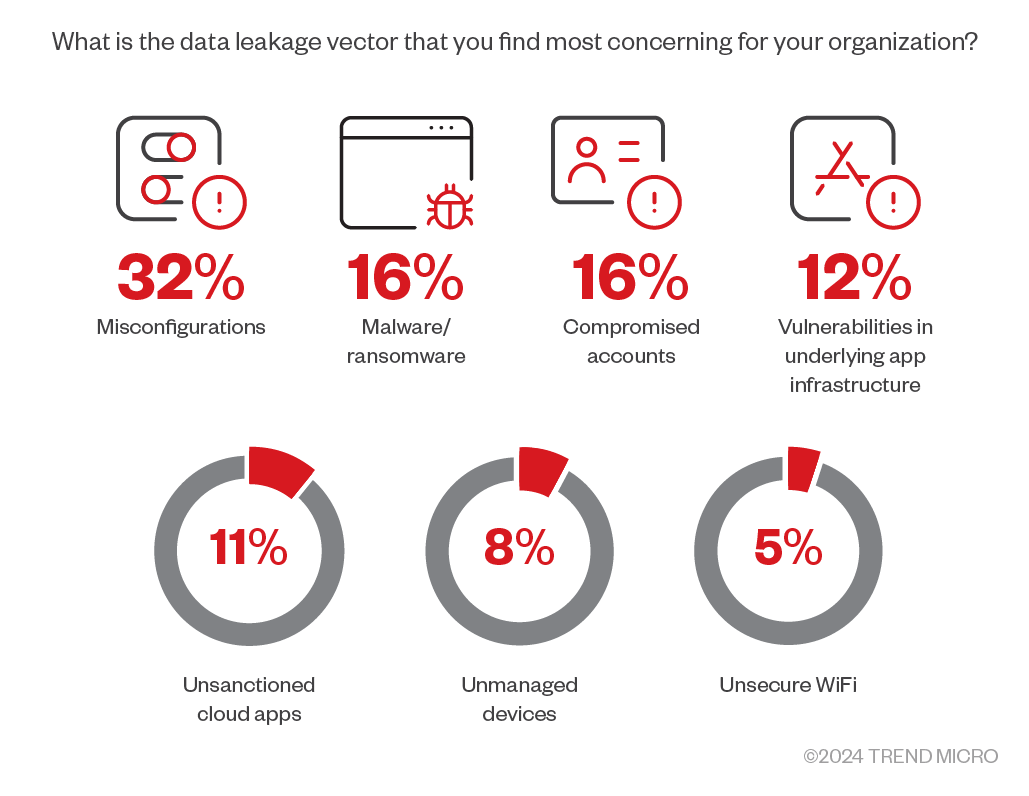

Figure 5. Organizations rated misconfigurations as the top concern for data leakage vectors

(Source: 2023 Trend Micro Cloud Security Report)

Both misconfigurations and traditional vulnerabilities can have a severe impact. However, the breadth of impact from misconfigurations can be wider. Numerous high-profile cases already demonstrate that a misconfigured cloud storage service can lead to massive data breaches, affecting millions of users. Traditional vulnerabilities might allow deep access to a system but often require additional steps to achieve the same impact.

Misconfigurations: Real-world impact and examples

The Capital One incident in 2019 is one of the most notable examples of a misconfiguration leading to a significant security breach. The incident was caused by a misconfigured web application firewall, which allowed an attacker to access over 100 million customer records stored in Amazon S3. This breach not only exposed sensitive personal information but also highlighted the critical need for proper configuration management in cloud environments.

In another instance, Tesla’s Kubernetes console was left exposed without a password, allowing attackers to use Tesla’s cloud resources for cryptocurrency mining. This incident underscored the importance of securing administrative interfaces and ensuring proper access controls in container environments.

Trend Micro also reported incidents where misconfigured Docker APIs were exploited to deliver cryptocurrency-mining malware and botnets. These misconfigurations allowed attackers to gain access to Docker containers and use their resources for malicious activities.
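One common form of this misconfiguration is a Docker daemon that listens on TCP without TLS client verification. By convention, port 2375 is used for unencrypted access and port 2376 for TLS. The sketch below inspects a parsed `daemon.json` (its `hosts` and `tlsverify` keys) and reports TCP listeners that accept clients without a certificate. It is illustrative only. It does not look at `-H` flags passed on the command line or at firewall rules that might still block the port.

```python
from typing import Any
from urllib.parse import urlsplit


def unauthenticated_docker_listeners(daemon_config: dict[str, Any]) -> list[str]:
    """Return TCP listeners from a parsed daemon.json that do not require TLS client certificates."""
    if daemon_config.get("tlsverify"):
        return []  # with tlsverify, clients must present a certificate signed by the trusted CA
    listeners = []
    for host in daemon_config.get("hosts", []):
        # unix:// and fd:// sockets are local; only tcp:// listeners are network-reachable
        if urlsplit(host).scheme == "tcp":
            listeners.append(host)
    return listeners
```

A configuration such as `{"hosts": ["unix:///var/run/docker.sock", "tcp://0.0.0.0:2375"]}` would be reported. Such a listener lets anyone who can reach the port create containers, which is exactly the opening that cryptocurrency-mining campaigns look for.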

Misconfigurations have consistently ranked as the top cloud threat for several years. Trend Micro’s research in 2020, for instance, found that misconfigurations were the biggest threat to cloud security. A 2022 report from the Cloud Security Alliance (CSA) emphasized the ongoing prevalence of misconfigurations as the leading cause of cloud security incidents. In 2023, Telus’ report identified misconfigurations as the most significant risk in cloud environments.

Misconfigurations could also lead to significant financial losses. Direct costs include fines for regulatory noncompliance as well as incident response and remediation efforts. Costs can also be indirectly incurred through the loss of customer trust, damage to the brand, and potential loss of business opportunities.

The Telus report estimated that the average cost of a cloud security incident involving misconfiguration is approximately US$3.86 million. IBM reported that in 2023, the cost of a single data breach in the US averaged US$9.44 million, which includes lost revenue as well as audit and remediation fees. In 2018 and 2019, data breaches stemming from cloud misconfiguration cost companies almost US$5 trillion.

Misconfigurations also have regulatory implications. Regulatory bodies are increasingly scrutinizing cloud security practices. Regulations such as the General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA), and the Health Insurance Portability and Accountability Act (HIPAA) impose strict requirements on data protection and privacy, and failures in these areas can result in hefty fines.

In the Capital One incident, the company was fined US$80 million and agreed to pay an additional US$190 million in a settlement. According to the ESG report, nearly 31% of organizations reported compliance violations due to cloud security incidents. In another survey, 50% of security and DevOps professionals reported increased downtime due to misconfigurations in their cloud environments, compliance violations, and insecure APIs.

Resolving cloud and container misconfigurations: Security best practices and approaches

Automation and continuous monitoring

Manual configurations are prone to human error. Automation ensures that security policies and configurations are applied consistently across all environments. Approaches such as infrastructure as code define security settings programmatically, which helps standardize their enforcement across development, testing, and production environments. Other tools automatically correct configuration drift (where an IT environment deviates from its intended state over time due to manual changes or updates) so that the infrastructure stays aligned with security policies. Automating security tests also helps catch misconfigurations early in the development cycle.
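Drift correction can be sketched as a comparison between a desired baseline and the live configuration. The Python below is a minimal, provider-neutral sketch in which settings are plain dictionaries. The setting names in the example (such as `block_public_access`) are hypothetical. Real tools read live state through each provider's APIs and often report drift for review rather than overwriting it blindly.

```python
from typing import Any

_MISSING = object()  # placeholder for a setting that exists on only one side


def detect_drift(desired: dict[str, Any], actual: dict[str, Any]) -> dict[str, tuple[Any, Any]]:
    """Map each setting whose live value differs from the baseline to (desired, actual)."""
    drift = {}
    for key in sorted(desired.keys() | actual.keys()):
        want = desired.get(key, _MISSING)
        have = actual.get(key, _MISSING)
        if want != have:
            drift[key] = (want, have)
    return drift


def remediate(desired: dict[str, Any], actual: dict[str, Any]) -> dict[str, Any]:
    """Return a corrected copy of `actual` that matches the baseline again."""
    fixed = dict(actual)
    for key, (want, _have) in detect_drift(desired, actual).items():
        if want is _MISSING:
            del fixed[key]  # setting was added by hand and is not part of the baseline
        else:
            fixed[key] = want
    return fixed


# Example: someone disabled public-access blocking and switched on a debug flag by hand.
baseline = {"block_public_access": True, "encryption_at_rest": True, "versioning": True}
live = {"block_public_access": False, "encryption_at_rest": True, "versioning": True, "debug_mode": True}
```

Here `detect_drift(baseline, live)` reports `block_public_access` and `debug_mode`, and `remediate(baseline, live)` restores the baseline.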

Automation and continuous monitoring provide immediate, consistent, and scalable ways to manage configurations and mitigate risks. It’s no surprise that investment in data privacy and cloud-based security solutions is a top priority among enterprises. Gartner projects spending on these solutions to increase by more than 24% in 2024, driven by the continued adoption of cloud- and AI-based technologies.

Security training and awareness

Security training and awareness are indispensable for maintaining secure cloud and container environments. By prioritizing regular training programs and integrating security best practices into daily operations, organizations can significantly reduce the incidence of misconfigurations, enhance their response times to security incidents, and ensure that their teams are well-equipped to handle evolving security challenges. In fact, a survey from the SANS Institute revealed that organizations that engage in regular security awareness training have reduced incidents by up to 70%.

Telus’ report highlighted that only 37% of surveyed companies have dedicated cloud cybersecurity staff, while ISC2 found that 35% of organizations identify cloud computing security as a significant skills gap. In our 2023 cloud security survey of cybersecurity professionals in the EU, respondents identified critical skills such as understanding the compliance and regulatory requirements related to cloud security and managing security across diverse cloud environments. Well-trained teams can address some of these gaps.

Implementing DevSecOps

Integrating security into the DevOps pipeline (i.e., DevSecOps) ensures that security is embedded at every stage of the development process.

DevSecOps practices can help harmonize rapid development and robust security. According to the GitLab 2024 Global DevSecOps Report, 54% of surveyed development teams have implemented an integrated DevSecOps platform, which has led to significant improvements in developer productivity and operational efficiency. Furthermore, organizations using DevSecOps practices such as automated security checks in CI/CD pipelines and continuous monitoring are four times more likely to deploy multiple times per day, demonstrating the efficiency that can be gained from these practices.
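An automated security check in a CI/CD pipeline often comes down to a gate that fails the build when a scanner reports serious findings. The Python sketch below assumes findings have already been parsed into a simple, hypothetical `Finding` record with a severity label. The severity scale and the default threshold of "high" are illustrative choices, not taken from any particular tool.

```python
import sys
from dataclasses import dataclass

# Hypothetical severity scale; real scanners define their own.
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    rule_id: str
    severity: str


def gate(findings: list[Finding], fail_at: str = "high") -> int:
    """Return an exit code for the pipeline step: 1 blocks the deployment, 0 lets it proceed."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = [f for f in findings if SEVERITY_RANK[f.severity] >= threshold]
    for finding in blocking:
        print(f"blocking {finding.severity} finding: {finding.rule_id}", file=sys.stderr)
    return 1 if blocking else 0
```

A pipeline step would call `sys.exit(gate(findings))` after parsing the scanner's report, so that a nonzero exit code stops the release. The threshold lets teams block on serious issues without halting every build over minor ones, which helps keep a weekly or daily release cadence intact.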

A Gartner Peer Community survey also showed that 66% of organizations that have adopted DevSecOps encountered fewer security incidents, while 58% improved their compliance scores.

What cloud and container security would look like in the future

As enterprises continue to move to the cloud and use containers, AI and machine learning (ML) will no longer be optional add-ons but core components of their security. These technologies can enhance the detection of misconfigurations by identifying patterns and anomalies that could indicate security risks. AI and ML can also automate the remediation of identified issues, reducing the time and effort required to maintain secure configurations. DevSecOps tools already incorporate AI and ML to automate misconfiguration and vulnerability scanning across the development life cycle, for instance.

On the other hand, enterprises must be prudent in adopting AI and ML, as they can also introduce new challenges, complexity, and exposure to security risks. Organizations should first establish governance frameworks to ensure that AI and ML tools are securely integrated and managed within their cloud environments.

Another emerging trend is the use of enhanced cloud security posture management (CSPM) tools to specifically address the increasingly complex and numerous requirements for remediating misconfigurations in cloud-based systems and infrastructures (i.e., IaaS, SaaS, PaaS). CSPM tools can consolidate visibility across multiple cloud environments, for example, as well as automate the enforcement of security policies. While this is especially useful for organizations that use cloud services from multiple providers, it could be a double-edged sword when not implemented effectively.
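The core idea behind consolidating visibility is to translate each provider's resources into one common shape and apply the same rules to all of them. The sketch below assumes that translation step has already happened. The `Bucket` schema, provider labels, and rule messages are hypothetical stand-ins for what a CSPM tool would collect through each provider's APIs.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Bucket:
    """Provider-neutral view of a storage bucket (hypothetical schema)."""
    provider: str
    name: str
    public: bool
    encrypted: bool


# Each rule inspects one normalized resource and returns a message, or None if it passes.
Rule = Callable[[Bucket], Optional[str]]

RULES: list[Rule] = [
    lambda b: "publicly accessible" if b.public else None,
    lambda b: "not encrypted at rest" if not b.encrypted else None,
]


def evaluate(inventory: list[Bucket], rules: list[Rule] = RULES) -> list[tuple[str, str, str]]:
    """Apply the same rules to every provider's resources; return (provider, name, issue)."""
    findings = []
    for bucket in inventory:
        for rule in rules:
            issue = rule(bucket)
            if issue is not None:
                findings.append((bucket.provider, bucket.name, issue))
    return findings
```

Supporting another provider then means writing one more translation into `Bucket` while the rules stay the same. This also illustrates the double edge: a wrong translation would silently hide issues for that entire provider.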

Many enterprises are also now adopting a zero-trust architecture to mitigate the risks associated with misconfigurations. According to Gartner, 63% of global leaders they surveyed reported that they’ve fully or partially implemented a zero-trust strategy in their business operations.

Zero trust assumes that no user or device should be trusted by default. It involves verifying every access request as though it originates from an open network, regardless of where the request comes from. This model requires strict identity verification and continuous monitoring, making it harder for attackers to exploit misconfigurations.
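Verifying every request as though it came from an open network can be sketched in a few lines. In the Python below, each request must carry a signed, expiring token. Its source IP is intentionally ignored, so a request from an "internal" address gets no special treatment. The shared-secret HMAC token, its `user|expiry|signature` format, and the function names are simplifying assumptions for illustration. Real deployments rely on an identity provider, standard token formats, and device posture checks.

```python
import hashlib
import hmac
import time
from typing import Any, Optional

DEMO_KEY = b"demo-only-signing-key"  # hypothetical; never hard-code real secrets


def issue_token(user: str, expires_at: int, key: bytes = DEMO_KEY) -> str:
    """Create a short-lived token of the form 'user|expiry|hmac'."""
    payload = f"{user}|{expires_at}"
    mac = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}|{mac}"


def verify_request(request: dict[str, Any], now: Optional[float] = None,
                   key: bytes = DEMO_KEY) -> Optional[str]:
    """Return the authenticated user for this request, or None to deny it.

    request["source_ip"] is deliberately never consulted: under zero trust, an
    "internal" address is not evidence that the caller should be trusted.
    """
    try:
        user, expires_text, mac = str(request.get("token", "")).rsplit("|", 2)
        expires_at = int(expires_text)
    except ValueError:
        return None  # missing or malformed token
    expected = hmac.new(key, f"{user}|{expires_at}".encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(mac.encode(), expected.encode()):
        return None  # forged or tampered token
    current = time.time() if now is None else now
    return user if current < expires_at else None  # expired tokens are rejected
```

With this check, a misconfigured network rule that lets traffic in from the wrong subnet does not by itself grant access. The caller still has to present valid, unexpired credentials.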

While a zero-trust approach is recognized as an industry best practice, enterprises should carefully decide the scope of implementation and measure its effectiveness. In the same Gartner survey, for instance, 35% of organizations reported encountering a failure that significantly disrupted their implementation. This challenge is exacerbated by the projection that through 2026, 75% of organizations will exclude unmanaged, legacy, and cyber-physical systems from their zero-trust strategies because of the unique, specific, and critical tasks these systems perform in the business. These systems, however, are commonly prone to misconfigurations and vulnerabilities. To address these challenges, organizations need comprehensive and adaptable security solutions that fill these gaps and keep their cloud and IT environments secure, including the systems that zero-trust principles cannot directly cover.

Today’s misconfigurations as tomorrow’s critical vulnerabilities

As enterprises move their operations to the cloud and even further into cloud-native applications through the software-as-a-service (SaaS) model, misconfigurations are gaining the same or even more relevance than traditional vulnerabilities.

Back in 2020, Trend Micro correctly predicted that misconfiguration would be the future of security challenges. Vulnerabilities will never leave the stage, of course, and will sometimes walk hand in hand with misconfigurations, but misconfigurations are here to stay. Tackling them requires a security mindset that is constantly present among cloud administrators, and security teams need to adapt to platforms where legacy security technologies cannot simply be carried over.

Yesterday’s and today’s misconfigurations could become an enterprise’s unnoticed yet significant and expensive vulnerability tomorrow. Organizations must prioritize robust configuration management practices, continuous monitoring, and security automation to mitigate these risks effectively. Regular security training and the implementation of DevSecOps practices should be integral parts of any cloud strategy. Adopting effective zero-trust principles and AI and ML technologies for proactive threat detection and remediation can further enhance security postures.
