The Nightmares of Patch Management: The Status Quo and Beyond

As data grows more valuable and new discoveries about what can be done with it keep coming, the number of targeted attacks and threats that abuse system vulnerabilities has also increased. Vendors and manufacturers have noticed this trend and have been trying to keep up, to both the delight and the chagrin of IT teams.

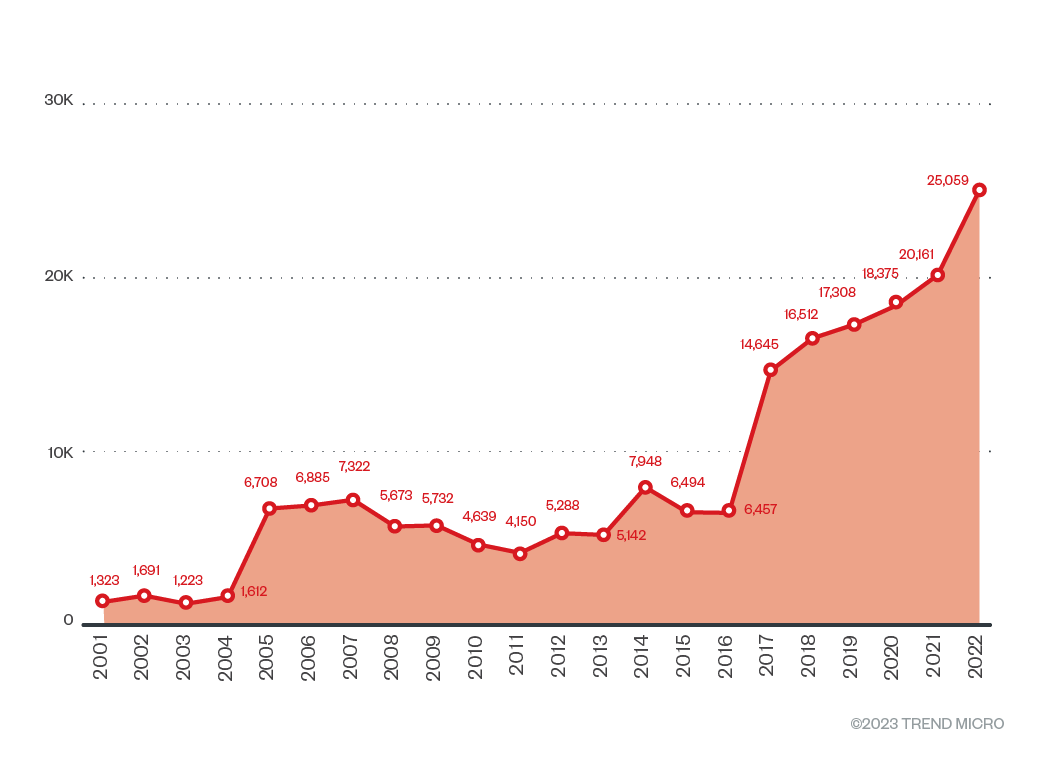

Figure 1. The number of software vulnerabilities per year assigned CVE numbers (Source: MITRE)

Patching and tracking updates for risk mitigation has become a daunting task for administrators and IT teams, especially since it is only a small part of their everyday workload in teams that are already stretched thin. Ideally, organizations revisit their policies every couple of years, together with the security strategies and objectives those policies are built on, to ensure continuous availability, efficiency, and mobility. It’s also worth noting that small and medium businesses (SMBs) have been observed to be catching up, in some ways reportedly patching and managing their systems more regularly than their bigger supply chain counterparts. Overall, however, more can be done to implement and update patch management policies in light of technological and cybersecurity developments.

[Read: Cybercrime and exploits: Attacks on unpatched systems]

Patch management in recent years

Before the pandemic, the thought of collecting and keeping track of employees’ personal and company-issued devices, alongside the on-site systems used every day, already made system administrators scratch their heads. With attacks and intrusions making headlines more frequently, decision-makers have also come to realize the costs that accompany them. In recent months, the sudden shift to remote work arrangements has likely forced organizations to come up with haphazard solutions. Admittedly, tracking these systems and updating them regularly has only become more complicated and fatigue-inducing under these circumstances.

In general, endpoint patching has become simpler, more automated, and more streamlined than it used to be. Server-side patching, however, tells a different story altogether. The reluctance to patch servers immediately is mainly due to downtime costs: deploying patches right away risks breaking existing functionality. These business continuity concerns include patches that have bugs of their own, introduce new vulnerabilities upon deployment, or fail to close the disclosed gap. Vendors may also bundle “extras” with patches that turn out to be unsafe, and patches often require system reboots. For industrial control systems (ICSs) in particular, reboots for patching are considered problematic because of how they affect integrated public systems.

Interestingly, as more users connect to and switch between devices, they also become more aware of the security upkeep these devices need. Together with worldwide mandates calling for better cybersecurity legislation and implementation, this awareness has contributed to more frequent deployments from vendors. In Microsoft’s case, an effort to simplify patching means that system administrators and users no longer decide which individual patches to apply: each system, be it a server or an endpoint, receives cumulative updates. However, this change has also introduced other concerns for IT teams.

As observed in previous years, many software vendors release their patches on the same schedule as Microsoft’s Patch Tuesday. Some have delayed their own releases, but these postponed fixes are bound to accumulate over time and potentially cause more issues. IT teams have also had to contend with home networks and the multitude of connected personal internet of things (IoT) devices on them, likely without knowing whether these systems and networks are secure to begin with. Given the record number of malware threats and attacks in recent years, as well as the social engineering techniques that have proved effective during the pandemic, delays in patch implementation could mean trouble for many organizations.

The cloud, with its many advantages, has noticeably grown in popularity. Patching has also become less of an issue as cloud service providers (CSPs) have made it convenient for their subscribers. On the flipside, this growth has also attracted cybercriminals interested in the cloud’s illicit potential. When moving on-premises applications and servers to cloud containers and platforms, organizations might neglect the security measures that the differences in structure call for. Cybercriminals have caught on and found profitable ways to catch enterprises off guard, taking advantage of the gaps left open by misconfigurations and container flaws. They abuse these openings to get inside containers and move through networks undetected for some time.

One way that organizations have resolved traditional patching issues is by subscribing to the patching-as-a-service option offered by vendors. This makes service providers responsible for implementing monthly updates. Cloud-based solutions are also popular for the mobility and speed they offer companies. Meanwhile, companies’ responses to customer inquiries about security and privacy procedures have continuously improved. So have researchers’ submissions and disclosures and vendors’ responses with fixes. Patch management policies remain hard to enact. Even so, continuous development, learning, and adjustment, along with a better understanding of changing technologies and strategies, have led to more robust cybersecurity performance.

Challenges and best practices

Consumer awareness of the need to patch and update operating systems contributes heavily to companies’ growing focus on improving their cybersecurity postures, despite the difficulties of doing so. The market’s demand for better online security, data privacy, and storage has driven improvements in automated patch deployment and prompted manufacturers and vendors to keep up. Observers note a growing industry that aims to help companies and users deploy patches automatically.

Among the challenges often mentioned, the following commonly arise when implementing patch management procedures on a regular basis:

- Resources. At the most basic level, not all vendors release fixes on Patch Tuesday. As a result, system administrators struggle to keep track of the different release cycles of all the systems that are likely connected and in use.

In addition, companies have long sounded the alarm on the growing shortage of skilled cybersecurity personnel. The problem persists to this day and makes it hard to maintain an adequate cybersecurity posture. The surge in production-related technologies and systems can further complicate the tracking that management requires. And while IT teams and administrators know that patching is fundamental to handling vulnerabilities, there is also a gap in confidence and training for identifying, analyzing, and remediating them.
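Microsoft’s Patch Tuesday falls on the second Tuesday of each month, so that one release cycle can at least be computed in advance. The minimal Python sketch below computes it, for example to plan maintenance windows. The function name is illustrative. Other vendors’ schedules, and any out-of-band releases, would still need to be tracked separately.

```python
import calendar
from datetime import date


def patch_tuesday(year: int, month: int) -> date:
    """Return the second Tuesday of the given month (Microsoft's Patch Tuesday)."""
    first_weekday = date(year, month, 1).weekday()  # Monday == 0, Tuesday == 1
    # Days from the 1st to the first Tuesday, plus one week for the second Tuesday.
    offset = (calendar.TUESDAY - first_weekday) % 7
    return date(year, month, 1 + offset + 7)


if __name__ == "__main__":
    for month in range(1, 4):
        print(patch_tuesday(2020, month).isoformat())
```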

Best practices. There is still no established standard for informing industry practitioners and IT teams of vendors’ release cycles, assuming that vendors can deploy fixes on a regular basis at all. While not all vendors can automate their releases, information is often available on their respective news or blog sites. Some vendors also offer mailing lists, update notifications, and RSS feeds that can be routed to dedicated mailboxes.
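Where a vendor does publish an RSS feed, its advisories can be pulled into a digest automatically. The sketch below parses an RSS 2.0 document with Python’s standard library. The feed contents, product names and `vendor.example` URLs are hypothetical. In practice the XML would be fetched from the vendor’s actual feed URL, for instance with `urllib.request.urlopen`.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

# Hypothetical RSS 2.0 document standing in for a vendor's security-advisory feed.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example Vendor Security Advisories</title>
    <item>
      <title>Security update for ExampleClient 1.9</title>
      <link>https://vendor.example/advisories/1</link>
      <pubDate>Tue, 14 Jan 2020 18:00:00 GMT</pubDate>
    </item>
    <item>
      <title>Security update for ExampleServer 4.2</title>
      <link>https://vendor.example/advisories/2</link>
      <pubDate>Tue, 11 Feb 2020 18:00:00 GMT</pubDate>
    </item>
  </channel>
</rss>"""


def parse_advisories(feed_xml: str) -> list[dict]:
    """Extract title, link, and publication time from each RSS <item>."""
    root = ET.fromstring(feed_xml)
    advisories = []
    for item in root.iter("item"):
        advisories.append({
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
            # RSS uses RFC 822 dates, which the email.utils parser understands.
            "published": parsedate_to_datetime(item.findtext("pubDate")),
        })
    # Newest first, so the latest advisories appear at the top of a digest.
    return sorted(advisories, key=lambda a: a["published"], reverse=True)


if __name__ == "__main__":
    for advisory in parse_advisories(SAMPLE_FEED):
        print(advisory["published"].date(), advisory["title"])
```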

Furthermore, patch and vulnerability management should not be treated as a traditional IT and security operations task. It belongs in a wider security strategy, especially for DevOps and operations security. An organized inventory is one of the primary procedures for asset management, and keeping track of all business assets involved in operations is the only way to monitor them for accurate analysis. Traffic and behavior patterns, operating system versions, network addresses, credentials, and compliance checks are just some of the components that contribute to accurate monitoring, data analytics, and anomaly detection. Since most patches are made available within hours of public disclosure, applying them promptly through automation can significantly narrow the risk window. This holds whether they arrive as virtual patches or as permanent updates.
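An organized inventory pays off when it is compared against the fixed versions named in vendor advisories. The Python sketch below assumes a hypothetical inventory format and hypothetical product names. It flags hosts running a version older than the first fixed release. Versions are modeled as integer tuples so that comparison is numeric rather than alphabetical.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    hostname: str
    software: str
    version: tuple[int, ...]  # e.g. (4, 2, 1) for 4.2.1; keep tuples the same length


# Hypothetical inventory and minimum fixed versions; real data would come from an
# asset-management system and from vendor advisories.
INVENTORY = [
    Asset("mail-01", "ExampleServer", (4, 2, 1)),
    Asset("mail-02", "ExampleServer", (4, 3, 0)),
    Asset("web-01", "ExampleWeb", (2, 0, 5)),
]
MINIMUM_FIXED = {"ExampleServer": (4, 3, 0), "ExampleWeb": (2, 0, 5)}


def unpatched(inventory: list[Asset], minimum_fixed: dict[str, tuple[int, ...]]) -> list[str]:
    """Return hostnames whose installed version is older than the minimum fixed version."""
    return [
        asset.hostname
        for asset in inventory
        if asset.software in minimum_fixed and asset.version < minimum_fixed[asset.software]
    ]


print(unpatched(INVENTORY, MINIMUM_FIXED))  # ['mail-01']
```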

- False positives and misdirected priorities due to fragmented and siloed solutions. A solution focused on one environment (for example, email detection) might falsely flag a vulnerability that is already covered by a different solution (such as a cloud server solution), or vice versa. In this simplified example, disconnected solutions might lead analysts and security teams to chase false positives or attend to low-risk vulnerabilities while leaving critical gaps open. They can also produce backlogs of unidentified vulnerabilities that remain unpatched, leaving systems at higher risk of exploits and intrusion.

Best practices. Viewing solutions and issues from a centralized perspective allows for better focus and insight, not just during an incident but also when hunting for potential threat openings. Security information and event management (SIEM), artificial intelligence (AI), data analytics, and threat intelligence are among the technologies that can help. They allow security operations centers (SOCs) and IT teams to correlate detections across different environments, giving investigations and responses broader context. This visibility enables centralized, proactive, and unified monitoring, tracking, analysis, and detection of vulnerable areas. It reduces false positives and speeds up integrated responses.
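The simplified example above can be sketched in a few lines of Python. One tool flags a vulnerability that another tool reports as already mitigated. The tool names, assets, vulnerability IDs and status labels are all hypothetical. Real SIEM correlation weighs far more context, but the core idea of merging findings by (asset, vulnerability) before triage is the same.

```python
from collections import defaultdict

# Hypothetical findings from two siloed tools:
# (source tool, asset, vulnerability ID, status reported by that tool)
FINDINGS = [
    ("email-security", "mail-01", "VULN-1", "vulnerable"),
    ("server-security", "mail-01", "VULN-1", "mitigated"),  # e.g. a virtual patch is in place
    ("server-security", "web-01", "VULN-2", "vulnerable"),
    ("email-security", "web-01", "VULN-2", "vulnerable"),   # same issue seen by another tool
]


def open_findings(findings):
    """Merge findings per (asset, vulnerability) and drop any that a tool reports as mitigated."""
    statuses = defaultdict(set)
    for _source, asset, vuln_id, status in findings:
        statuses[(asset, vuln_id)].add(status)
    return sorted(key for key, seen in statuses.items() if "mitigated" not in seen)


print(open_findings(FINDINGS))  # [('web-01', 'VULN-2')]
```

Merging removes the false positive on `mail-01`. It also collapses the duplicate report on `web-01` into a single item for analysts.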

- New and forgotten openings due to the foray into cloud solutions. In the rush to offer new services and products, companies have increased customer interaction with applications. However, as they improve efficiency by moving to the cloud, companies can lose track of details that affect their security postures. When migrating to the cloud, it is crucial for companies to ask some key questions: Are the apps designed securely? Are the APIs safe? Were the apps fixed for flaws? Were the containers properly customized and configured? Are authentication and authorization tokens correctly implemented?

Best practices. Whether in the cloud or on-premises, testing applications and cloud containers with the same rigor and attention remains paramount. It’s important to keep a checklist of the governance and structure policies for both containers and apps during their foundational builds. This helps ensure the security and functional efficiency of later development. Developers must ensure that base images are free of known vulnerabilities and scanned in their entirety before deploying them into production. They must also confirm that base images are approved for building upon, making sure that the packages, binaries, and libraries are secure from the outset.
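A base-image check like the one described above is often wired into the build as a gate. The Python sketch below assumes that scanner results have already been parsed into a list of records with a severity label. The package names, versions and the "HIGH" threshold are hypothetical choices. It rejects the image if any finding meets the threshold.

```python
# Severity ordering used by the gate; the labels mirror common CVSS-style ratings.
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}

# Hypothetical scanner output for a candidate base image.
SCAN_RESULTS = [
    {"package": "libfoo", "version": "1.0.2", "severity": "HIGH"},
    {"package": "libbar", "version": "2.3.0", "severity": "LOW"},
]


def blocking_findings(results, threshold="HIGH"):
    """Return the findings at or above the severity threshold."""
    limit = SEVERITY_RANK[threshold]
    return [r for r in results if SEVERITY_RANK[r["severity"]] >= limit]


def image_allowed(results, threshold="HIGH"):
    """Allow the image as a base only if no finding meets the threshold."""
    return not blocking_findings(results, threshold)


for finding in blocking_findings(SCAN_RESULTS):
    print(f"blocked by {finding['package']} {finding['version']} ({finding['severity']})")
print("allowed" if image_allowed(SCAN_RESULTS) else "rejected")  # rejected
```

In a CI pipeline, a rejected image would typically produce a non-zero exit code so that the build stops before anything reaches production.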

Companies must also perform due diligence on their known, high-risk, and common vulnerabilities to ensure that the necessary updates and fixes are applied. Before subscribing to a service, companies should review historical data on several points:
- high-risk windows between disclosures and deployments
- change management
- deployed patch applicability
- issue and risk management

It is equally important to run regular scans covering everything from containers to keys, compliance to malware variants, and third-party software to teams. The differences in the upkeep and auditing of these infrastructures might be unnerving, but foundational vulnerability checks and scans can reduce risks in the long run.

- Remote work and gaps in vulnerability management. While remote work arrangements are not new, the abrupt changes brought on by the pandemic have challenged enterprises to implement them at scale for all workers and their devices. Administrators may already have difficulty implementing patches when everything is on-site. These difficulties are heightened when both the users and the systems that need patching are geographically dispersed. Some systems require administrators to physically interact with the machines being updated, and uncertain implementation timelines put more organizations at higher risk. For legacy ICSs especially, deploying fixes remotely and blindly is almost unimaginable. Their delicate balance and tight integrations heavily affect whether patching is feasible at all.

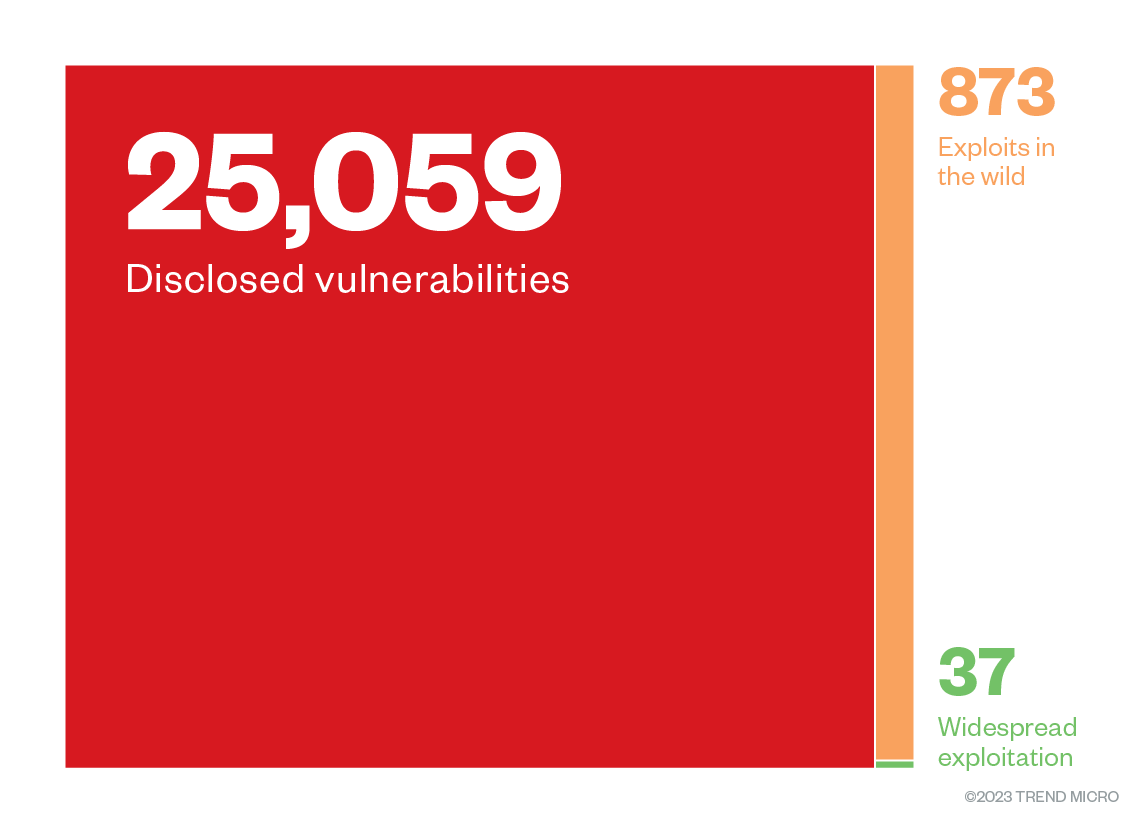

Best practices. Not all vulnerabilities are created equal. Out of the total number of security openings disclosed, only a few openings are exploited at large and even less are used for intrusion, as shown in Figure 1. While these numbers are not to be taken lightly, enterprise administrators and users can benefit from the prioritization and identification of the vulnerabilities that need to be patched immediately. Some of the necessary measures include having good vulnerability information sources, system and software inventories, vulnerability assessments, scanning and verification tools, patch deployment measures (for example, agent-based vs. agentless deployments), and continuous improvement plans for risk mitigation.

In instances where patches for implementation entail physical interaction on-site, being able to identify and focus on gaps found in specific environments for remediation — either with permanent patches or virtual ones — can help administrators in choosing which gaps to prioritize over a multitude of disclosures. Notably for ICSs, this prioritization becomes integral when system administrators and IT teams are weighing in on patching systems or not following specific procedures and models.

Figure 2. The proportion of CVEs that were observed and exploited in 2022 (Source: MITRE, CISA, Google Project Zero)

Figure 3. Adjusting patching priorities with the threats found in the enterprise environment (Source: Gartner)
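Prioritizing by observed exploitation rather than by raw count can be expressed as a sort key. The Python sketch below is one hypothetical ordering, not a standard scoring model:
- known exploitation in the wild comes first
- exposure of the affected asset comes second
- the CVSS base score comes last

The vulnerability IDs and scores are invented for illustration. The "exploited" flag could in practice be fed from a source such as CISA’s Known Exploited Vulnerabilities catalog.

```python
from dataclasses import dataclass


@dataclass
class Vuln:
    vuln_id: str
    cvss: float            # CVSS base score, 0.0-10.0
    exploited: bool        # known exploitation in the wild
    internet_facing: bool  # the affected asset is reachable from the internet


def priority_key(v: Vuln):
    # False sorts before True, so exploited and internet-facing items come first;
    # within each group, higher CVSS scores come first.
    return (not v.exploited, not v.internet_facing, -v.cvss)


# Hypothetical backlog.
BACKLOG = [
    Vuln("VULN-A", 9.8, exploited=False, internet_facing=False),
    Vuln("VULN-B", 7.5, exploited=True, internet_facing=True),
    Vuln("VULN-C", 8.1, exploited=False, internet_facing=True),
]

for v in sorted(BACKLOG, key=priority_key):
    print(v.vuln_id)  # VULN-B, then VULN-C, then VULN-A
```

Here the actively exploited VULN-B outranks VULN-A despite its lower CVSS score. This reflects the point that the few vulnerabilities exploited in the wild deserve attention first.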

[Read: Application security 101]

Virtual patching

Virtual patching is an essential aspect of patch management. Vendors and manufacturers need time to develop and deploy patches and upgrades once a vulnerability is disclosed. Virtual patches are temporary fixes that buy time for permanent solutions. They also let companies avoid unnecessary downtime by implementing patches at their own pace. Especially for zero-day vulnerabilities, virtual patches act as an additional security layer that protects systems and networks from known and unknown exploits.
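Conceptually, a virtual patch sits in front of an application that has not been fixed yet. It drops traffic matching a known exploit pattern without modifying the application itself. The Python sketch below illustrates only that idea. The rule ID, the `/legacy-app/files` path and the directory-traversal signature are hypothetical stand-ins. Production intrusion prevention systems decode protocols and inspect traffic far more thoroughly than a single regular expression.

```python
import re
from typing import Optional

# Hypothetical virtual-patch rules. Each targets a path on an application that
# cannot be patched yet and a request pattern tied to a known exploit.
VIRTUAL_PATCHES = [
    {
        "id": "VP-0001",                     # illustrative rule ID
        "path_prefix": "/legacy-app/files",  # hypothetical vulnerable endpoint
        # Directory traversal, plain or URL-encoded, as a stand-in exploit signature.
        "pattern": re.compile(r"\.\./|%2e%2e%2f", re.IGNORECASE),
    },
]


def inspect_request(path: str, query: str) -> Optional[str]:
    """Return the ID of the first matching virtual patch (block), or None (allow)."""
    for rule in VIRTUAL_PATCHES:
        if path.startswith(rule["path_prefix"]) and rule["pattern"].search(query):
            return rule["id"]
    return None


print(inspect_request("/legacy-app/files", "name=../../etc/passwd"))  # VP-0001
print(inspect_request("/legacy-app/files", "name=report.pdf"))        # None
```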

One example is CVE-2020-0688, a remote code execution (RCE) bug in the Microsoft Exchange mail server rated as Important. The bug was reported to Microsoft through the Trend Micro Zero Day Initiative (ZDI) in the last week of November 2019, and a virtual patch was released on December 7. The vendor patched it 66 days after the virtual patch was released, and active attacks were observed within a month of the vendor patch. By then, the virtual patch had been in place for over three months.
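The arithmetic of this timeline is easy to check with Python’s `datetime` module. The dates and the 66-day interval come from the example. The 30-day gap standing in for "a month" after the vendor patch is an assumption, since no exact attack date is given. Sixty-six days after December 7, 2019 falls on February 11, 2020, Microsoft’s Patch Tuesday that month. The resulting 96 days of coverage matches "over three months".

```python
from datetime import date, timedelta

# Dates and intervals from the CVE-2020-0688 example.
virtual_patch = date(2019, 12, 7)                  # virtual patch released
vendor_patch = virtual_patch + timedelta(days=66)  # vendor patch, 66 days later
# Assumption: "a month" after the vendor patch is approximated as 30 days.
attacks_seen = vendor_patch + timedelta(days=30)

print(vendor_patch.isoformat())             # 2020-02-11
print((attacks_seen - virtual_patch).days)  # 96
```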

For enterprises and organizations using legacy systems, virtual patching adds a layer of protection when those systems are no longer supported. It also provides considerable flexibility in deciding which points require patches. Good virtual patching solutions are multilayered to address varying environments (physical, virtual, or cloud) and ensure continuous business-critical traffic, communication, and operations.

[Read: Security 101: Zero-day vulnerabilities and exploits]

Conclusion

Cybercriminals and determined attackers will continue to find creative ways and new security gaps to exploit for profit at the expense of organizations and individuals. Technological development in communication and workload efficiency is moving fast enough to enable greater productivity and convenience. That same pace helps malicious actors find more weaknesses that can bring higher illicit profits. Organizations will have to be conscious of the internal and external factors that can be abused for unauthorized intrusions and breaches. In this vein, updates and patches, patch management procedures, and patching measures should evolve at least as fast as what the market, services, and products offer and demand, if not ahead of them.

Despite such a tall order, technological changes and advancements will only push users, vendors, and businesses toward greater cybersecurity mindfulness. This could also push vendors and manufacturers to ensure that their systems are not exploitable. Given the number of vulnerability discoveries and disclosures, one question is worth asking. Is the continued increase due to security practitioners getting better at finding these flaws? Or does the surge in attacks and threats stem from a flood of increasingly vulnerable systems on the market?

Whatever the reason, risk windows must still be narrowed in light of new developments and accumulated learning. At the same time, users will have to keep heightening their awareness and keeping their defenses up. Trust in released patches will always be indispensable. Even so, consumer demand for better security will keep suppliers on their toes and encourage individual users to make accountability a habit.
