CES 2025: A Comprehensive Look at AI Digital Assistants and Their Security Risks

By Vincenzo Ciancaglini, Salvatore Gariuolo, Stephen Hilt, Rainer Vosseler

Key Takeaways

- Advanced AI digital assistants were showcased at CES 2025, promising seamless integration into people’s everyday lives.

- However, the increasing sophistication of AI digital assistants raises significant cybersecurity concerns, including data privacy issues and the potential misuse of sensitive personal data.

- In our analysis, we used three of these products as case studies, evaluating their characteristics against our Digital Assistant Matrix to explore potential issues and vulnerabilities that this emerging technology may face.

- Continuous audio monitoring and handling of critical information by these assistants make them vulnerable to attacks like voice command manipulation and identity theft. Robust security measures and user awareness are crucial to mitigating the risks associated with increasingly autonomous AI systems.

The growth of AI digital assistants (DAs) marks a pivotal moment in technology, promising to revolutionize how people interact with their environments. At CES 2025, innovations in this space showcased the transformative potential of these systems to offer seamless convenience, personalized services, and improved efficiency. From always-on home assistants to personalized wellness coaches and travel planners, AI DAs aim to become an integral part of daily life.

As these systems become more sophisticated, they also introduce significant cybersecurity threats, making robust encryption and secure storage protocols necessary. AI DAs handle sensitive personal data, control access to critical devices, and often operate within complex ecosystems of interconnected systems. Understanding these risks is crucial to ensuring user safety and trust.

Drawing on Trend Micro’s research from last December, this entry explores three notable AI DAs unveiled at CES 2025 and evaluates the potential threats associated with tools like these. Because these are emerging devices, we base our assessments on public descriptions of their features and on publicly accessible open-source data. By pointing out potential security issues, we aim to alert the manufacturers of these and similar products to weaknesses they should address to protect their customers.

Always-listening AI assistant

The always-listening AI assistant, created by Bee, operates with continuous audio monitoring to provide real-time responses. While this feature offers exceptional convenience, it raises serious security concerns. Its wearable device, the Bee Pioneer, has microphones that capture and send data to the Bee AI.

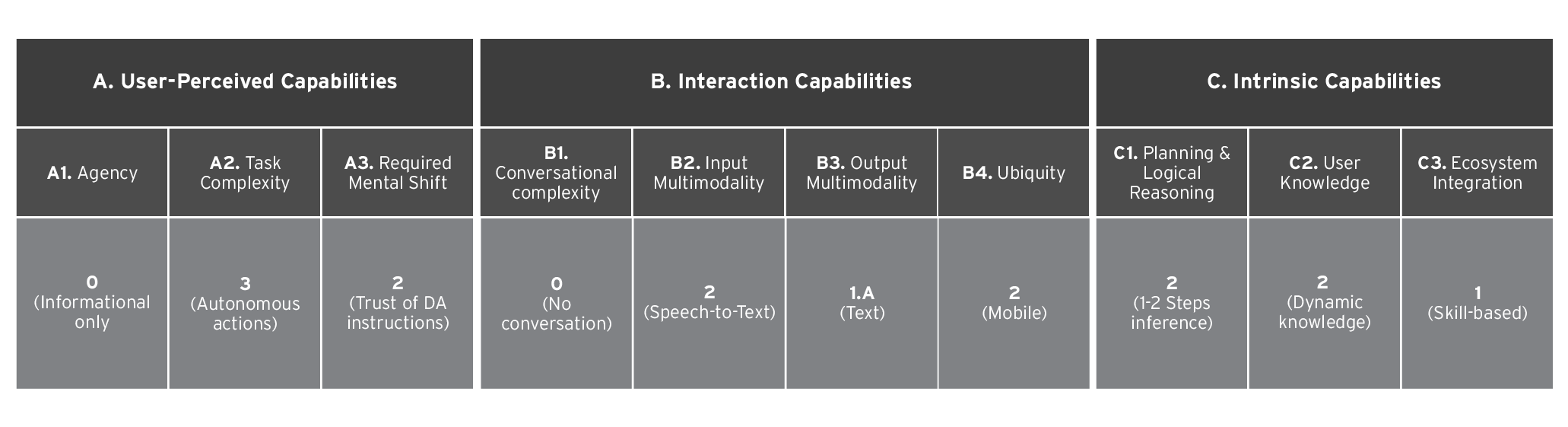

In Table 1, we mapped the AI assistant’s capabilities based on the digital assistant framework discussed in our previous research.

Table 1. Level assignments of the Bee AI assistant’s capabilities as a digital assistant

- Agency – In the most common case, when the DA provides information only, agency is 0 (“Informational only”). However, according to news coverage of the device, Bee should also be able to perform basic actions (such as finding a dinner spot that suits your preferences), which corresponds to a higher agency level of 1 (“Single commands”).

- Task Complexity – We mapped this as a 3 (“Autonomous actions”), as Bee can perform actions autonomously from human input, within the limits of its agency.

- Required Mental Shift – Bee mainly provides summaries, insights, and reminders, which require a low level of engagement, making it a 2 (“Trust of DA instructions”). However, if we consider the DA’s ability to perform basic actions (see Agency), the level of mental engagement from the user would be higher, at 3 (“Basic actions delegation”).

- Conversational Complexity – We rank this at 0 (“No conversation”), as the DA cannot engage in actual conversation.

- Input Multimodality – Ranked 2 (“Speech-to-Text”), as Bee relies on transcribing the speech it captures.

- Output Multimodality – Ranked 1.A (“Text”), as it produces text only.

- Ubiquity – Bee comes in the form of an iPhone app, mapped as 2 (“Mobile”), or an Apple Watch or standalone tag, mapped as 3 (“Wearable”).

- Planning and Logical Reasoning – Based on the information available on this device, we rate it as a 2 (“1-2 steps inference”).

- User Knowledge – Rated 2 (“Dynamic knowledge”), as Bee acquires knowledge about users over time through its interactions with them, learning their habits and adapting accordingly. Through these interactions it inevitably also learns about other people around the user, but we would not consider this community knowledge (3), as that would require actually retaining information about those people.

- Ecosystem Integration – Also rated 2 (“Natively chaining multiple providers or providing an API”), as Bee can interact with other devices (like the phone) and perform actions with external entities (see the example under Agency).

Devices like Bee raise significant potential data privacy issues. Continuous audio recording increases the likelihood that sensitive conversations are captured and even misused. If encryption standards are insufficient or storage is not properly secured, attackers could intercept this data for malicious activities such as identity theft. The most likely route is stealing account credentials and logging into the companion apps to exfiltrate sensitive summarized data. The assistant is also likely vulnerable to voice command manipulation, where synthetic or pre-recorded voices trigger unauthorized actions. Audio-based attacks are a concern for any device with speech input under Input Multimodality (B2). This means the following future threats apply:

- Skill squatting

- Trojan skills

- Malicious metaverse avatar DA phishing

- Digital assistant social engineering (DASE)

- The future of malvertising

- The future of spear phishing

- Semantic search engine optimization (SEO) abuse

- Agents abuse

- Malicious external DAs

AI wellness coach

Panasonic’s AI wellness coach, Umi, focuses on personalized health and wellness advice. Umi is part of the Panasonic Well portfolio of consumer solutions. By collecting biometric and behavioral data, it offers users tailored guidance on fitness, sleep, and nutrition. However, this advanced functionality could also bring security risks to its users.

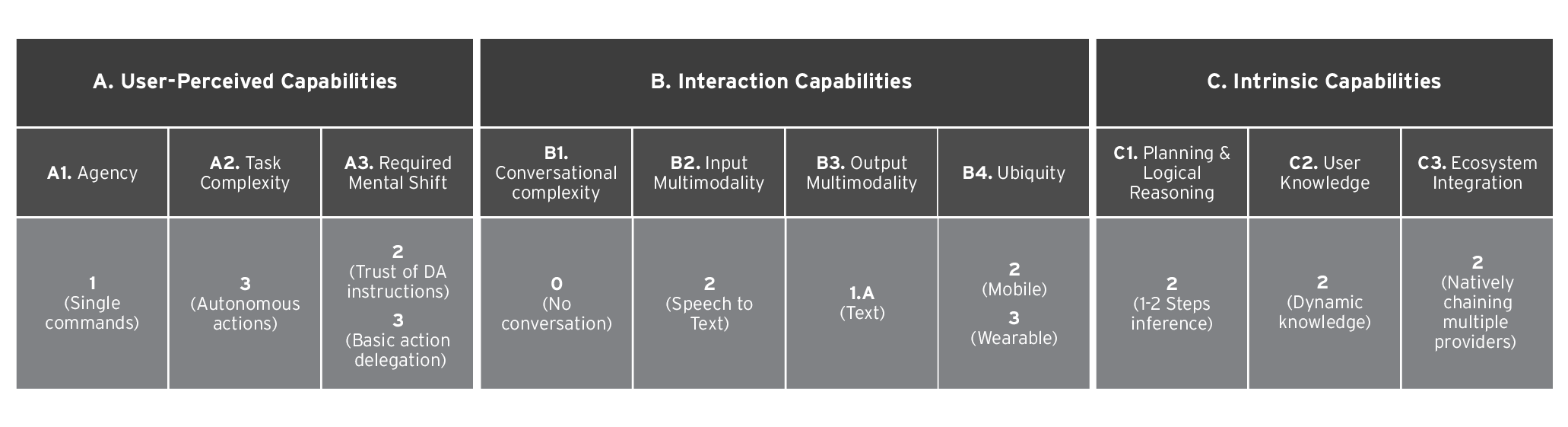

In Table 2, we mapped this AI wellness coach’s capabilities based on the digital assistant framework discussed in our previous research.

Table 2. Level assignments of the Panasonic Umi’s capabilities as a digital assistant

- Agency – Given the limited information available, we assign a score of 0 (“Informational only”) for Agency, with a possible move toward 1 (“Single commands”), depending on what it truly means for the assistant to “take actions on the user’s behalf.”

- Task Complexity – Based on the available information, we assign a score of 3 (“Autonomous actions”) to this capability, as the assistant can take specific actions based on pre-defined inputs.

- Required Mental Shift – The assistant primarily provides notifications and insights, which suggests a low to moderate level of user engagement, placing it at 2 (“Trust of DA instructions”). However, if it can act on behalf of the user for certain tasks, engagement could increase to 3 (“Basic actions delegation”).

- Conversational Complexity – This is rated as a 0 (“No conversation”), as the assistant does not engage in actual conversation beyond structured interactions.

- Input Multimodality – The assistant primarily supports text input, ranking it at a 1 (“Text-only”).

- Output Multimodality – Rated at 2 (“Multimedia”), as it provides textual and graphic responses only.

- Ubiquity – During CES, Umi was demonstrated as a mobile app, placing it at 2 (“Mobile”), with potential future expansion to other devices.

- Planning and Logical Reasoning – Rated at a 2 (“1-2 steps inference”), as it can handle structured logical actions.

- User Knowledge – Given the expectation that the assistant will have some dynamic knowledge about user habits, it is rated at 2 (“Dynamic Knowledge”). However, it does not maintain community-level knowledge (3) since it does not track interactions with other users extensively.

- Ecosystem Integration – Currently rated as a 1 (“Skill based”), as it integrates with external services such as Precision Nutrition or SleepLabs.

Based on our research, the handling of biometric data introduces critical risks to services like this AI wellness coach. Health data, such as heart rate or sleep patterns, is highly sensitive; if compromised, it could be used by scammers to falsify medical records or deliver erroneous health recommendations. Furthermore, the wellness coach integrates with third-party health devices and applications, which broadens the attack surface. A single weak point in these connections could allow attackers to infiltrate the system and access private user information.

Social engineering attacks pose another substantial risk for wellness devices. The MyFitnessPal breach demonstrated how vulnerabilities in wellness apps can expose millions of users’ data, highlighting the critical need for robust security measures in AI-powered wellness devices. With such data in hand, cybercriminals could impersonate healthcare providers, and these attacks become more believable when protected health data is quoted directly as part of the lure. In extreme cases, attackers could offer fraudulent health services if they found a way to interface with a wellness app’s ecosystem. Victims might unknowingly disclose personal data or pay for unnecessary services, resulting in financial loss and compromised privacy. Wellness coaches maintain User Knowledge (C2), which in this case, as mentioned, is at level 2. This means the following future threats apply:

- Skill squatting

- Trojan skills

- DASE

- The future of spear phishing

- Semantic SEO abuse

- Agents abuse

- Malicious external DAs

AI travel concierge

Delta’s AI concierge enhances the travel experience by analyzing personal travel data to offer tailored recommendations. The AI-powered Delta Concierge will be integrated into the company’s Fly Delta app. While it aims to simplify travel planning, a DA like this can introduce several potential security risks.

In Table 3, we mapped this AI concierge’s capabilities based on the digital assistant framework discussed in our previous research.

Table 3. Level assignments of Delta Concierge’s capabilities as a digital assistant

- Agency – Given the limited available information, we assign a 0 (“Informational only”) for Agency, though there is a possibility it could be 1 (“Single commands”), depending on how the assistant’s ability to “take actions on the user’s behalf” is defined.

- Task Complexity – We assign a score of 3 (“Autonomous actions”) to the A2 capability, as the assistant can execute predefined actions independently.

- Required Mental Shift – The assistant primarily provides notifications and reminders (such as travel updates), suggesting a low to moderate level of user engagement, rated at 2 (“Trust of DA instructions”). If its ability to take action increases, engagement could rise to 3 (“Basic actions delegation”).

- Conversational Complexity – Rated as 0 (“No conversation”), since the assistant does not support free-flowing conversations and instead operates through structured interactions.

- Input Multimodality – Given its reliance on speech input, it is rated 2 (“Speech-to-Text”).

- Output Multimodality – Rated at 1A (“Text”) as it primarily produces text and graphic-based responses.

- Ubiquity – The assistant appears to be integrated into airline services and mobile applications, warranting a score of 2 (“Mobile”), with potential future expansion to other platforms.

- Planning and Logical Reasoning – Rated 2 (“1-2 steps inference”), as it can process structured logical actions (like recognizing an expiring visa and sending a notification).

- User Knowledge – Rated 2 (“Dynamic knowledge”), as it possesses dynamic knowledge of user travel habits. However, it does not qualify for community-level knowledge (3), since it does not track user interactions beyond individual profiles.

- Ecosystem Integration – Rated 1 (“Skill-based”), as it integrates with partner services like Uber or Joby, allowing some level of interaction with external platforms.

The Delta digital assistant handles sensitive information, including travel preferences, itineraries, and payment details, which makes it a lucrative target for cybercriminals. A data breach could lead to identity theft or financial fraud. Attackers might also exploit the concierge to send phishing messages, such as fraudulent travel alerts, tricking users into revealing passwords or payment details. Although these interactions will take place within the app, even an email impersonating the travel concierge would be more believable if it quoted data an attacker had managed to steal.

In the future, this class of travel-related assistants is poised to become much more powerful, handling full travel reservations on behalf of the user across multiple providers. However, this also exposes them to new classes of threats, such as supply chain attacks and data poisoning, where a miscreant might pollute travel availability results to inject malicious information. For people of interest to nation-states, this could also aid surveillance.

Weak authentication mechanisms increase the risk of unauthorized account access. If attackers gain control of user accounts, they could alter travel plans, book unauthorized trips, or steal payment information. Our past research has even shown how cybercriminals love to travel the world on the cheap. These vulnerabilities undermine user trust and expose travelers to potential financial and logistical harm.

For AI travel concierges, User Knowledge (C2) is a main attack vector, ranked at level 2 as previously mentioned. This means the following future threats apply:

- Skill squatting

- Trojan skills

- Malicious metaverse avatar DA phishing

- DASE

- The future of malvertising

- The future of spear phishing

- Semantic SEO abuse

- Agents abuse

- Malicious external DAs
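To make the three mappings easier to compare side by side, the sketch below restates the concrete levels we assigned in Tables 1 to 3 as plain Python data and lists the capabilities on which the assistants differ. This is an illustrative aid under stated assumptions, not part of the Digital Assistant Matrix itself: the dictionary layout and the helper name `differing_capabilities` are hypothetical; where we gave a range (Bee’s Ubiquity of 2 or 3), the sketch records the higher value; and the alternative scores noted for Agency and Required Mental Shift are left out.

```python
# Concrete levels assigned in Tables 1-3 of this entry.
# Assumptions (illustrative only): alternative Agency / Mental Shift scores are
# omitted, Bee's Ubiquity range (2 or 3) is recorded as the higher value, and
# levels are strings because the matrix uses sublevels such as "1A".
SCORES = {
    "Bee": {
        "Agency": "0",
        "Task Complexity": "3",
        "Required Mental Shift": "2",
        "Conversational Complexity": "0",
        "Input Multimodality": "2",
        "Output Multimodality": "1A",  # written as 1.A in Table 1
        "Ubiquity": "3",  # 2 for the iPhone app, 3 for the wearable
        "Planning and Logical Reasoning": "2",
        "User Knowledge": "2",
        "Ecosystem Integration": "2",
    },
    "Umi": {
        "Agency": "0",
        "Task Complexity": "3",
        "Required Mental Shift": "2",
        "Conversational Complexity": "0",
        "Input Multimodality": "1",
        "Output Multimodality": "2",
        "Ubiquity": "2",
        "Planning and Logical Reasoning": "2",
        "User Knowledge": "2",
        "Ecosystem Integration": "1",
    },
    "Delta Concierge": {
        "Agency": "0",
        "Task Complexity": "3",
        "Required Mental Shift": "2",
        "Conversational Complexity": "0",
        "Input Multimodality": "2",
        "Output Multimodality": "1A",
        "Ubiquity": "2",
        "Planning and Logical Reasoning": "2",
        "User Knowledge": "2",
        "Ecosystem Integration": "1",
    },
}


def differing_capabilities(scores):
    """Return {capability: {assistant: level}} for capabilities whose levels differ."""
    assistants = list(scores)
    # Use the first assistant's capability order as the reporting order.
    capabilities = list(scores[assistants[0]])
    result = {}
    for cap in capabilities:
        levels = {name: scores[name][cap] for name in assistants}
        if len(set(levels.values())) > 1:
            result[cap] = levels
    return result


if __name__ == "__main__":
    for cap, levels in differing_capabilities(SCORES).items():
        print(f"{cap}: {levels}")
```

With these assumptions, the assistants diverge only on Input Multimodality, Output Multimodality, Ubiquity, and Ecosystem Integration; every other capability receives the same level in all three tables.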

Trend Vision One™

Trend Vision One™ is a cybersecurity platform that simplifies security and helps enterprises detect and stop threats faster by consolidating multiple security capabilities, enabling greater command of the enterprise’s attack surface, and providing complete visibility into its cyber risk posture. The cloud-based platform leverages AI and threat intelligence from 250 million sensors and 16 threat research centers around the globe to provide comprehensive risk insights, earlier threat detection, and automated risk and threat response options in a single solution.

Conclusion

AI digital assistants vary in capability, and their capabilities shape the security threats they face. These systems range from basic voice-activated tools to advanced AI-driven agents that execute transactions, manage IoT ecosystems, and automate complex workflows. While AI assistants continue to evolve, many current implementations lack true multimodal capabilities, seamless integration, and contextual awareness. Despite these limitations, they already expose users to risks such as data privacy breaches, adversarial AI manipulation, and sophisticated social engineering attacks. By assessing these AI digital assistants against our Digital Assistant Matrix as representative examples of this emerging technology, we examined the security issues these services might face in real-world usage.

Our previous research underscores the importance of mapping AI assistant capabilities to corresponding security threats to implement targeted protections. Each capability tier presents distinct vulnerabilities:

- Basic AI assistants (like voice-controlled applications handling simple queries) are susceptible to data interception, eavesdropping, and unauthorized access.

- Context-aware assistants (like systems that adapt responses based on user behavior and history) introduce risks such as session hijacking, inference-based privacy leaks, and manipulated decision-making.

- Highly integrated AI systems (like assistants that automate cross-platform tasks, execute financial transactions, and make autonomous decisions) face threats including deep social engineering, adversarial input attacks, automation abuse, and privilege escalation exploits.

Security measures have not kept pace with the expanding capabilities of AI assistants. Many current implementations remain fragmented, lacking robust multimodal interactions, seamless ecosystem integration, and adaptive security mechanisms. This gap underscores the need for proactive defenses that anticipate AI’s growing autonomy. Developers must design security frameworks that scale with AI evolution rather than retrofitting protections after vulnerabilities emerge.

User awareness must also align with AI advancements. Digital assistants are often perceived as simple tools, leading to an underestimation of security risks associated with granting them access to sensitive data and decision-making authority. Users need to recognize the trade-offs involved in relying on increasingly autonomous AI systems.

As the industry moves toward more intelligent, multimodal, and deeply integrated AI assistants, security must remain a foundational consideration. Without defenses aligned to evolving AI capabilities, these systems risk becoming high-value attack targets rather than trusted digital allies.
