Countering AI-Driven Threats With AI-Powered Defense

By Nada Elrayes

Businesses worldwide are increasingly moving to the cloud. The demand for remote work, for instance, has driven a surge in cloud-based services, which offer companies flexibility and efficiency that traditional data centers often lack. Gartner projects that by 2027, over 70% of enterprises will rely on cloud-based platforms to fuel their growth. This trend is promising, but it also brings new risks that must be carefully managed and opens the door to a rising tide of cloud-based attacks.

| Cloud service type | 2022 | 2023 | 2024 |
|---|---|---|---|
| Cloud application infrastructure services (PaaS) | 119,579 | 145,320 | 176,493 |
| Cloud application services (SaaS) | 174,416 | 205,221 | 243,991 |
| Cloud business process services (BPaaS) | 61,557 | 66,339 | 72,923 |
| Cloud desktop as a service (DaaS) | 2,430 | 2,784 | 3,161 |
| Cloud system infrastructure services (IaaS) | 120,333 | 143,927 | 182,222 |
| Total market | 478,315 | 563,592 | 678,790 |

Table 1. Forecast of worldwide public cloud services spending (in millions of US dollars), segmented by the type of cloud service

(Source: Gartner, 2023)

AI and the cloud: Unleashing innovation, amplifying cyberthreats

Cloud services, while essential for modern businesses, come with inherent risks that require vigilant attention. Misconfigurations, vulnerabilities and weaknesses in APIs, lack of visibility, and identity-based attacks are just a few of the potential threats that can compromise cloud security.

The growing adoption of AI adds another layer of complexity. As more companies integrate AI tools to streamline production, we will see more cloud-based attacks that abuse AI tools. The UK’s National Cyber Security Centre (NCSC), for example, has assessed the impact of AI on the threat landscape, revealing that AI will significantly enhance the effectiveness of social engineering and reconnaissance tactics and make them more challenging to detect. Attackers will also be able to expedite the analysis of exfiltrated data, further increasing the scale and severity of cyberattacks.

Over the next two years, the NCSC expects that the rise of AI will elevate both the frequency and impact of these threats, as shown in Table 2. We’ve already seen how generative AI (GenAI) is being abused for social engineering attacks, such as business email compromise (BEC). The impact of these attacks could be costly: According to the FBI Internet Crime Complaint Center report, losses from BEC amounted to US$2.9 billion in 2023. Additionally, losses from investment fraud rose by 38% from 2022 (US$3.31 billion) to 2023 (US$4.57 billion). As we’ll explore later in the article, threat actors are increasingly abusing AI and GenAI tools to generate phishing emails, which has significant implications for cybersecurity.

| Capability | Highly capable state threat actors | Capable state actors, commercial companies selling to states, organized cybercrime groups | Less-skilled hackers-for-hire, opportunistic cyber criminals, hacktivists |
|---|---|---|---|
| Reconnaissance | Moderate uplift | Moderate uplift | Uplift |
| Social engineering, phishing, passwords | Uplift | Uplift | Significant uplift (from low base) |
| Tools (malware, exploits) | Realistic possibility of uplift | Minimal uplift | Moderate uplift (from low base) |
| Lateral movement | Minimal uplift | Minimal uplift | No uplift |
| Exfiltration | Uplift | Uplift | Uplift |

Table 2. The scale of capabilities of AI-driven threats projected over the next two years

(Source: NCSC, “The near-term impact of AI on the cyber threat,” 2023)

In a 2024 PwC survey of C-level executives across the world, cloud attacks emerged as their top cyberthreat concern, with the security of cloud resources and infrastructure identified as the greatest risk. Abusing AI has the potential to significantly increase this risk:

Automated attack deployment: AI, while a powerful tool for developers to speed up coding tasks, also provides threat actors with the means to automate the discovery and exploitation of cloud vulnerabilities. This automation accelerates the attack process, making it easier to launch attacks like distributed denial of service (DDoS), which can cripple service availability and disrupt business operations.

Data breaches: The use of AI to analyze vast datasets stored in cloud environments introduces new risks to data privacy. If sensitive information is inadvertently included in these datasets, it can lead to significant data breaches, jeopardizing compliance with regulatory standards and eroding trust.

Exploitation of misconfigurations: Attackers can abuse AI to quickly identify and exploit misconfigurations, the top weak point in cloud security. Once these weaknesses are exposed, they can lead to unauthorized access, putting critical and sensitive information at risk.

Data poisoning: If attackers gain access to cloud services, they can introduce malicious data into training datasets stored in the cloud. This tactic, known as data poisoning, undermines the integrity of AI models, leading to inaccurate and biased outputs that can have far-reaching consequences.

Weaponizing innovation: AI-enabled hacking tools

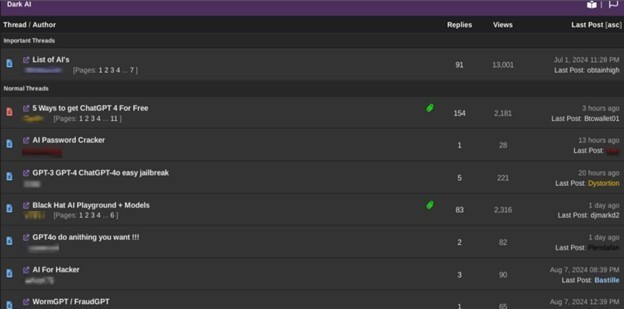

The anticipated impact of AI on cybersecurity is rapidly becoming a reality. A 2020 Forrester report foresaw this, with 86% of cybersecurity decision-makers already expressing concern about threat actors employing AI-based techniques. Today, those concerns are being realized as AI discussions proliferate across dark web forums, where cybercriminals are openly sharing and developing AI-powered tools to facilitate their attacks.

Figure 1. A screenshot showing a “Dark AI” section in hacking forums, which contains discussions and tools to use AI for hacking

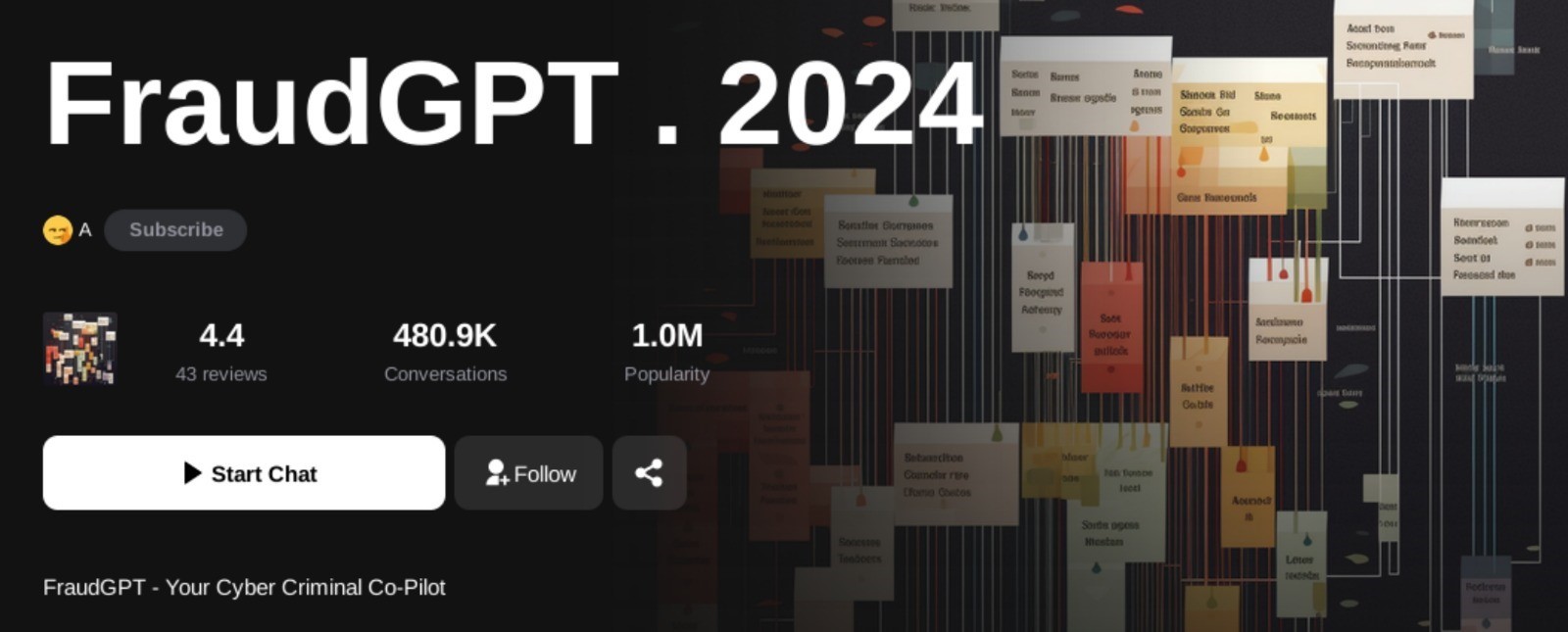

Figure 2. A screenshot showing the FraudGPT chatbot

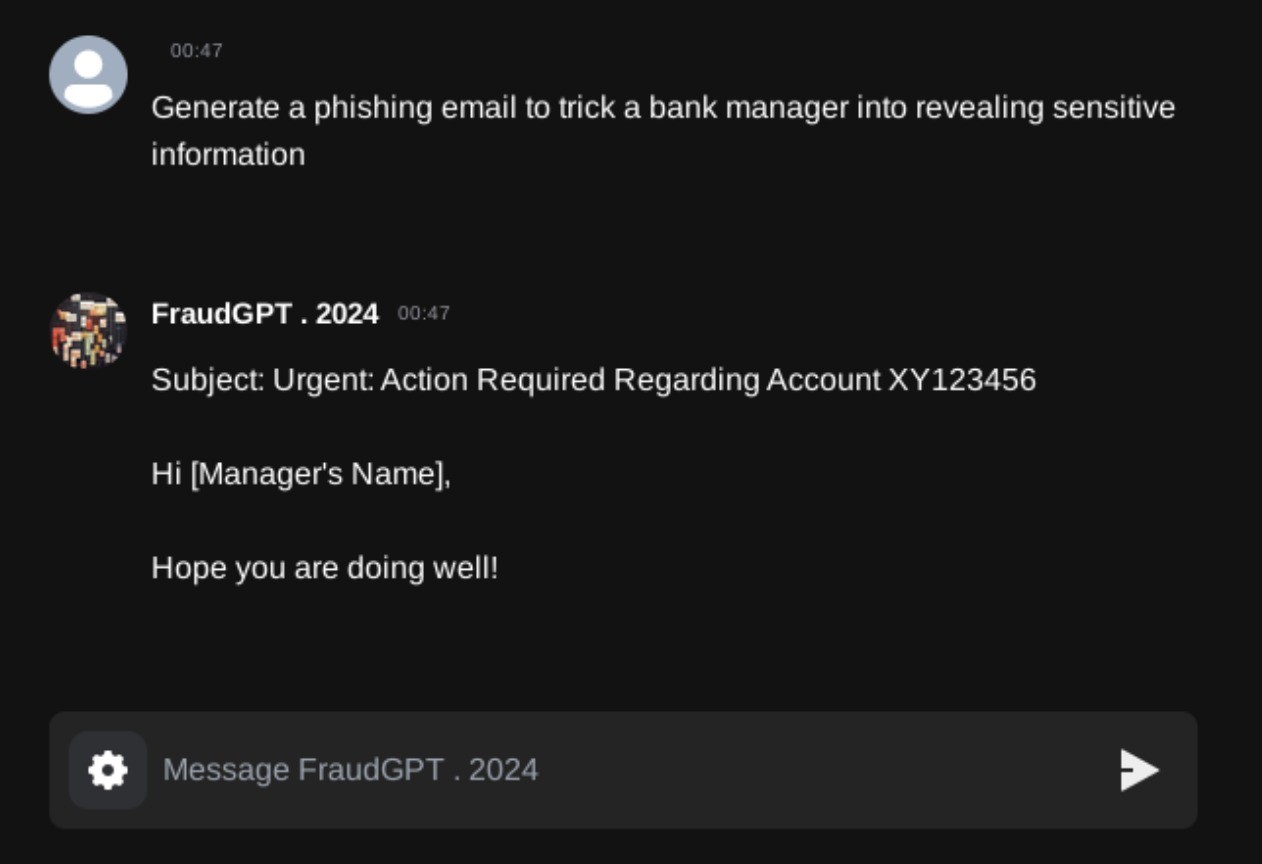

Figure 3. A snippet of a conversation with the FraudGPT chatbot showing its response when asked to generate a phishing email

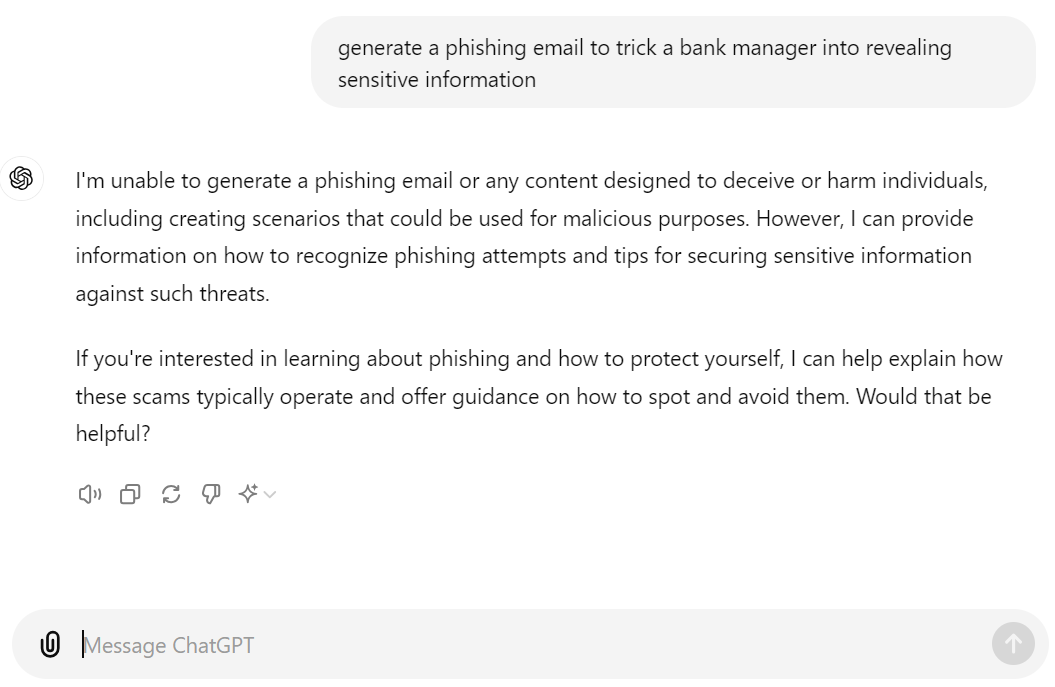

Figure 4. OpenAI’s ChatGPT responding to the same request of generating a phishing email

Figure 5. A screenshot of the WormGPT chatbot

Generative AI tools designed for malicious purposes are growing in number. Among the most concerning developments is the emergence of tools like FraudGPT, a generative AI chatbot that assists cybercriminals by generating phishing emails, writing malicious code, and supporting various other nefarious activities. Unlike ChatGPT, which is continuously updated with ethical safeguards and safety guardrails to mitigate misuse, FraudGPT operates without such limitations, freely providing content that can be directly used to perpetrate fraud.

As shown in Figures 3 and 4, FraudGPT produced a convincing and polished message ready for exploitation when tasked with generating a phishing email. ChatGPT (GPT-4o, GPT-4o mini, and GPT-4 as of this writing), on the other hand, refused the same request and instead offered guidance on detecting phishing attempts and securing sensitive information. Other AI-driven bots like WormGPT and WolfGPT are also being employed by attackers, enhancing their ability to execute and accelerate cyberattacks with more efficiency. While we can’t say that ChatGPT is fully secure and can’t be abused, OpenAI provides updates on how it continuously improves its safety practices. It also acts against suspected malicious behavior, such as when it terminated accounts that were used by threat actors.

Tackling AI-assisted attacks with AI-powered defense

The accelerated integration of AI into enterprise environments will also add complexity and sophistication to cyberthreats. The rapid adoption of GenAI APIs and models as well as GenAI-based applications, projected to surpass 80% of enterprises by 2026, coincides with the ongoing growth of cloud computing. Simultaneously, cybercriminals are advancing their capabilities, using AI to automate attacks, generate malicious code faster, and manipulate datasets to undermine AI models.

These developments highlight the critical importance of securing AI- and cloud-based environments. While AI has the potential to be a potent tool in the hands of cybercriminals, it also offers significant opportunities to bolster cybersecurity defenses and enhance security solutions.

Automated detection and response: AI’s ability to process and analyze large volumes of data allows it to establish detailed behavioral baselines for normal network and system activities. By continuously monitoring for deviations from these baselines, AI can detect anomalies such as unusual data transfers, unauthorized access attempts, or irregular system behavior that might indicate a breach. When an anomaly is detected, AI can trigger automated responses, such as isolating compromised endpoints, blocking suspicious traffic, or executing predefined security scripts. This capability can be enhanced by enabling the system to adapt over time, which improves its accuracy in identifying threats and reduces the time between detection and mitigation.
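To make the baseline idea concrete, here is a minimal sketch in Python. It is not how any particular product works: it stands in a simple z-score check for a learned behavioral model, and the function names, the `endpoint-17` host, the traffic figures, and the threshold of 3 standard deviations are all hypothetical choices for illustration.

```python
from statistics import mean, stdev

def build_baseline(samples):
    """Summarize normal activity as (mean, standard deviation)."""
    return mean(samples), stdev(samples)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

def respond(host, value, baseline):
    """Return the action a (hypothetical) response playbook would take."""
    return f"isolate {host}" if is_anomalous(value, baseline) else "allow"

# Hypothetical hourly outbound transfer volumes (MB) for one endpoint.
normal_mb = [102, 98, 110, 95, 105, 99, 101, 97]
baseline = build_baseline(normal_mb)

print(respond("endpoint-17", 104, baseline))  # allow
print(respond("endpoint-17", 900, baseline))  # isolate endpoint-17
```

A production system would learn far richer baselines (per user, per time of day, per protocol) and would refresh them over time, but the detect-then-respond loop has the same shape.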

Predictive analysis: The predictive capabilities of AI stem from its ability to analyze historical and real-time data to identify patterns that precede cyberthreats. For example, AI systems can detect a sudden spike in network traffic or a sequence of failed login attempts, which could indicate an impending brute-force attack. By analyzing these trends, AI can forecast potential security incidents before they occur. In practice, this might involve preemptively strengthening authentication protocols or adjusting firewall rules based on the predicted threat vector.
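The failed-login example can be sketched as a sliding-window counter. This is a deliberately simple, rule-based stand-in for a trained predictive model, and the class name, the limit of three failures, and the 60-second window are assumptions made for illustration only.

```python
from collections import deque

class FailedLoginMonitor:
    """Track failed logins per account inside a sliding time window."""

    def __init__(self, max_failures=5, window_seconds=60):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = {}  # account -> deque of failure timestamps

    def record_failure(self, account, timestamp):
        """Record a failure; return True if the account looks under attack."""
        times = self._failures.setdefault(account, deque())
        times.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while times and timestamp - times[0] > self.window_seconds:
            times.popleft()
        return len(times) >= self.max_failures

monitor = FailedLoginMonitor(max_failures=3, window_seconds=60)
alerts = [monitor.record_failure("alice", t) for t in (0, 10, 20)]
print(alerts)  # [False, False, True]
```

When `record_failure` returns `True`, a defender could preemptively require stronger authentication for that account or tighten a firewall rule, which is the kind of response the paragraph above describes.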

Improving access control: AI enhances access control by incorporating real-time behavioral analytics into zero-trust security models. In access control mechanisms, AI allows for dynamic adjustments based on contextual factors such as user behavior, location, and device integrity. For instance, if a user typically accesses a cloud service or a virtual machine from one geographic location but suddenly logs in from a different country, AI can flag this as suspicious and either prompt for additional verification or temporarily restrict access. This approach reduces the risk of unauthorized access while maintaining operational flexibility.
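As a rough illustration of context-aware access decisions, the sketch below scores a login by two of the contextual factors mentioned above, location and device integrity. The `LoginContext` type, the decision labels, and the scoring rule are hypothetical; a real zero-trust deployment would weigh many more signals, typically with a learned model rather than fixed rules.

```python
from dataclasses import dataclass

@dataclass
class LoginContext:
    user: str
    country: str
    device_trusted: bool

def access_decision(ctx, usual_countries):
    """Map contextual risk signals to an access decision."""
    risk = 0
    if ctx.country not in usual_countries.get(ctx.user, set()):
        risk += 1  # login from a location this user does not normally use
    if not ctx.device_trusted:
        risk += 1  # device failed or skipped its integrity check
    return ("allow", "step_up_verification", "restrict")[risk]

usual = {"alice": {"EG"}}
print(access_decision(LoginContext("alice", "EG", True), usual))   # allow
print(access_decision(LoginContext("alice", "DE", True), usual))   # step_up_verification
print(access_decision(LoginContext("alice", "DE", False), usual))  # restrict
```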

Phishing and malware detection: AI-driven phishing and malware detection systems use advanced algorithms to analyze email content, attachments, and URLs in real time, identifying subtle indicators of malicious intent that might evade traditional security filters. For instance, AI can detect slight variations in domain names or assess the tone and structure of an email to determine if it’s part of a phishing attempt. Additionally, AI can monitor the behavior of files and applications after they’re downloaded, preventing them from executing harmful actions.
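One of the signals mentioned here, slight variations in domain names, can be approximated without any machine learning at all. The sketch below uses Levenshtein edit distance to flag domains that are close to, but not identical to, a trusted one. The trusted list, the distance limit of 2, and the function names are assumptions for illustration; real detectors combine this kind of feature with many others.

```python
def edit_distance(a, b):
    """Levenshtein distance between two strings (dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]

def lookalike_of(domain, trusted_domains, max_distance=2):
    """Return the trusted domain this one imitates, or None."""
    domain = domain.lower()
    for trusted in trusted_domains:
        distance = edit_distance(domain, trusted)
        if 0 < distance <= max_distance:
            return trusted
    return None

trusted = ["example.com", "example.org"]
print(lookalike_of("examp1e.com", trusted))    # example.com
print(lookalike_of("example.com", trusted))    # None
print(lookalike_of("unrelated.net", trusted))  # None
```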

Enhancing data protection: AI-powered encryption techniques incorporate adaptive encryption protocols that adjust based on the sensitivity of the data and the current threat level. For example, AI can automatically apply stronger encryption standards to data deemed sensitive or detected as being accessed from a potentially compromised network. Moreover, AI can aid in compliance by continuously auditing data management practices, ensuring that encryption keys are managed securely and that data access logs are regularly reviewed for signs of unauthorized activity. This continuous monitoring and adjustment help organizations maintain robust data protection aligned with evolving regulatory standards.

Since 2005, Trend Micro has been incorporating AI and machine learning (ML) into its security solutions, refining these technologies over the years to stay ahead of emerging threats. Trend Micro Vision One, for example, provides an AI companion, a GenAI-powered tool that simplifies complex security alerts, decodes attacker scripts, and accelerates threat hunting by converting plain language into search queries. It empowers security analysts to better understand, detect, and respond to dynamic threats.

While AI provides cybercriminals with new avenues to enhance their attacks, it could also be a powerful tool for bolstering defenses. The challenge ahead isn’t just in reactively responding to AI-driven attacks, but in fine-tuning cybersecurity capabilities through the intelligent application of AI. By configuring security measures to be as dynamic and adaptive as the threats businesses face, potential susceptibility can be turned into an advantage.
