Generative AI in Elections: Beyond Political Disruption

Elections are not just an opportunity for nation states to use Generative AI tools to damage a politician’s reputation. They are also an opportunity for cybercriminals to use Generative AI tools to orchestrate social engineering scams.

By David Sancho and Vincenzo Ciancaglini

Key Takeaways:

- Increasingly sophisticated and accessible AI tools, including deepfakes and Large Language Models, equip cybercriminals with potent means to disrupt political landscapes and perform large-scale scams, especially during election seasons.

- Two types of attackers use deepfakes for manipulation: nation states/political factions aim to tarnish a candidate's reputation to influence election results, while cybercriminals use a target's influence to add credibility to a social engineering scheme, such as fake cryptocurrencies or fundraisers.

- Large Language Models (LLMs) like OpenAI’s ChatGPT have seen massive development and can be used by criminals to scam voters. They can sift through social media profiles to identify potential victims, create polarizing social media groups, and craft personalized phishing messages at scale.

- As cybercriminals use efficient AI tools for social engineering and impersonation, there is potential for increased cybercrime during election seasons. The US election, with its high number of voters and the widespread use of the English language, could be particularly lucrative for criminals.

Setting the scene

As we enter the climax of the global 2024 election season, with all eyes on the US elections this November, the rising threat posed by deepfakes and other Generative AI technologies cannot be ignored. Politicians are prime targets for deepfakes because they are well-known public figures and have great influence over their country’s citizens. The heightened public attention surrounding them during elections further intensifies this issue, declaring open season for those looking to exploit these technologies for unscrupulous purposes.

Two distinct categories of adversaries are keenly interested in exploiting these AI tools to simulate the likeness of politicians:

- Nation states and political factions seeking to destabilize: Their primary objective is to sully a candidate's reputation, potentially swaying the election in their favor.

- Cybercriminals: These bad actors harness a target's influence to add credibility to their social engineering plots, convincing victims to act against their own interests, such as by investing in a fraudulent cryptocurrency or contributing to a fake fundraising campaign.

Attacking reputations

The power of deepfakes and LLMs to distort a politician's reputation in the hopes of manipulating election results is a stark reality in today's social media age. These tools have proven capable of implanting false memories and fueling the "fake news" phenomenon. While disinformation campaigns are not new, the election season provides a unique platform for these disinformation brokers, as voters are more open to political messages. Here are a few recent examples.

In the Polish national election in 2023, the largest opposition party broadcast an election campaign ad that mixed genuine clips of words spoken by the prime minister with AI-generated fake ones. That same year, a fake audio clip of the UK Labour Party’s candidate, Keir Starmer, swearing at staffers went viral and gained millions of hits on social media. In the run-up to the 2024 US election, we’ve also seen deepfakes of Donald Trump being arrested.

In these cases, deepfakes were used to erode the candidates’ reputations and cost them support in the election race. You can find more about this topic in our recent article, “The Illusion of Choice: Uncovering Electoral Deceptions in the Age of AI.”

Exploiting elections for monetary gain

Nation states are not the only entities interested in elections, which also present a highly attractive opportunity for cybercriminals. Analyzing the prevalent trends in cybercriminal activity involving deepfakes, we see them being leveraged in several ways.

We see an increased use of deepfakes in targeted cyberattacks. In these scenarios, cybercriminals use deepfakes to convincingly mimic a person known to the victim, with the primary objective of financial exploitation. This strategy has been detected in both business and personal environments. It ranges from business email compromise (BEC) scams, which involve impersonating a corporate executive, to "virtual kidnapping" scams that prey on personal relationships. The success of these scams hinges on the ability to persuade victims that they are genuinely communicating with the impersonated person via manipulated audio or video content.

Furthermore, the application of deepfakes extends beyond personalized attacks. We've witnessed cases where cybercriminals employ deepfakes to conduct large-scale scams. In these instances, the attacker impersonates a public figure, leveraging their standing and influence to promote dubious financial schemes. At present, deceptive cryptocurrency operations seem to be a popular choice amongst cybercriminals, although their tactics are not restricted to this avenue alone.

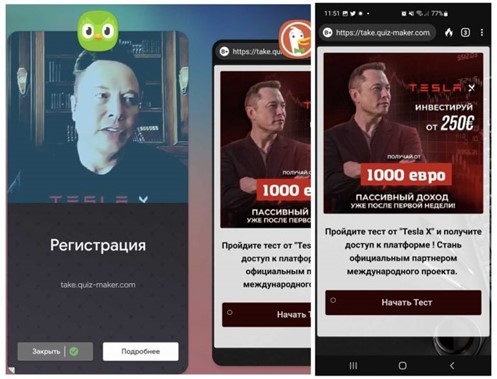

Figure 1: Elon Musk deepfake used in a fake advertisement and during a live stream, after attackers took over several legitimate YouTube channels

Scams of this nature can occur throughout the year, but election season provides a prime opportunity for cybercriminals to use deepfake politicians to target potential voters. As elections draw near, voters become more receptive to messages from candidates, creating an ideal environment for financial scamming.

The GenAI toolset

The election landscape is evolving due to the growing sophistication and availability of AI tools, a shift that cybercriminals are exploiting to conduct AI-driven social engineering attacks during election seasons. The emergence of user-friendly, point-and-click deepfake creation tools has transformed the field, replacing complex tools like DeepFaceLab.

Generative AI equips cybercriminals with advanced tools to create photorealistic virtual personas of political candidates. These can deceive voters into donating money, investing in fraudulent schemes, or supporting counterfeit fundraisers. Real-life events, such as Donald Trump promoting his NFT trading cards or Elon Musk endorsing the Dogecoin cryptocurrency, show how plausible such scams can appear to victims.

Given the wide public exposure that election candidates receive, cybercriminals find fertile ground for exploiting AI tools in various threat scenarios.

Large language models: Voters' social engineering at scale

Large language models (LLMs), such as OpenAI’s ChatGPT and Meta's Llama, have seen significant advancements in recent years, with improved performance, widespread availability, and lower computational needs.

Hugging Face, a public platform for open-source AI models, currently hosts over a million AI models, including 32,000 open-source LLMs. These models, which cater to various needs including foreign languages, text generation, and chatbots, are accessible to all, including cybercriminals.

In the underground digital world, LLM services are increasingly being exploited for illicit activities, particularly in the form of jailbreak-as-a-service, which enables users to access regular services like ChatGPT without their built-in guardrails. The proliferation of these services presents a potential threat for voters, as criminals can leverage LLMs to orchestrate sophisticated scams. Here are three use cases:

Victim reconnaissance and baiting

The first step in orchestrating voter scams is identifying the right interest groups. As voter factions tend to be polarized, criminals aim to capitalize on this division. In this process, Large Language Models (LLMs) can be remarkably useful in several ways:

- They can analyze social media profiles to identify individuals with specific interests. By vetting public information, LLMs can deduce a person's political leanings or policy preferences based on their social media activities.

- LLMs can also assist in forming interest groups on social media platforms. For instance, a criminal could establish a Facebook group like “Candidate X for President - Florida Chapter,” generating contentious posts to draw in potential victims.

Building infrastructure

For a scam to be successful, the scammer may require a network of deceptive platforms, such as counterfeit websites promoting fabricated policies or messages from a candidate, sham fundraisers, endorsement sites, and more. These platforms serve to reinforce the misleading narrative they aim to propagate. LLMs can swiftly and effortlessly assemble these sites, even utilizing assets misappropriated from official campaign sites. Additionally, LLMs can enhance search engine optimization, ensuring these fraudulent websites rank high in search results, thereby boosting their perceived legitimacy.

Bespoke messages for maximum impact

Many voters are accustomed to receiving generic emails from candidates, legitimate or otherwise, that address all voters with minimal personalization. However, determined criminals can create phishing messages tailored to individual victims on a large scale. Targeting victims within specific interest groups allows for more focused messages since the topics aren't diverse. Tools like ChatGPT can merge voter interests, the fraudulent message, and elements from the voter's social media account to create highly accurate, targeted texts. This can be done on a grand scale, even in different languages, and the content can be used for phishing emails or scripts for deepfake videos aimed specifically at the user.

Deepfakes: Going beyond political disruption

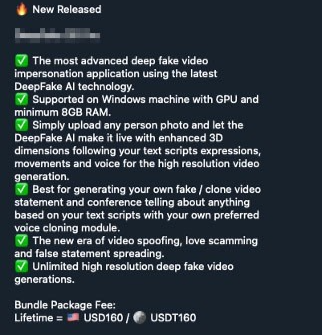

Deepfakes form a critical part of the AI toolkit used by criminals during elections. Though commonly associated with political propaganda and disruption, deepfakes are increasingly being exploited for financially motivated scams. Modern deepfake creation tools are now user-friendly, requiring minimal technical knowledge or computational power, and allow personalized video generation at scale.

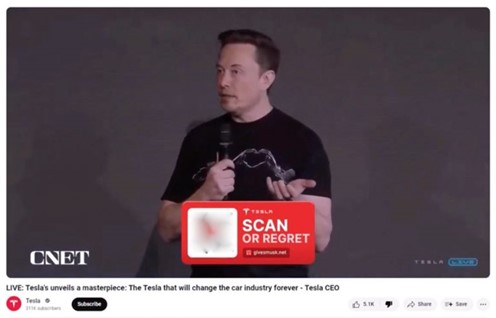

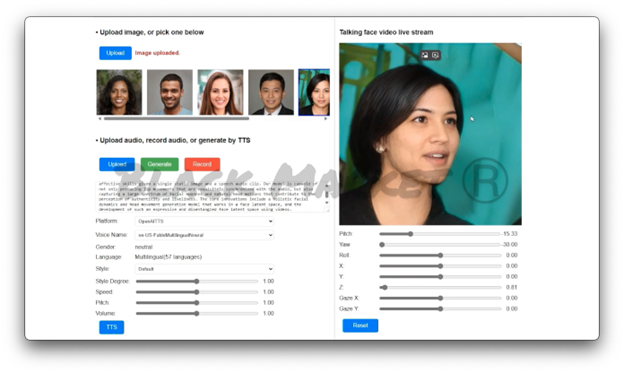

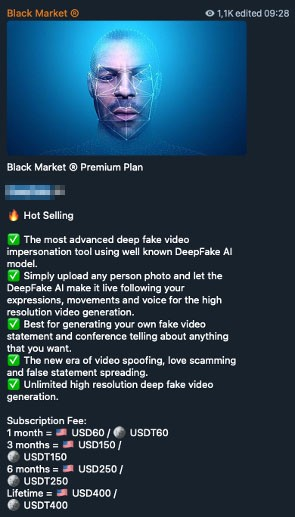

Figure 2: Deepfake 3D Pro app and offering in the underground

One such tool is Deepfake 3D Pro, available in the criminal underground for a lifetime subscription of 160 USD. This tool enables the creation of videos featuring a 3D avatar that can impersonate anyone. The avatar can recite a script or mimic recorded audio, which can be generated using popular voice cloning services with just a few seconds of the candidate's original voice.

Voice cloning provides another avenue for impersonating candidates for financial gain. Criminals can generate false soundbites from candidates, with the fake avatar endorsing a scam or reading a phishing script targeting a specific victim. When deployed on a large scale, voice cloning tools create a seamless link between the text-generating capabilities of LLMs and deepfake avatars.

Additionally, tools like Deepfake AI, available for 400 USD in underground marketplaces, allow criminals to superimpose the candidate’s face onto an actor’s video in a lifelike manner. These tools can be used to promote a bogus crypto project, solicit funds for a fake fundraiser, or validate a lie to support a scam.

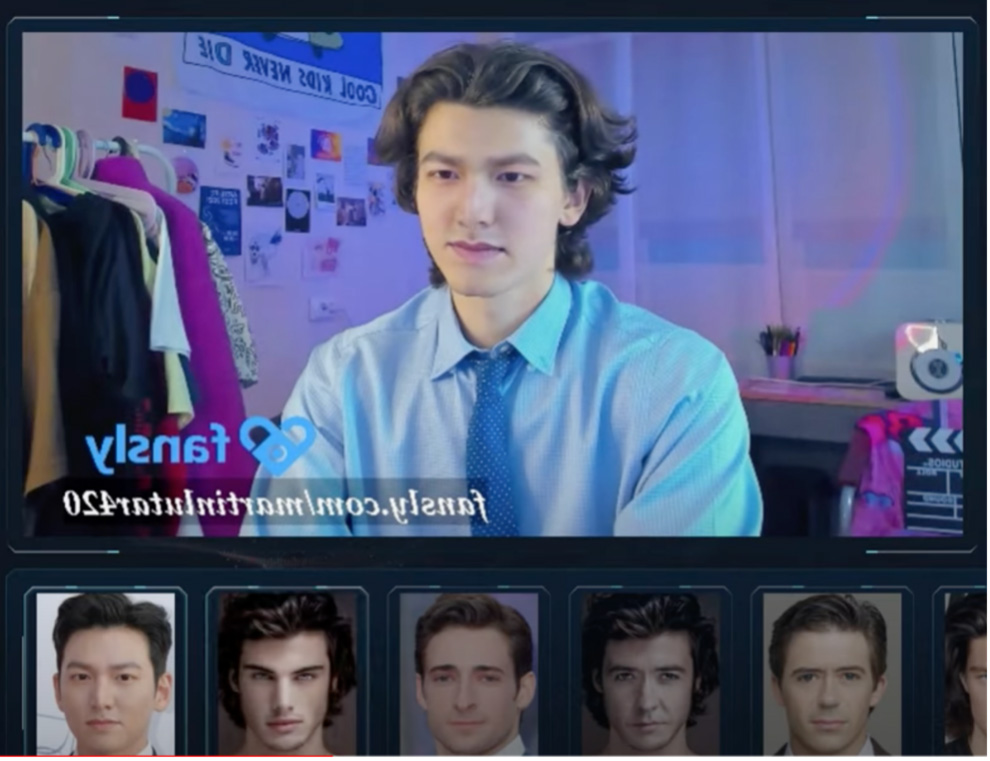

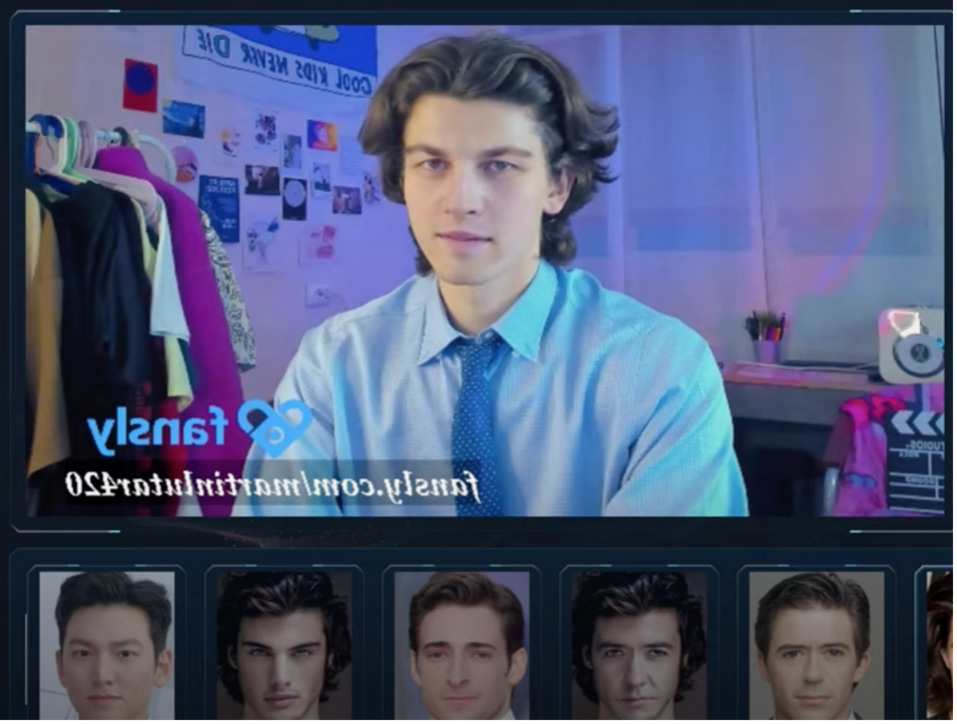

Figure 3: Deepfake AI offering in the underground

Complementing these methods, real-time impersonation is possible with live face replacement tools like FaceSwap and AI VideoCallSpoofer. For around 200 USD for a lifetime subscription, these tools enable a criminal to perform a deepfake impersonation during a live video call. While this may not be suited for large-scale attacks, it could trick the candidate's close associates. This opens the possibility for more sophisticated attacks, such as the impersonation of a Ukrainian diplomat that almost deceived a US senator in September 2024. The potential for monetary gain using this technology cannot be overlooked.

Figure 4: Screenshots of FaceSwap

What can we do about it?

Is it possible for voters to distinguish truth from falsehood? The focus here isn't on election interference, but rather on evading the snares set by cybercriminals. It's often easier to prevent scams than to change one's viewpoint.

To help mitigate the damage a deepfake might cause, one initiative, for instance, aims to watermark synthetic images. This allows people to identify the creator of the image, the alterations made, and by whom, helping them recognize deepfakes. More information on this watermarking initiative can be found on the C2PA website. It's encouraging to note that major players like OpenAI, Meta, Google, and Amazon are all involved in this initiative.
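As a rough illustration of how such provenance data can travel with an image, the sketch below checks whether a JPEG file appears to carry an embedded C2PA manifest. It assumes the manifest is stored in JPEG APP11 segments as a JUMBF box labelled `c2pa`, which reflects our understanding of the C2PA specification. The function names are hypothetical, and the check only detects that a manifest seems to be present. It does not validate signatures or content, so official C2PA verification tools should be used for any real trust decision.

```python
import struct

APP11 = 0xEB  # JPEG APP11 marker, assumed here to carry JUMBF boxes
SOS = 0xDA    # start of scan: compressed image data follows this marker


def iter_jpeg_segments(data: bytes):
    """Yield (marker, payload) for each header segment of a JPEG byte string."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break  # malformed stream; stop scanning
        marker = data[pos + 1]
        if marker == SOS:
            break  # header segments end where the image data begins
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if length < 2:
            break  # invalid length; stop scanning
        yield marker, data[pos + 4:pos + 2 + length]
        pos += 2 + length


def may_contain_c2pa_manifest(data: bytes) -> bool:
    """Heuristic: True if an APP11 JUMBF segment mentions the 'c2pa' label."""
    for marker, payload in iter_jpeg_segments(data):
        if marker == APP11 and payload.startswith(b"JP") and b"c2pa" in payload:
            return True
    return False
```

A caller would read an image file in binary mode and pass its bytes to `may_contain_c2pa_manifest`. A `False` result does not mean an image is fake, since most genuine images carry no manifest today, and a `True` result does not mean it is authentic until the manifest has been cryptographically verified.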

Additionally, Trend Micro offers a free application specifically designed to detect deepfake video calls, capable of flagging fraudulent conversations in real-time. Check out the Trend Micro Deepfake Inspector on the Trend Micro website.

In the future, we might see politicians pledging not to use social media for fundraising appeals or investment promotions. However, this won't entirely eradicate the threat of criminal deepfakes, as individuals are trusting by nature.

Final thoughts

Thankfully, so far, we have not seen too many examples of the attacks we describe here. However, the current climate offers a perfect storm for cybercriminals to deploy these tactics. The 2024 US election, with its large voter base and English as the most common language among attackers, stands out as a potential goldmine for these criminals. As we approach the climax of the election period, it's critical to be prepared for these emerging threats.

Modern AI provides cybercriminals with enhanced tools to identify targets, manipulate them, and convincingly impersonate respected public figures to carry out scams more effectively. The increasing sophistication and affordability of these ready-to-use tools suggest a probable surge in their use to exploit election season for financial gain.

Therefore, this topic is of utmost relevance to consumers and voters. The complexity of these threats and their potential impact on individual voters makes it crucial for everyone to remain vigilant and informed. Even as some actors may aim to disrupt election results, other cybercriminals may simply be looking to defraud their victims. As such, the need for caution cannot be overstated. If an offer sounds too good to be true, it likely is, regardless of whether it appears to come from a preferred candidate.
