By Nitesh Surana

Azure Machine Learning (AML) is a machine-learning-as-a-service (MLaaS) platform for building, deploying, and managing machine learning (ML) models. Before AML, Azure Batch AI was Azure's ML offering; it has since been retired. AML offers a wide range of features and services for data preparation, model training, deployment, and management, enabling organizations to use ML to gain insights from data and build intelligent applications. AML facilitates machine learning operations (MLOps), giving data scientists and ML professionals the means to manage their ML workflows, infrastructure, datasets, models, and algorithms. Because the platform lets users build pipelines that iterate and scale with their needs, they spend less time creating infrastructure for testing hypotheses. Many global organizations across various industries use AML for the efficiencies it affords.

Additionally, users can fine-tune, train, deploy, and monitor models on their own datasets with AML while relying on infrastructure maintained by Microsoft, as showcased in a recent video about the infrastructure underlying ChatGPT.

Figure 1. Azure’s AI supercomputer infrastructure built to run ChatGPT

While exploring the service from a security standpoint, we encountered vulnerabilities and insecure practices, amongst other issues that we intend to cover in an upcoming series of blogs: insecure storage and logging of sensitive information, exposed APIs that leak sensitive information through cloud middleware agents used in specific AML configurations, and misconfigured open-source components that unintentionally log sensitive access tokens. We reported these security issues to Microsoft, and they have been remediated.

In this first blog post of our series, we detail our findings for the first class of security issues: credentials stored and logged in cleartext on AML resources. We explain how these issues were found and the risks they pose in collaborative environments like AML.

Brief overview of AML

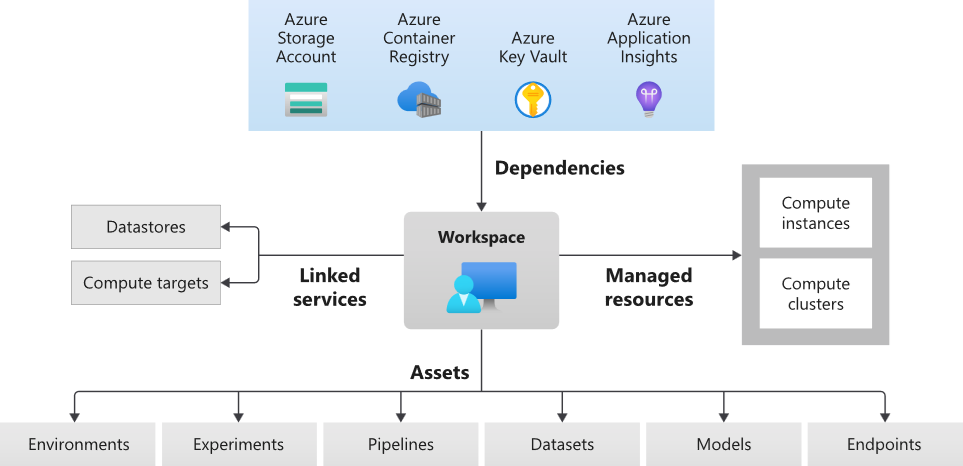

AML comprises various components, which are detailed in Figure 2.

Figure 2. Components of AML service (Source: Microsoft.com)

The AML service uses a combination of user-managed and Microsoft-managed services underneath. When a user creates a resource based on the AML service, a workspace is created. An AML workspace is a centralized place for managing ML-related activities and artifacts. The workspace can be accessed through AML Studio, the VS Code extension, the AML SDK, or the AML CLI. AML Studio offers a web user interface (UI) that developers can use to manage resources easily. Users can work on Jupyter notebooks, experiments, datasets, models, and training/inference environments, deploy ML models as endpoints, and perform MLOps.

When a workspace is created, resources for the following services (apart from the Container Registry) must be specified, because the workspace depends on these services to function. Users can either use existing resources for these services or create new ones just for the workspace.

- Storage Account

- Key Vault

- Container Registry

- Application Insights

Computes in AML

Compute targets are one or more machines on which a user can run scripts and notebooks or deploy ML models as endpoints. A user can choose from a local computer, a Compute Cluster, a Compute Instance, an Azure VM, Azure Databricks, and a few more options in preview. We chose the Compute Instance (CI) for our analysis.

A Compute Instance (CI) is a fully configured, Microsoft-managed environment used for development and testing. The default operating system image is based on Ubuntu and maintained by Microsoft. It comes with development tools commonly used in ML projects and applications preinstalled, such as Docker, Jupyter, Node.js, Python, NVIDIA CUDA-X GPU libraries, Conda packages, and TensorFlow.

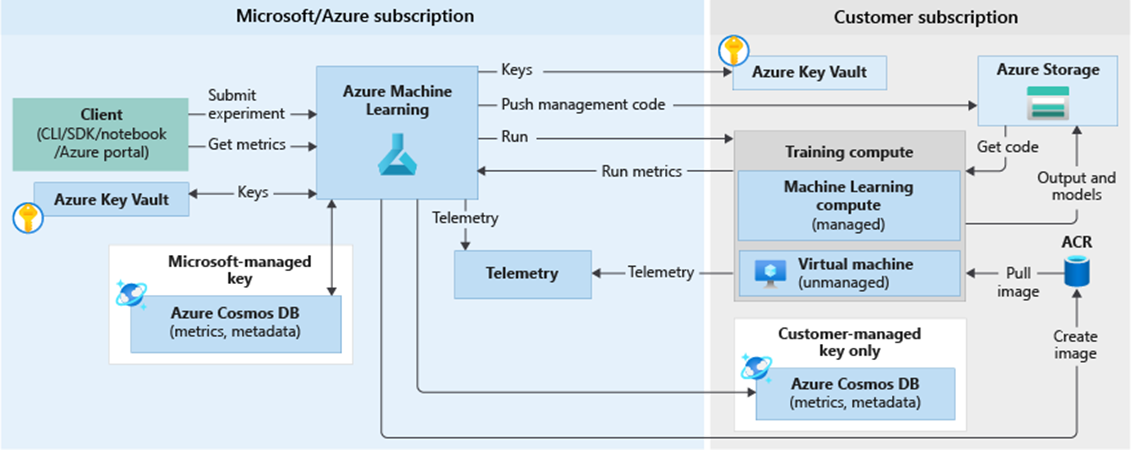

Figure 3. AML Architecture (Source: Microsoft.com)

In AML, CIs and Compute Clusters are provisioned using Azure Batch, a service for running large-scale workloads in Azure. Batch creates and manages a pool of compute nodes (virtual machines), installs the applications you want to run, and schedules jobs to run on the nodes. A task is a unit of computation associated with a job; think of each task as a single run within a job.
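To make the pool, job, and task vocabulary concrete, here is a toy Python model. It is a conceptual sketch under our own assumptions, not the Azure Batch SDK: the class names, the `schedule` helper, the `setup.sh` start task, and the round-robin placement are illustrative simplifications (the real Batch scheduler is more involved).

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class Task:
    """One unit of computation: a single run of a command line."""
    task_id: str
    command_line: str


@dataclass
class Job:
    """A collection of tasks scheduled together."""
    job_id: str
    tasks: list[Task] = field(default_factory=list)


@dataclass
class Pool:
    """A set of compute nodes (VMs); every node runs the start task when it joins."""
    pool_id: str
    nodes: list[str]
    start_task: str


def schedule(pool: Pool, job: Job) -> dict[str, list[str]]:
    """Assign each task of the job to a node, round-robin (a simplification)."""
    assignment: dict[str, list[str]] = {node: [] for node in pool.nodes}
    for node, task in zip(cycle(pool.nodes), job.tasks):
        assignment[node].append(task.task_id)
    return assignment


pool = Pool("ci-pool", nodes=["node-1", "node-2"], start_task="setup.sh")
job = Job("train", [Task(f"task-{i}", "python train.py") for i in range(1, 4)])
print(schedule(pool, job))
# prints: {'node-1': ['task-1', 'task-3'], 'node-2': ['task-2']}
```

The start task matters for what follows: it runs on every node (such as a CI) as the node is prepared, so whatever it writes to its logs ends up on that node.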

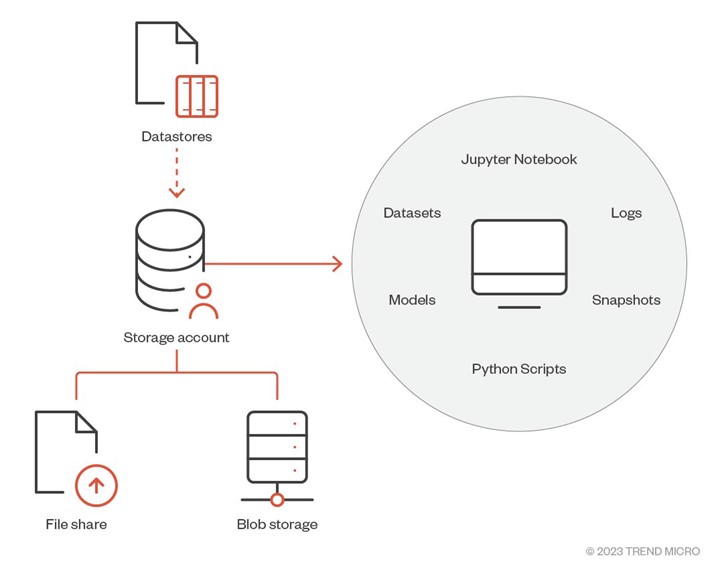

Storage in AML

Each AML workspace has an associated storage account. The storage account's File Shares and Blob Containers store ML-related artifacts and entities such as Jupyter notebooks, scripts, logs, datasets, and models. To access these entities in the storage account from CIs, AML provides datastores, which are essentially references to a workspace's storage account. Datastores let a user interact with different storage types (Blob, Files, Data Lake). With datastores, a user doesn't need to place connection information (access keys, SAS tokens) in their scripts to access storage that uses credential-based access.

Figure 4. Usage of storage account in AML
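Because a datastore is only a reference, code can address data by datastore name and path instead of by account key. The sketch below assumes the short-form `azureml://datastores/<name>/paths/<path>` URI used by the AML Python SDK v2 and the default `workspaceblobstore` datastore; the `datastore_uri` helper itself is hypothetical.

```python
def datastore_uri(datastore: str, path: str) -> str:
    """Build a short-form AML datastore URI; no account name or key is involved."""
    # Strip a leading slash so "/data/x.csv" and "data/x.csv" give the same URI.
    return f"azureml://datastores/{datastore}/paths/{path.lstrip('/')}"


print(datastore_uri("workspaceblobstore", "data/train.csv"))
# prints: azureml://datastores/workspaceblobstore/paths/data/train.csv
```

The credential needed to resolve such a URI is held by the workspace's datastore definition rather than by the script.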

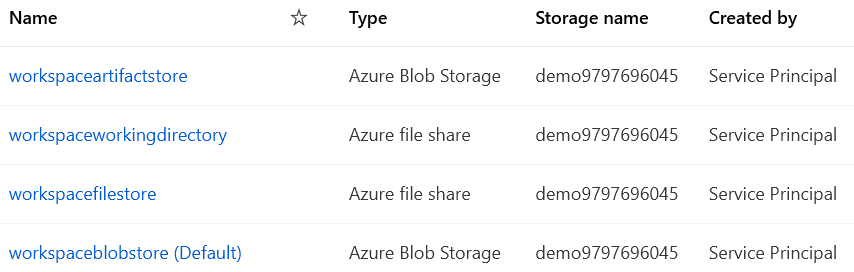

With every AML workspace, there are certain datastores created by default. These datastores are mapped to different storage options as shown below:

Figure 5. AML datastores (left) mapped to storage options (right)

If we expand on any of the datastores mentioned above, we can see the type of storage it references. For example, “workspaceartifactstore” points to the blob storage:

Figure 6. Datastore and storage type mapping in the AML service

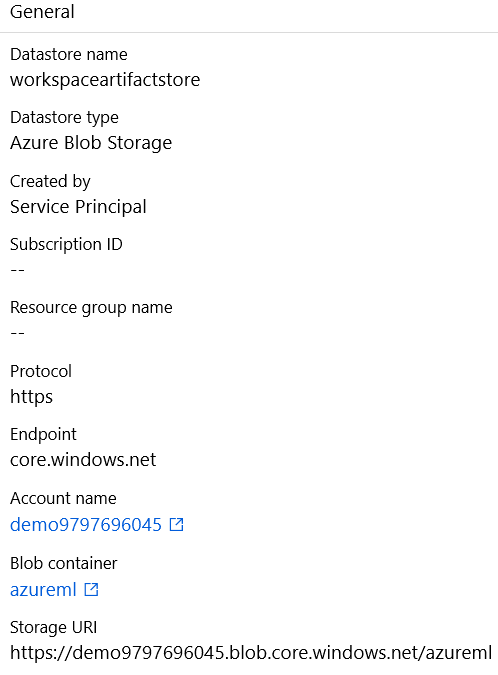

Additionally, AML datastores support the following authentication types to access a supported storage service:

Figure 7. Credential-based support for Azure File Share using AML datastores (Source: MSDocs)

For Azure File Share, AML datastores support credential-based authentication but not identity-based authentication. The credential used to work with the Azure File Share combines a username (the storage account's name) and a password (the storage account's primary access key).

The File Share of the workspace’s storage account is mounted across all CIs that are created in the workspace. This drive is the default working directory for Jupyter, JupyterLab, RStudio, and Posit Workbench. This means that notebooks and other files created are automatically stored in the file share and available for use in other CIs as well. Additionally, since the AML service enables collaboration amongst users, by design, any user in the workspace can access other users’ files, such as Jupyter Notebooks and scripts.

Considering the number of Azure services involved, we set out to understand the different components of AML. It was clear from the start that AML is a major Azure cloud service that depends on other critical services. Given the variety of widely used open-source components involved, we saw value in analyzing how the different components work. From the vantage point of an adversary in a post-compromise scenario, the attack surface appears markedly broad, as our research findings show.

With this background and an overview of AML service, we discuss the details of the security issues that we uncovered and reported to Microsoft through the Zero Day Initiative (ZDI).

Cleartext storage of sensitive information

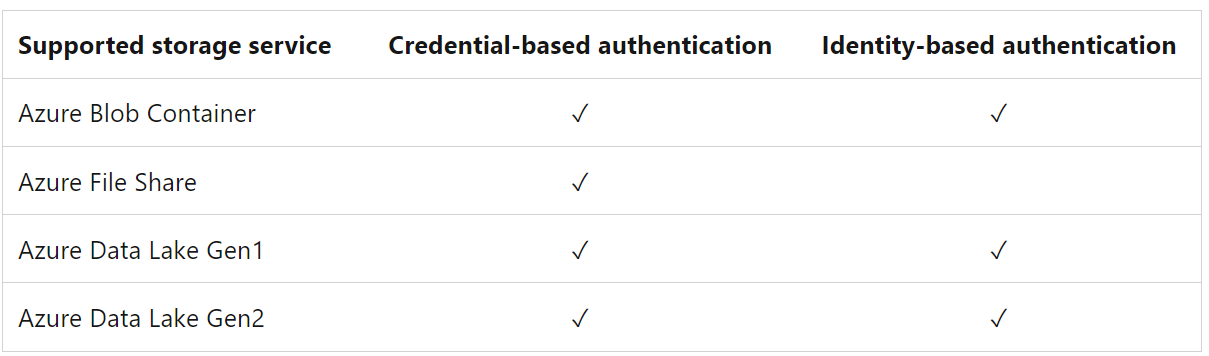

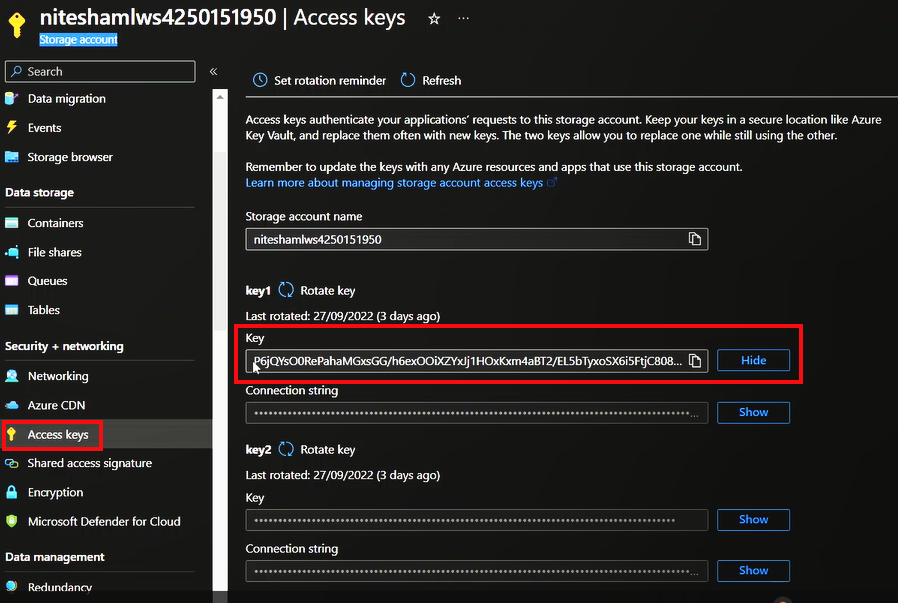

Azure Storage uses two 512-bit access keys per storage account to authorize access to the account's configuration and data. Guarding these keys is important, since AML-related entities are stored in the storage account associated with an AML workspace. On CIs, the storage account's file share is mounted using the mount utility, with a command like this:

Figure 8. Command to mount the Azure File Share on CIs
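As a quick worked example of what such a key looks like: 512 bits is 64 bytes, and Base64 encodes each 3-byte group as 4 characters, so 64 bytes become 22 groups (21 full groups plus one holding the single leftover byte), i.e. 88 characters ending in `==` padding. The sketch below, assuming the key is handled in its Base64-encoded form as shown in the Azure Portal, checks that arithmetic on a random key and derives a pattern for key-shaped strings.

```python
import base64
import os
import re

KEY_BITS = 512
raw_key = os.urandom(KEY_BITS // 8)  # 64 random bytes stand in for a real key

# Base64 turns every 3 input bytes into 4 output characters, padding the last group.
encoded = base64.b64encode(raw_key).decode("ascii")
print(len(encoded), encoded.endswith("=="))  # prints: 88 True

# Any string of this shape: 86 Base64 characters followed by "==" padding.
STORAGE_KEY_RE = re.compile(r"[A-Za-z0-9+/]{86}==")
assert STORAGE_KEY_RE.fullmatch(encoded)
```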

We found four issues involving credentials stored in cleartext on a CI created in an AML workspace:

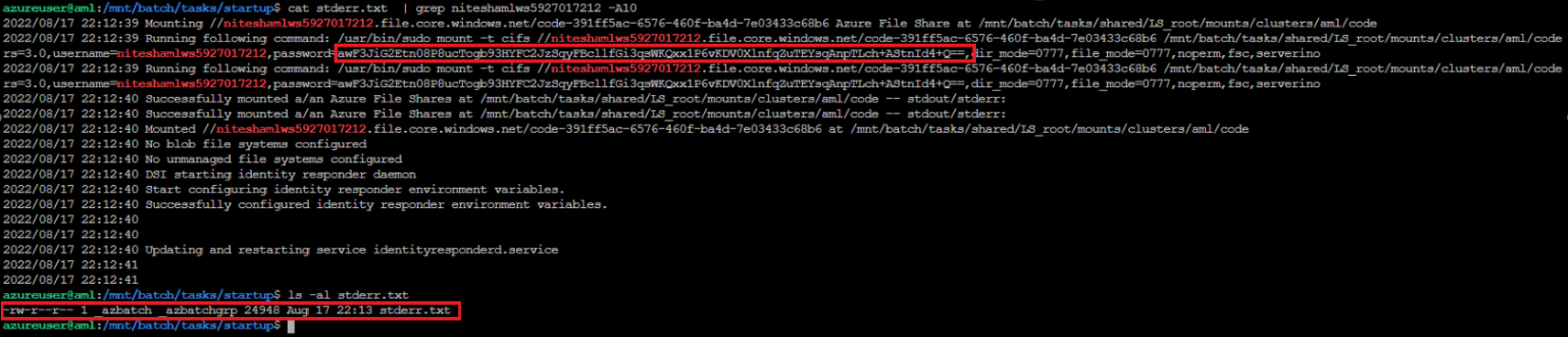

1. ZDI-23-161: Batch error logs

While exploring the CI and figuring out how the File Share was mounted, we came across the logs generated when the start task is executed. In Batch, the start task prepares the operating environment for a node (i.e., the CI). The output of the task execution is saved in two files named stderr.txt and stdout.txt located at /mnt/batch/tasks/startup/.

- /mnt/batch/tasks/startup/stdout.txt

- /mnt/batch/tasks/startup/stderr.txt

One of the commands executed in the start task contained the primary access key of the storage account associated with the AML workspace on its command line, and that command line was logged in cleartext in the error log at stderr.txt.

Figure 9. Storage account credential in Azure Batch error logs.
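A defender can sweep the start-task logs named above for anything shaped like a storage key. This is a minimal sketch, assuming the key appears as a `password=` mount option with an 88-character Base64 value; the pattern, the helper names, and the file list are our own illustration, not Microsoft tooling.

```python
import re
from pathlib import Path

# Assumption: the key shows up as a "password=" mount option whose value is
# shaped like a Base64-encoded 512-bit key (86 Base64 characters + "==").
LEAKED_KEY_RE = re.compile(r"password=([A-Za-z0-9+/]{86}==)")

# Start-task log files named in the text.
CANDIDATE_LOGS = [
    Path("/mnt/batch/tasks/startup/stdout.txt"),
    Path("/mnt/batch/tasks/startup/stderr.txt"),
]


def find_leaked_keys(text: str) -> list[str]:
    """Return every key-shaped password value found in the text."""
    return LEAKED_KEY_RE.findall(text)


def scan_files(paths: list[Path]) -> dict[str, int]:
    """Map each readable file to the number of key-shaped values it contains."""
    hits: dict[str, int] = {}
    for path in paths:
        try:
            text = path.read_text(errors="replace")
        except OSError:
            continue  # missing or unreadable file: nothing to report
        count = len(find_leaked_keys(text))
        if count:
            hits[str(path)] = count
    return hits


if __name__ == "__main__":
    print(scan_files(CANDIDATE_LOGS))
```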

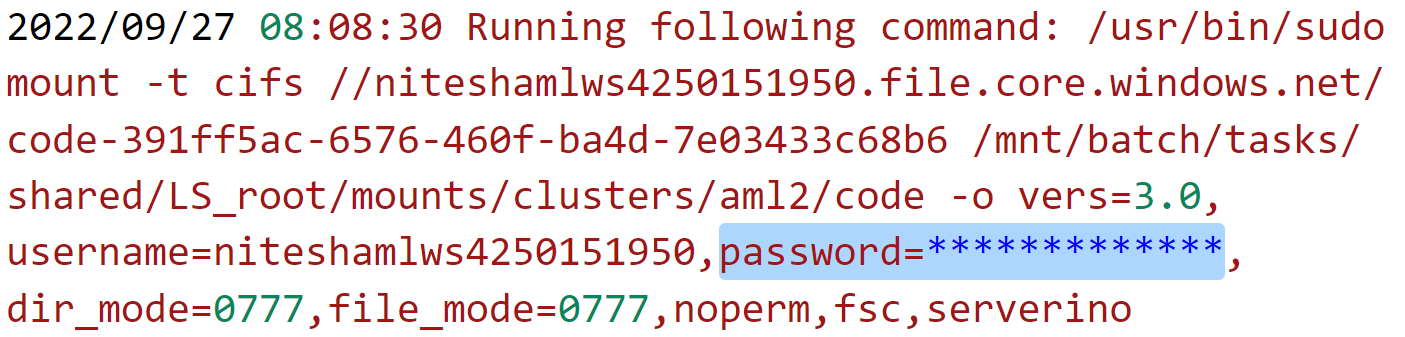

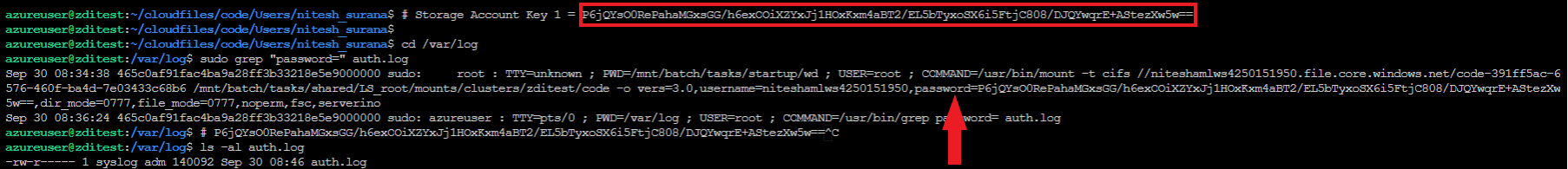

2. ZDI-23-096: System authorization logs

On every CI boot, the Azure File Share associated with an AML workspace’s storage account is mounted in this path:

- /mnt/batch/tasks/shared/LS_root/mounts/clusters/CI_HOSTNAME/code

In the previous instance, the Batch error logs showed that the command being run carried the storage account credential in its password field. Because the command is run with ‘sudo’, the full command line, credential included, was also logged as-is in the system authorization log at /var/log/auth.log.

Figure 10. Storage account credential for the workspace in the Azure Portal

Figure 11. Storage account credential in authorization logs.
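sudo records each command it runs in the authorization log, with the full command line after `COMMAND=`, which is why a secret passed as an argument ends up there. The sketch below flags such entries. Its regular expression targets the common `user : TTY=... ; PWD=... ; USER=... ; COMMAND=...` layout and is an assumption that may need adjusting for other sudo or syslog formats; the sample line in the test is illustrative, not copied from a CI.

```python
import re

# Matches "sudo:" or "sudo[1234]:", the invoking user, and everything after COMMAND=.
SUDO_COMMAND_RE = re.compile(
    r"sudo(?:\[\d+\])?:\s+(?P<user>\S+) : .*?COMMAND=(?P<command>.*)$"
)


def sudo_commands_with_passwords(lines):
    """Yield (invoking user, command line) for sudo entries that carry a password= option."""
    for line in lines:
        match = SUDO_COMMAND_RE.search(line)
        if match and "password=" in match.group("command"):
            yield match.group("user"), match.group("command")
```

Typical use would be `list(sudo_commands_with_passwords(open("/var/log/auth.log")))`, which itself needs the elevated permissions discussed next.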

Admittedly, reading the authorization logs requires elevated permissions. However, the default user on CIs, named “azureuser”, is a member of the sudoers group and can run commands with “sudo”. Additionally, after we reported the two aforementioned issues, we came across an instance where the value of the “password” field was masked.

Figure 12. Masked “password” in Batch error logs
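Masking, as seen in Figure 12, is the straightforward mitigation for command lines that must be logged. A minimal sketch follows; the `****` mask and the `redact` helper are our own choices, not necessarily what AML uses.

```python
import re

# Matches a password= option (any case) and keeps the option name in group 1.
PASSWORD_OPT_RE = re.compile(r"(password=)[^,\s]+", re.IGNORECASE)


def redact(command_line: str) -> str:
    """Replace the value of every password= option with a fixed mask before logging."""
    return PASSWORD_OPT_RE.sub(r"\1****", command_line)
```

Masking at log time only helps if every code path that logs the command uses it; keeping the secret off the command line entirely avoids the problem at its source.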

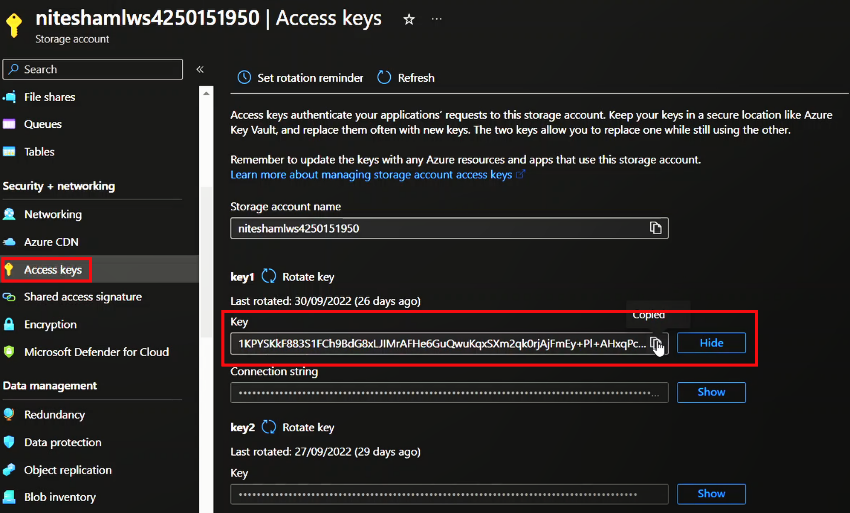

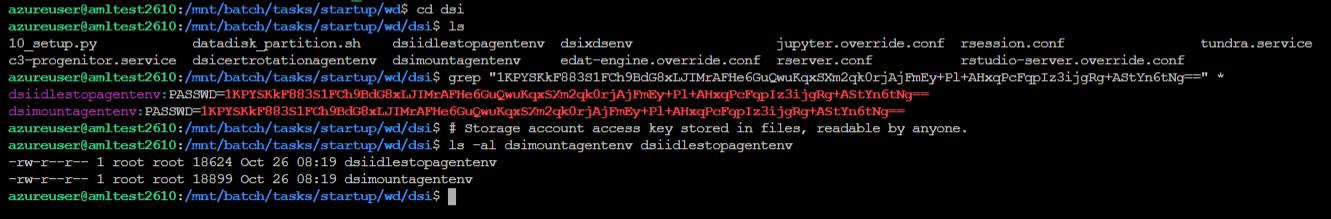

3. ZDI-23-095: Agent configuration files

After Microsoft fixed the two issues above following our reports, we came across two more instances of the primary access key stored in cleartext on the CI. AML installs certain agents on CIs to manage, diagnose, and monitor them. These agents are stored in the start task's working directory at /mnt/batch/tasks/startup/wd and are managed by Microsoft. They run as systemd services on CIs and need certain configuration to perform their actions. For two of these agents, named “dsimountagent” and “dsiidlestopagent”, we found that each service loads its environment variables from the following files:

- dsimountagent: /mnt/batch/tasks/startup/wd/dsi/dsimountagentenv

- dsiidlestopagent: /mnt/batch/tasks/startup/wd/dsi/dsiidlestopagentenv

We found these instances of the storage account access key stored in cleartext while trying to understand how the previously reported issues had been fixed.
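The two files above are `KEY=VALUE` environment files consumed by systemd services. The sketch below is a simplified parser (it ignores systemd's full quoting and escaping rules) that flags variables whose names suggest secrets. The `SENSITIVE_NAMES` set is a hypothetical starting list; `PASSWD` is the name actually observed here.

```python
# Hypothetical starting list of variable names that usually hold secrets;
# PASSWD is the name observed in the agents' environment files.
SENSITIVE_NAMES = {"PASSWD", "PASSWORD", "ACCOUNT_KEY", "ACCESS_KEY"}


def parse_env_file(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and comments (no escape handling)."""
    env: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first "=" only, since Base64 values end in "=" padding.
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip().strip('"')
    return env


def sensitive_entries(env: dict[str, str]) -> list[str]:
    """Names of non-empty variables whose names suggest a secret."""
    return sorted(name for name, value in env.items() if name.upper() in SENSITIVE_NAMES and value)
```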

Figure 13. Storage account credential for the workspace as shown on the Azure Portal

Figure 14. Storage account credential in middleware environment variable files.

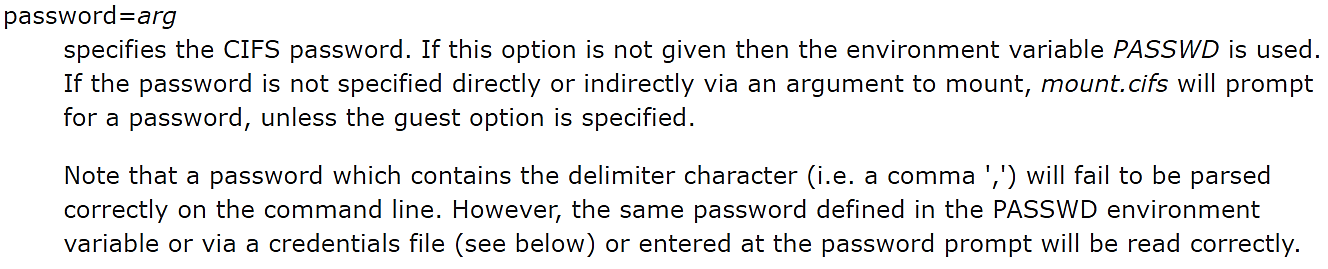

The environment variable containing the primary access key of the storage account was named “PASSWD”. While investigating the reason for this name, we saw that the mount utility can read the value of the “password” argument from an environment variable named “PASSWD” when the argument is not specified:

Figure 15. Supply “password” as an environment variable named “PASSWD”
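The mount.cifs manual page documents this `PASSWD` fallback. The sketch below shows the difference it makes: the hypothetical `build_cifs_mount` helper returns an argument vector with no `password=` option plus an environment carrying the key. The share path and the options (`vers=3.0`, `serverino`) are illustrative assumptions, and the command is only built, never executed.

```python
import os


def build_cifs_mount(account: str, share: str, mountpoint: str, key: str):
    """Hypothetical helper: return (argv, env) for mount.cifs, passing the key via PASSWD."""
    argv = [
        "mount.cifs",
        f"//{account}.file.core.windows.net/{share}",
        mountpoint,
        "-o",
        f"vers=3.0,username={account},serverino",  # deliberately no password= option
    ]
    # mount.cifs reads the password from PASSWD when password= is not given.
    env = {**os.environ, "PASSWD": key}
    return argv, env
```

Running it would look like `subprocess.run(argv, env=env, check=True)` as root. This keeps the key out of process listings and out of sudo's `COMMAND=` log entry, but a running process's environment is still readable (for example, through `/proc/<pid>/environ`) by its owner and root, and writing the variable to an environment file on disk, as the agents did, brings back cleartext storage.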

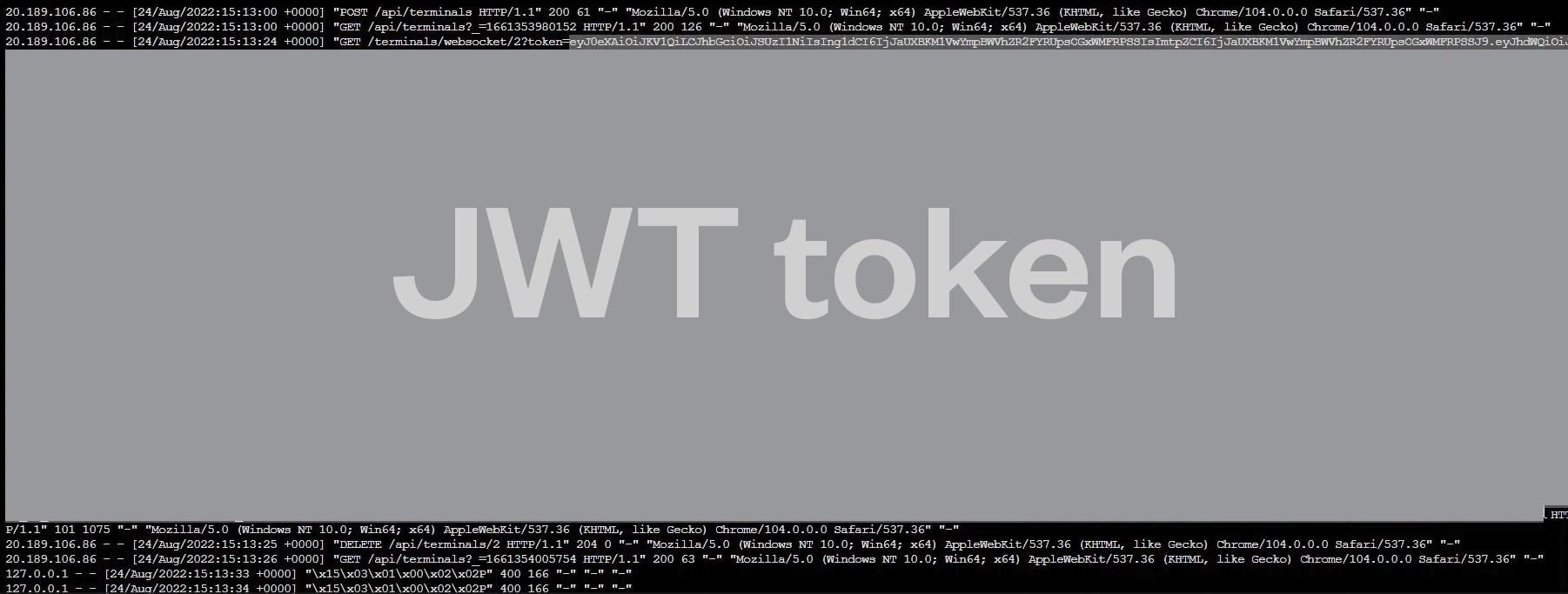

4. ZDI-23-097: Compute Owner JWT in nginx access logs

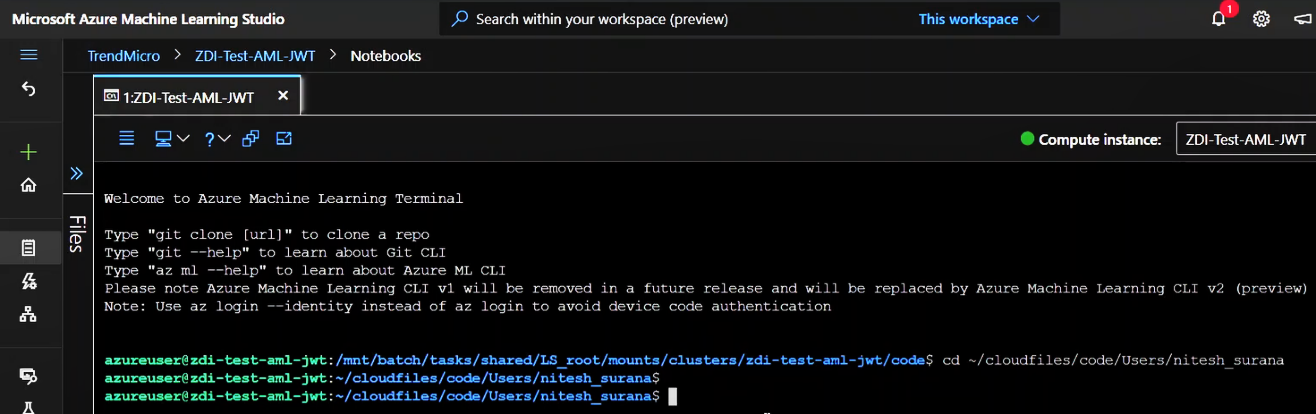

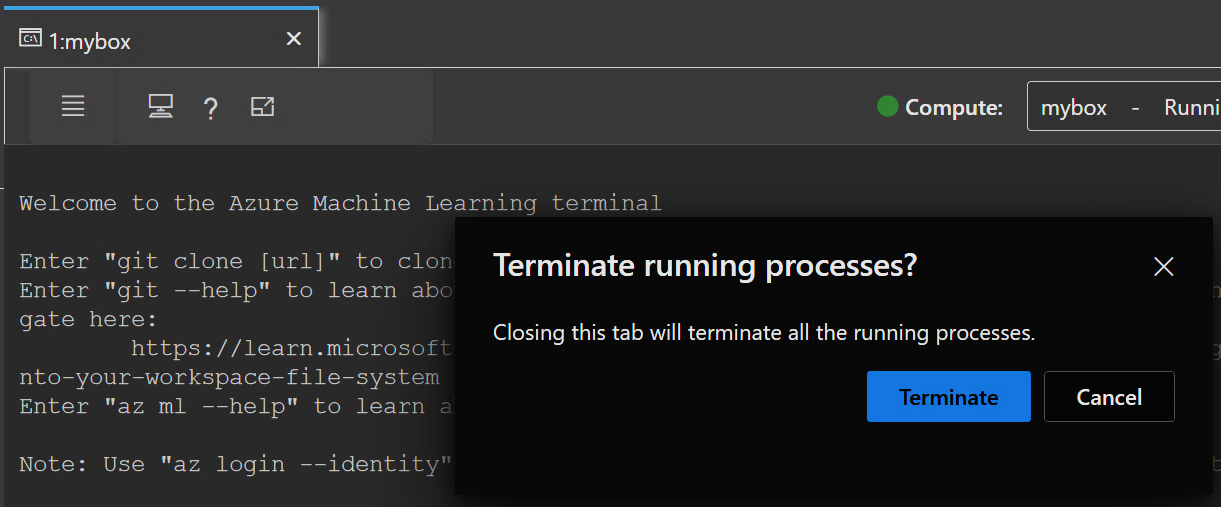

AML users can access their CIs through Jupyter Notebook, JupyterLab, a browser-embedded terminal, the VS Code IDE, and SSH. Jupyter is an open-source, web-based interactive computing platform that lets users create browser-based terminals and collaborate on code, and it is a popular tool among data scientists.

Figure 16. Browser-embedded terminal to access CIs

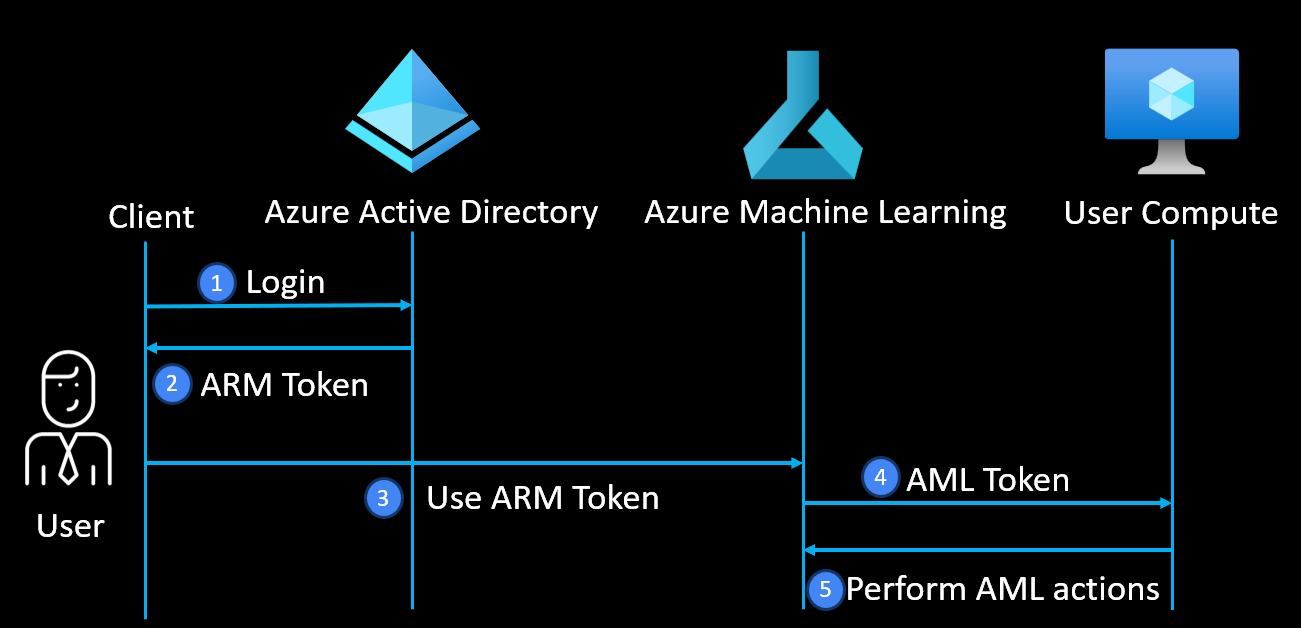

When a client wishes to work with AML, the authentication process is as follows:

Figure 17. Authentication flow for a client (e.g., a user) accessing AML

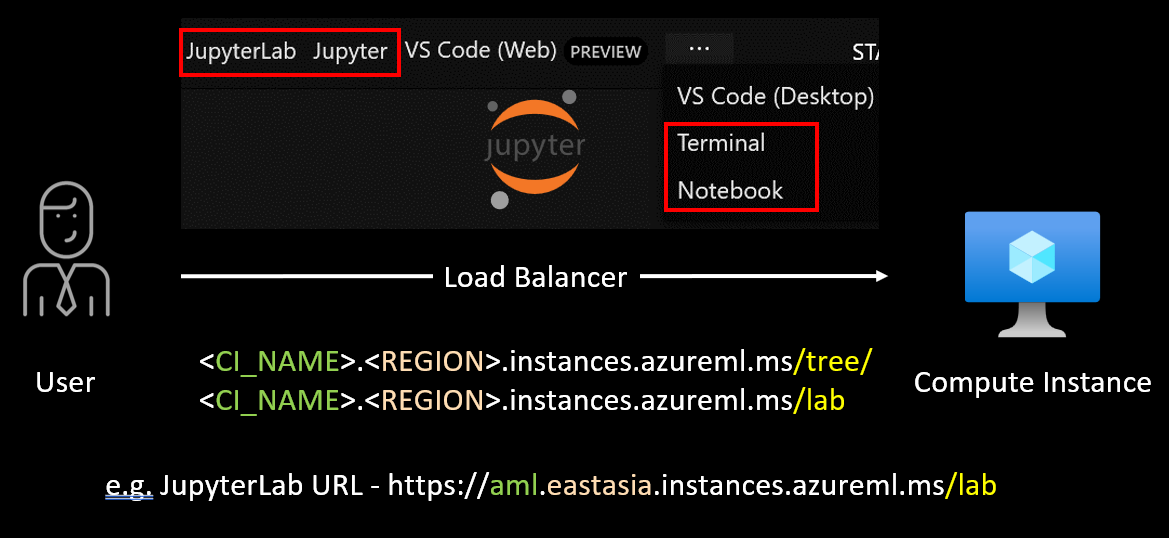

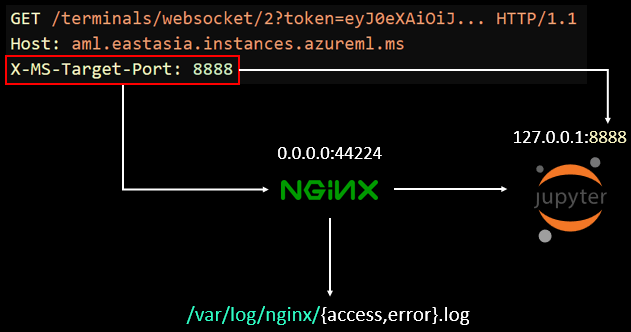

Once a client fetches an Azure Resource Manager token from Azure AD, it uses the token to work with AML. The AML service creates an AML token, which is used to perform actions (e.g., working on CIs and Compute Clusters). Since the issues about credentials being logged in cleartext on CIs had been fixed after our reports, we next looked at whether any credentials arrive at the CI from outside. We found that when a user terminates a Jupyter terminal session, the user's JSON Web Token (JWT) is propagated through a load balancer to the CI (see Figure 18).

Figure 18. Possible architecture for accessing Jupyter Notebooks using AML Studio
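Because a JWT's payload is only Base64url-encoded, anyone holding the token can read its claims, including its lifetime. The sketch below decodes the payload without verifying the signature, for inspection only, and assumes the token carries the standard `iat` (issued at) and `exp` (expiry) claims from RFC 7519; the helper names are hypothetical.

```python
import base64
import json


def decode_jwt_claims(token: str) -> dict:
    """Decode a JWT's payload segment WITHOUT verifying its signature (inspection only)."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # JWTs strip Base64url padding; restore it
    return json.loads(base64.urlsafe_b64decode(payload))


def lifetime_minutes(claims: dict) -> float:
    """Token lifetime in minutes, from the 'iat' and 'exp' claims (Unix seconds)."""
    return (claims["exp"] - claims["iat"]) / 60
```

The point for defenders is that possession is all that matters: a bearer token copied out of a log works for whoever holds it until `exp` passes.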

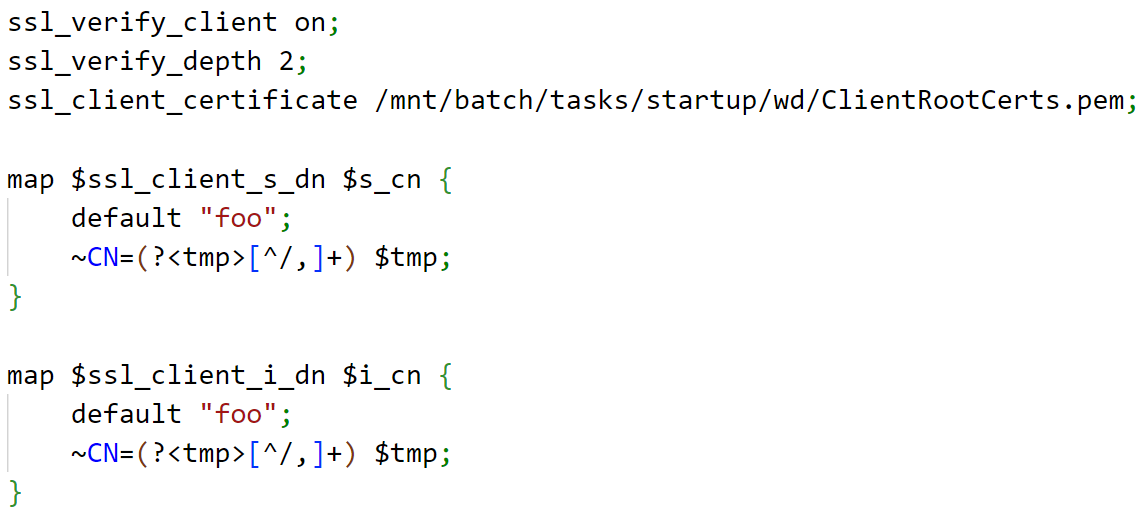

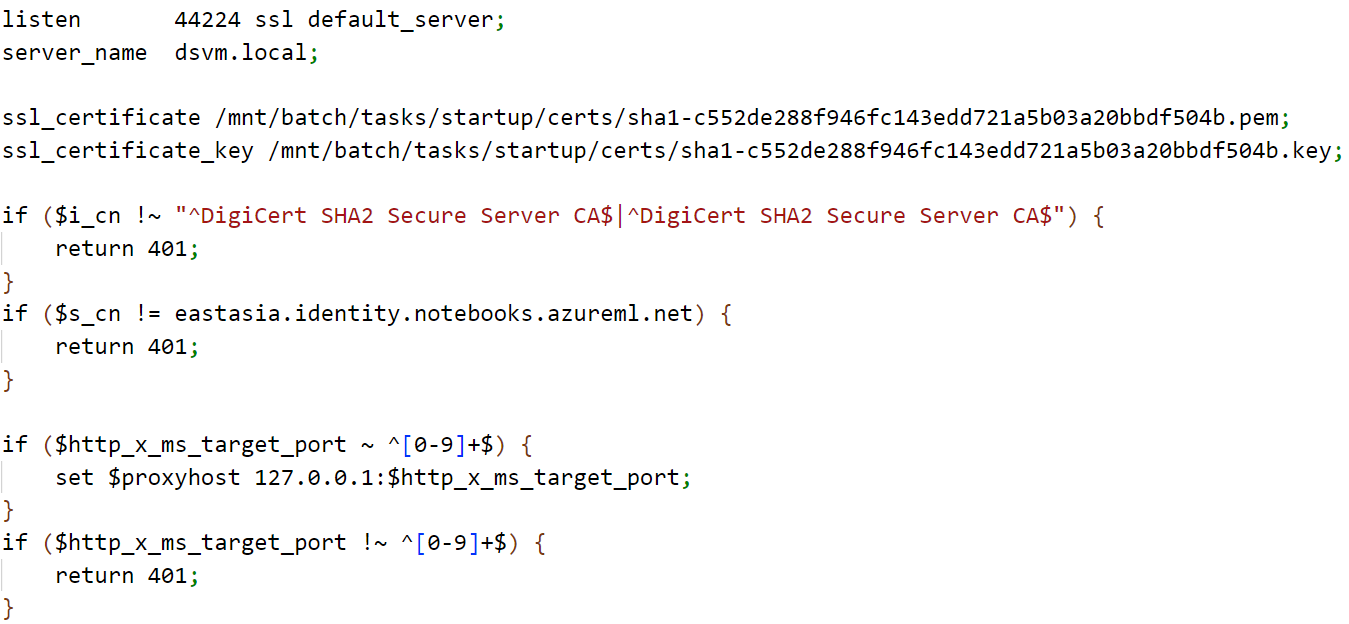

The URL to access JupyterLab on a CI looks like this: https://CI_HOSTNAME.REGION.instances.azureml.ms/lab. CIs use nginx as a proxy to forward incoming requests from the load balancer. The proxy listens on port 44224 on all interfaces. The nginx proxy has client certificate authentication enabled and checks the issuer and subject distinguished names. It then checks the value of a header named “X-MS-Target-Port”, which contains the port to which the incoming request should be forwarded.

Figure 19. Client-side authentication enabled

Figure 20. Initial checks in the nginx configuration of the CI

A variable, “proxyhost”, is set to “127.0.0.1” followed by that port. For example, all incoming requests for Jupyter on the CI (which runs on port 8888) carry an X-MS-Target-Port value of 8888, so the proxyhost variable is set to “127.0.0.1:8888”:

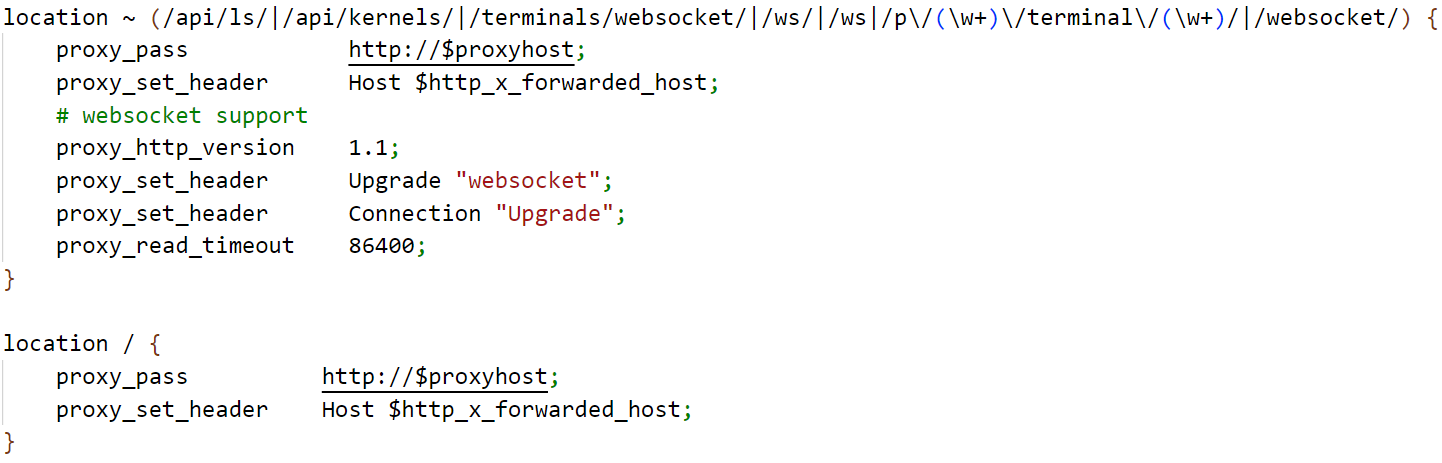

Figure 21. Final forwarding of incoming request using the nginx proxy on the CI

The proxyhost variable is passed to the “proxy_pass” directive, which forwards the request to that local address for the requested URI. Because the Jupyter terminal communicates over WebSockets, the connection is upgraded accordingly.
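The forwarding decision can be modeled in a few lines. This is a Python sketch of the logic described above, not the CI's actual nginx configuration; the port-range check is our own assumption about sensible validation, and `upstream_for` is a hypothetical name.

```python
import re


def upstream_for(headers: dict[str, str]) -> str:
    """Return the loopback address a request is proxied to, based on X-MS-Target-Port."""
    # HTTP header names are case-insensitive, so normalize before the lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    port = normalized.get("x-ms-target-port", "").strip()
    # Assumption: reject anything that is not a plain TCP port number.
    if not re.fullmatch(r"[0-9]{1,5}", port) or not 0 < int(port) <= 65535:
        raise ValueError(f"invalid target port: {port!r}")
    return f"127.0.0.1:{int(port)}"
```

For a Jupyter request, `upstream_for({"X-MS-Target-Port": "8888"})` yields `127.0.0.1:8888`, mirroring the proxyhost value above.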

Figure 22. The nginx proxy logs access attempts and errors at /var/log/nginx/

In its default configuration, nginx logs access attempts to /var/log/nginx/access.log. To our surprise, the nginx access logs contained the AML JWT of a user closing an existing browser-based terminal. When a user closes an existing Jupyter terminal, three requests are observed.

Figure 23. Terminate the terminal and log AML JWT in access logs

The GET request that precedes the DELETE request contains the compute owner's JWT in a URL parameter named “token”. Although short-lived (lasting 90 minutes on average), these tokens could later be abused from non-Azure environments through the Azure REST API and open-source tools such as MicroBurst, depending on the roles assigned to the user.

Figure 24. CI Owner’s AML JWT logged in nginx access logs.
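Because nginx's default `combined` log format records the full request line (`$request`), any query string, `token` included, is written to disk. One mitigation is a custom `log_format` that logs `$uri` (which excludes the query string) instead of `$request`; another is scrubbing existing logs. The sketch below does the latter for combined-format lines; the regular expression and the `REDACTED` placeholder are our own assumptions.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# In nginx's default "combined" format the request line is quoted:
# $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent ...
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<target>\S+) (?P<proto>HTTP/[0-9.]+)"')


def redact_query_param(target: str, name: str = "token") -> str:
    """Replace the value of one query parameter in a request target."""
    parts = urlsplit(target)
    query = [
        (key, "REDACTED" if key == name else value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


def redact_log_line(line: str) -> str:
    """Scrub the token parameter from the request line of one access-log entry."""
    match = REQUEST_RE.search(line)
    if not match:
        return line
    safe_target = redact_query_param(match.group("target"))
    return line[: match.start("target")] + safe_target + line[match.end("target"):]
```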

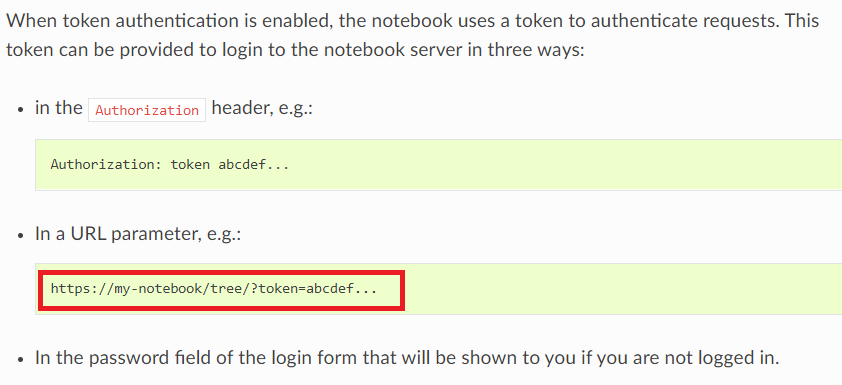

To understand why the JWT appeared in a URL parameter, we referred to the Jupyter documentation. Access to Jupyter Notebook and JupyterLab is managed with tokens, which have been enabled by default since version 4.3. The configuration page states that the token can be provided to the Jupyter server in the Authorization header, in a URL parameter named “token”, or by logging in with a password through a login form. In this case, the URL parameter named “token” contained the JWT of the CI owner. Because nginx was used as an inline proxy and access attempts were being logged, the access log gave away the bearer token, which was probably being used to authenticate access to Jupyter. Sending sensitive information in URL parameters is an insecure practice and should be avoided, as asserted in this blog post from 2015 by Daniel Miessler:

Figure 25. Jupyter documentation regarding the token being supplied in the URL
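Of the three options in the Jupyter documentation, the Authorization header keeps the token out of the URL and therefore out of proxy access logs. The sketch below builds, but does not send, such a request with Python's standard library; the local address, the `/api/terminals` path, and the `jupyter_request` helper are illustrative.

```python
import urllib.request


def jupyter_request(base_url: str, path: str, token: str) -> urllib.request.Request:
    """Build, but do not send, a Jupyter API request that carries the token in a header."""
    request = urllib.request.Request(base_url.rstrip("/") + path)
    # Jupyter accepts "Authorization: token <value>", so nothing secret goes into the URL.
    request.add_header("Authorization", f"token {token}")
    return request


req = jupyter_request("http://127.0.0.1:8888", "/api/terminals", "example-token")
print(req.full_url)  # prints: http://127.0.0.1:8888/api/terminals
```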

Conclusion

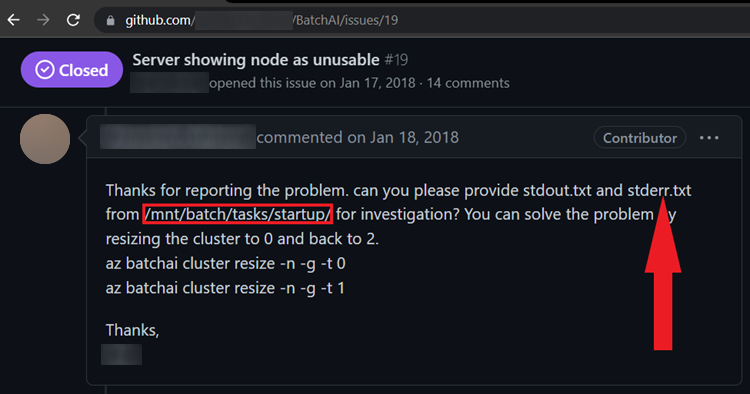

From the aforementioned instances, we found sensitive information being logged in files (as described in CWE-532 and CWE-209). These findings are significant red flags, since we've observed cloud-focused threat actors like TeamTNT that are known to take advantage of what MITRE ATT&CK (Enterprise) refers to as Credentials In Files, sub-technique T1552.001. Additionally, with the rise of malicious packages that target users of widely used ML frameworks like PyTorch and harvest credentials from files and environment variables, credential hygiene must be practiced consistently: access tokens and keys should never be logged or stored in cleartext or in unsecured locations. The following is an example we found during our research of how logging or storing credentials in files poses a security risk:

Figure 26. An example that we found of how logging or storing credentials in error logs poses a security risk.

Here we see a GitHub issue on an archived repository that contains code and CLI samples for the Azure Batch AI service. Note that the error and output logs from /mnt/batch/tasks/startup are being requested. Developers often exchange debug or error logs on platforms like GitHub to understand and troubleshoot issues. If they are unaware that these logs might contain credentials (as we found in Azure ML), sharing them can lead to a compromise.

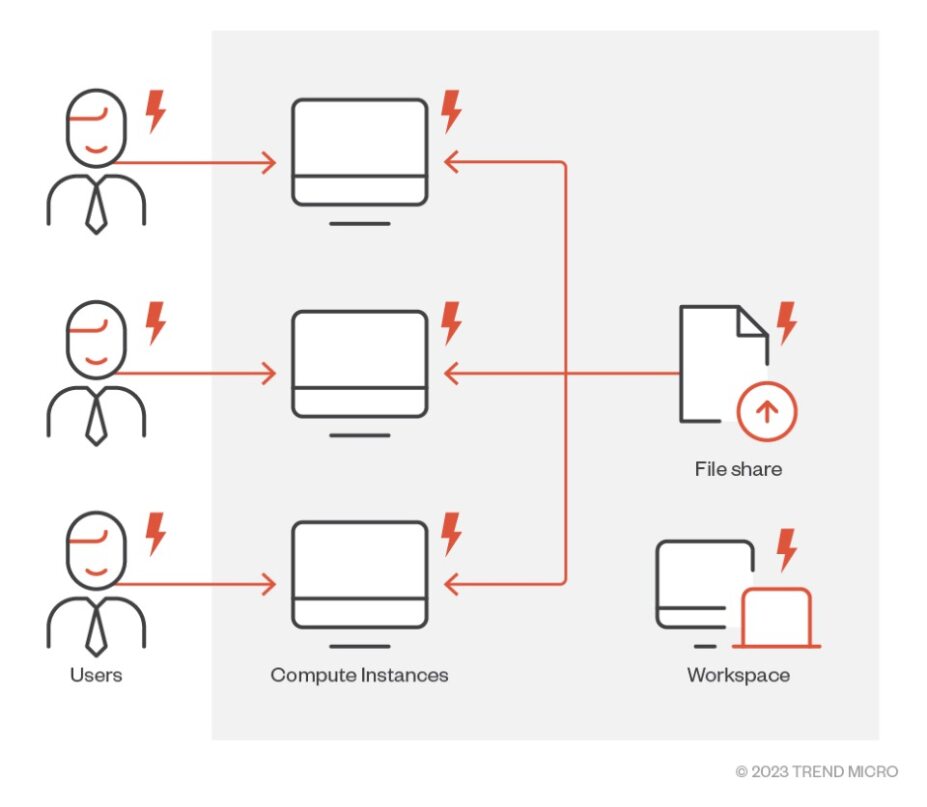

The issue of credentials being logged or stored in files may not seem very alarming at first glance. However, when we couple these low-impact issues with the features of the AML service, the impact becomes clear. By design, any user can modify other users' Jupyter notebooks and scripts, since AML provides a collaborative environment for its users. Taking the issue of the nginx access logs containing the CI owner's AML token, a user could trivially view another user's AML JWT and impersonate that user. If a single CI, a single user, or the File Share in an AML workspace is compromised, the entire workspace should be considered compromised:

Figure 27. Scope of compromise if a user, CI, or File Share is compromised

These issues were reported to Microsoft through the Zero Day Initiative and were tracked and fixed under CVE-2023-23382. After the fix, we checked and confirmed the following:

- The primary access key of the storage account is not logged or stored in any of the files in the CI.

- The AML JWT is not observed to be reaching the CI and is not logged in access logs or any other file.

In the next blog, we will detail an information disclosure vulnerability, which we named “MLSee”, in a cloud agent that Microsoft uses to manage CIs.
