Hunting Threats on Twitter: How Social Media can be Used to Gather Actionable Threat Intelligence

Using Social Media Intelligence (SOCMINT)

How can information security professionals and security teams use social media to gather threat intelligence that they can use to protect their organizations?

Social media platforms allow users and organizations to communicate and share information. For security professionals, it could be more than just a networking tool. It can also be an additional source of valuable information on topics from vulnerabilities, exploits, and malware to threat actors and anomalous cyber activities. In fact, 44% of surveyed organizations cited the importance of social media intelligence (SOCMINT) to their digital risk protection solutions.

We developed tools that examine data and case studies on Twitter to see how social media can be used to gather actionable threat intelligence. The good: Social media can be an alternative source of information that, when validated, can be used to defend organizations against threats. The bad: Social media can be abused to mar a public figure or organization’s reputation. The ugly: Social media can be abused for cybercrime, fraud, and proliferation of misinformation.

Our study also revealed some caveats. SOCMINT can further provide context to an analyst’s research, or enable an enterprise’s security team to utilize additional data that can help secure their online premises. However, it needs context, veracity, and reliability to be effective. Security professionals using SOCMINT should vet the data before they integrate it into the enterprise’s cybersecurity solutions and strategies.

Threat Intelligence via Twitter

Gathering threat intelligence from social media channels requires data that can be processed, analyzed, validated, and given context.

Processing social media

There are multiple ways to obtain raw data. Open-source intelligence tools (e.g., TWINT) can scrape data, and publicly available Twitter streaming application programming interfaces (APIs) can collect sample data for analysis. Another option involves a deeper probe into existing datasets, such as those used in a FiveThirtyEight story on troll tweets. For our research, we used a Twitter public API, as it conforms with Twitter’s terms of service and usage policy. We also reported all the related IoCs in this research to Twitter.

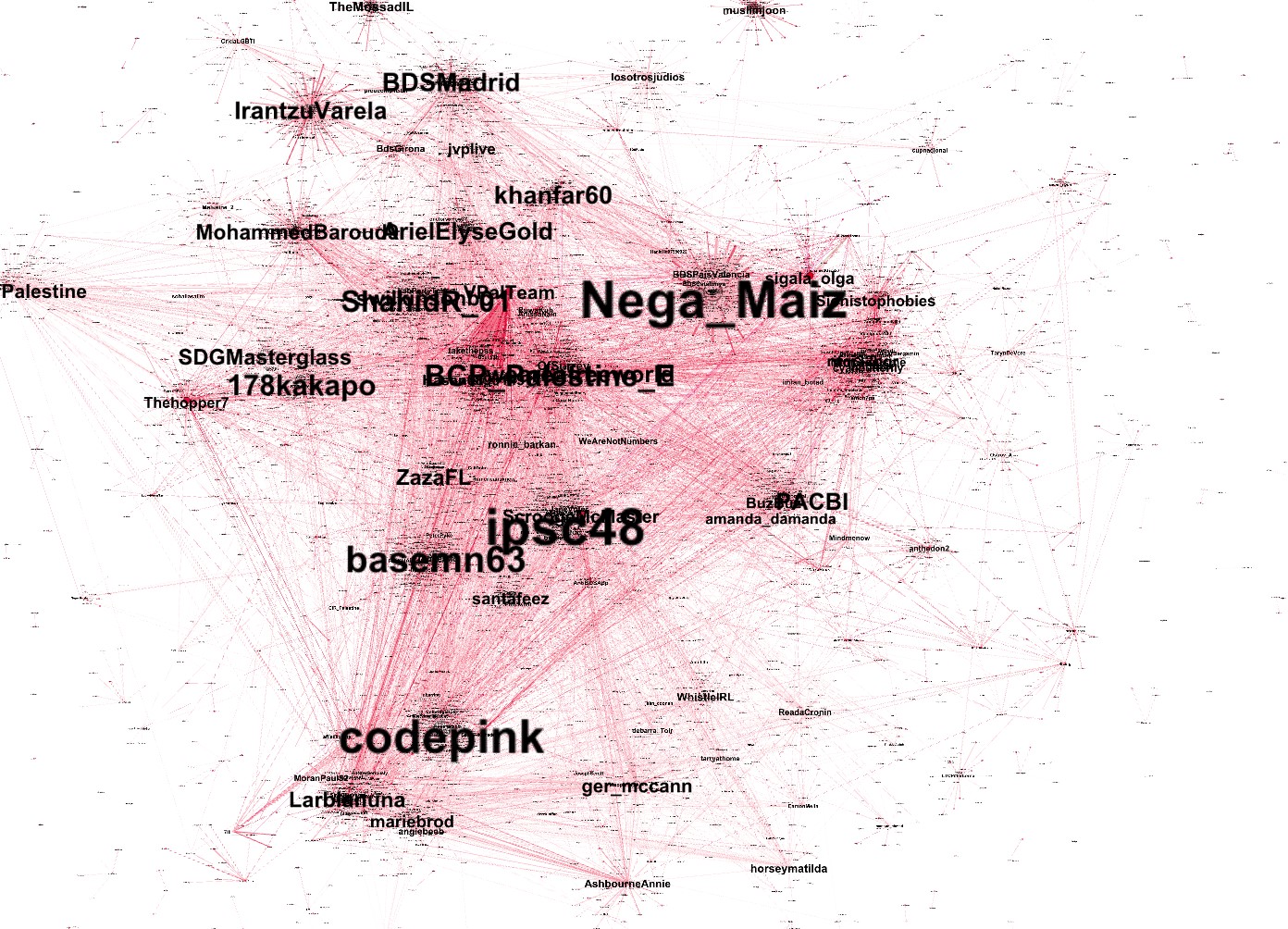

Our research identified relationships between social media entities and checked for potential anomalies in these relationships. We did this by processing the raw input data, then identifying the "actions" taken by an "object" on a "subject." Social media entities and their relationships shaped our social network graphs. The actions, objects, and subjects formed the graphs' nodes and edges. We also converted the major social network interactions into actions: "follower," "following," "quote," and "retweet."
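As a minimal sketch of how such a graph could be assembled, the snippet below turns simplified interaction records into a directed, labeled graph. The record shape `(actor, action, target)`, the sample account names, and the helper names `build_graph` and `in_degree` are assumptions made for illustration; they are not the tooling used in the research.

```python
from collections import defaultdict

# Hypothetical, simplified records: (actor, action, target).
# Real Twitter API payloads are richer; this shape is assumed for illustration.
INTERACTIONS = [
    ("bot_01", "retweet", "guru"),
    ("bot_02", "retweet", "guru"),
    ("bot_02", "following", "guru"),
    ("alice", "quote", "guru"),
    ("alice", "following", "bob"),
]

# The four interaction types named in the text.
ACTIONS = {"follower", "following", "quote", "retweet"}


def build_graph(interactions):
    """Return {actor: {target: [actions]}}, a directed graph with labeled edges."""
    graph = defaultdict(lambda: defaultdict(list))
    for actor, action, target in interactions:
        if action not in ACTIONS:
            continue  # skip interaction types outside the four modeled here
        graph[actor][target].append(action)
    return graph


def in_degree(graph):
    """Count how many distinct accounts point at each target account."""
    counts = defaultdict(int)
    for targets in graph.values():
        for target in targets:
            counts[target] += 1
    return dict(counts)


graph = build_graph(INTERACTIONS)
print(in_degree(graph))  # {'guru': 3, 'bob': 1}
```

An account with a high in-degree relative to its neighbors is a candidate hub, which is the kind of anomaly the relationship analysis looks for.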

The guru-follower pattern that we explored during our research on fake news and cyber propaganda is an example of this relationship network. The guru-follower behavior can be seen when social media bots are used to promote content. This repeatable, predictable, and programmable behavior is used to amplify messages posted by the guru’s account, increasing the messages’ chances of reaching regular social media users.

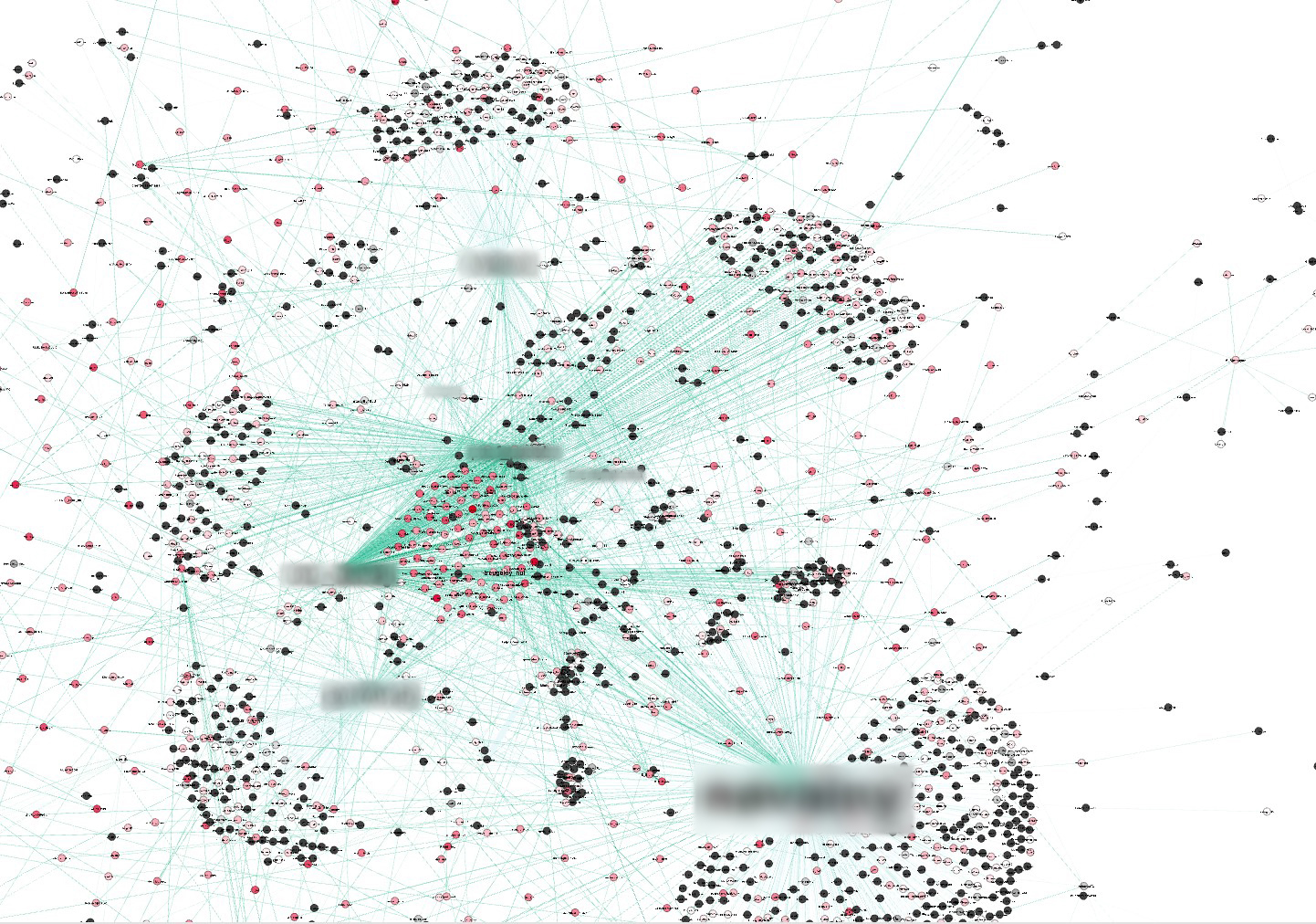

We analyzed the data we collected in slices due to its volume, then applied topical characteristics and selectively extracted content that matched particular topics or subjects. These datasets — which we call topical slices — were then converted into a graph that represents interactions between Twitter accounts within the slice.
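The slicing step can be sketched roughly as a filter on time window and topic keywords. The tweet dictionary fields, the sample hashtag, and the function name `topical_slice` below are hypothetical, and simple substring matching stands in for whatever topical characteristics a real pipeline would apply.

```python
from datetime import datetime

# Hypothetical tweet records; the field names are assumptions, not the Twitter API schema.
TWEETS = [
    {"user": "acct_a", "text": "#OpExample kicks off today",
     "created_at": datetime(2019, 2, 1, 9, 0)},
    {"user": "acct_b", "text": "RT @acct_a: #OpExample kicks off today",
     "created_at": datetime(2019, 2, 2, 14, 30)},
    {"user": "acct_c", "text": "Weather is nice",
     "created_at": datetime(2019, 2, 2, 15, 0)},
    {"user": "acct_d", "text": "Late to #opexample",
     "created_at": datetime(2019, 3, 1, 8, 0)},
]


def topical_slice(tweets, keywords, start, end):
    """Keep tweets posted in [start, end) whose text contains any keyword."""
    lowered = [k.lower() for k in keywords]
    return [
        t for t in tweets
        if start <= t["created_at"] < end
        and any(k in t["text"].lower() for k in lowered)
    ]


slice_feb = topical_slice(
    TWEETS, ["#opexample"], datetime(2019, 2, 1), datetime(2019, 2, 8)
)
print([t["user"] for t in slice_feb])  # ['acct_a', 'acct_b']
```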

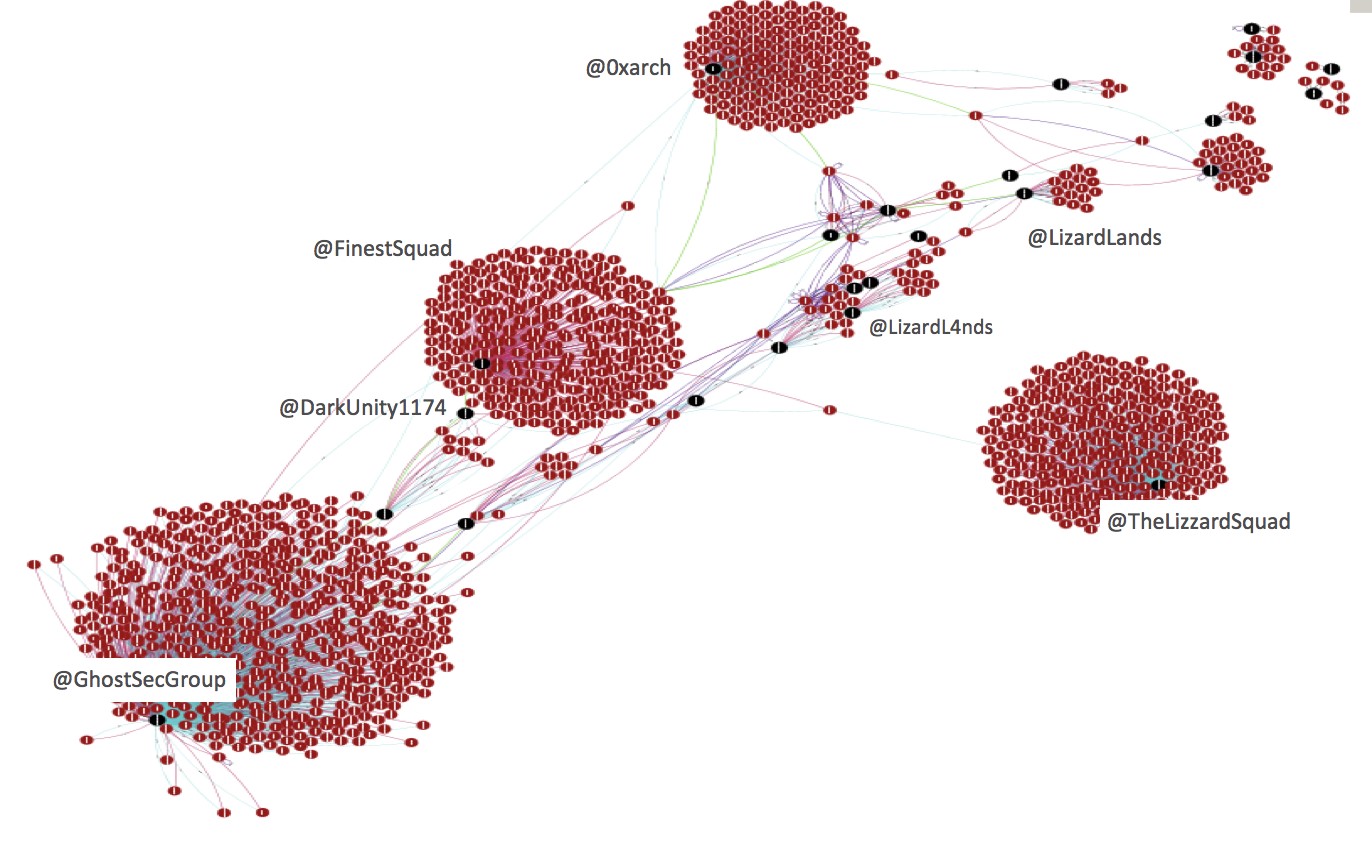

Figure 1 visualizes these relationships. Increasing its timeframe and analyzing a larger piece of data shows a wider view that reveals clusters of related accounts and traces of how information propagated between these clusters. It is also possible to identify the accounts that act individually, those that heavily interact with other groups, and even the social media bots. We observed different use cases of Twitter among different communities.

Figure 1. An example of a topical slice: The graph was produced using a slice of data that matches “Anonymous”-related activities on Twitter within a period.

Figure 1 shows the relationships of Twitter accounts that appear to correlate to various hacktivist groups based on a number of indicators such as hashtags. Hacktivism hashtags were fed as an initial filter to collect the input data. This resulted in social activity clusters forming around several accounts such as GhostSecGroup, TheLizzardSquad, DarkUnity1174, and FinestSquad, among others. They are the leaders of their own clusters, as most of the activities were centered on these accounts. The others mainly served to further distribute the information/content.
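One simple way to approximate the "leader" observation is to rank accounts by inbound interactions and attach every other account to the leader it interacts with most. The sketch below assumes a hypothetical edge list and invented account names; the actual clustering method used for Figure 1 is not described in the text, so this is only an illustration.

```python
from collections import Counter, defaultdict

# Hypothetical edges: (account that retweets or quotes, account being amplified).
EDGES = [
    ("u1", "leader_a"), ("u2", "leader_a"), ("u3", "leader_a"),
    ("u4", "leader_b"), ("u5", "leader_b"),
    ("u1", "leader_b"), ("u1", "leader_a"),
]


def find_leaders(edges, top_n=2):
    """Return the top_n accounts ranked by number of inbound interactions."""
    inbound = Counter(target for _, target in edges)
    return [account for account, _ in inbound.most_common(top_n)]


def assign_clusters(edges, leaders):
    """Map each account to the leader it interacts with most often."""
    per_user = defaultdict(Counter)
    for source, target in edges:
        if target in leaders:
            per_user[source][target] += 1
    return {user: counts.most_common(1)[0][0] for user, counts in per_user.items()}


leaders = find_leaders(EDGES)
clusters = assign_clusters(EDGES, leaders)
print(leaders)   # ['leader_a', 'leader_b']
print(clusters)
```

Accounts that interact with several leaders, like `u1` here, are the ones that bridge clusters and help information propagate between them.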

The Good: Social Media as a Viable Source of Threat Intelligence

Twitter isn’t just a personal platform for content sharing and promotion. We also saw bots sharing the most recent indicators of compromise (IoCs) and even threat detection rules. In fact, there’s publicly available information on how Twitter bots can be used to monitor internet-of-things (IoT) devices. There are also open-source honeypots that can log data onto Twitter.

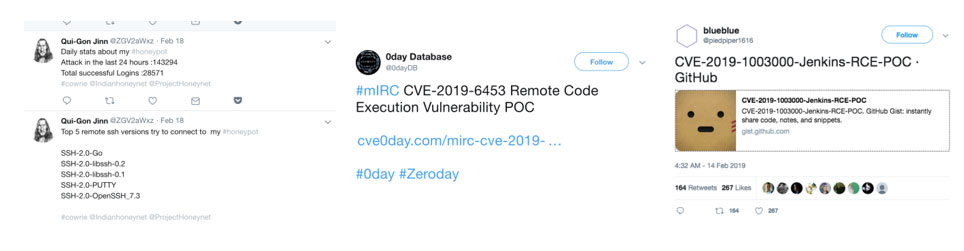

Figure 2. Example of a Twitter honeypot logger (top left); keywords/hashtags matching searches for vulnerability (top center); result of “CVE”- and “Github”-related searches (top right); and data visualized into a word cloud showing the distribution of CVE-related keywords (bottom)

Social media channels like Twitter can serve as alternative platforms to source threat intelligence. However, the information needs context to make it actionable.

Vulnerability Monitoring

The speed with which information is distributed on social media channels like Twitter makes them viable alternative platforms for monitoring whether known (N-Day) vulnerabilities are being exploited in the wild. This information can help enrich an organization’s situational awareness, that is, its understanding of the ever-changing environments where critical assets and sensitive data are stored and processed. Security teams can then use this visibility to assess and verify if their online premises are vulnerable or exposed.

To demonstrate social media's use in threat monitoring, we searched for specific keywords, such as “0-day”, “CVE-”, “CVE-2018-*”, “CVE-2019-*”, and “bugbounty”. The search yielded interesting results, shown in Figure 2, as we were able to see the distribution of context per mention of “CVE”-related keywords on Twitter. We then used additional CVE-related tags/hashtags to get a deeper look into these search results, as this surfaced more context, details, and discussions on recently disclosed vulnerabilities. This is especially useful for security operations (SecOps) teams, as they can determine if there are existing proofs of concept (PoCs) that exploit these vulnerabilities. Searches with keyword combinations like “Github” and “CVE” can also yield GitHub repositories with PoCs for N-Day vulnerabilities.
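The keyword searches above can be approximated offline with regular expressions. This sketch counts CVE identifiers mentioned in a batch of tweet texts and notes GitHub links that appear alongside them. The sample tweets, the repository URL, and the function name `summarize` are made up, and a co-occurring GitHub link is only a hint that a PoC may exist, not proof.

```python
import re
from collections import Counter

# CVE IDs look like CVE-<year>-<4 or more digits>; matching is case-insensitive.
CVE_RE = re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE)
GITHUB_RE = re.compile(r"https?://github\.com/[\w.-]+/[\w.-]+", re.IGNORECASE)

# Hypothetical tweet texts for illustration.
TWEETS = [
    "PoC for CVE-2019-3396 https://github.com/example/poc-repo #bugbounty",
    "Patch now: cve-2019-3396 is being exploited",
    "Old but gold: CVE-2018-15982 analysis",
]


def summarize(tweets):
    """Return (mention counts per CVE, GitHub links seen alongside each CVE)."""
    mentions = Counter()
    candidate_links = {}
    for text in tweets:
        cves = {match.upper() for match in CVE_RE.findall(text)}
        mentions.update(cves)
        links = GITHUB_RE.findall(text)
        if links:
            for cve in cves:
                candidate_links.setdefault(cve, set()).update(links)
    return mentions, candidate_links


mentions, candidate_links = summarize(TWEETS)
print(mentions.most_common())
print(candidate_links)
```

Any repository surfaced this way still needs manual review before a SecOps team treats it as a working exploit.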

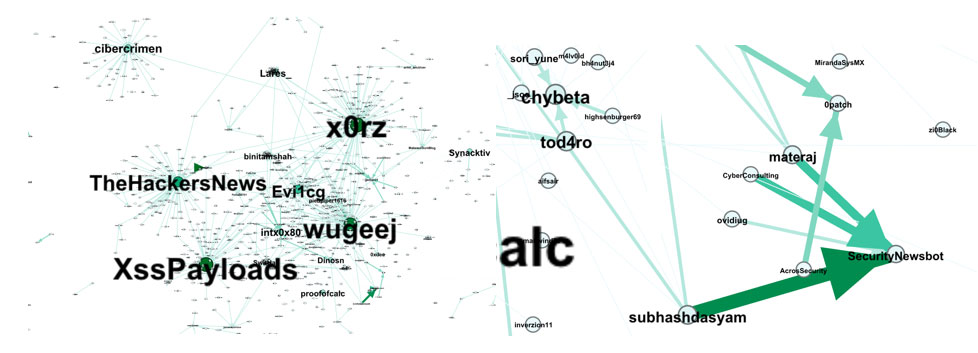

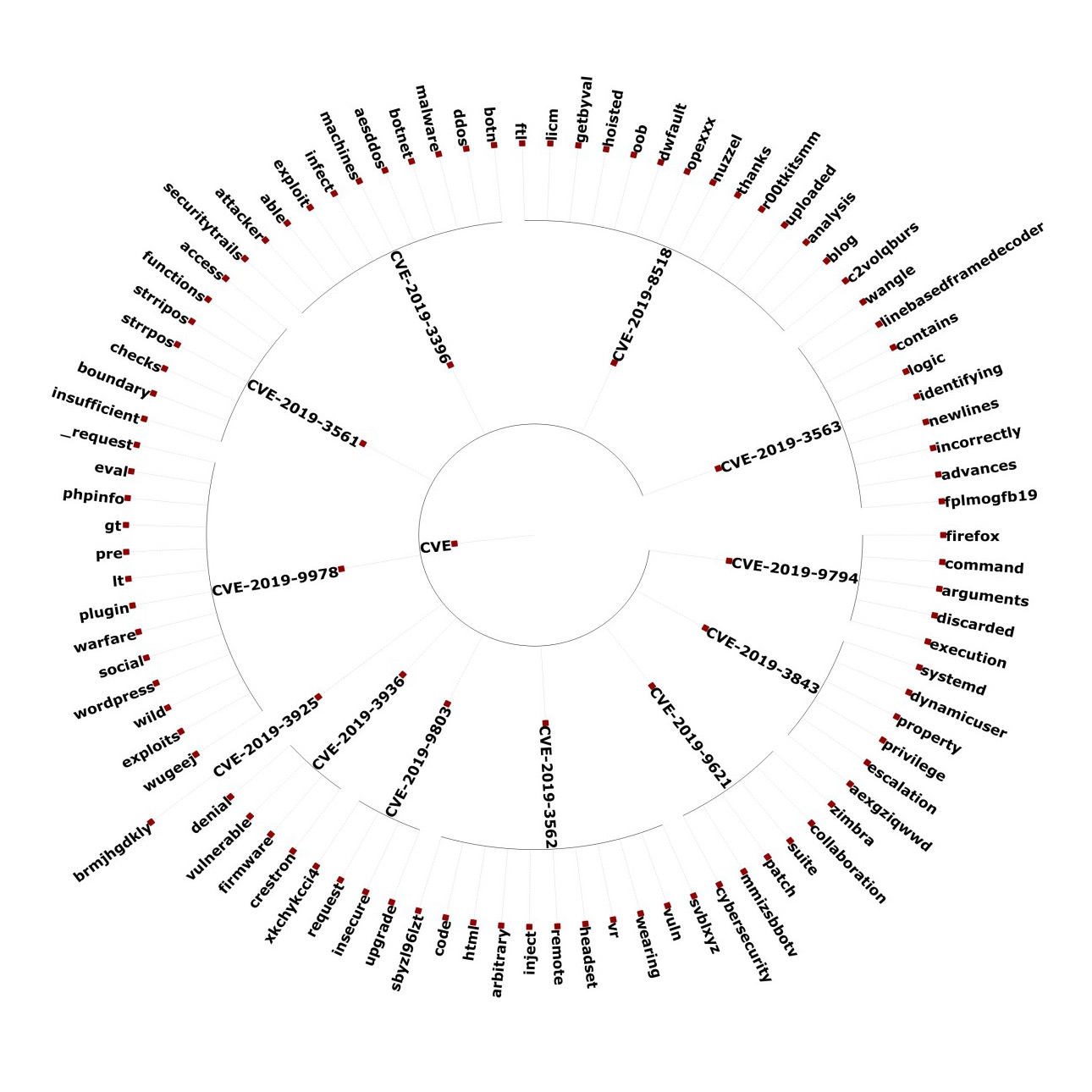

Figure 3 shows the breakdown of users discussing different CVEs in a topical slice we analyzed. CVEs that appear larger in the graph were the most discussed.

Figure 3. Diagram showing the relationships of Twitter accounts that actively discuss specific vulnerabilities

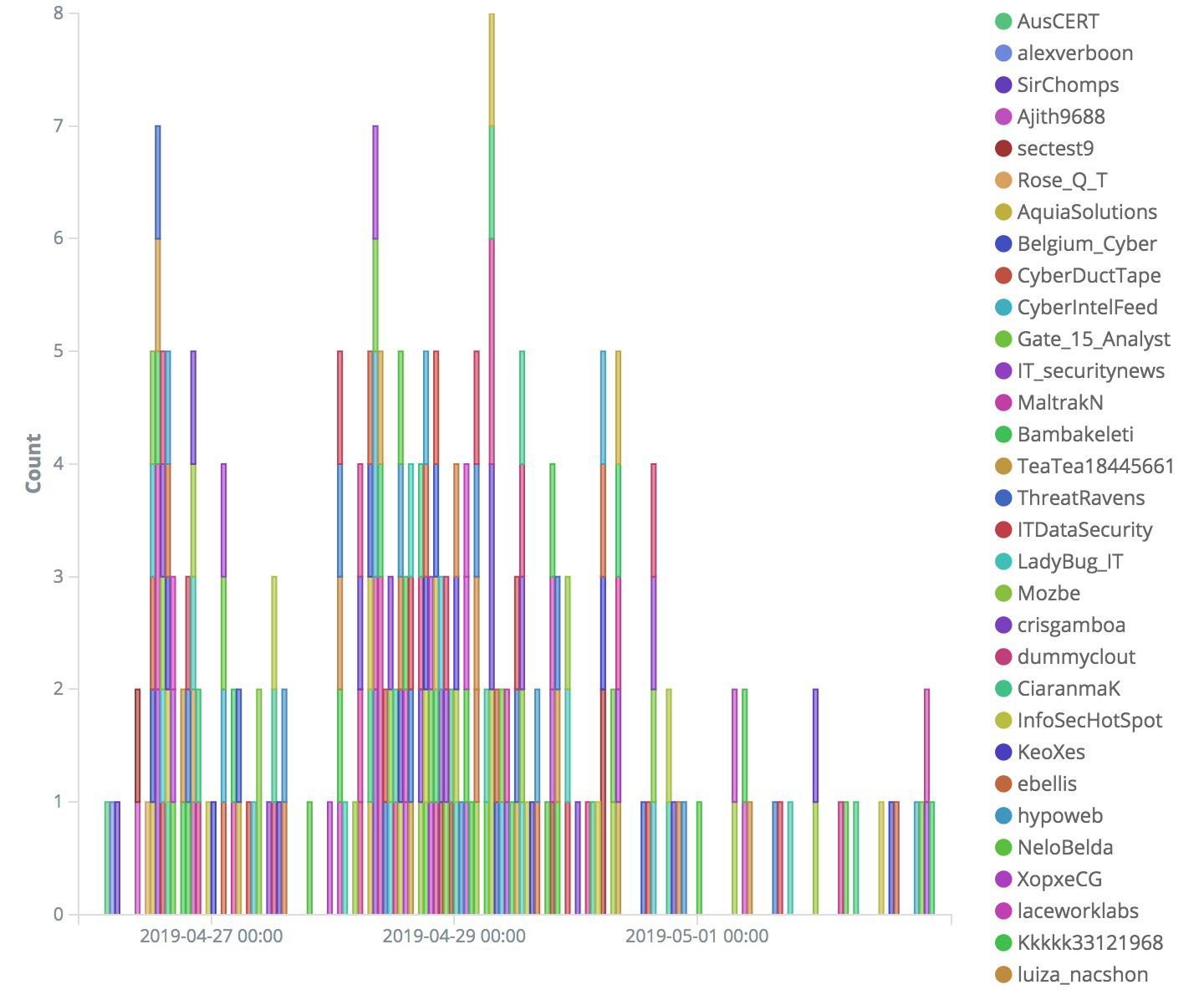

As shown in Figure 4, narrowing down the searches to a fixed number of Twitter accounts resulted in a social interaction graph that showed interesting accounts and the most useful conversations about particular CVEs. A closer look into this graph reveals Twitter accounts that serve as aggregators of cybersecurity-related news.

A Twitter-based program monitoring for threats can focus on these accounts, as they provide related and topical news and information. These accounts can be validated through their number of retweets or followers, but note that these numbers can also be fabricated by social media bots.

Figure 4. Graph showing interactions of Twitter accounts and discussions on particular vulnerabilities or threats (left), and its zoomed-in version showing accounts that act as security news aggregators (right)

Actionable Threat Intelligence

For SOCMINT to be actionable, it should have accuracy, context, timeline, and time-to-live (TTL), which is the lifespan of data in the system or network. For instance, IoCs or detection signatures with context and timing related to the monitored threats are actionable threat intelligence.

Context provides insight and actionability to threat intelligence. An example is an IoC’s TTL, especially for network indicators. Attackers, for instance, could rent internet hosting with an IP address that a legitimate internet shop or café might use later on. Attackers could also compromise the latter’s assets. Domains used by attackers can be pointed to well-known IP spaces when they’re not in use. The attackers would only need to configure the domain name system (DNS) to point to the real IP addresses when needed, which helps them evade detection or obfuscate their trails.

These elements should be considered when applying threat intelligence, because using it haphazardly could have the opposite effect. In fact, there have been cases where the automation of the threat intelligence feed and misapplication of indicators on the security perimeter of a network caused it to be completely isolated from the internet.
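To make the TTL idea concrete, here is a minimal sketch that drops indicators from a feed once their assumed lifespan has passed, so that a stale IP address is not blocked indefinitely. The TTL values, the `Indicator` class, and the sample addresses (taken from documentation-reserved ranges) are all illustrative assumptions; appropriate lifespans depend on the threat and the environment.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumed lifespans per indicator type; real values are a policy decision.
ASSUMED_TTL = {
    "ip": timedelta(days=7),
    "domain": timedelta(days=30),
    "sha256": timedelta(days=365),
}


@dataclass
class Indicator:
    value: str
    kind: str            # one of the ASSUMED_TTL keys
    last_seen: datetime  # when the indicator was last reported as malicious

    def expires_at(self):
        return self.last_seen + ASSUMED_TTL[self.kind]


def active_indicators(indicators, now):
    """Keep only indicators whose TTL has not yet elapsed."""
    return [i for i in indicators if now < i.expires_at()]


now = datetime(2019, 3, 1)
feed = [
    Indicator("203.0.113.10", "ip", datetime(2019, 2, 27)),
    Indicator("198.51.100.7", "ip", datetime(2019, 1, 15)),
    Indicator("bad.example", "domain", datetime(2019, 2, 10)),
]
print([i.value for i in active_indicators(feed, now)])
# ['203.0.113.10', 'bad.example']
```

Filtering like this before indicators reach perimeter controls is one guard against the over-blocking scenario described above.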

Indicators of Compromise

Social media channels — and in our research’s case, Twitter — can also be used as an additional source for IoC mining. Hashtags like #ThreatHunting, for example, can provide information about ongoing or recent cybercriminal campaigns, data breaches, and cyberattacks. Figure 5 shows how this can also be extended to monitoring physical threats, such as incidents in critical infrastructure like airports.

For instance, when we visualized our topical slice that discussed airports, many of the messages gave flight status information (delays, end of registration, last calls, etc.). Interestingly, combining the prominent keywords — such as “airport” and “flight” — with “incident” results in different keywords and search results.

Figure 5. Tag/word cloud of discussions on airports, with keywords “flight” or “airport” prominently used (top); and related content that provides context on the keywords used when searching for incidents
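For the cybersecurity side of IoC mining, a rough sketch of pulling indicators out of tweet text follows. Researchers often "defang" indicators (for example, `hxxp` and `[.]`) so they are not clickable; the snippet reverses that before matching. The patterns are deliberately simple, the sample tweet is invented, and the IPv4 pattern does not validate octet ranges, so results would still need vetting.

```python
import re


def refang(text):
    """Undo common defanging so that indicators can be matched."""
    return text.replace("hxxp", "http").replace("[.]", ".").replace("(.)", ".")


IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")  # no octet range check
SHA256_RE = re.compile(r"\b[a-fA-F0-9]{64}\b")
URL_RE = re.compile(r"https?://\S+")


def extract_iocs(text):
    """Return candidate IPv4 addresses, SHA-256 hashes, and URLs found in text."""
    clean = refang(text)
    return {
        "ipv4": sorted(set(IPV4_RE.findall(clean))),
        "sha256": sorted({h.lower() for h in SHA256_RE.findall(clean)}),
        "url": sorted(set(URL_RE.findall(clean))),
    }


# Hypothetical tweet; the hash is a placeholder, not a real sample.
tweet = (
    "#ThreatHunting new sample " + "ab" * 32
    + " C2 at 203[.]0[.]113[.]10 via hxxp://example[.]com/gate.php"
)
print(extract_iocs(tweet))
```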

Threat Intelligence Sharing

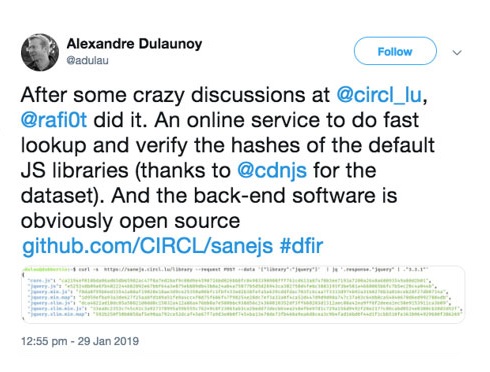

Knowledge sharing is ingrained within the information security (InfoSec) community. Novel techniques employed to handle incident response, when shared, save time for other security teams facing similar situations. They can range from NetFlow analysis tools and open-source repositories of security-enabling JavaScript (JS) libraries to knowledge bases of specific incident response plans.

Many security researchers also share threat detection rules via social media, either manually or automatically, that other analysts can use. Artifacts like YARA rules, for instance, can be used for file analysis and endpoint protection. They can also serve as additional countermeasures against threats. Network intrusion detection system (IDS) rules can also be used to improve attack detection ratios, although security teams must validate them and beware of poisoning attacks.

Figure 6. An example of how Twitter was used to share tools or techniques with the InfoSec community

Contextual Knowledge of IoCs

A malicious or suspicious binary raises red flags, especially when detected in a specific environment. Even if an IPS or endpoint security system blocks a threat, it still needs to be further assessed or examined. Timely contextual information is important in incident response. These assessments can be useful when similar incidents are encountered in the future:

- How long the attacker was in the compromised network, or if lateral movement was carried out

- The initial point of compromise and the stage in the kill chain where the attack was detected

- The attack vectors and vulnerabilities exploited

- If the attack was targeted or opportunistic

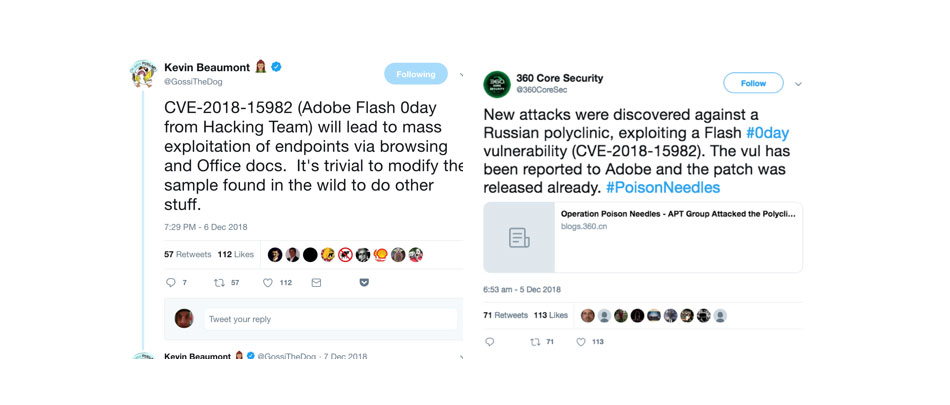

The security team also has to assess what tasks need to be prioritized and how much effort and resources are needed while also continuously monitoring and detecting other threats. Figure 7 shows that social media can also provide context on specific threats, like how a vulnerability is being exploited in the wild. As an example, we saw tweets about a threat exploiting a vulnerability in Adobe Flash (CVE-2018-15982), possibly to target a medical institution in Russia.

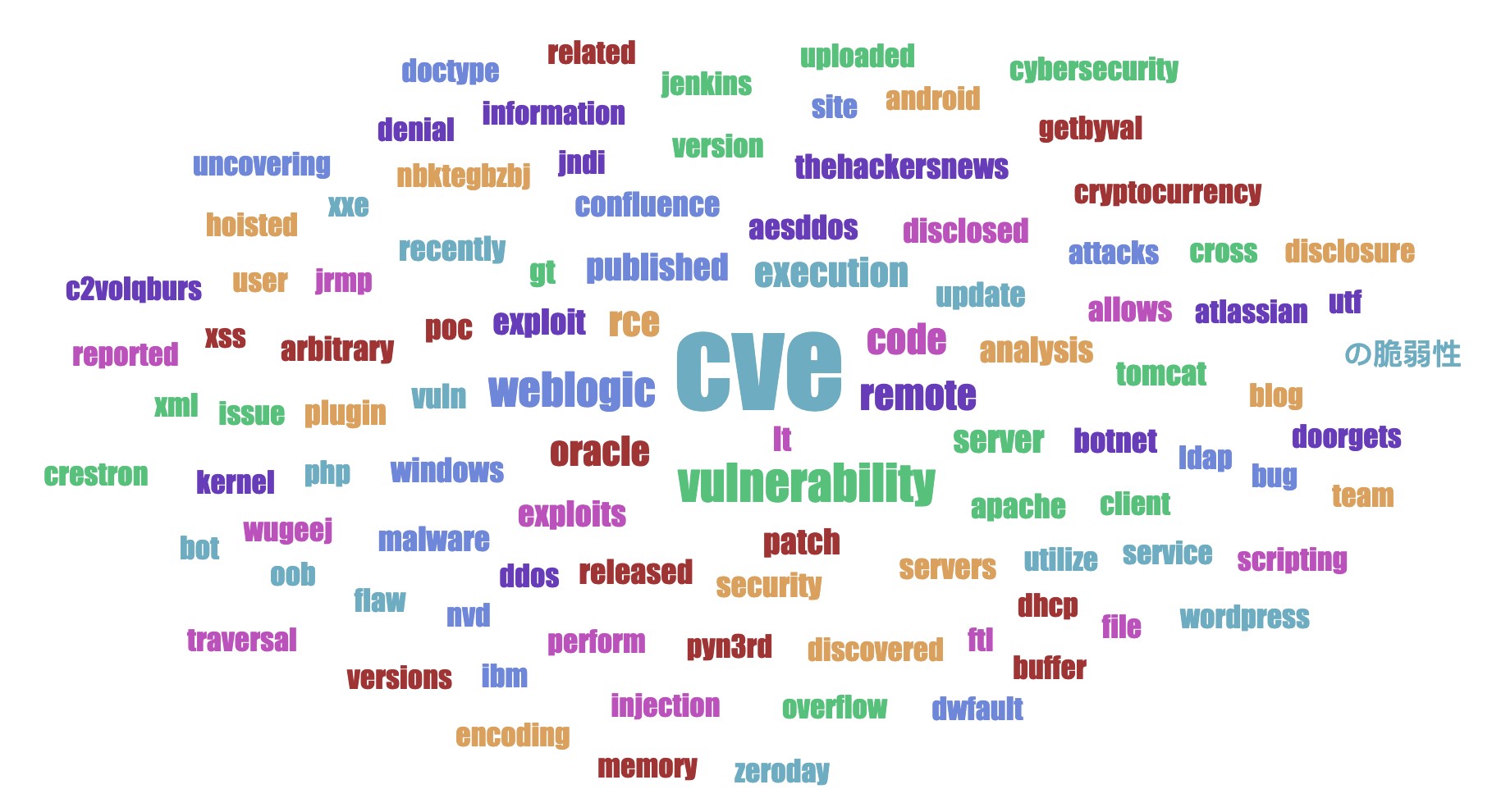

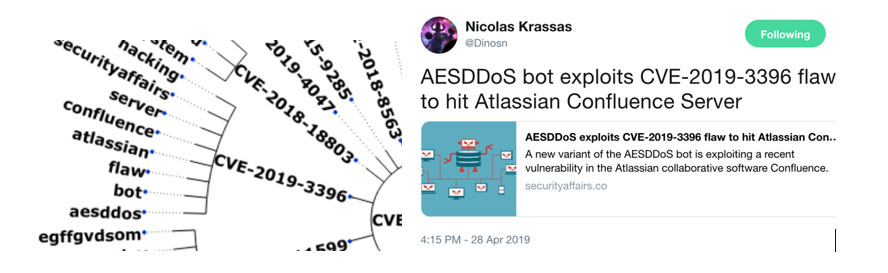

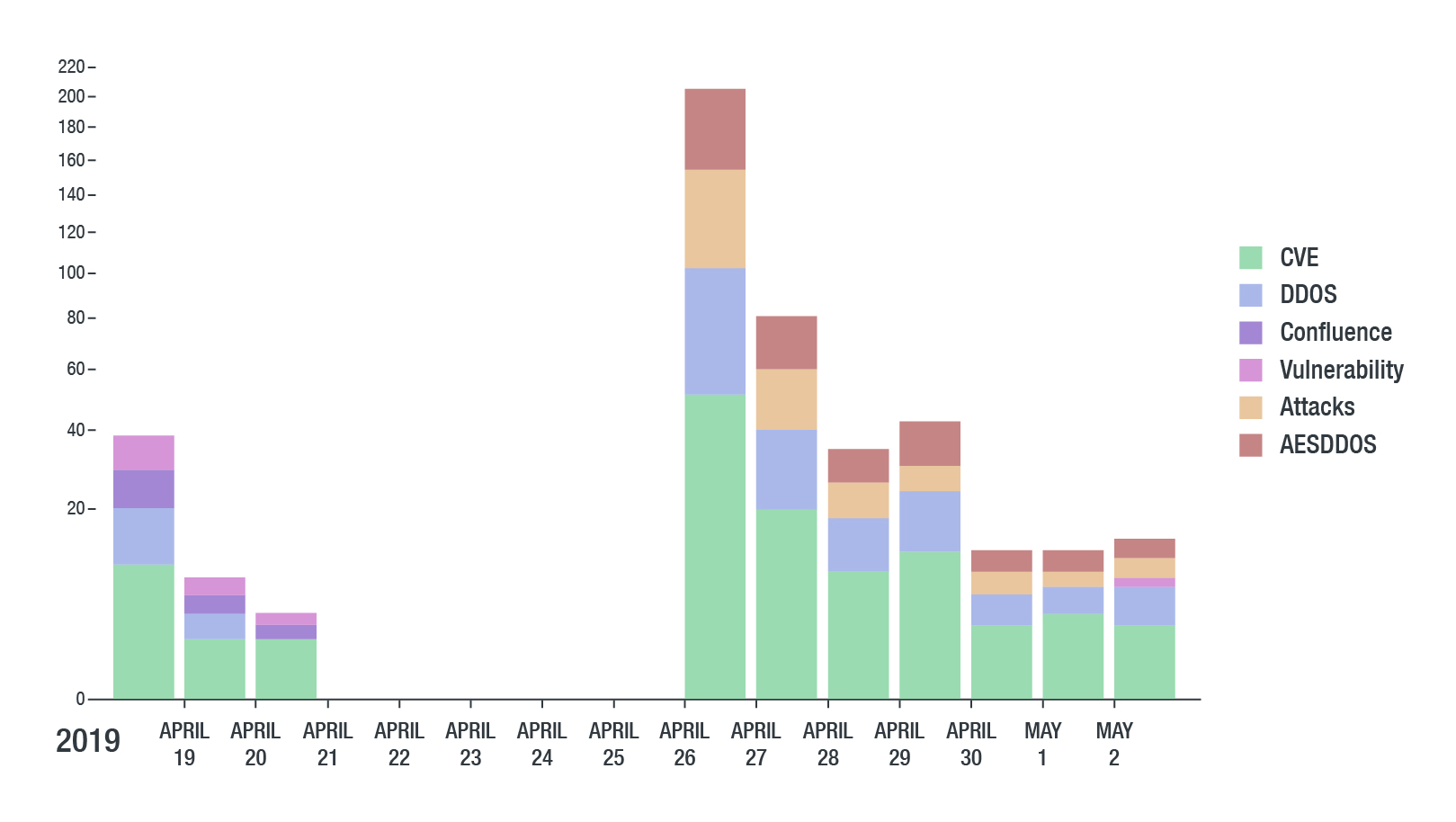

It’s also possible to automate the semantic analysis of the context of vulnerabilities. Our tool further analyzed topical slices (broken down per week) containing discussions on vulnerabilities. The resulting visualization (Figure 7, bottom) matched the vulnerabilities being discussed to keywords that indicate the affected software and the vulnerability’s impact. For example, CVE-2019-3396 is matched to the keyword “confluence”, which is consistent with the finding that an exploit for the vulnerability was scripted into a distributed-denial-of-service (DDoS) bot.

Figure 7. Tweets that provide context for CVE-2018-15982 (top); visualization of vulnerability-related keywords and how they were matched to other keywords (center); and how CVE-2019-3396 was exploited to target a Russia-based polyclinic (bottom)
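A very simplified stand-in for this kind of keyword matching is a co-occurrence count: for each CVE, tally the other words that appear in the same tweets. The stopword list, sample tweets, and the function name `cooccurrence` below are assumptions, and the research tool's actual semantic analysis is likely more involved than this sketch.

```python
import re
from collections import Counter, defaultdict

CVE_RE = re.compile(r"CVE-\d{4}-\d{4,}", re.IGNORECASE)
WORD_RE = re.compile(r"[a-z][a-z0-9-]{2,}")  # words of three or more characters
STOPWORDS = {"the", "and", "for", "with", "via", "used", "was", "are"}

# Hypothetical tweet texts for illustration.
TWEETS = [
    "CVE-2019-3396 confluence exploit used by DDoS bot",
    "Confluence servers hit via CVE-2019-3396",
    "CVE-2018-15982 flash exploit in the wild",
]


def cooccurrence(tweets):
    """Return {CVE: Counter of words that appear in the same tweets}."""
    table = defaultdict(Counter)
    for text in tweets:
        cves = {c.upper() for c in CVE_RE.findall(text)}
        remainder = CVE_RE.sub(" ", text).lower()  # drop the IDs themselves
        words = set(WORD_RE.findall(remainder)) - STOPWORDS
        for cve in cves:
            table[cve].update(words)
    return table


table = cooccurrence(TWEETS)
print(table["CVE-2019-3396"].most_common(1))  # [('confluence', 2)]
```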

Further visualizing the prevalence of CVE-, CVSS-, and CVE-2019-3396-related tweets, we saw that keywords like “infected” appeared after some time. This could provide additional context to a vulnerability, as it can show whether the vulnerability is being actively exploited. But as with any information from social media, it should pass sanity checks, and the reputation of its source should be considered, before it is integrated into a security team’s threat mitigation process.

Figure 8. Visualization of prevalently used keywords related to CVE-2019-3396, broken down into sources of information (top) and context about the vulnerability (bottom)
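The observation that words like “infected” show up later can be checked with a simple weekly tally. This sketch buckets tweets by ISO week and records the first week in which each keyword appears; the dates, texts, and function names are hypothetical.

```python
from collections import Counter, defaultdict
from datetime import date

# Hypothetical (date, text) pairs about a single vulnerability.
TWEETS = [
    (date(2019, 4, 1), "CVE-2019-3396 advisory published, patch available"),
    (date(2019, 4, 10), "PoC exploit for CVE-2019-3396 released"),
    (date(2019, 4, 17), "Servers infected through CVE-2019-3396 exploit"),
]


def weekly_keyword_counts(tweets, keywords):
    """Return {(iso_year, iso_week): Counter of keyword hits}, sorted by week."""
    buckets = defaultdict(Counter)
    for day, text in tweets:
        iso_year, iso_week, _ = day.isocalendar()
        lowered = text.lower()
        for keyword in keywords:
            if keyword in lowered:
                buckets[(iso_year, iso_week)][keyword] += 1
    return dict(sorted(buckets.items()))


def first_seen(weekly):
    """Return {keyword: first (iso_year, iso_week) in which it appears}."""
    seen = {}
    for week, counts in weekly.items():
        for keyword in counts:
            seen.setdefault(keyword, week)
    return seen


weekly = weekly_keyword_counts(TWEETS, ["patch", "exploit", "infected"])
print(first_seen(weekly))
# {'patch': (2019, 14), 'exploit': (2019, 15), 'infected': (2019, 16)}
```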

The Bad: Abusing Social Media to Spread Fake News

Abusing social media to promote fake news or mar a public figure or organization’s reputation isn’t new. In fact, the proliferation of misinformation is readily offered as a service in underground or gray marketplaces. Gray activities are more concerning, however, because while they're suspicious, they can’t be classified as malicious. They can be unregulated or illegal in several jurisdictions. They also don’t blatantly violate the social network’s terms of service or policies, which makes them tricky to ban unless vetoed by legal request. The way information spreads on social media also makes it difficult to attribute these activities accurately.

To further validate this, we looked at a recent example of social media being abused to manipulate public perception involving discussions on export issues in Russia. One of the country’s biggest meat producers was heavily affected when an interview of its CEO about importation of meat and milk products triggered a wave of campaigns calling for a boycott of the company’s products.

Figure 9. Visualization of interactions on Twitter discussing the boycott of the company’s products; red dots indicate suspected social media bots

We visualized the interactions during a three-day period (Figure 9) and saw that the tweets were also posted by bots, which we’ve identified through criteria like pronounceability tests of the account name/handle, registration date, and frequency of posts, among others. A closer look into these accounts revealed that certain posts from these bots got more attention than others. The followers of these bots paint a similar picture. While there are no activities obviously violating Twitter’s abuse policies, these accounts are mostly part of a tool or service used to amplify messages and opinions.
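The criteria mentioned above (handle pronounceability, registration date, and posting frequency) can be combined into a crude score. Every threshold in this sketch is an assumption chosen for illustration, as are the function names and sample accounts; the actual tests and cutoffs used in the research are not specified, and a score like this should only flag accounts for closer review.

```python
import re
from datetime import date

VOWELS = set("aeiou")


def looks_unpronounceable(handle):
    """Heuristic: few vowels, many digits, or a long run of consonants."""
    lowered = handle.lower()
    letters = [c for c in lowered if c.isalpha()]
    if not letters:
        return True
    vowel_ratio = sum(c in VOWELS for c in letters) / len(letters)
    digit_ratio = sum(c.isdigit() for c in handle) / len(handle)
    consonant_run = re.search(r"[bcdfghjklmnpqrstvwxz]{5,}", lowered) is not None
    return vowel_ratio < 0.2 or digit_ratio > 0.4 or consonant_run


def bot_score(handle, registered, tweet_count, today):
    """Return 0 to 3; higher means more bot-like under the assumed thresholds."""
    age_days = max((today - registered).days, 1)
    score = 0
    if looks_unpronounceable(handle):
        score += 1
    if age_days < 30:                  # assumed cutoff for a "new" account
        score += 1
    if tweet_count / age_days > 50:    # assumed cutoff for posts per day
        score += 1
    return score


today = date(2019, 2, 11)
print(bot_score("xkqzrtb84213", date(2019, 2, 1), 2000, today))  # 3
print(bot_score("alice_sec", date(2015, 1, 1), 3000, today))     # 0
```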

Another example is the recent campaign calling for a boycott of the popular song contest Eurovision. Bots posted many of the tweets, sometimes accompanied by political images.

Figure 10. A Twitter account’s interactions with its followers; indicators of automation, such as names that appear machine-generated, strongly suggest that an automated social media network is being used

Figure 11. Visualization of interactions on Twitter discussing the boycott of Eurovision, which also involved accounts that appear to use automation tools

The Ugly: Abusing Twitter for Cybercrime and Fraud

Social media is a double-edged sword: cybercriminals and attackers can abuse it for malicious purposes.

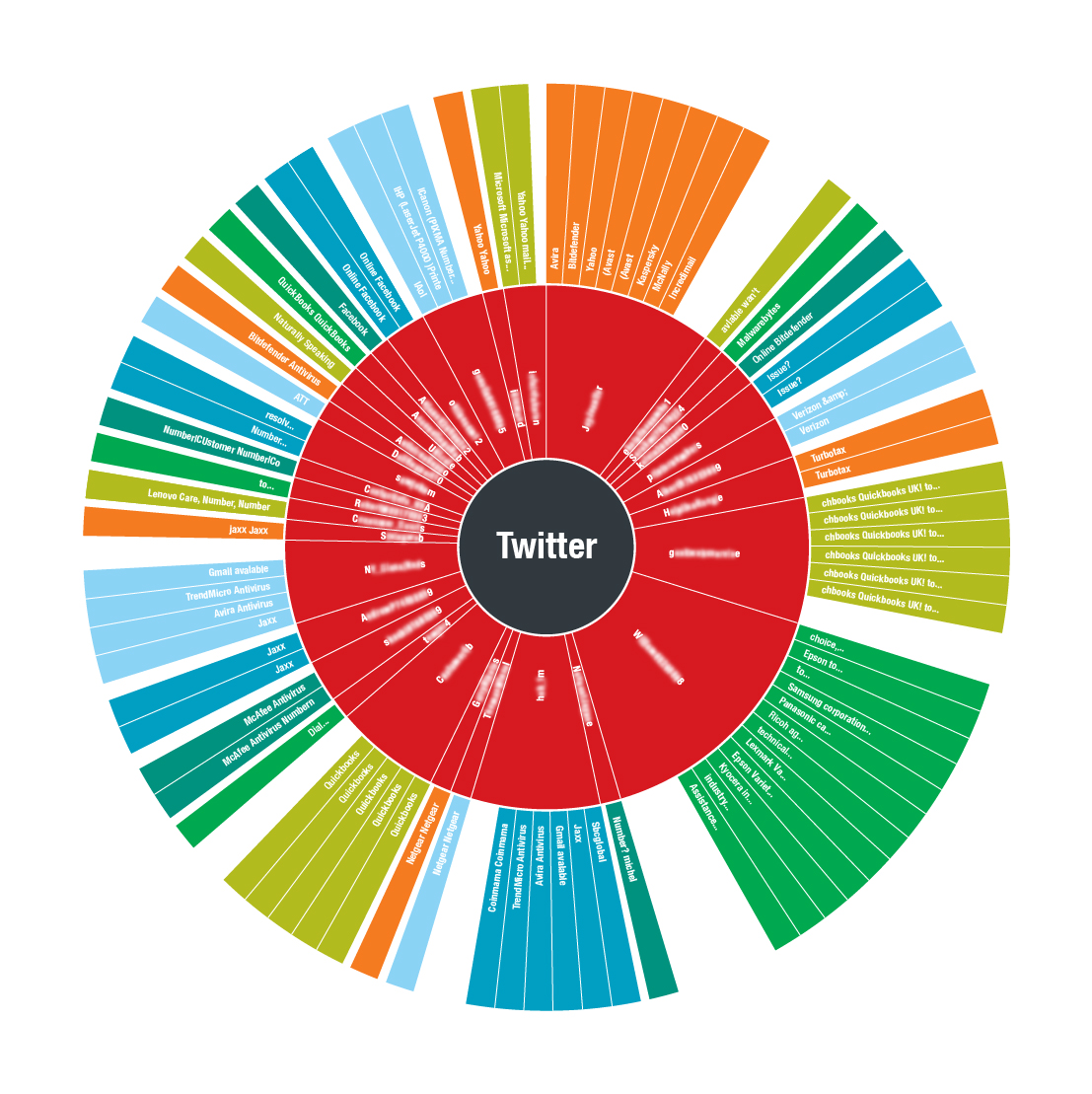

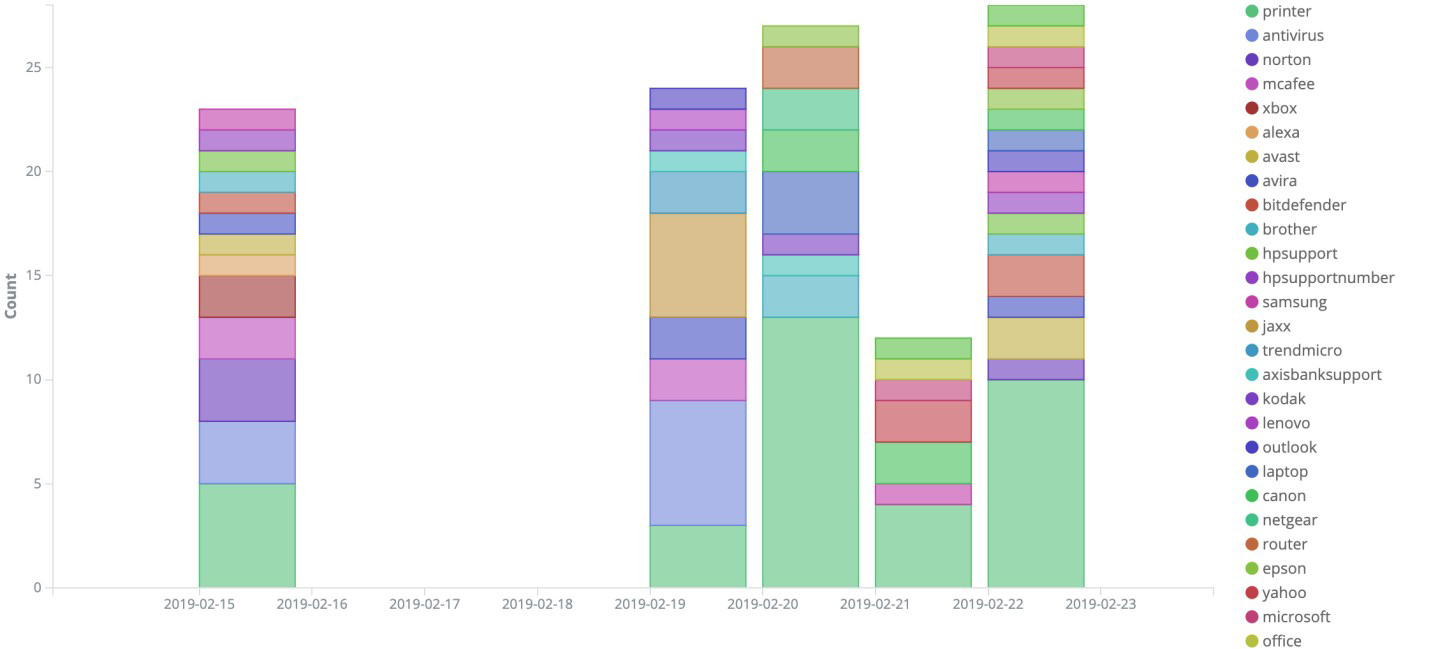

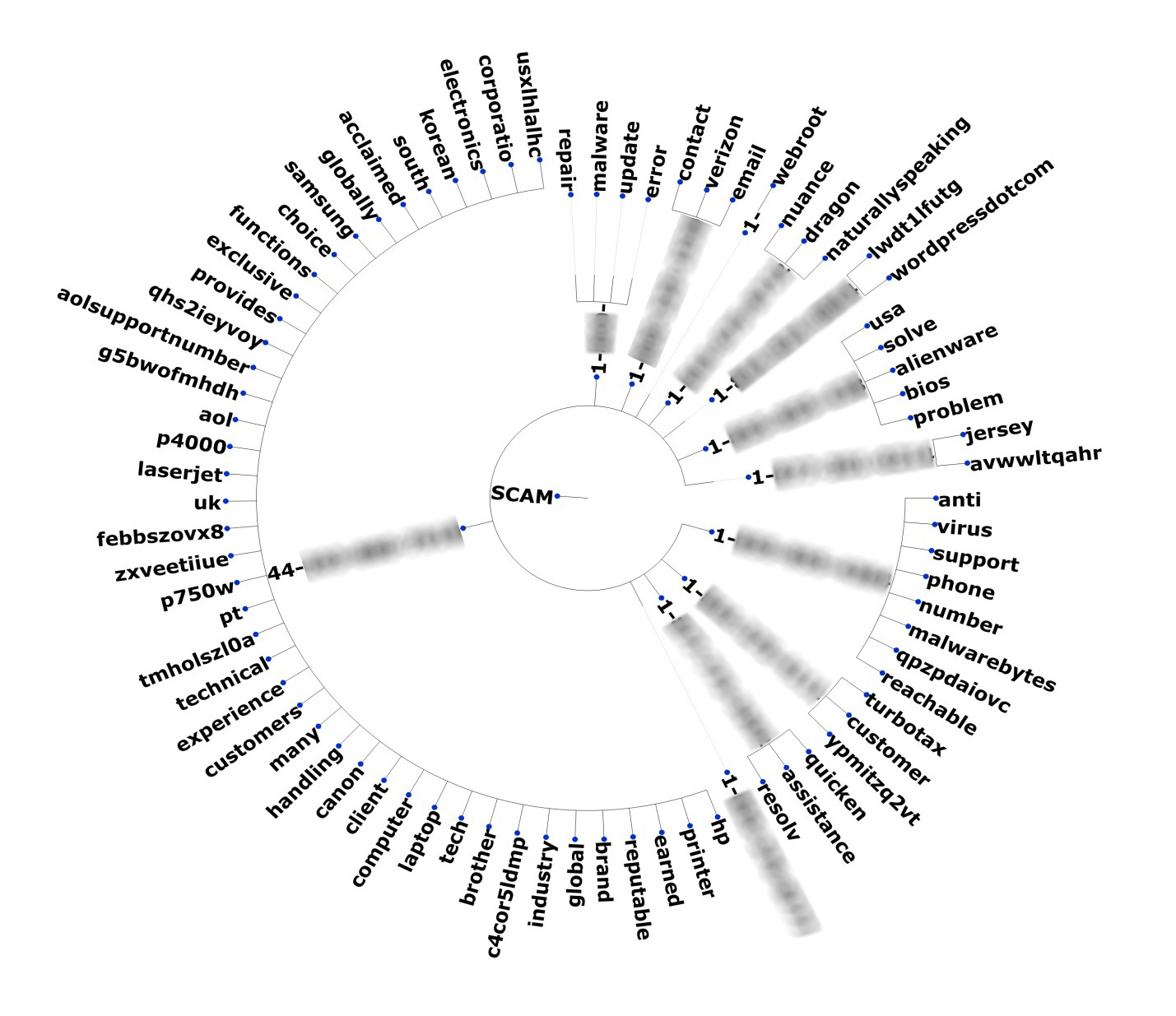

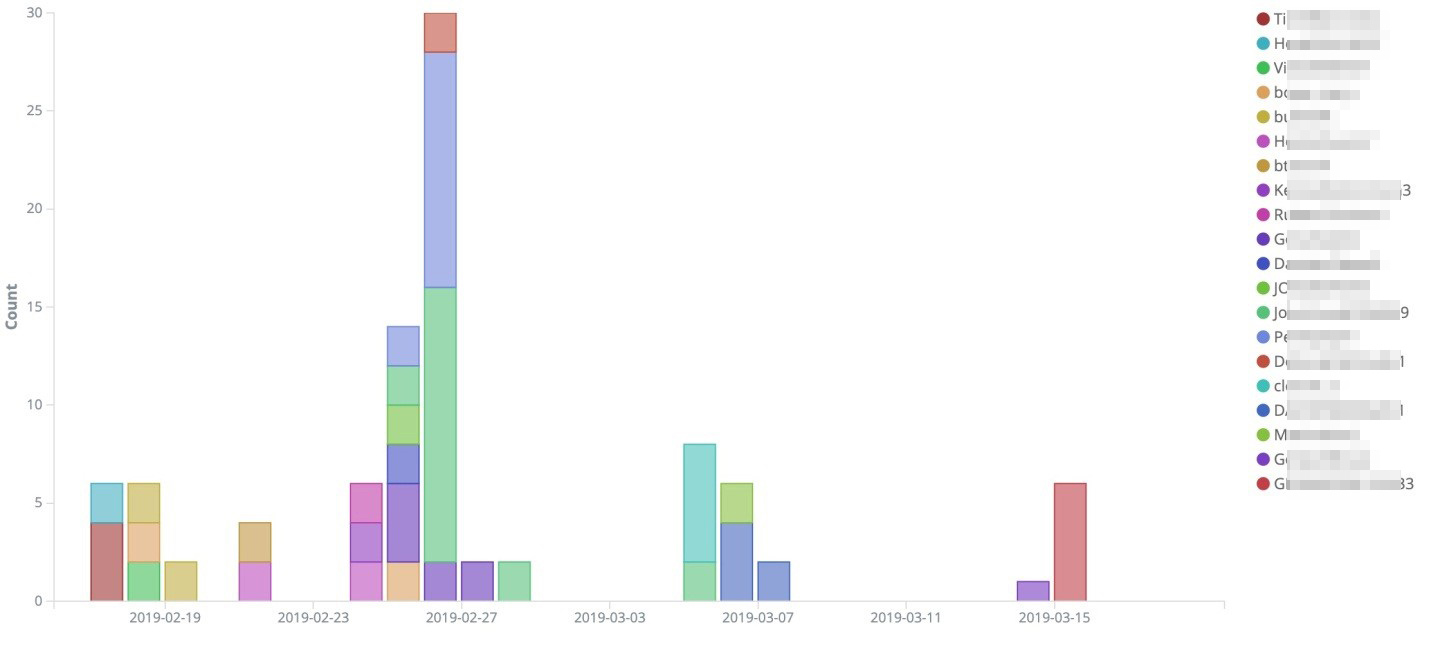

Technical Support Scams

Figure 12 shows a visualization of tech support scams that used Twitter to boost their activities within a three-day period in February 2019. The graph includes the Twitter accounts involved in the scams, and the product or service they impersonated. Figure 13 narrows this visualization down, revealing that all of these tweets had fake contact numbers and websites. This fake information wasn't just distributed on Twitter, as we’ve also seen it on other social networks like Facebook, YouTube, Pinterest, and Telegram. Fraudsters are also increasingly using search engine optimization (SEO) techniques to perpetrate the scams.

Figure 12. Visualization of tech support scams found within a three-day period in February 2019, including the involved Twitter accounts and the product they were mimicking

Figure 13. A zoomed-in visualization of tech support scams that actively posted fake contact numbers and websites

Figure 14 (bottom) shows the semantical context of the phone numbers that the scammers posted on Twitter. Both diagrams show the prevalence of certain keywords used, and the Twitter accounts related to the fake phone numbers and the products they posed as.

Directly thwarting tech support scams is tricky, as they don’t rely on malicious executables or hacking tools. They instead prey on unsuspecting victims through social engineering. Fortunately, Twitter proactively enforces its policies against abuse. Many of the scammers’ accounts we saw, for instance, were quickly taken down.

Figure 14. Keywords used (top) and semantical context (bottom) of tech support scams seen on Twitter
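Linking phone numbers to the accounts and products around them can be sketched as a grouping step. The snippet below extracts North American style numbers, normalizes them to ten digits, and groups the posting accounts and mentioned product keywords per number. The phone pattern, the product keyword list, and the sample posts (which use fictional 555-01xx numbers) are assumptions for illustration.

```python
import re
from collections import defaultdict

# Loose pattern for North American style numbers; other formats are not covered.
PHONE_RE = re.compile(r"\+?1?[\s.-]?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}")
PRODUCTS = ["printer", "router", "antivirus"]  # hypothetical impersonated products

# Hypothetical (account, text) pairs.
POSTS = [
    ("helpdesk_x1", "Call +1-800-555-0100 for Printer support"),
    ("support_q9", "Router issues? Helpline 1 (800) 555 0100"),
    ("fixit_77", "Antivirus expired? Dial (888) 555-0199 now"),
]


def normalize(number):
    """Strip formatting and keep the last ten digits."""
    return re.sub(r"\D", "", number)[-10:]


def group_by_number(posts):
    """Return {number: {"accounts": set, "products": set}}."""
    groups = defaultdict(lambda: {"accounts": set(), "products": set()})
    for account, text in posts:
        lowered = text.lower()
        for raw in PHONE_RE.findall(text):
            entry = groups[normalize(raw)]
            entry["accounts"].add(account)
            entry["products"].update(p for p in PRODUCTS if p in lowered)
    return dict(groups)


groups = group_by_number(POSTS)
print(sorted(groups["8005550100"]["accounts"]))  # ['helpdesk_x1', 'support_q9']
```

A single number shared by several unrelated-looking accounts and products is the kind of pattern the zoomed-in scam visualization makes visible.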

Intermediate C&C Server

We saw how certain malware families were coded to connect to social media, using it as an intermediary for their C&C server. The Anubis Android banking malware, for example, uses Twitter and Telegram to check its C&C server. This involves Anubis’ bot herders passing C&C information to the infected Android device.

We analyzed a topical slice involving Twitter accounts used to distribute C&C information and saw how active some of these accounts were. We also identified several malware samples linked to particular Twitter accounts. These samples were likely written by a Russian speaker, as the code contains some references to transliterated Russian words like “perehvat” (intercept).

Figure 15. A topical slice of Anubis-related activity (based on the number of posts) on Twitter by different affiliated accounts

Figure 16. A fake Twitter account that Anubis used as a communication channel

Anubis is just one of many threats that abuse social media. The Android malware FakeSpy is also known to abuse social media platforms like Qzone, Suhu, Baidu, Kinja, and Twitter for its C&C communications. Threats like Elirks, which is used in cyberespionage campaigns, also abuse social media and microblogging services to retrieve their C&C information. Elirks’ operators abused social media channels that are popular in the countries of their targets, as this helps draw attention away. This evasion technique is also complemented by the obfuscation of their actual C&C servers behind public DNS services such as Google’s.

Steganography/Exfiltration Tool for Malware

We also saw the abuse of social media platforms like Twitter to hide malware’s routines and configurations, or the attacker-owned domains from which they can be retrieved. An example is how a threat, delivered by exploit kits or targeted attack campaigns, would use steganography (hiding code or data within an image) to retrieve its final payload. This is the case for a recently uncovered data-stealing malware that connects to a Twitter account and searches for HTML tags embedded in the image. The image is downloaded and parsed to extract hidden commands for retrieving C&C configurations, taking screenshots, and stealing data.

Figure 17. A Twitter account that a data-stealing malware uses to retrieve its malicious routines

Is SOCMINT valuable?

SOCMINT is a valuable addition to existing solutions, but organizations looking to incorporate it into their cybersecurity strategy should first identify its use case.

Social media has transformed many aspects of our lives. Our study showed that it can also play an important role in threat hunting. The rich volume of information available and distributed through social media makes it a viable platform for obtaining strategic, actionable, and operational threat intelligence. This can help enrich a security team’s capability to anticipate, preempt, monitor, and remediate threats as well as mitigate risks. Organizational PR and crisis management teams can also use social media intelligence to further understand how they can distribute information.

On the other hand, social media can be abused to orchestrate misinformation campaigns and serve as intermediary platforms to hide or carry out cybercriminal or malicious activities.

Organizations looking to incorporate SOCMINT into their cybersecurity strategy should first identify its use case. While this intelligence is a boon to security professionals, its value depends on how they apply it to address the organization’s risk profile. Effective SOCMINT requires good data to monitor potential sources of threats or online risks.

In an ever-changing threat landscape, security teams may find themselves swamped by a sea of data and consequently lose sight of threats or vulnerabilities that they need to prioritize. Data from social media should also have a life cycle, from processing and analysis to application of context and validation. This transforms raw data into actionable intelligence that can help make informed decisions — examining the threat, blocking an intrusion, increasing security controls, and investing in additional cybersecurity resources.
