The Illusion of Choice: Uncovering Electoral Deceptions in the Age of AI

Key Highlights

- The internet and social media enable the rapid spread of misinformation, and AI tools make it worse by making disinformation easier to produce and distribute.

- While GenAI can boost productivity, it can also be misused to create deepfakes and fake news. Its accessibility, speed, and scalability make this risk more alarming.

- Deepfake technology poses a major threat to election integrity by creating convincing manipulated content that can damage reputations, spread disinformation, and manipulate public opinion.

- Zero trust model: A cybersecurity approach built on the principle "trust nothing, verify everything." Applied to the information we consume, it helps guard against the misuse of AI and deepfakes in elections by promoting fact verification and validation.

How AI can affect elections: Global Perspectives

Part 1: Generative AI and opinion manipulation

Part 2: Guarding against manipulation

Elections are the cornerstone of modern democracy, an exercise where a populace expresses its political will through the casting of ballots. But as electoral systems embrace technology, they take on significant cybersecurity risks, not only to the infrastructure supporting an election but also to the people lining up at polling booths. In this article, we ask the question: Do our votes reflect our own independent choices, or are they shaped by covert forces manipulating our decisions?

While propaganda is nothing new, what's different today is that the medium used to transmit news and information has shifted from print and broadcast to the internet and social media. This has allowed misinformation campaigns to take root and spread quickly, with these platforms being abused to present fake realities that aren't based on fact. These campaigns are now supported by AI tools, which let actors produce disinformation with unprecedented speed and on a potentially massive scale. This content includes not only bogus stories and images but also fake audio and video, known as deepfakes.

How opinions are shaped and hijacked

Persuading someone to change their mind isn’t inherently wrong; it happens all the time in our conversations, the news we read, the podcasts we listen to, even the advertising we encounter. This free exchange of ideas is essential for personal growth and societal progress. However, there are ethical and legal limits to what we can say or do. It’s one thing to argue a position on its merits; it’s another to lie using alternative facts.

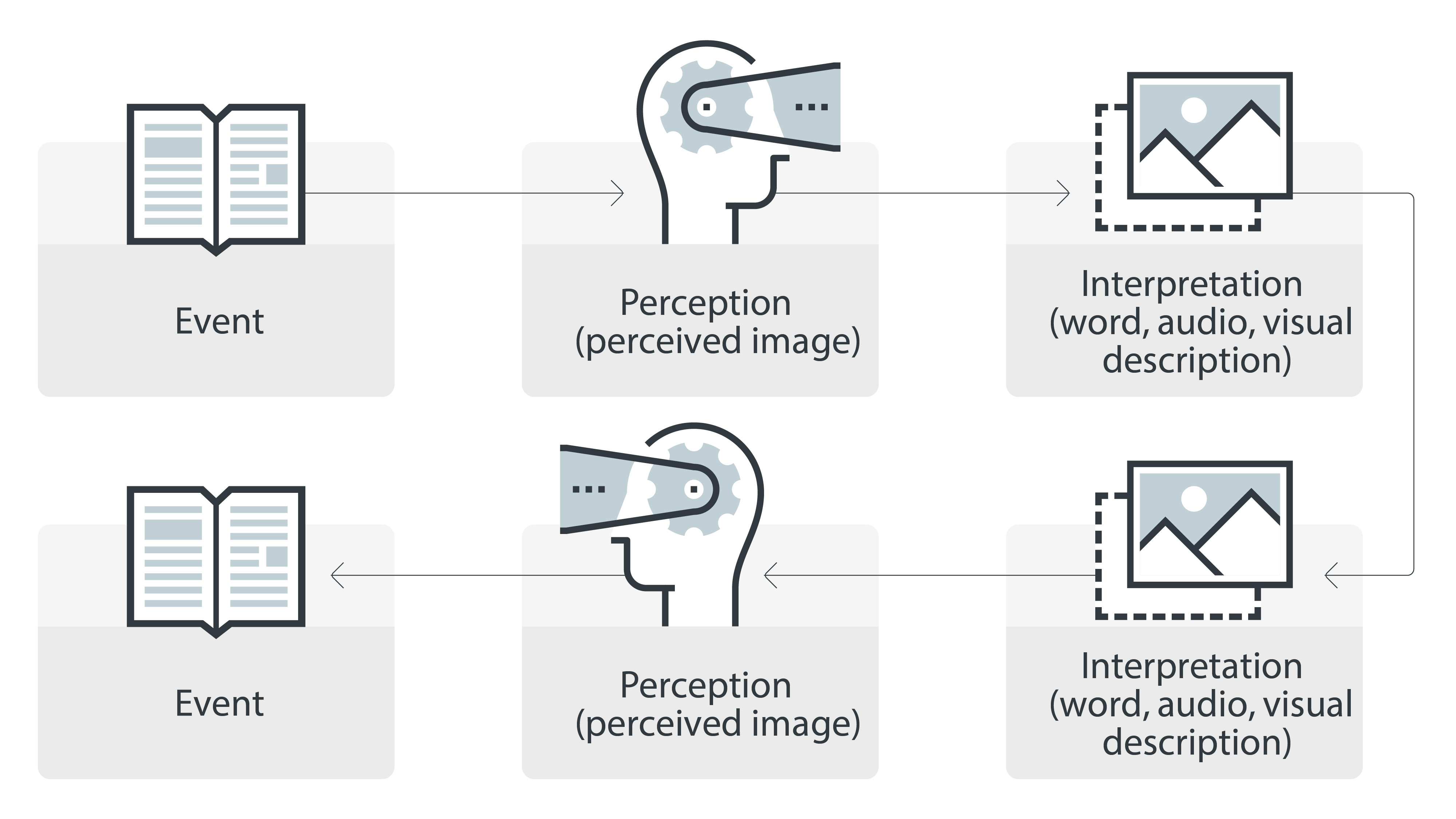

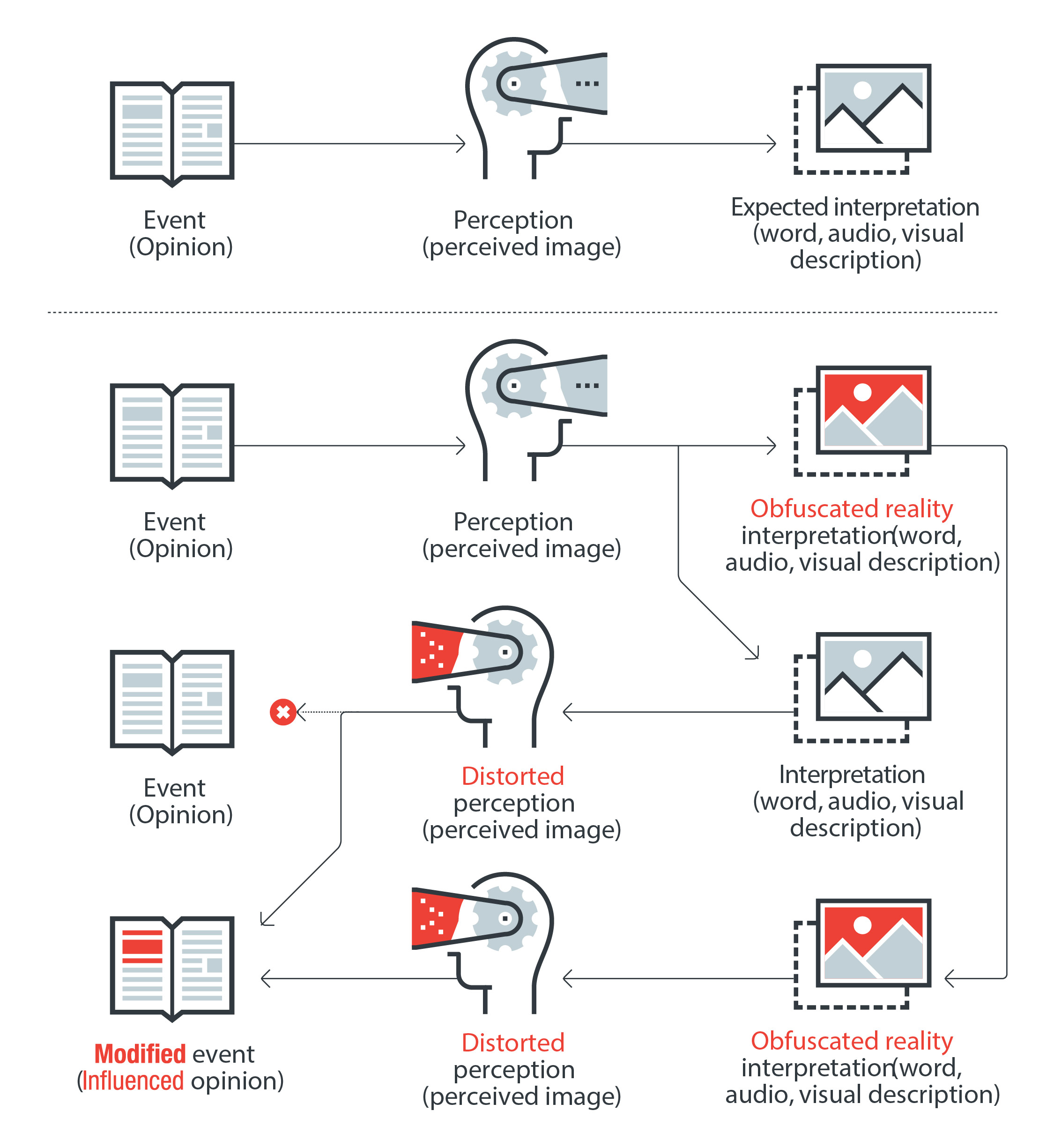

Opinions are shaped by the interaction between information and our personal biases. When we receive new information, we interpret it and form a perception, earnestly attempting to reconstruct the original message. Propaganda hijacks this process by injecting content designed to alter our perceptions through incendiary headlines, inflammatory tweets, and videos that exploit latent biases.

When a multi-pronged misinformation campaign reinforces this altered perception, it becomes increasingly difficult to distinguish between falsehoods and reality. At this point, Leon Festinger’s Theory of Cognitive Dissonance kicks in, prompting us to seek out only the information that aligns with our distorted reality. This leads us to listen to partisan podcasts, subscribe to fringe news, and join provocative online groups, all in an effort to feel comfortable with our beliefs. And that’s how we end up in an echo chamber.

This can be seen as a form of cognitive hacking, where the goal is to modify a person’s perception, rather than gain access to a network.

Figure 1. The ideal opinion formation process

Figure 2. Opinion formation process hijacked by propaganda

The dark side of GenAI

Generative AI, often abbreviated as GenAI, has been a hot topic for the last two years, and rightly so. By offering a range of benefits to productivity, creativity, and communications, GenAI has quickly become entrenched in both the workplace and the home. However, the very attributes that make GenAI so beneficial can also enable a wide range of digital, physical, and political threats.

GenAI can be exploited in multiple ways: rapidly creating fake news, producing deepfake video and audio, automating hacking, and crafting persuasive phishing messages. Its power to manipulate public opinion could undermine election integrity. GenAI also helps create appealing content for social media and gives foreign adversaries near-native language ability and cultural awareness.

This potential misuse becomes more concerning when we consider GenAI's key attributes: its accessibility (it is readily available and easy to use, even for those not well-versed in technology), its speed (it can produce output rapidly), and its scalability (it can operate on a massive scale).

AI-driven bots, for instance, can generate persuasive fake news articles and mimic human-like interaction on social media platforms. These bots can swiftly create and disseminate misinformation, posing a significant threat to the fairness of democratic processes.

Content-generation techniques could enhance disinformation campaigns by combining legitimate with false information and learning which types of content are the most effective and widely shared. Text content synthesis can be used to generate content for fake websites and to mimic a specific writing style. This could be exploited to imitate the writing style of an individual, such as a politician or candidate, to carry out fraudulent activities.

Just last August, an investigation by CNN and the Centre for Information Resilience (CIR) uncovered 56 fake accounts on X. These accounts were part of a coordinated campaign promoting the Trump-Vance ticket in the 2024 US presidential race. However, there is no evidence that the Trump campaign was involved.

The investigation revealed a consistent pattern of inauthentic activity. All the accounts featured images of attractive, young women, some of which were stolen, while others seemed to be generated by artificial intelligence. The images were often manipulated to include Trump and MAGA (“Make America Great Again”) slogans.

The Foreign Malign Influence Center (FMIC) of the United States government has also noted instances of GenAI being utilized in foreign elections to craft convincing synthetic content, including audio and video. During India's recent elections, millions engaged with AI-generated ads that depicted Prime Minister Narendra Modi and other politicians, both living and deceased, discussing contentious issues.

Recently, the United States Department of Justice seized fake news websites mimicking media outlets like Fox News and The Washington Post. These counterfeit websites contained articles designed to influence a reader’s sentiment to favor Russian interests.

Figure 3. The US Justice Department has seized fake news websites mimicking media outlets like Fox News, containing fake articles promoting Russian interests

The damage potential of deepfakes

The rapid advancement of deepfake technology enables the creation of highly convincing manipulated images and videos. This opens new avenues for election tampering and the spread of false information, and can severely harm the reputations of influential individuals and even entire industries. Public figures are particularly vulnerable because of their extensive biometric exposure in the media, in the form of magazine photos, news interviews, and speeches.

Deepfakes rely heavily on the source material from which identities are impersonated, including facial features, voices, natural movements, and a person’s unique characteristics. This public exposure allows deepfake models to be trained more effectively, a trend we frequently observe with political figures.

Even with minimal technical skills, criminals and politically motivated actors can now manipulate images and videos to spread misleading information about candidates or political events. The combination of AI-generated deepfakes and the rapid dissemination capabilities of the internet can quickly expose millions to manipulated content. Their potential to cause harm includes damaging an individual's credibility, spreading disinformation, manipulating public opinion, and fueling social unrest and political polarization.

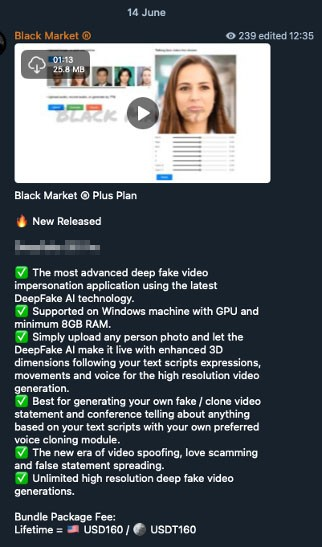

Figure 4. Cybercriminal underground advertisement for DeepFake 3D Pro

Just last month, social media platforms were awash with an AI-engineered video showing presidential candidate Kamala Harris making false statements in a manipulated campaign ad. The video was shared on Elon Musk's X account, and Musk defended it as "parody." A more recent video falsely alleged that Harris was involved in the sex industry during the 1980s. Although fact-checkers debunked these videos, not all users are aware that they are false.

Another instance reported by the FMIC involved probable actors from the People's Republic of China who posted multiple videos online ahead of the Taiwan presidential election. These videos featured AI-generated newscasters discussing supposed scandals about the then-president of Taiwan, using content from a book that may also have been created by GenAI.

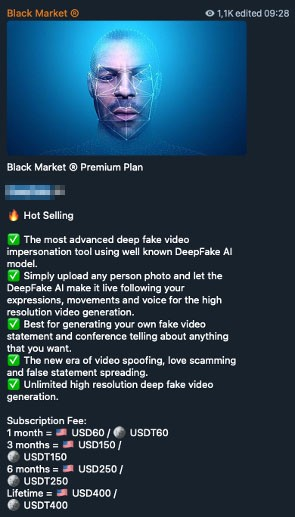

Figure 5. Cybercriminal underground advertisement for DeepFake AI

Incidents like these contribute to "reality apathy," in which people start doubting the veracity of much of the information circulated online, including videos. This is perhaps the most profound problem deepfakes bring to the table: their potential to destabilize the information ecosystem and obliterate the concept of a shared "reality." Once any video can be dismissed as fake, people gain an escape route to evade accountability for their actions.

The evolution of deepfake technology from a specialized field into widely accessible apps represents a growing threat to democracies worldwide. Tools such as Deepfake 3D Pro, Deepfake AI, SwapFace, and AvatarAI VideoCallSpoofer, increasingly available on criminal forums, illustrate this trend. Each has distinct capabilities, and none needs to produce perfect results, only results good enough to deceive. They can bypass verification systems, create compromising videos, or fake real-time video calls, amplifying the threat to election integrity.

Figure 6. Screenshot from the video demo of Swapface showing how the software works

How can we avoid being manipulated?

In an era where propaganda and fake news construct an alternate reality, our crucial defense against the manipulation of our opinions, and therefore our votes, is to sharpen our discernment. We must verify the truthfulness of the election-related narratives we engage with and stop election misinformation from spreading further.

As voters and information consumers, we're the first line of defense against election misinformation. Critical thinking means questioning the authenticity of news and watching for red flags such as sensationalized headlines, suspicious domains, poor grammar, altered images, missing timestamps, and unattributed data. We should venture beyond the headlines: cross-verify with other news outlets, scrutinize links and sources, research authors, validate images, and consult fact-checkers. We should also observe how significant news stories develop over time, which helps us gauge the reliability of their sources. By diversifying our news sources, focusing on reputable outlets, and thinking before sharing, we can help combat the spread of election-related fake news.
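One of the red flags above, suspicious domains, can be partly checked mechanically, since sites like the ones seized by the Justice Department work by imitating real outlets' web addresses. The Python sketch below is a hypothetical illustration, not a Trend Micro tool or an established detection method: it flags a link whose hostname closely resembles, but does not match, a domain on a reader-maintained allowlist. The allowlist, the 0.8 similarity threshold, and the function names are assumptions made for this example, the similarity score comes from the standard library's `difflib.SequenceMatcher`, and the handling of `www.` is naive (it ignores public-suffix rules).

```python
from difflib import SequenceMatcher
from typing import Optional
from urllib.parse import urlparse

# Hypothetical allowlist of outlets a reader already trusts (illustrative only).
KNOWN_OUTLETS = ["foxnews.com", "washingtonpost.com", "reuters.com", "apnews.com"]

# Assumed cut-off: a similarity score (0.0 to 1.0) at or above this counts as a lookalike.
SIMILARITY_THRESHOLD = 0.8


def hostname_of(url: str) -> str:
    """Return the lowercased hostname, dropping a leading 'www.' (no public-suffix logic)."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def similarity(a: str, b: str) -> float:
    """Share of matching characters between two strings, as scored by difflib."""
    return SequenceMatcher(None, a, b).ratio()


def imitated_outlet(url: str) -> Optional[str]:
    """Return the trusted outlet this URL appears to imitate, or None.

    None means the URL either belongs to a trusted outlet (or one of its
    subdomains) or does not closely resemble any of them.
    """
    host = hostname_of(url)
    if not host:
        return None
    # Genuine domains and their subdomains are not lookalikes.
    if any(host == d or host.endswith("." + d) for d in KNOWN_OUTLETS):
        return None
    # Compare against the closest trusted domain only.
    closest = max(KNOWN_OUTLETS, key=lambda d: similarity(host, d))
    return closest if similarity(host, closest) >= SIMILARITY_THRESHOLD else None


if __name__ == "__main__":
    for link in [
        "https://www.foxnews.com/politics",   # genuine outlet
        "https://fox-news.com/story",         # hypothetical lookalike
        "https://washingtonpost.pm/article",  # hypothetical lookalike
        "https://example.org/",               # unrelated site
    ]:
        print(link, "->", imitated_outlet(link))
```

A check like this only catches near-miss spellings; a convincing fake hosted on an unrelated domain passes silently, so it complements, rather than replaces, cross-verifying stories with other outlets and fact-checkers.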

Our vigilance shouldn't be limited to news consumption; it should extend to all channels we utilize for information gathering, including SMS, email, and productivity apps. Starting from trusted sites is a good practice, but it's also important to seek additional confirmation for any election-related information we read, keeping in mind that even trusted sites can inadvertently spread election misinformation.

Additionally, voters can leverage tools like the free Trend Micro Deepfake Inspector to guard against threat actors using AI face-swapping technology during live video calls. This technology can identify AI-altered content that may indicate a deepfake "face-swapping" disinformation attempt related to elections. Users can activate this tool when joining video calls, and it will alert them to any AI-generated content or potential deepfake scams.

In cybersecurity, the zero trust approach, based on the principle of trusting nothing and verifying everything, can also be applied to the information we consume, especially when it involves elections. In practice, this doesn't mean refusing to believe anything; it means verifying and validating facts before putting our trust in them. Ultimately, our best defense against the misuse of AI and deepfakes in elections is vigilance and skepticism, because fraudsters will always seek to exploit confusion and vulnerability.

Tall order? Sure, it is. But we owe it to ourselves and the people we love.

For a deeper dive into how technology can be abused to manipulate opinions, read these research papers.
