Ideas from Robert McArdle, Marco Balduzzi, Vincenzo Ciancaglini, Vladimir Kropotov, Fyodor Yarochkin, Rainer Vosseler, Ryan Flores, David Sancho, Fernando Mercês, Philippe Z Lin, Mayra Rosario, Feike Hacquebord, Roel Reyes

Cybercriminal “social engineering” is a tactic that, at its core, lies to a user by creating a false narrative that exploits the victim’s credulity, greed, curiosity, or any other very human characteristic. The end result is that the victim willingly hands the attacker something of value: private information, whether personal (e.g., name, email) or financial (e.g., credit card number, crypto wallet), or access to their own system by inadvertently installing malware or backdoors.

We can classify modern attacks into two very broad categories according to the target: they either attack the machine or they attack the user. “Attacking the machine” means exploiting vulnerabilities, an approach popularized by the seminal 1996 article “Smashing the Stack for Fun and Profit.” However, “attacking the human” (social engineering) has been, and still is, overwhelmingly more prevalent. All known attacks that do not rely on a vulnerability have a social engineering element, in which the attacker tries to convince the victim to do something that ends up harming them.

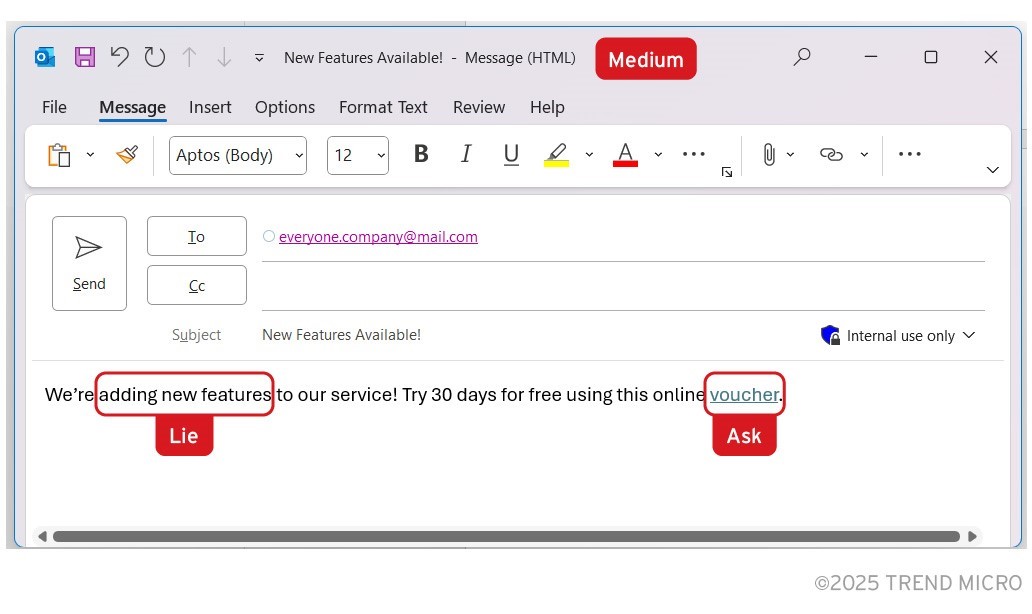

We can deconstruct any social engineering interaction and strip it down to the following elements:

- A “medium” to make the connection with the victim, which can be done via telephone, email, social network, or direct message, to name a few.

- A “lie,” where the attacker builds a falsehood to convince the victim to take action. The lie often has a built-in sense of urgency, such as a deadline.

- An “ask,” that is, the action to be taken by the victim, such as giving credentials, executing a malicious file, investing in a certain crypto scheme, or sending money.

Connect → Lie → Ask

Let us use a common example you are probably familiar with — the stereotypical email scam:

Figure 1. A social engineering attack’s medium, lie, and ask

As of 2024, criminals reach their victims through all manner of networking capabilities, and they use made-up stories as part of their social engineering tricks. Their objectives remain largely the same: getting the victim to disclose a password, install malware, or share personal information.

Over the years, we have seen a multitude of different plots in the social engineering space, and you would be forgiven for thinking that every idea has already been used. Yet attackers keep coming up with new social engineering tricks every year. In this piece, we explore social engineering refinements that attackers might use in the future to con users. By changing the medium, the lie, or the ask, attackers can easily come up with new and innovative ploys to fool their victims.

What new elements can we expect to see? What new changes to the old scheme can we foresee? How will new technologies affect any of these?

Changes in the Medium

As new technologies emerge, attackers gain more ways to reach their potential victims. These include AI tools, VR/AR headsets like the Apple Vision Pro, wearables like the Humane AI Pin and Ray-Ban Meta smart glasses, and any other new device users might adopt in the future.

Utilizing wearables as a medium: New devices enter the market every year, expanding the attack surface available to cybercriminals. Wearables are particularly interesting because they are always on and fully trusted by their user, so any ploy involving a wearable has a higher chance of being believed. An attacker could also gain access to the wearable device itself. These devices are often not designed to run security tools or even to authenticate regularly, so they frequently sit outside normal security controls.

Alternatively, the attacker could break into the user’s account and view their dashboard. In either scenario, the attacker could use this data to make the lure more convincing. For example, with a fitness device, the attacker could send the user an email saying, “Your number of steps this week has been unusually low; click here to access your dashboard,” or perhaps “Your

Figure 2. A potential scenario of wearables as medium for social engineering attacks

Chatbots as a medium: AI chatbots could also be used as a vehicle to reach the user. The idea of this attack is to feed false information to the chatbot in order to manipulate the user into taking action. Poisoning the chatbot’s data can be accomplished in several ways, including feeding it bad information, hijacking its training data, or injecting new instructions (prompt injection).

In a scenario involving smart assistants or knowledge base repositories, this could require a foothold on the victim’s computer. The most likely use case would be as part of a business email compromise (BEC) scheme: the victim is instructed through another medium to wire money and then uses the chatbot as a verification tool. The verification would pass because the chatbot’s data is tainted. Note that tainting a chatbot’s data is likely only possible on private chatbot installations, where the training or reference data is dynamic (i.e., based on a repository that can be changed) or accessible from the network. This method would likely not be viable against public commercial chatbots like OpenAI’s ChatGPT or Anthropic’s Claude.

As a refinement of this attack, a digital assistant could be socially engineered to perform malicious actions on behalf of the user. For example, it could fill out a survey and leak the user’s personal data, or display a malicious QR code for the user to scan with a mobile phone. An even more futuristic scenario would involve an attacker’s digital assistant socially engineering the target’s digital assistant.

New email-based attacks: A new way to use the classic email and instant message (IM) medium would be to deploy a bot powered by a large language model (LLM) to increase the effectiveness of a BEC attack. Given access to the message history (for example, through a compromised mailbox), the threat actor could use the LLM bot to compile all previous messages between the victim and the CEO. The bot could then continue a thread in this trusted channel as if it were the CEO, mimicking the CEO’s writing style to convince the victim to wire the money. Attackers already do this manually, and the potential for automating it with AI cannot be ignored.

Virtual reality (VR) and augmented reality (AR): In VR/AR environments, assuming objects or avatar skins are represented by a URL (much as non-fungible tokens, or NFTs, are), the attacker can craft the URL to be malicious to certain groups of people. When the item is viewed or interacted with (perhaps a box is opened or a hat is donned), the URL could trigger malicious actions. To be more surreptitious, the URL could behave differently depending on the visitor’s IP address or other conditions, such as only affecting users from Germany. Similarly, QR codes in these AR environments open the door for attackers to spread bad URLs whenever the codes are read automatically. For example, a cute dragon standing on a QR code might prompt users to scan it, triggering malicious activity.

More and better QR code attacks: QR code attacks are a good example even outside VR. The kill chain is split across devices (the code is displayed in one place and scanned with another device, typically a phone), so its steps are barely correlated. In the physical world, this could be a sticker with a malicious QR code placed over the original, legitimate one. In the digital realm, attackers can add malicious QR codes to emails or web pages. While phishing emails with malicious QR codes are already a well-known attack, the possibilities are wider. If the attacker can modify emails in transit or on the server, or tamper with a web page, a malicious QR code could be inserted into otherwise legitimate content, either replacing a legitimate code or added from scratch.

Improving the Lies

The main innovation driving socially engineered lies is AI. The specific lie in a social engineering story varies by season, country, and demographic group, among other factors, and the scalability and flexibility that AI provides allow attackers to adapt it very quickly. Generative AI (GenAI) excels at image, audio, and video generation. For text, it excels both at creating believable content and at quickly processing large amounts of text. This new scalability opens up many new developments in the “lie” aspect of social engineering.

A new theme attackers can use to craft lies is AI technology itself. For example, lies about ChatGPT or VR can be effective because of the interest these topics generate. Attackers can also create fake AI-related tools that are actually malware. Graphic designers, for instance, are often curious about creating deepfake images and videos, so a tool that claims to do this would probably be downloaded and run. Similarly, incorporating deepfake images and videos into existing successful scams can make them more believable. This strategy is clearly on the rise in the current threat landscape. We believe that deepfakes have the potential to be highly disruptive in social engineering scams and that attackers will be using them extensively in the near future.

Figure 3. How call and voice scams can be enhanced by deepfakes

Another real possibility is an AI-based dynamic lie system. Such a system would automatically contact and interact with the user to earn their trust before the ask. We already hypothesized such a system in our 2021 paper, “Uses and Abuses of AI,” as a way for 419 scammers to earn the victim’s trust. Also known as advance-fee scams, 419 scams usually promise the victim a significant share of a large sum of money in return for a small upfront payment, which the fraudster claims is needed to obtain the large sum. The idea is to automate the first wave of scam emails to discern whether the people emailing back are likely to take the bait. Within the first two or three emails, once a user is identified as prone to being scammed, control would be passed to a human scammer, who would continue the conversation to fully gain the victim’s trust.

Another possibility we thought of is “the predictable lie.” This involves dividing the email database to be spammed into chunks and telling each chunk a slightly different lie, so that the users who happen to receive the version that turns out to be accurate increasingly trust the sender. For example, if the scammer claims to be able to predict the result of an event, half of the database would receive a Yes and the other half a No. The half that got the right prediction can then be sent a new Yes/No prediction for the next event. Those who received two correct predictions in a row would be much more inclined to trust the ask: “And now that you know that we can *really* predict Bitcoin’s trend, invest with us.” This can be used with themes related to betting, stock markets, and cryptocurrency investments.

This is a form of A/B testing. It reduces the number of targets in the email database but increases their quality: each round roughly halves the pool, and everyone who remains has seen only correct predictions. In effect, the technique distills likely victims from a large email database.
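The arithmetic behind the “predictable lie” is simple: after k rounds, roughly N/2^k of the original N recipients remain, and every one of them has seen k correct predictions even though the sender predicted nothing. The Python sketch below simulates this under simplifying assumptions (an even split each round, every recipient reads every message, nobody drops out); the function name `predictable_lie`, the `outcomes` list, and the recipient names are hypothetical illustrations, not part of any real campaign or tool.

```python
def predictable_lie(recipients: list[str], outcomes: list[bool]) -> tuple[list[str], list[int]]:
    """Hypothetical simulation of the 'predictable lie' filter.

    Each round, the remaining recipients are split in half: the first half is
    told "Yes" and the second half "No". Only the half whose call matched the
    real outcome is kept. Returns the final survivors and the group size after
    each round (starting with the initial size).
    """
    remaining = list(recipients)
    sizes = [len(remaining)]
    for outcome in outcomes:
        half = len(remaining) // 2
        told_yes, told_no = remaining[:half], remaining[half:]
        # Whatever actually happens, one group received the "right" prediction.
        remaining = told_yes if outcome else told_no
        sizes.append(len(remaining))
    return remaining, sizes


# Assumed example: 1,000 recipients and three Yes/No events.
targets = [f"user{i}" for i in range(1000)]
survivors, sizes = predictable_lie(targets, [True, False, True])
print(sizes)  # [1000, 500, 250, 125]
```

With 1,000 recipients and three events, 125 people end up having seen three correct calls in a row. The sender’s apparent accuracy is purely an artifact of discarding everyone who received a wrong call, which is why a streak of correct predictions from an unsolicited source is no evidence of real predictive ability.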

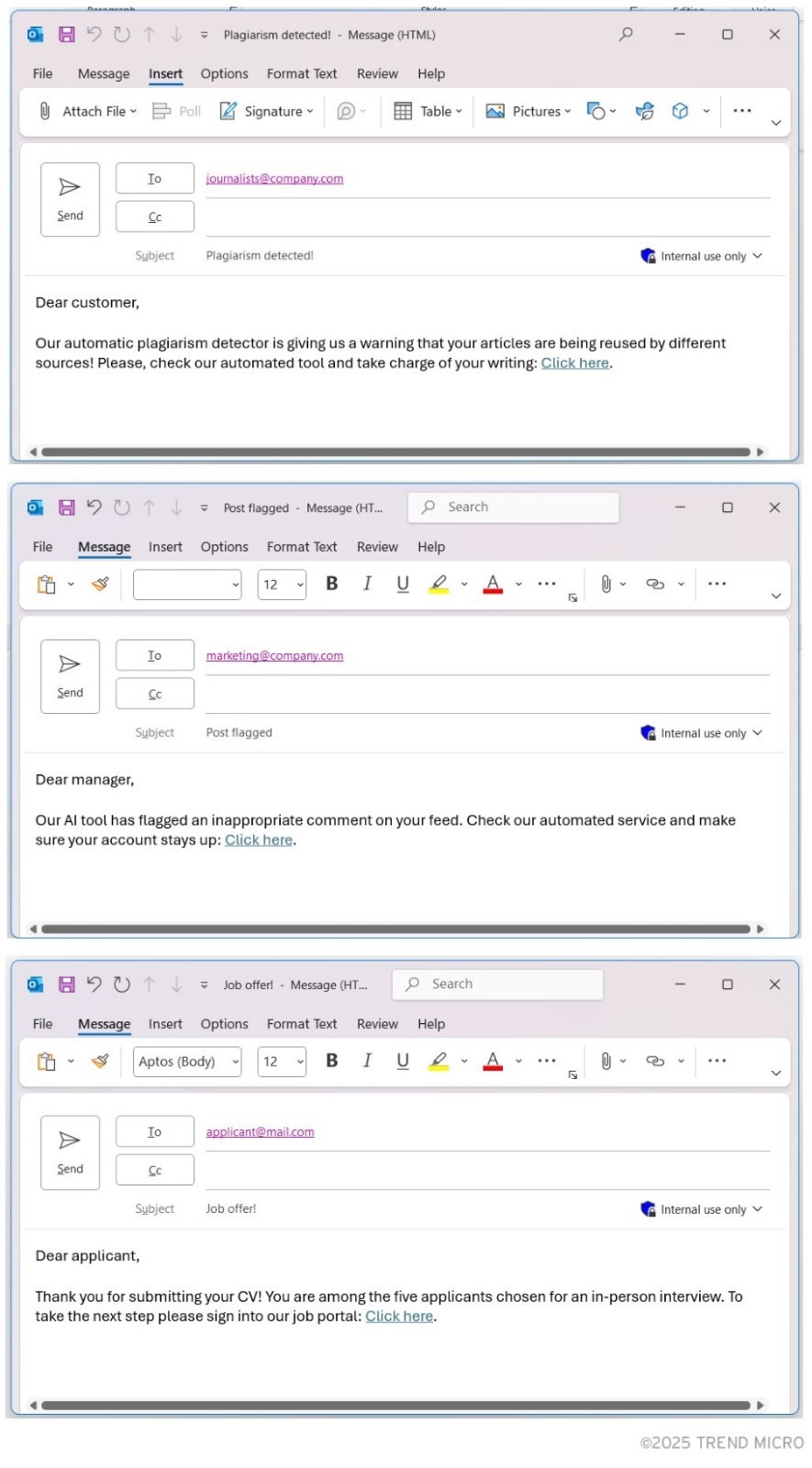

Another way to improve the lies in a scam is to target specific groups of people with lies tailored to each group. For example, an attacker could collect an email database of journalists, then send them a lie such as the following:

“Our automatic plagiarism detector is giving us a warning that your articles are being re-used many times. Please check our automated tool and take charge of your writing. Click here.”

The idea is to enrich the email database to tailor social engineering attacks to potential victims.

The attackers could have a catalog of AI chatbot prompts designed to craft highly effective social engineering messages for each target type, with a specific key theme, such as plagiarism.

The attackers can also use AI to enrich current databases and better split them into groups that can be spammed with high-believability schemes. For example, attackers could take a journalist email database, split it by country and media type (e.g., newspaper, TV), then generate specific scam messages for each group.

A possible refinement of this idea involves the attacker collecting external information to enrich the data and target the victims with highly credible lies. This is similar to the earlier scenario, but the enrichment would come from searching online for more information on each targeted person, a task that can also be automated by an AI agent. Continuing with the journalist example, enrichment of the email database would be done by Googling each name, email, and associated newspaper, then tagging individuals with their areas of interest (e.g., technology, fashion, politics) based on what they have written or discussed online. The AI-generated emails would use these tags to write personalized messages to convince the targets to click on the malicious link or take the chosen action.

Figure 4. Examples of emails tailored for specific targets

Conclusions

Currently, there is significant room for attackers to enhance existing social engineering tricks and develop new ones as connectivity options and technologies multiply. The possibilities for using new technologies as vehicles for social engineering are growing as these technologies become more publicly available and affordable.

Additionally, the themes that attackers use for social engineering can shift to include AI as a phenomenon, incorporate AI-enabled deepfakes, and even design AI-based systems to automate social engineering and make it more scalable. Further refinements of social engineering tricks are certainly possible with the current state of GenAI.

Most of the innovations we foresee in social engineering come from the medium and the lie. Expanding the medium refers to attackers coming up with new ways to reach the victim. Improving the lies refers to the attackers perfecting the stories told to fool the victim. The ask is the least likely to change, as the targets for attackers remain generally consistent: user logins and passwords, user data, and financial data, among others.

Some of these factors are driven by technology, especially the medium, while others stem from innovative ideas that add believability to the whole scam. As technology advances, we expect that attackers will be able to reach their victims in more diverse and unexpected ways, including through VR, AR, wearable devices, AI chatbots, and more.

We also expect attackers to innovate in the way they tell lies to fool their victims. We gave specific examples, such as the “predictable lie” and the targeting of groups. AI can help improve the lies by enriching information about the victims while also providing additional tools to the attacker, such as deepfakes or dynamic AI-based autoresponders. Because AI makes new schemes scalable, cybercriminals now have entirely new attacks available, which would, in turn, make the business of social engineering more efficient.

These strategies may or may not become a reality, but we can’t ignore that the current state of technology makes them all possible. We live in very exciting times in terms of new technologies that make our lives easier, but these very technologies can also be subverted to help attackers be more effective. Foreseeing these advances in social engineering can help us stay vigilant before attackers start using them.

Enterprises should take proactive measures to stay ahead of new tactics and techniques used by cybercriminals. A comprehensive security strategy is necessary to protect the sensitive information and assets most likely to be targeted by these social engineering scams.

Trend Vision One™

Trend Vision One™ is a cybersecurity platform that simplifies security and helps enterprises detect and stop threats faster by consolidating multiple security capabilities, enabling greater command of the enterprise’s attack surface, and providing complete visibility into its cyber risk posture. The cloud-based platform leverages AI and threat intelligence from 250 million sensors and 16 threat research centers around the globe to provide comprehensive risk insights, earlier threat detection, and automated risk and threat response options in a single solution.
