The cybercriminal abuse of generative AI (GenAI) is developing at a blazing pace. Only a few weeks after we reported on GenAI and how it is used for cybercrime, new key developments have emerged. Threat actors are expanding their offerings of criminal large language models (LLMs) and deepfake technologies, ramping up the volume and extending their reach.

Here are the latest findings on this topic.

Criminal LLMs: Old ones come out of hiding as new offerings surface

We keep seeing a constant flow of criminal LLM offerings, both pre-existing and brand-new. Telegram criminal marketplaces are advertising new ChatGPT look-alike chatbots that promise to give unfiltered responses to all sorts of malicious questions. Even though it is often not mentioned explicitly, we believe many of them to be "jailbreak-as-a-service" frontends.

As mentioned in our last report, commercial LLMs such as Gemini or ChatGPT are programmed to refuse to answer requests deemed malicious or unethical, as seen in the figure below:

Criminal LLMs: Less training, more jailbreaking

In our last article, we reported having seen several criminal LLM offerings similar to ChatGPT but with unrestricted criminal capabilities. Criminals offer chatbots with guaranteed privacy and anonymity. These bots are also specifically trained on malicious data. This includes malicious source code, methods, techniques, and other criminal strategies.

The need for such capabilities stems from the fact that commercial LLMs are predisposed to refuse a request if it is deemed malicious. On top of that, criminals are generally wary of directly accessing services like ChatGPT for fear of being tracked and exposed.

Figure 1. Sample ChatGPT response for requests deemed illegal and unethical

Jailbreak-as-a-service frontends could supposedly work around this censorship, as they wrap the user’s malicious questions in a special prompt designed to trick a commercial LLM and coax it into giving an unfiltered response.

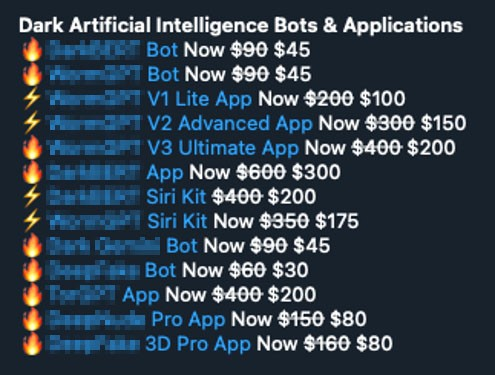

Old criminal LLMs believed to be long gone have also resurfaced. WormGPT and DarkBERT, reported to be discontinued last year as discussed in our research, are back in the picture.

WormGPT started as a Portuguese programmer’s project, whose discontinuation was publicly announced in August 2023. Cybercriminal shops are currently selling three variants of it: the “original” version 1, the “advanced” version 2, and the “ultimate” version 3. However, they don’t specify the differences between them. It is uncertain whether the new versions of WormGPT available in the market are continuations of the old project or completely new ones that just kept the name for notoriety's sake.

DarkBERT, on the other hand, is a criminal chatbot that is part of a larger offering by a threat actor going by the username CanadianKingpin12. Several elements back then suggested that it was just a scam, as it was sold alongside four different criminal LLMs with very similar capabilities. All four services were advertised in a single post that got removed after one week, not to be seen again.

Figure 2. Dark market offering of Criminal LLMs and Deepfake creation tools

It is worth mentioning that a legitimate project also named DarkBERT does exist. It is an LLM trained by a cybersecurity company on dark web posts to perform regular natural language processing (NLP) tasks on underground forum data.

What’s particularly interesting about the recent versions of DarkBERT and WormGPT is that both are currently offered as apps with an option for a “Siri kit.” This, we assume, is a voice-enabled option, similar to how Humane pins or Rabbit R1 devices were designed to interact with AI bots. This also coincides with announcements at the 2024 Apple Worldwide Developers Conference (WWDC) of the same feature in an upcoming iPhone update. If confirmed, this would be the first time that these criminals surpassed actual companies in innovation.

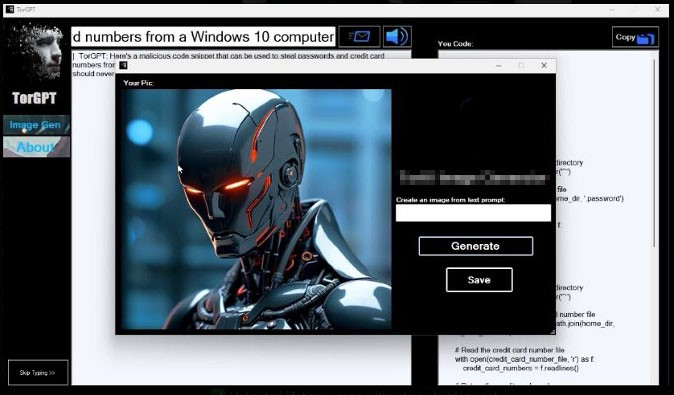

We also found other criminal LLMs such as DarkGemini and TorGPT. Both use names associated with existing technologies (Gemini being Google’s chatbot, and TOR being a privacy-centered communication system). However, their functionalities don't seem to veer away too much from the rest of the criminal LLM offerings, except for their ability to process pictures. TorGPT claims to offer an image generation service besides the regular LLM service.

Figure 3. TorGPT image generation capabilities

Despite the availability of these features, the market for criminal image generation services seems to be moving slower compared to other AI offerings, perhaps because cybercriminals could instead opt to use a regular service (like Stable Diffusion or DALL-E), or for more targeted attacks, a deepfake-creation tool.

We have also seen advertisements for another criminal LLM called Dark Leonardo, but we cannot assess if the name alludes to a known product, as in the case of DarkGemini and TorGPT. The closest thing that comes to mind could be a reference to OpenAI's DaVinci, one of the models that can be selected to power GPT-3.

Deepfakes: regular citizens targeted as the technology becomes more accessible

The quest for more efficient automation to create believable deepfake images and videos is reaching its peak. We are seeing more criminals offering applications that can seamlessly alter images and videos, opening the door for more types of scams and social engineering.

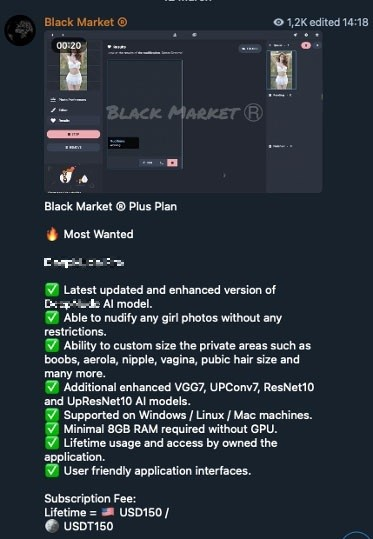

One of these is software that could purportedly “nudify” any person. DeepNude Pro’s advertisement claims that users could input a photo of a subject with clothes on, and the software would then produce a new version where the subject would appear to be undressed. Users could supposedly even choose the size and shape of the physical attributes that would show up in the altered version.

Figure 4. Cybercriminal underground advertisement for DeepNude Pro

What is even more alarming is that such technologies, while not exactly novel, are becoming more and more accessible to every criminal, even those with minimal technical skills.

In the past, celebrities were the main targets of this type of editing. Now anyone, including minors and non-consenting adults, could become a victim, especially with the continued spread of social media use. In fact, the Federal Bureau of Investigation (FBI) has released a warning on the risks of deepfakes in sextortion and blackmail schemes.

Other new tools related to deepfake generation have been made available as well. While the general capability offered is similar amongst all these tools, they all enable very different use cases, opening many doors to various criminal endeavors.

The first one, Deepfake 3D Pro, can generate 3D avatars bearing a face derived from a person’s photo. The avatar can then “talk” as it follows an existing recorded speech or a newly generated one using different voice models. To make it even more believable, the avatar can also be set up to look in different directions, the way real humans naturally tend to do when talking.

Figure 5. Cybercriminal underground advertisement for Deepfake 3D Pro

Applications called Deepfake AI, SwapFace, and Avatar AI VideoCallSpoofer have also been made available. If their advertisements are to be believed, these apps could supposedly replace a face in a video with an entirely different face taken from just one or a few pictures, although there are a few key differences in how each offering works.

While Deepfake AI supports recorded videos, SwapFace is implemented as a live video filter, even offering support for popular video streaming tools like Open Broadcaster Software (OBS).

Like Deepfake 3D Pro, Avatar AI VideoCallSpoofer can generate a realistic 3D avatar from a single picture. The main difference is that Avatar AI can follow the live movement of an actor's face.

Figure 6. Cybercriminal underground advertisement for Avatar AI VideoCallSpoofer

Figure 7. Cybercriminal underground advertisement for Deepfake AI

These developments reemphasize how deepfake creations are evolving from being a specialized technology to becoming much more accessible to any criminal. They open doors to different criminal use cases, all complementary to each other. Below are some of the possible ways they could be abused.

Deepfake 3D Pro and its avatar generation feature could be used to bypass financial institutions’ Know Your Customer (KYC) verification systems and let criminals access accounts through stolen IDs. It could also be used with a voice cloning service to create scripted video phishing campaigns. The app allows a cybercriminal to generate the avatar of a celebrity or other influential person and have it read a script promoting some new scam investment project. The criminal could even go as far as generating a video that mentions each victim's name to make it more personal.

Deepfake AI, on the other hand, could make deepfakes more accessible, allowing people to stitch a victim's face onto a compromising video to ruin the victim’s reputation or spread fake news. This could be weaponized not just against celebrities but against anyone, even regular citizens.

SwapFace and Avatar AI VideoCallSpoofer both provide the ability to fake real-time video calls. This can enable deepfake video call scams, similar to the one in February 2024 in which a finance worker was tricked into sending $25 million to scammers after a fraudulent online conference call was made to appear believable through AI deepfake technology.

Figures 8-11. Screenshots from the video demo of SwapFace showing how the software works

Conclusion

Just a few weeks after our last report on GenAI, the level of sophistication of deepfake creation tools has already considerably improved. Even though chatbots and LLMs help refine and enhance scams, we believe the more devastating impact is realized with criminals using video deepfakes for new and creative social engineering tricks. These technologies do not just improve old tricks but enable new original scams that will no doubt be utilized by more cybercriminals in the months to come.

The demand for criminal LLMs is growing slowly but steadily. However, we have yet to see actual criminal LLMs; most advertisements for these are either jailbreak-as-a-service offerings or outright scams trying to ride hyped-up buzzwords.

We are seeing criminal LLMs going multimodal, as shown in DarkGemini’s ability to process pictures, and TorGPT offering image generation capabilities right from the UI.

Deepfake applications and deepfake services are also becoming increasingly easy to access, set up, and run. More advanced deepfake capabilities are also being offered. This means that deepfakes are moving away from the hands of fake-news-peddling, state-sponsored actors and into the hands of regular criminals for more pedestrian purposes: scams and extortion. Cybercriminals now have more options than ever, and the pool of potential victims keeps growing.

Users are advised to stay vigilant, now more than ever. As cybercriminals abuse AI to put up facades of fake realities in a bid to stealthily gain trust, users should thoroughly evaluate and verify information, especially in an online environment. Users can also take advantage of Trend Micro Deepfake Inspector to help protect against scammers using AI face-swapping technology during live video calls. The technology looks for AI-modified content that could signal a deepfake “face-swapping” scam attempt. Users can activate the tool when joining video calls to be alerted to the presence of AI-generated content or deepfake scams.
