Silent Sabotage: Weaponizing AI Models in Exposed Containers

How can misconfigurations help threat actors abuse AI to launch hard-to-detect attacks with massive impact? We reveal how AI models stored in exposed container registries could be tampered with, and how organizations can protect their systems.

By Alfredo Oliveira and David Fiser

Key Takeaways

- Attackers could gain unauthorized access and manipulate AI systems by abusing exposed container registries. This would lead to large-scale issues that disrupt day-to-day life.

- We found over 8,000 exposed container registries. Roughly 70% of them had overly permissive access controls, such as push (write) permission, which would allow attackers to upload tampered images.

- In these registries, we found 1,453 AI models. Open Neural Network Exchange (ONNX) accounted for almost half of them. Our analysis details how their structure and vulnerable points could be exploited.

- We simulated an attack to demonstrate the actions a threat actor might take in the event of a compromise so organizations can spot the signs of infiltration.

Imagine driving home after a long day. You are pulling into the driveway when suddenly, your phone buzzes with a notification: a traffic violation alert from the AI-powered smart city system. The message states that you were caught red-handed using your phone while driving. Consequently, you will face a stiff penalty.

The catch? The whole time you were driving, your phone was securely stored in your bag—untouched.

What’s even more troubling is that across the city, hordes of drivers receive similar notifications. Complaints go viral on social media as news outlets report an unprecedented surge in traffic violations. The city's ticketing system crashes and the courts brace for a wave of appeals.

Lurking in the shadows is a threat actor who infiltrated an exposed container registry and tampered with an AI model. Compromised, the system now misinterprets its observations. It now sees a green light as red, a valid license plate as expired, and a driver with hands on the steering wheel as a person talking on the phone. “Violations” are automatically detected and tickets are wrongfully issued, causing mayhem.

This scenario could actually happen in real life, and the various potential consequences range from mildly disruptive to downright disastrous.

Organizations must fortify their defenses against these threats. Our research aims to provide valuable insights to security analysts, AI developers, and other entities interested in securing container registries and AI systems.

The Smart City Incident: A Wake-Up Call

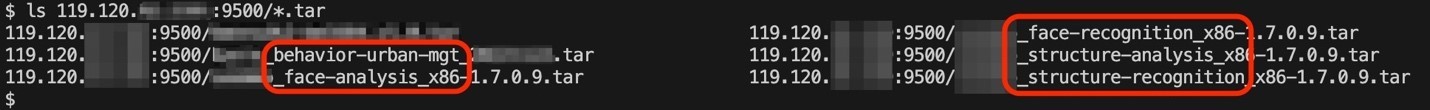

We probed into several organizations providing AI-powered smart city solutions. These companies offer AI surveillance systems that monitor various aspects of urban life, including traffic violations, facial recognition, and crowd behavior analysis. (For privacy reasons, the companies' actual names are withheld here; we refer to them collectively by the pseudonym "Smart City".)

In the Smart City incident, we uncovered several vulnerabilities that could be exploited to tamper with AI models. The common thread: exposed container registries.

We found 8,817 container registries on the internet that are supposedly private yet are readily accessible to anyone because their authentication mechanisms are not enabled. Of these, 6,259 (roughly 70%) had push (write) permission, which attackers could exploit by uploading tampered images back to the registry.

To confirm this, we analyzed how the registries responded to different HTTP request methods. Supporting this finding is a related study in which an independent researcher found over 4,000 exposed registries and, as of writing, was able to successfully push (harmless) images into most of them.
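As a rough illustration of what such a response-based check looks like, the sketch below covers only the authentication part, for use against a registry you operate yourself. It relies on the Docker Registry HTTP API V2 convention that `GET /v2/` answers `200` when anonymous access is allowed and `401` with a `WWW-Authenticate` header when credentials are required. The function names are hypothetical, and this is not the scanning method used in the research, which the article does not describe in detail.

```python
from urllib import error, request


def classify_registry_response(status: int, headers: dict) -> str:
    """Interpret the answer to GET /v2/ on a Registry HTTP API V2 endpoint."""
    if status == 200:
        return "open: no authentication required"
    has_challenge = any(k.lower() == "www-authenticate" for k in headers)
    if status == 401 and has_challenge:
        return "protected: authentication required"
    return f"inconclusive: HTTP {status}"


def check_own_registry(base_url: str, timeout: float = 5.0) -> str:
    """Query the base endpoint of a registry you are authorized to test."""
    req = request.Request(base_url.rstrip("/") + "/v2/", method="GET")
    try:
        with request.urlopen(req, timeout=timeout) as resp:
            return classify_registry_response(resp.status, dict(resp.headers))
    except error.HTTPError as exc:
        # urllib raises for 4xx/5xx; the status and headers are on the exception.
        return classify_registry_response(exc.code, dict(exc.headers))
```

A result starting with `open` on a registry that is meant to be private is the misconfiguration described above.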

The unprotected registries we discovered include Smart City-owned ones, which contained Docker images housing AI models and services.

Figure 1. An example of “Smart City” company images focused on face recognition and "urban behavior management"

Figure 2. Traffic inspection model configuration parameters

Figure 3. Several models extracted from exposed container images

Thankfully, the vulnerabilities were spotted before threat actors seized the opportunity to exploit them. Regardless, the incident serves as a stark warning about AI-driven system vulnerabilities.

From Container to AI: ONNX Model Structure and its Vulnerable Points

In our previous research, which highlighted the dangers of exposed container registries, the scope was limited to private registries deployed on Cloud Service Providers (CSPs).

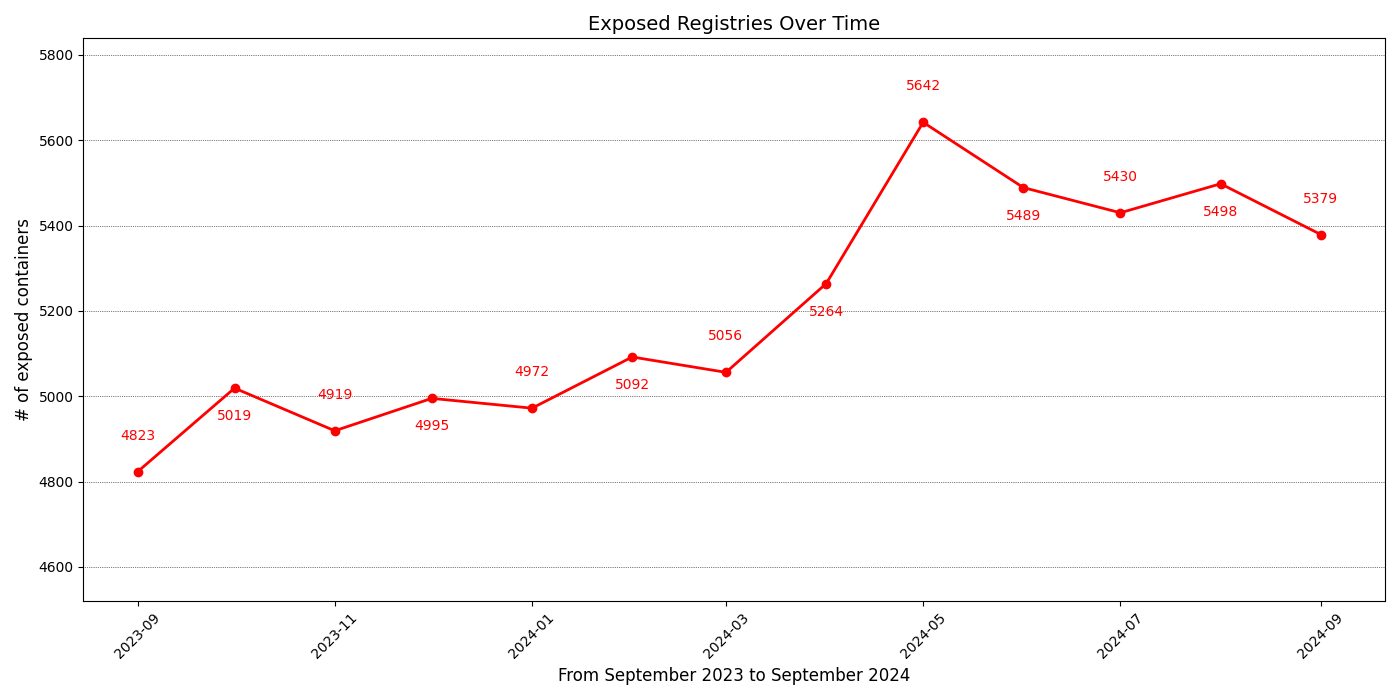

This time, we expanded the scope of our scan as we thoroughly analyzed 3,579 container registries. Besides the rising number of exposed registries, another thing caught our eye: the surging popularity of AI-related images. From zero AI images in 2023, there were 480 found this year.

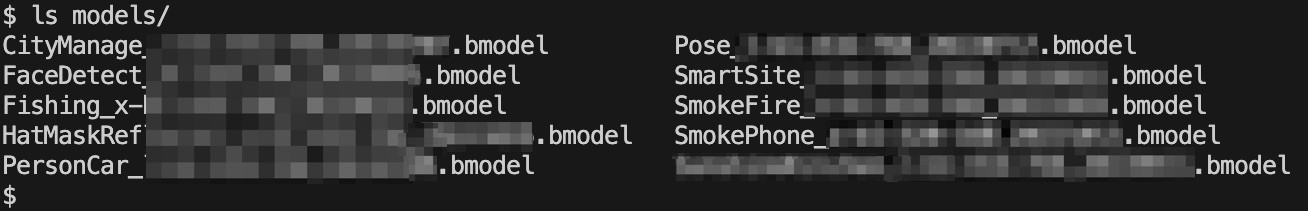

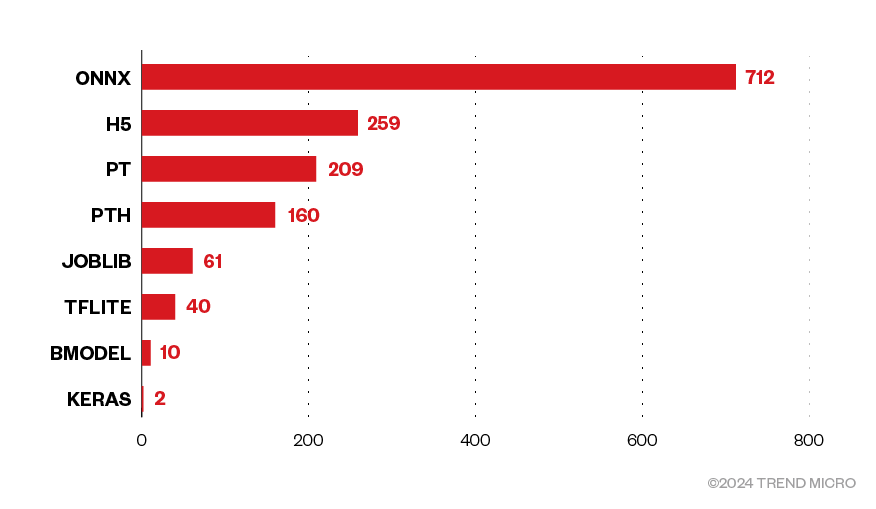

Inside the images, we found 1,453 unique AI models. Open Neural Network Exchange (ONNX) accounted for almost half of them, and this prevalence prompted a closer examination of its structure.

Figure 4. Distribution of the 1,453 unique models inside the exposed container images, per type

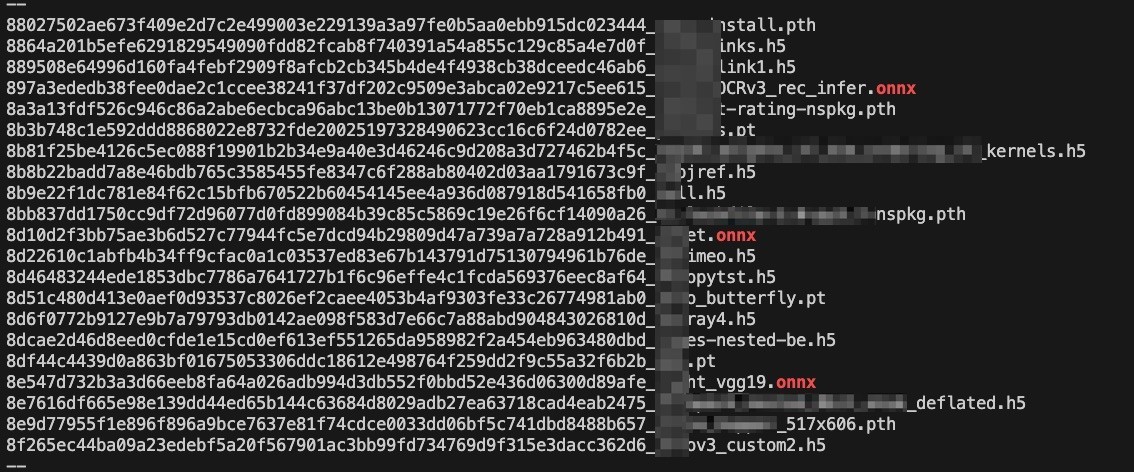

Figure 5. Several different model types leaked with the container images

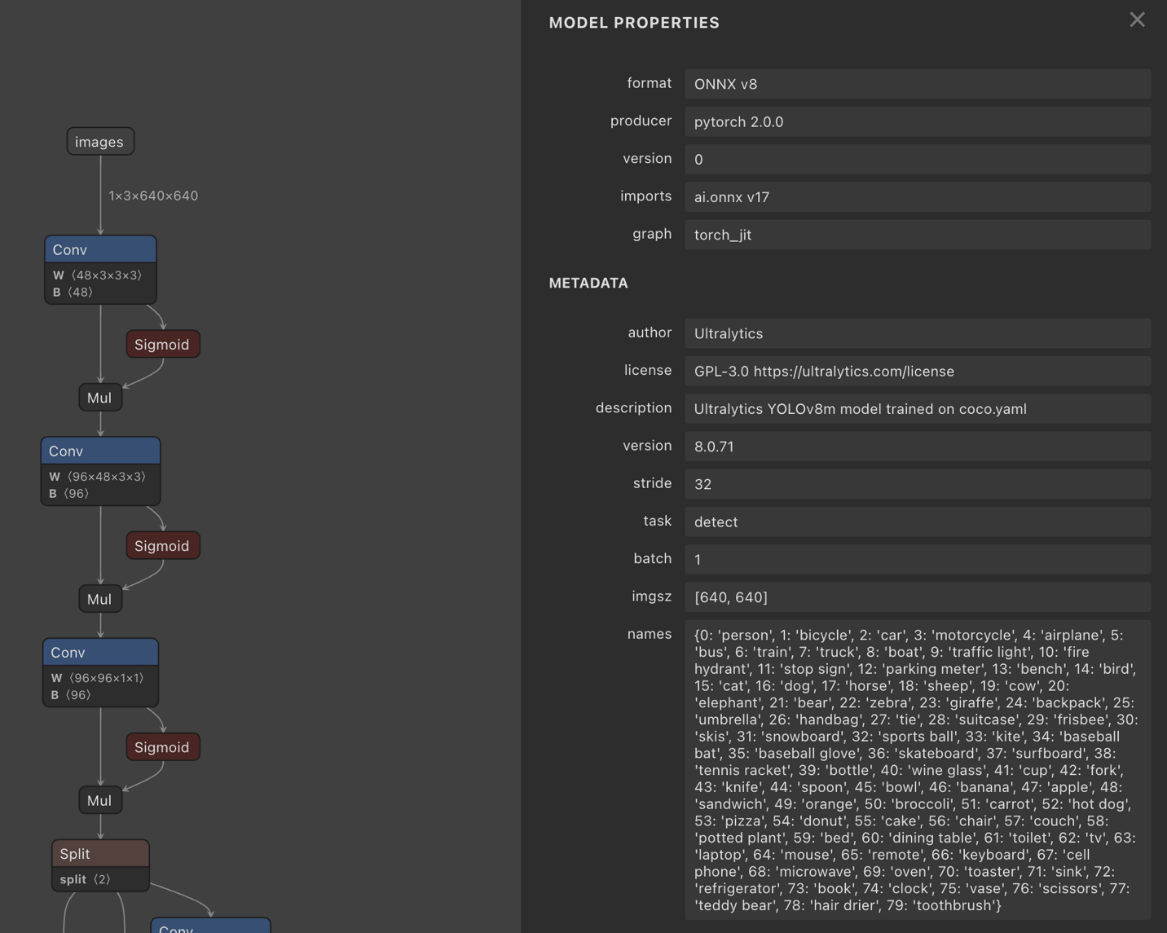

ONNX models are highly versatile, serving as a standard format for AI model interoperability across different frameworks. However, their complexity also introduces security risks. Understanding the AI model structure can help identify potential avenues for exploitation.

The ONNX model structure includes the following components:

- Model Metadata: Contains information about the model, such as its name, version, and a brief description

- Graph Definition: Defines the neural network's architecture, including nodes, edges, and their relationships

- Initializer Data: Holds the pre-trained weights and biases, which are key parameters that an attacker would likely target

- Input/Output Specifications: Defines the inputs the model expects and the outputs it produces

ONNX models are protobuf (protocol buffer) messages. Protobuf was designed for efficient data serialization and transfer rather than specifically for AI models, and it does not inherently provide the security mechanisms needed to protect a model against tampering.

Figure 6. Structure of an ONNX model, showing its specifications

The most significant security issue we discovered in ONNX models is the absence of integrity and authenticity checks (similar to a digital signature) that originate from a trusted source and ensure the model hasn't been tampered with. This lack of verification allows a threat actor to modify the ONNX file, including its cleartext-stored weights and editable neural network architecture, affecting the model's behavior while evading detection.
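To make the "plain protobuf message" point concrete, here is a minimal sketch that walks the top-level fields of a serialized model using only the protobuf wire format. The field numbers in `MODEL_FIELDS` are taken from the public `onnx.proto` schema as best recalled and should be checked against the schema version in use; the helper names are hypothetical. In practice the `onnx` Python package would be the usual way to load a model.

```python
# Top-level ModelProto field numbers (assumption: verify against onnx.proto).
MODEL_FIELDS = {
    1: "ir_version", 2: "producer_name", 3: "producer_version",
    4: "domain", 5: "model_version", 6: "doc_string",
    7: "graph", 8: "opset_import", 14: "metadata_props",
}


def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode one base-128 varint; return (value, next position)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def top_level_fields(buf: bytes) -> list[tuple[str, int]]:
    """Return (field name, payload size in bytes) for each top-level field."""
    fields, pos = [], 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        number, wire_type = key >> 3, key & 0x7
        start = pos
        if wire_type == 0:        # varint
            _, pos = read_varint(buf, pos)
        elif wire_type == 1:      # fixed 64-bit
            pos += 8
        elif wire_type == 2:      # length-delimited (strings, nested messages)
            length, pos = read_varint(buf, pos)
            start = pos
            pos += length
        elif wire_type == 5:      # fixed 32-bit
            pos += 4
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        if pos > len(buf):
            raise ValueError("truncated message")
        fields.append((MODEL_FIELDS.get(number, f"field_{number}"), pos - start))
    return fields
```

Running this over a model file lists metadata, the graph, and opset entries. Nothing in that layout is a signature or checksum, which is the gap described above: any integrity guarantee has to come from outside the file.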

Techniques for Model Manipulation

Attackers have multiple methods for manipulating AI models. Here are techniques in relation to key components such as weights, biases, layers, and input/output configurations.

Weight Manipulation

Weights are key parameters in neural networks that determine how inputs are transformed as they pass through the model’s layers. They define the importance of each feature in the data, and their values are adjusted during training to minimize error.

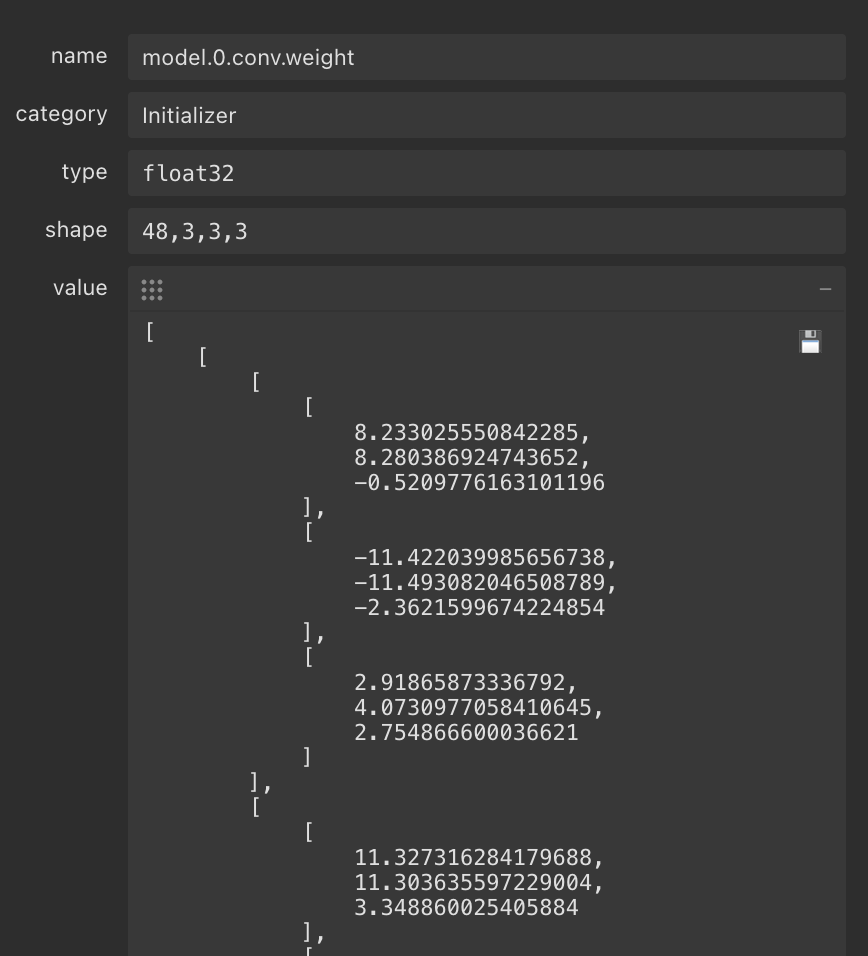

An attacker could subtly alter these weights in an ONNX model file, changing how the model interprets input data. This could lead to unexpected behavior, such as misclassifications or biased decisions. Because weight changes can be small, they may go unnoticed unless the model is rigorously tested.

For instance, in a face recognition model, even a slight weight manipulation could cause it to fail at recognizing individuals under certain conditions.

Figure 7. Weight values from an ONNX file that could be manipulated to tamper with the model
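The effect of a small weight change can be shown with a toy linear scorer. This is a sketch with made-up numbers, not a real face recognition model: the weights, bias, and inputs are all assumptions chosen so that one weight nudged from 0.30 to 0.22 leaves a typical input unaffected but flips a borderline one.

```python
def predict(weights, bias, features):
    """Toy linear classifier: weighted sum of features plus bias."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return "match" if score >= 0 else "no match"


bias = -0.25
original = [0.80, -0.50, 0.30]
tampered = [0.80, -0.50, 0.22]   # a single weight lowered by 0.08

typical = [1.0, 0.2, 0.5]        # comfortably above the decision boundary
edge = [0.5, 0.4, 0.2]           # close to the decision boundary

for name, sample in (("typical", typical), ("edge", edge)):
    print(name, predict(original, bias, sample), predict(tampered, bias, sample))
```

A test set made mostly of "typical" inputs would report the same accuracy for both weight sets, which is why such changes can go unnoticed without targeted testing.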

Architecture Modification

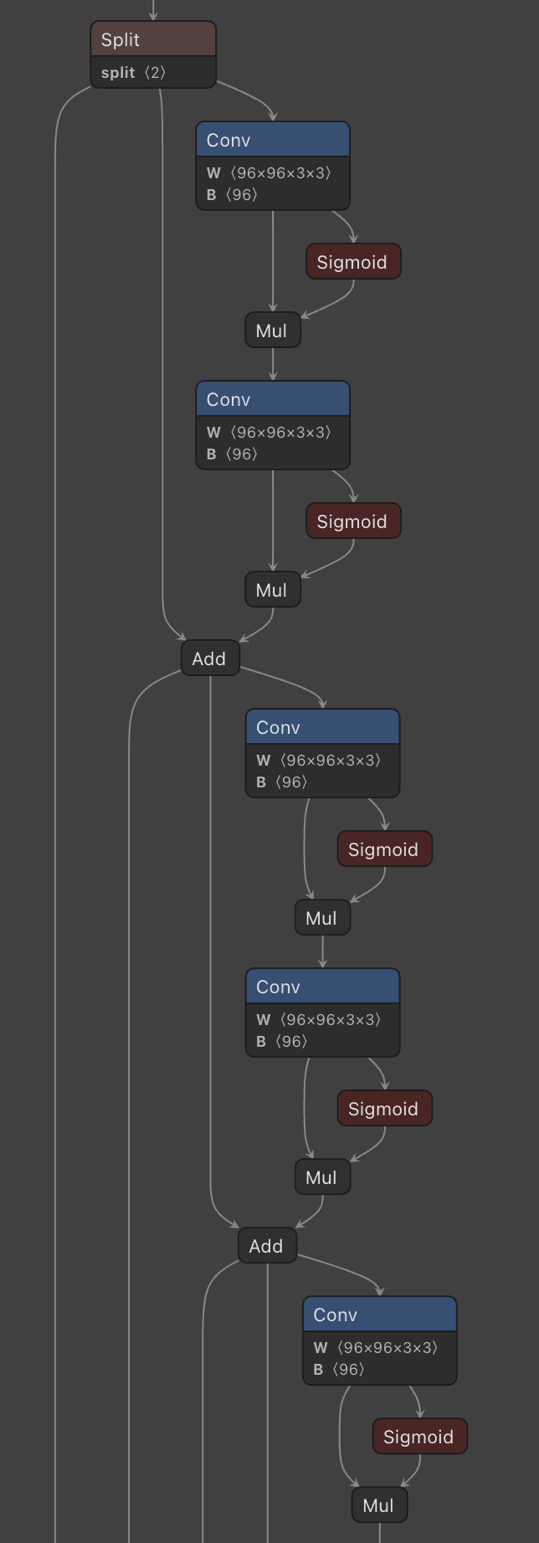

The architecture of a neural network defines its structure. It includes layers of neurons through which data flows, each layer performing computations on the data before passing it to the next.

Threat actors may add, remove, or reorder layers within the network, fundamentally altering the model's functionality. Adding layers can increase the model's complexity or introduce malicious behavior, while removing layers could degrade its performance. For example, an attacker might add a hidden layer designed to set off specific behaviors when certain inputs are detected, effectively turning the model into a backdoor.

In an ONNX model, layers (also known as nodes) are represented in the Graph Definition section, which maps the flow of data between different nodes, starting from inputs and ending with outputs. Modifying the nodes in this section can change how the model processes information.

Figure 8. Example of layer connections in an ONNX file. An attacker could add or remove layers to change the flow of decision-making.
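Because the graph is just a list of nodes and their connections, a defender can fingerprint a trusted copy and compare it with what is about to be deployed. The sketch below assumes the nodes have already been extracted into plain dictionaries (`op_type`, `inputs`, `outputs`); that representation and the function names are illustrative, not part of ONNX tooling.

```python
import hashlib
import json


def graph_fingerprint(nodes):
    """Hash a canonical JSON form of the node list (order-sensitive)."""
    canonical = json.dumps(nodes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def diff_nodes(trusted, candidate):
    """List nodes present in only one of the two graphs."""
    t = {json.dumps(n, sort_keys=True) for n in trusted}
    c = {json.dumps(n, sort_keys=True) for n in candidate}
    return {"added": sorted(c - t), "removed": sorted(t - c)}


trusted = [
    {"op_type": "Conv", "inputs": ["image"], "outputs": ["c1"]},
    {"op_type": "Relu", "inputs": ["c1"], "outputs": ["r1"]},
    {"op_type": "Gemm", "inputs": ["r1"], "outputs": ["scores"]},
]
# Hypothetical tampered copy: an extra node spliced in before the last layer.
candidate = trusted[:2] + [
    {"op_type": "Mul", "inputs": ["r1", "scale"], "outputs": ["m1"]},
    {"op_type": "Gemm", "inputs": ["m1"], "outputs": ["scores"]},
]

changes = diff_nodes(trusted, candidate)
```

Splicing in a node also rewires the node after it, so the diff reports the new `Mul` node, the rewired `Gemm`, and the original `Gemm` as removed.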

Input/Output Tampering

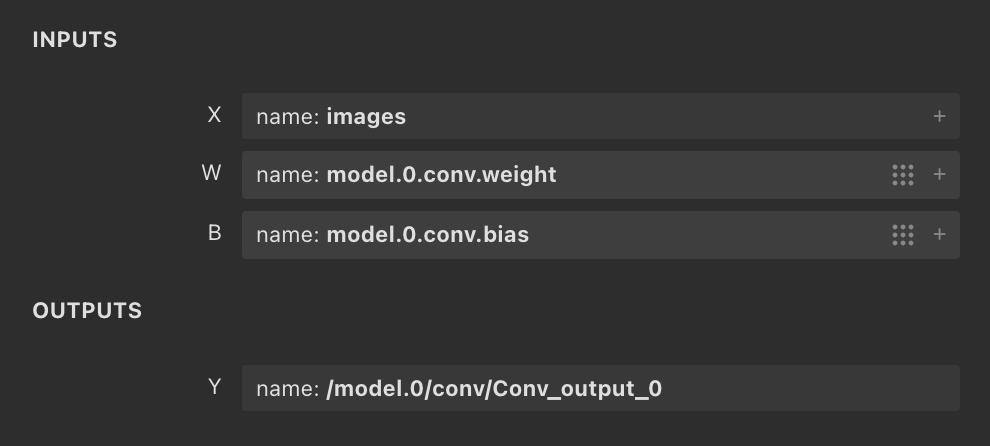

The input and output specifications of a model define the kind of data the model expects to receive and the kind of predictions or classifications it produces. Attackers may alter these specifications, forcing the model to misinterpret inputs or generate erroneous outputs.

To do this, an attacker could modify the input normalization process, causing the model to process data incorrectly and provide corrupted results. Similarly, tampering with output settings might cause the model to produce results that appear valid but are in fact wrong or manipulated.

Figure 9. ONNX model inputs and outputs
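A toy example shows why normalization parameters matter. The classifier, pixel values, and mean/std settings below are assumptions for illustration only; the point is that the same input yields a different answer once the normalization mean is shifted, with no change to the weights.

```python
def normalize(pixels, mean, std):
    """Standard per-value normalization: (x - mean) / std."""
    return [(p - mean) / std for p in pixels]


def classify(pixels, mean, std):
    """Toy rule: positive average after normalization means 'light on'."""
    normalized = normalize(pixels, mean, std)
    average = sum(normalized) / len(normalized)
    return "light on" if average > 0 else "light off"


pixels = [140, 150, 160, 150]
trusted = {"mean": 128.0, "std": 64.0}
tampered = {"mean": 170.0, "std": 64.0}   # hypothetical altered setting

print(classify(pixels, **trusted))    # light on
print(classify(pixels, **tampered))   # light off
```

Recording the expected preprocessing parameters alongside the model and checking them at load time is one way to catch this kind of change.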

Pruning Exploitation

Pruning is a technique used to simplify neural networks by removing neurons or weights that contribute little to the model’s overall predictions. This helps in reducing the model's size and computational load.

However, if abused, pruning can selectively degrade performance, especially in areas where accuracy is critical. For instance, an attacker could prune neurons related to a specific category (e.g., reducing sensitivity to specific features in a fraud detection system), causing the model to underperform when those categories are encountered.

In ONNX, pruning typically involves altering the graph definition to remove nodes and associated weights, which may be stored in the Initializer Data section.
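The selective effect of malicious pruning can be sketched with a two-class toy scorer. The weights and feature vectors are invented for illustration, and zeroing a row stands in for removing the associated nodes and initializers.

```python
def predict_label(weights, features):
    """Pick the class whose weight row gives the highest score."""
    scores = {
        label: sum(w * x for w, x in zip(row, features))
        for label, row in weights.items()
    }
    return max(scores, key=scores.get)


def prune_class(weights, label):
    """Return a copy with every weight feeding one class set to zero."""
    pruned = {k: list(v) for k, v in weights.items()}
    pruned[label] = [0.0] * len(pruned[label])
    return pruned


weights = {"legitimate": [0.9, 0.1, 0.0], "fraud": [0.1, 0.8, 0.7]}
pruned = prune_class(weights, "fraud")

normal_tx = [0.9, 0.1, 0.1]
fraud_like_tx = [0.2, 0.9, 0.8]
```

With these numbers, `normal_tx` is classified as `legitimate` by both models, while `fraud_like_tx` is flagged as `fraud` only by the unpruned one. Overall accuracy on mostly legitimate traffic would barely move, which is what makes the degradation selective.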

Impact of Altered Models on System Behavior

The consequences of tampered AI models can be profound. Some of the most critical impacts are as follows:

- Selective Misclassification: Models compromised to incorrectly identify one thing as another (e.g. red cars as fire trucks) could cause widespread errors.

- Data Leakage: A modified model might encode sensitive training data in its outputs, leading to data breaches.

- Confidence Manipulation: Attackers may increase the model’s confidence in incorrect predictions, resulting in significant misjudgments in critical systems.

These changes can often go undetected. Standard accuracy tests might not reveal the issue, and all the while the model harbors malicious modifications that remain hidden until triggered.

Security Issues Affecting Container Registries

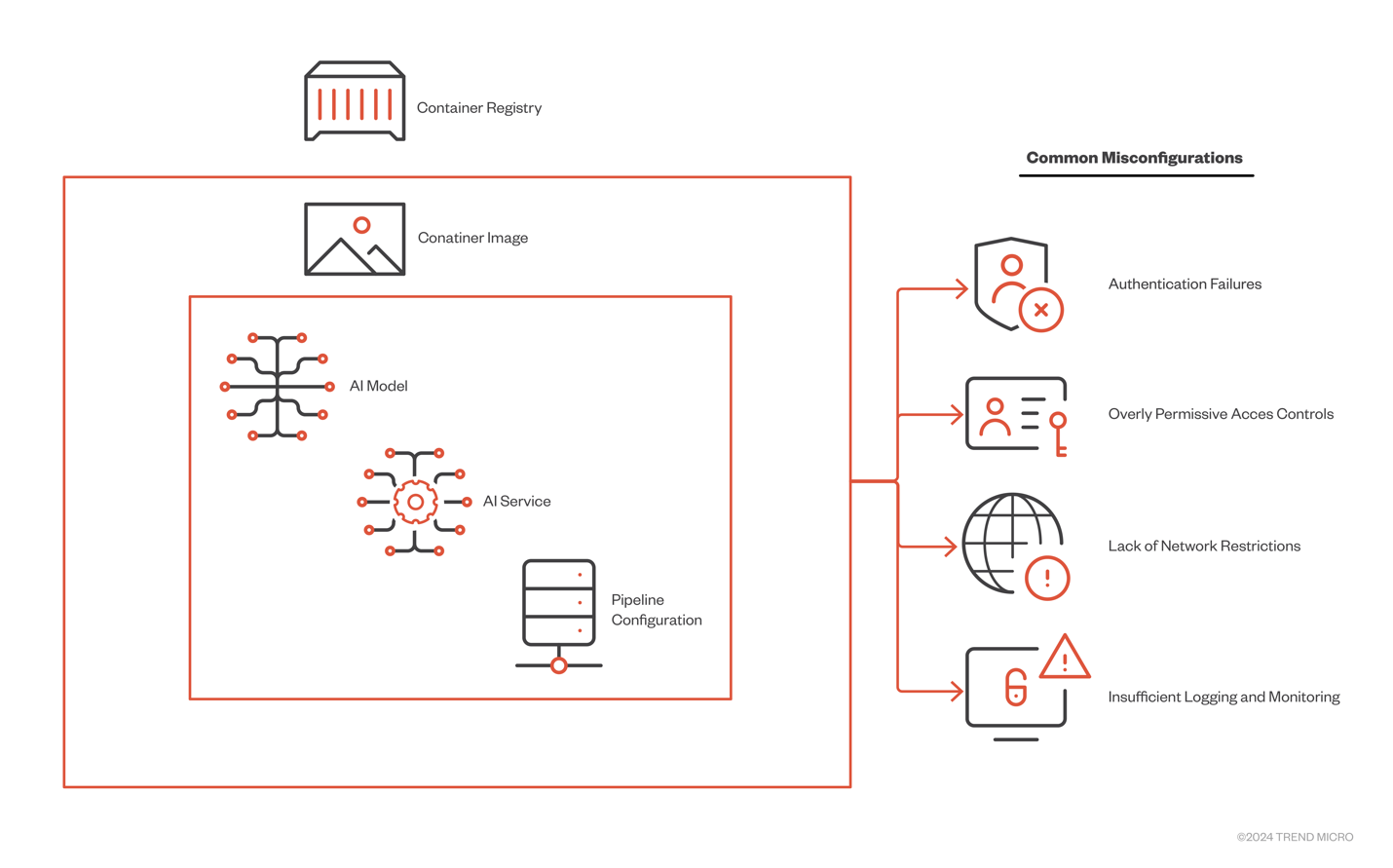

Besides vulnerabilities in the AI models themselves, it is also necessary to examine container registries which serve as central repositories for storing the images. Common misconfigurations that could compromise the whole system include the following:

- Authentication Failures
  - Use of default or weak credentials
  - Failure to implement multi-factor authentication (MFA)
  - Lack of regular access key rotation

- Overly Permissive Access Controls
  - Granting broad read/write permissions to all users
  - Absence of Role-Based Access Control (RBAC) policies
  - Lack of segregation between development and production environments

- Lack of Network Restrictions
  - Unnecessary exposure of registries to the public internet
  - Failure to implement IP whitelisting or require Virtual Private Network (VPN) access
  - Not utilizing private endpoints in cloud environments

- Insufficient Logging and Monitoring
  - Audit logs for registry access are not enabled
  - Failure to monitor for suspicious activity patterns
  - No alerts set for unauthorized access attempts

Figure 10. Common Misconfigurations in Container Registries

Proof of Concept of an Attack: Putting All the Pieces Together

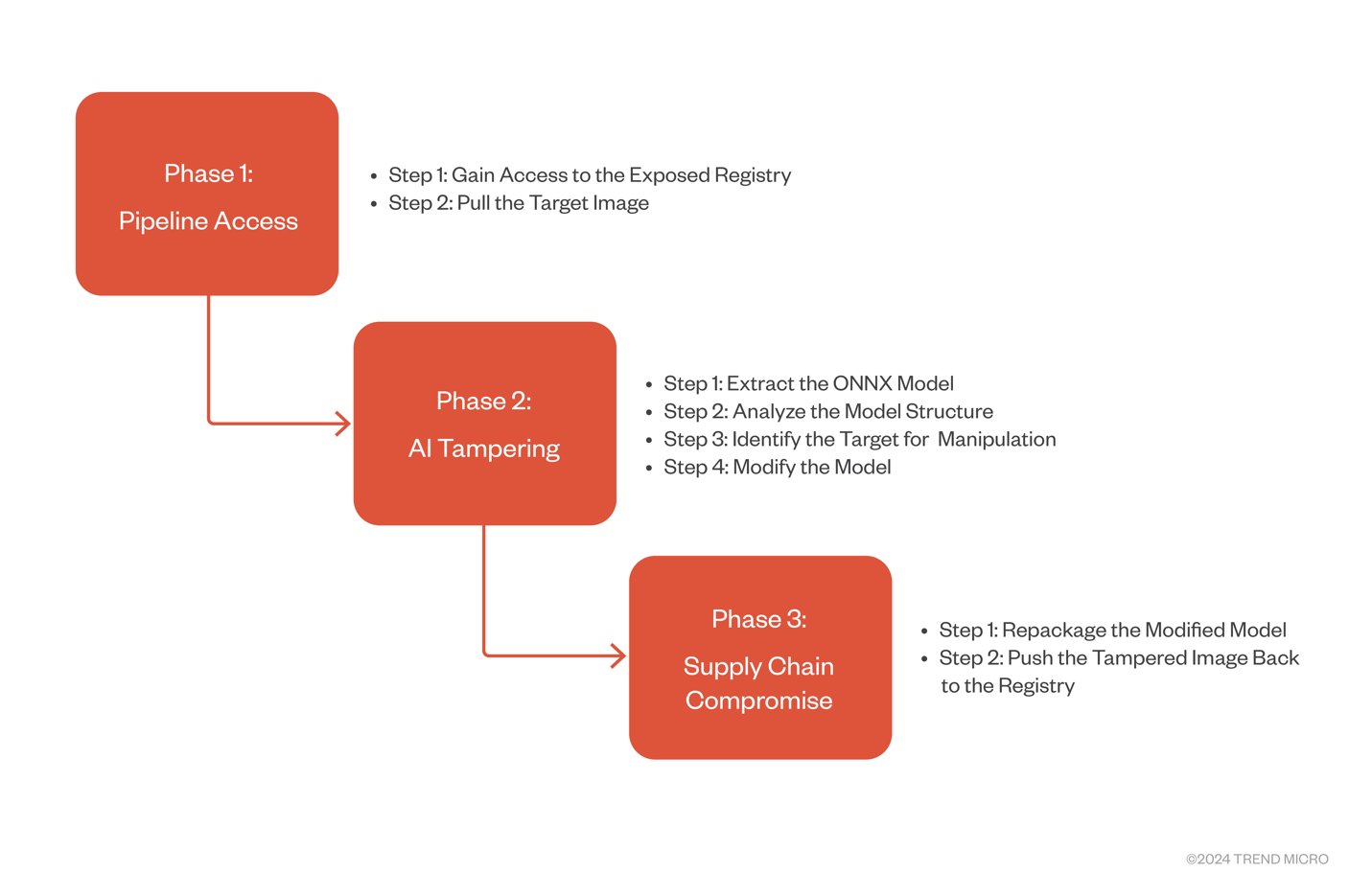

To demonstrate the actions a threat actor might take in the event of an attack, we performed a simulation in a controlled mock-up environment. The process is detailed below:

Figure 11. How an attacker can compromise exposed containers to tamper with AI models

Phase 1: Pipeline Access

Step 1: Gain Entry to the Exposed Registry

The first step involves locating the exposed container registry. If the registry is exposed without proper security controls, an attacker might discover it open to public access without authentication, much like finding an unlocked vehicle with its keys inside.

In real-world scenarios this could require reconnaissance, but for the purposes of this demonstration, we assumed that the registry has already been identified.

Step 2: Pull the Target Image

Afterwards, the attacker pulls the target Docker image. This step involves downloading the image from the container registry to the attacker’s local environment or server.

Phase 2: AI Tampering

Step 1: Extract the ONNX Model

The next step is extracting the ONNX model from the pulled image, which can be somewhat challenging depending on the image structure.
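The same step is useful to defenders who want an inventory of which of their own images ship model files. The sketch below assumes the image has been exported to a tar archive (for example with `docker save`), in which each layer is itself a tar archive nested inside the outer one. The suffix list and function name are assumptions, and layers are read fully into memory, which is only reasonable for small images.

```python
import io
import tarfile

# Assumed set of model file extensions; extend as needed.
MODEL_SUFFIXES = (".onnx", ".pt", ".pth", ".pb", ".h5", ".tflite", ".safetensors")


def find_model_files(image_tar: bytes) -> list[str]:
    """List model-like files found in the layers of an exported image."""
    found = []
    with tarfile.open(fileobj=io.BytesIO(image_tar)) as outer:
        for member in outer.getmembers():
            if not member.isfile():
                continue
            blob = outer.extractfile(member).read()
            try:
                layer = tarfile.open(fileobj=io.BytesIO(blob))
            except tarfile.TarError:
                continue  # not a layer archive (e.g. a JSON manifest)
            with layer:
                for entry in layer.getmembers():
                    if entry.isfile() and entry.name.lower().endswith(MODEL_SUFFIXES):
                        found.append(entry.name)
    return sorted(found)
```

How deep a model sits in the layer stack, and whether it is bundled inside another archive, is what makes this step more or less difficult in practice.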

Step 2: Analyze the Model Structure

Once the ONNX model has been extracted, the attacker analyzes its structure to determine the key components and examine how they could be manipulated.

Step 3: Identify the Target for Manipulation

From the selected components, the attacker then identifies specific layers or sets of weights that could be modified to alter the model's behavior.

Step 4: Modify the Model

The attacker then modifies the model's parameters to influence its predictions or outputs. This step involves subtle changes, similar to adjusting a recipe by adding condiments bit by bit. At this point, the model appears to function normally but will behave differently in key situations.

Phase 3: Supply Chain Compromise

Step 1: Repackage the Modified Model

Once the model has been altered, the next step is to repackage it into a tampered container image.

Step 2: Push the Tampered Image Back to the Registry

The attacker then pushes the modified container image back to the exposed registry. The tampered image is now a ticking time bomb waiting to be pulled and deployed by an unsuspecting system.

Real World Scenario

We scanned the entire public IPv4 address space and found 8,817 private container registries exposed without any authentication. Roughly 70% of them (6,259) allowed a modified image to be pushed back to the registry.

To test whether those registries allowed push, we only analyzed the HTTP responses to different request methods rather than pushing any images.

These numbers are comparable to those reported by the independent researcher, who actually pushed harmless container images as a form of probing. According to their research, they found 4,569 open registries and pushed into 3,719 of them (figures updated as of this writing).

Figure 12. Number of unauthenticated exposed private registries in a historical context (source: Shodan data)

Detection and Prevention Strategies

In a real-world scenario, attacks like those shown in our simulation might go undetected for a significant period. The tampered model could behave normally under typical conditions, only displaying its malicious alterations when triggered by specific inputs. This makes the attack particularly dangerous, as it could bypass basic testing and security checks.

Moreover, this entire process could be automated. Tools can be designed to continuously scan for exposed registries, pull images, modify models, and push them back—all without requiring direct human involvement.

Armed with the knowledge of how attackers can exploit vulnerabilities in AI models, it is essential to understand how to safeguard these systems. Some of the ways to do this are as follows:

Secure Configuration of Container Registries

Proper configuration of container registries is half the battle when it comes to securing these systems. Here are some best practices for this:

- Enable registry authentication from the beginning, even in test environments.

- Do not hard-code secrets within files inside container images.

- Do not store the Dockerfile or docker-compose.yml inside the image. These files could be used by attackers to gain information about the environment’s internal structure.

- Use vaults to store secrets and their references.

- When using environmental variables for secrets, do not store them within Dockerfiles or .env files. Instead, inject them at the container runtime.

- Make sure your private container registries are not accessible to the public by implementing network restrictions.

- Encrypt container images at rest.

- Enable audit logging.
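For the advice on injecting secrets at container runtime, a minimal sketch of the application side might look like this. The variable name `REGISTRY_TOKEN`, the exception class, and the helper are hypothetical; the value would be supplied when the container starts (for example through `docker run -e` or an orchestrator's secret mechanism) rather than baked into the image.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret was not injected at startup."""


def load_secret(name: str, environ=os.environ) -> str:
    """Read a secret from the runtime environment and fail fast if absent."""
    value = environ.get(name, "")
    if not value:
        raise MissingSecretError(
            f"{name} is not set; inject it when the container starts"
        )
    return value


# Example call inside the service (name is an assumption):
# token = load_secret("REGISTRY_TOKEN")
```

Failing at startup when the secret is missing avoids the temptation to fall back to a default value stored in the image.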

Implementation of Model Integrity Checks

Ensuring that AI models have not been tampered with is critical to maintaining system security. The following integrity checks can help safeguard AI models:

- Cryptographic Signing: Get the AI models cryptographically signed, providing a verifiable method of guaranteeing their authenticity.

- Checksum Verification: Use checksum verification to compare the model's current state with its original form, ensuring that no unauthorized modifications have been made.

- Secure Model Storage: Store AI models in a secure, encrypted environment separate from the container images, reducing the risk of unauthorized access.

- Version Control for Models: Maintain a comprehensive version control system for AI models, allowing for rollback in case any issues arise.
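The checksum and signing ideas above can be sketched with the standard library. Note the substitution: a real deployment would normally use an asymmetric digital signature so that verifiers never hold the signing key, whereas this sketch uses an HMAC with a shared key as a simple stand-in. The function names and key handling are assumptions.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Checksum of the model bytes as recorded at release time."""
    return hashlib.sha256(data).hexdigest()


def sign_digest(digest: str, key: bytes) -> str:
    """HMAC tag over the recorded digest (stand-in for a real signature)."""
    return hmac.new(key, digest.encode("ascii"), hashlib.sha256).hexdigest()


def verify_model(data: bytes, expected_digest: str, tag: str, key: bytes) -> bool:
    """Check that the digest record is authentic and matches the model bytes."""
    tag_ok = hmac.compare_digest(sign_digest(expected_digest, key), tag)
    digest_ok = hmac.compare_digest(sha256_digest(data), expected_digest)
    return tag_ok and digest_ok


# At release time (trusted environment):
key = b"demo-key-from-a-vault"          # placeholder; never hard-code real keys
model_bytes = b"serialized model bytes"
digest = sha256_digest(model_bytes)
tag = sign_digest(digest, key)

# At load time (before the model is served):
assert verify_model(model_bytes, digest, tag, key)
```

The digest and tag must be stored somewhere the registry's write permission does not reach; otherwise an attacker who can replace the image can replace the checksum too.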

Runtime Anomaly Detection for AI Models

Once deployed, AI models should be continuously monitored for any anomalies that might indicate tampering or malfunction. Here are some key monitoring techniques:

- Input/Output Distribution Monitoring: Track the distribution of inputs and outputs to detect any unusual patterns. If outputs become inconsistent with expected behavior, it could indicate tampering.

- Performance Metric Tracking: Monitor key performance metrics such as accuracy, latency, and resource consumption. Unexpected changes in these metrics could be a sign of compromise.

- Confidence Score Analysis: Regularly review the confidence scores of model predictions. Abnormally high confidence for incorrect outputs can signal tampering.

- Periodic Retraining and Comparison: Routinely retrain models on trusted data and compare their performance with the deployed versions. This allows for early detection of issues.
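Output distribution monitoring can be as simple as comparing recent predictions with a trusted baseline. The sketch below uses total variation distance between class frequencies; the metric, the 0.2 threshold, and the function names are assumptions that would need tuning for a real system.

```python
from collections import Counter


def class_distribution(labels):
    """Relative frequency of each predicted label."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: n / total for label, n in counts.items()}


def total_variation(baseline, observed):
    """Half the sum of absolute differences between two distributions."""
    labels = set(baseline) | set(observed)
    return 0.5 * sum(
        abs(baseline.get(l, 0.0) - observed.get(l, 0.0)) for l in labels
    )


def drift_alert(baseline_labels, recent_labels, threshold=0.2):
    """Return (alert flag, distance) for a window of recent predictions."""
    distance = total_variation(
        class_distribution(baseline_labels), class_distribution(recent_labels)
    )
    return distance > threshold, distance


baseline = ["ok"] * 95 + ["violation"] * 5
recent = ["ok"] * 60 + ["violation"] * 40   # sudden surge in violations
alert, distance = drift_alert(baseline, recent)
```

In the traffic scenario from the introduction, a jump from 5% to 40% flagged violations gives a distance of about 0.35 and would raise an alert well before tickets reach drivers.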

Securing the AI-Driven Future

As we explored facets of AI security, it has become clear that securing AI systems is not merely a technical challenge but a fundamental requirement. In the course of our examination of methods of compromise and the corresponding security recommendations, several insights about the highlighted technology have also emerged:

- Vulnerabilities should not be overlooked or downplayed: The Smart City incident underscored how even a simple misconfiguration can have serious consequences.

- The attack surface is expanding: The range of threats to AI systems is vast, including data poisoning, model theft, adversarial attacks, and compromised pipelines. Securing these systems requires addressing vulnerabilities across every stage of the AI lifecycle.

- There is a balance between security and innovation: As AI capabilities grow, security measures must keep pace. Innovation should not come at the expense of robust security practices.

- Interdisciplinary collaboration is essential: Securing AI systems demands expertise not only in AI and cybersecurity but also in areas such as cryptography, privacy regulations, and ethics. Collaborative solutions across these disciplines are necessary.

The lessons from the Smart City incident serve both as a warning and a call to action. They illustrate the stakes involved in AI security and the potential consequences of failure. On the other hand, they also highlight the opportunities for building AI systems that enhance our lives while protecting our privacy and security.

As we continue to advance in an AI-driven world, the decisions we make today will shape the security landscape of tomorrow. By understanding the risks, implementing strong defenses, and fostering a culture of security-first innovation, we can build and maintain AI systems that are not only powerful but also trustworthy and secure.
