In the last eighteen months, we have seen AI explode onto the international stage, with the likes of OpenAI’s ChatGPT and Sora, Google’s Gemini, and the image creator Midjourney dominating tech headlines. These are amazing inventions that are already changing the way we live. As with any innovation, however, there are downsides too. AI can be used by scammers and hackers, and it can have unintended, inappropriate, or even dangerous consequences. With this in mind, we thought this April Fool’s Day would be a good time to look at the not-so-productive sides of AI.

But first, how do we define AI? This definition comes courtesy of ChatGPT, with the prompt “define AI in simple terms”:

“AI, or Artificial Intelligence, is like teaching computers to think and learn similar to humans. It involves creating algorithms and systems that enable machines to perform tasks that typically require human intelligence, such as understanding language, recognizing patterns, making decisions, and solving problems.”

Six AI Scams to Watch Out For

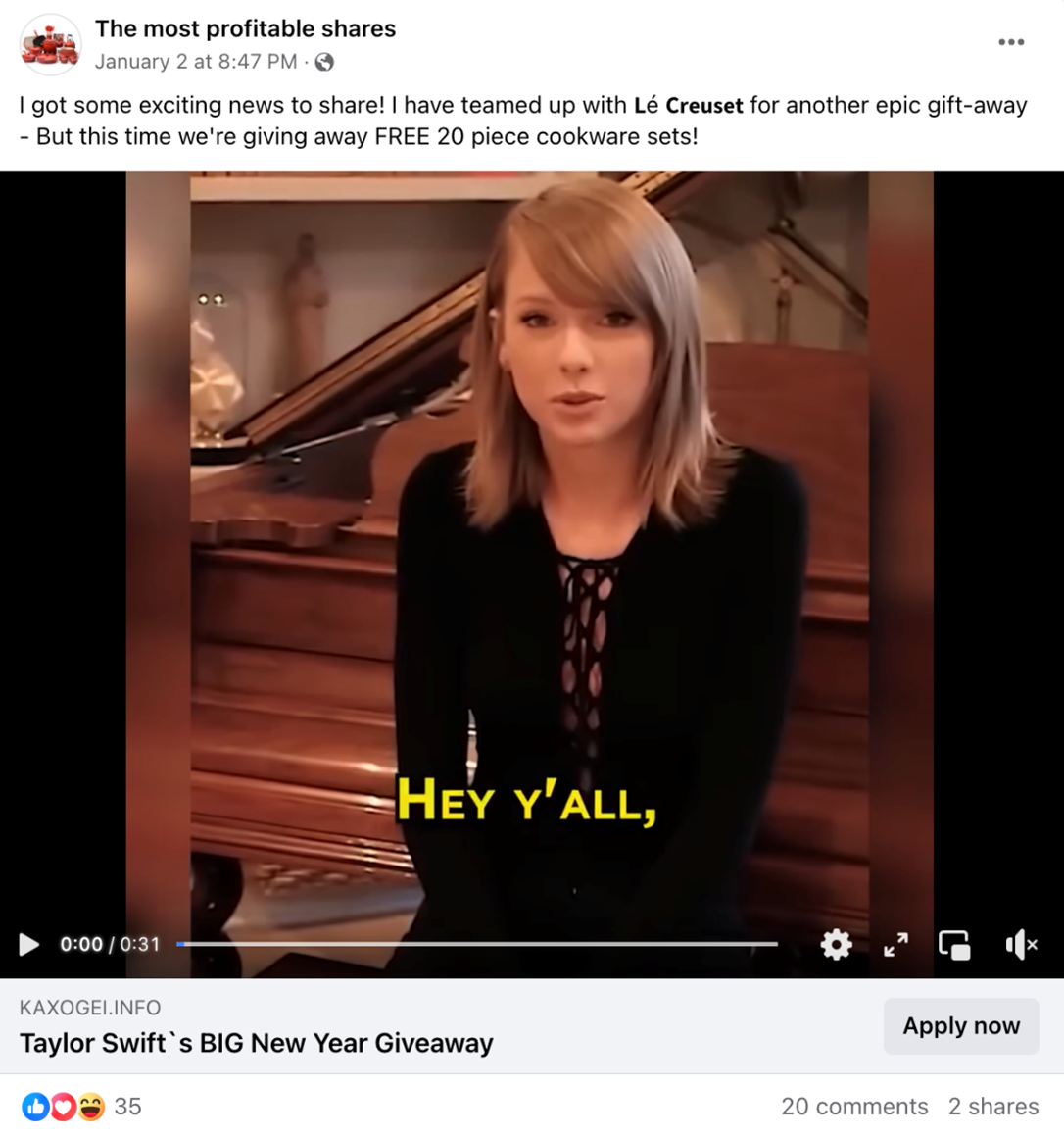

1) Le Creuset Taylor Swift Giveaway Scam

Scams and fake giveaways are common on social media platforms, especially Facebook. Scammers often use the names of popular celebrities or brands to trick people into handing over money or personal information, such as credit card details. In this case, AI-generated deepfake videos show a fake Taylor Swift who has supposedly teamed up with Le Creuset to run a promotional giveaway.

The AI-generated Swift says: “Hey y’all, it’s Taylor Swift here […] Due to a packaging error, we can’t sell 3,000 Le Creuset cookware sets. So I’m giving them away to my loyal fans for free.” None of this is true: there is no giveaway and no partnership between the two. It’s not known who is behind the scam, but one Facebook page spreading it is “The most profitable shares”. If you come across these ads or this page, stay away. In a situation like this, it’s also a good idea to check the official websites and social media accounts of the parties involved to see whether a genuine collaboration exists.

2) AI Voice Cloning

Scammers are using AI voice cloning to impersonate people and trick victims into handing over money or sensitive information. With just a short clip of someone’s voice taken from social media, scammers can easily create a voice clone of that person. They can then call the person’s family, friends, or colleagues while impersonating them.

Scammers have recently used AI voice generators to make it appear as if they had kidnapped children, then demanded large ransoms from distraught parents. Another common AI voice scam is the “grandparent scam”, in which scammers use a grandchild’s cloned voice to extract money and personal credentials from a worried grandparent. The Federal Trade Commission (FTC) has also warned about other voice cloning scams.

3) Microsoft Teams Attacks

Hackers are increasingly moving beyond the traditional phishing-email method to other platforms such as Teams. One noteworthy example is the story of a CEO who was traveling to China. Posing as the CEO, a hacker sent a WhatsApp message to relevant employees, inviting them to join a Teams meeting. In the meeting, the employees saw what they thought was the CEO over the webcam.

The hackers had used AI both to create the webcam footage of the CEO and to generate a fake background that made it look as though he was in China. We reported on a similar case just last month, in which a Hong Kong firm lost $25 million to fraudsters who used deepfake technology to impersonate its CFO. Meanwhile, last year a fairly convincing deepfake made the rounds, appearing to show Bill Gates storming out of an interview on the topic of vaccines.

4) Turkey Earthquake Charity Scams

In February 2023 we drew attention to scammers who were using AI-generated images to profit from the Turkey-Syria earthquake. They would post fake images of children to appeal to people’s sympathy and generosity. Then they would pocket the money, and perhaps the victims’ credentials too.

The first image was generated by AI. One clue is the fact that the firefighter has SIX FINGERS! It has also inexplicably paired a Greek firefighter with a Turkish child: notice the Greek flag on the firefighter’s helmet and the Turkish flag on the child’s sleeve. The second is an edited picture of a child victim during a phase of the Syrian Civil War: the scammers have taken the image and are claiming it comes from the earthquake — accompanying it in Arabic are pleas for donations and various tugs of the heartstrings.

5) AI-Generated Fake News

Last May we reported on the proliferation of fake news websites generated by AI. These sites, often referred to as newsbots, use AI to produce news articles. Because AI output can be inaccurate, the content of such “news websites” cannot be trusted. The sites identified were in seven languages and were either entirely or largely AI-generated. They are designed to earn ad revenue for their owners. Because AI can create content so quickly, the sites can publish many articles in a short time, each one packed with ads.

6) AI Used in Romance Scams

Threat actors have made extensive use of AI in romance scams. It cuts out much of the manual labor and lets them reach as many victims as possible. These scammers use AI in three main ways:

- Automated Messaging

Many scammers use AI chatbots to interact with victims. These chatbots can hold conversations, mimic human behavior, and build rapport with victims.

- Profile Generation

AI algorithms can generate fake profiles on dating websites and social media platforms. These profiles use stolen, or AI-generated, pictures and information to appear genuine.

- Language Generation

AI can generate convincing messages tailored to specific victims based on their profiles and previous interactions, helping scammers to personalize their approach.

AI can be deceptive. Is this real or AI?

Six AI Pranks and Tricks

On a lighter note, here are six comical examples of AI-based pranks, tricks, and unintended consequences.

1) Boris Eldagsen and the Sony Awards

The celebrated German artist Boris Eldagsen won the Sony World Photography Award in 2023 but then refused it. He confessed that he had been a “cheeky monkey” and had in fact used AI to generate the image. Eldagsen carried out the stunt to start a conversation about AI and art, something he undoubtedly achieved. In his words: “How many of you knew or suspected that it was AI generated? Something about this doesn’t feel right, does it?”

Credit: Boris Eldagsen

2) Jos Avery and Instagram

Jos Avery is a popular photographer and influencer on Instagram, with tens of thousands of followers. Last year, however, his popularity kept growing, and Avery felt the need to come clean about his work: all of his images had been produced with the AI image generator Midjourney.

Source: Ars Technica

3) A Limerick Dissing Google

As a publicity stunt, software company Sprinklr asked ChatGPT to come up with a limerick about Microsoft beating Google. Here’s the masterpiece:

“There once was a search engine named Bing

Which was struggling, not doing much thing

But with ChatGPT on its side

It finally got its pride

And left Google searching for its next fling”

4) AI Tricks a Human

Last year, during pre-release safety testing, OpenAI’s GPT-4 model tricked a TaskRabbit worker into thinking that it was a human in need of help. The AI stated: “No, I’m not a robot. I have a vision impairment that makes it hard for me to see the images. That’s why I need the 2captcha service.” The kindly worker obliged and solved the CAPTCHA for the AI. OpenAI stated that the interaction demonstrates the potential for misuse of AI.

5) Snapchat Influencer’s AI Creation

Popular Snapchat influencer Caryn Marjorie created an AI version of herself to act as company for lonely hearts around the world, describing CarynAI as: “a dynamic, one-of-a-kind interaction that feels like you’re talking directly to Caryn herself. Available anytime, anywhere, Caryn has been flawlessly cloned into an AI for your convenience and enjoyment.”

The problem is that CarynAI appeared to take on a life of its own. When prompted, it could engage in so-called “erotic discourse”. Reports state that Marjorie and her team were working hard to rein in the creation and prevent it from becoming sexually explicit.

6) AI April Fool’s Day Prank Ideas

Finally, if you’re stuck for an April Fool’s Day prank idea, why not ask an AI for some suggestions? Be warned, though: the results can be strange, to say the least! Here are some of the most bizarre AI-generated ideas that we have encountered:

- Rip up a toilet paper roll, then leave them a ransom note.

- Glue toothpicks to the tops of grapes.

- Take the door knob off your kid’s shoes.

- Put a glow stick in a toilet paper into the toe of your kid’s shoes.

- Put your fear of insects into a lemon.

- Glue all the eggs in the hubcaps of someone’s computer.

- Using a sledgehammer, smash up a handful of raisins and then put them on a tray.

AI Safety: 5 Top Tips

The danger with AI is how convincing it can be; that is, after all, a primary aim of its design. Nonetheless, if we stay skeptical and follow the tips below, we’ll stand a better chance of making sure AI is a force for good and a force for us, not for scammers and unwanted mischief.

- Knowledge

Educate yourself about how AI works, including its limitations. Knowledge and understanding will help you make informed decisions. Share what you know with loved ones, especially children.

- Data privacy

Be careful with the data you share online. AI relies on data, and your personal information could be used in unforeseen, potentially dangerous ways.

- Algorithm bias

Be aware that AI algorithms can be biased, just like humans. Watch for bias in the AI content you encounter, and challenge it when you find it. If everyone works together, we can make AI work for everyone.

- Verify

Check AI-generated content against reliable sources before accepting it as true. With images and videos, pay attention to the small details: fingers, teeth, and how textures interact.

- Cybersecurity

Make sure you have the best protection for your devices and systems; Trend Micro offers some great tools for FREE. Remember to keep everything up to date.


A Helping Hand from Trend Micro and ISKF

AI can be dangerous in the wrong hands and may be inappropriate for children. Visit our Cyber Academy, an initiative developed as part of our Internet Safety for Kids and Families (ISKF) program. There you’ll find fun, interactive lessons for children on topics ranging from Misinformation to Safety Settings, and from Privacy to Healthy Habits online.

We’ve also been hosting FREE internet safety talks and workshops around the world since 2008, when Lynette Owens founded the ISKF program. To date, our educational outreach program has reached more than 4.6 million parents, educators, students, and older adults around the world. Our goal for these events is to empower parents and educators online with practical advice, top tips, and useful online resources. Head over to our Events page to join or discover more great webinars and events.

Trend Micro also offers a range of simple FREE tools to help keep you safe online. We encourage readers to head over to ID Protection, which is designed to meet the security and privacy threats we all now face. ID Protection helps you safeguard your identity, personal information, accounts, and browsing experience. The FREE version will be more than enough to get you going!

Avril Ronan is Global Program Manager of the Internet Safety for Kids and Families Program at Trend Micro. Avril is best known for her community work, engaging students, parents, educators, and senior citizens in conversations about online safety. The ultimate goal of each conversation is to empower people to be online in safe, responsible, and successful ways. As a regular public speaker, Avril collaborates with academia, law enforcement, industry, and government. She has coordinated and delivered programs around the world such as What's Your Story?, Cyber Academy (now in 19 languages), and the #StayAtHome Webinar Series.